
This research note is restricted to the personal use of yolanda.robles@inegi.org.mx

G00260996

Hype Cycle for the Telecommunications Industry, 2014

Published: 4 August 2014

Analyst(s): Kamlesh Bhatia

CSPs' future existence will depend on their ability to deliver individual experiences over an industrial-scale infrastructure. This Hype Cycle examines the key systems, processes and platforms that will help CSPs stay ahead of their competitors and remain relevant to their consumers.

Table of Contents

Analysis
What You Need to Know
The Hype Cycle
The Priority Matrix
Off the Hype Cycle
On the Rise
Cognizant Computing
IoT Platform
Open-Source Telecom Operations Management Systems
5G
DevOps
At the Peak
In-Memory Computing
Subscription Billing
Business Capability Modeling
Managed Mobility Services
Open APIs in CSPs' Infrastructure
IT/OT Integration
Communications Service Providers as Cloud Service Brokerages
Heterogeneous Networks
Hybrid Mobile Development


Social Network Analysis
Capacity-Planning and Management Tools
Context-Enriched Services
CSP Network Intelligence
Sliding Into the Trough
4G Standard
Big Data
Network Function Virtualization
Mobile Self-Organizing Networks
Voice Over LTE
Cloud-Based RAN
Hybrid Cloud Computing
Software-Defined Networks
Innovation Management
Mobile QoS for LTE
Mobile Unified Communications
Telecom Analytics
Real-Time Infrastructure
Machine-to-Machine Communication Services
Next-Generation Service Delivery Platforms
Retail Mobile Payments
Mobile Subscriber Data Management
Service-Oriented Architecture in OSS/BSS and SDP
Location-Based Advertising/Location-Based Marketing
Climbing the Slope
Content Integration
Infrastructure as a Service (IaaS)
Mobile CDN
Rich Communication Suite
Mobile Advertising
Enterprise Architecture
IP Service Assurance
Entering the Plateau
Mobile DPI
Appendixes
Hype Cycle Phases, Benefit Ratings and Maturity Levels


Gartner, Inc. | G00260996


Gartner Recommended Reading........................................................................................................ 100

List of Tables

Table 1. Hype Cycle Phases.................................................................................................................99

Table 2. Benefit Ratings........................................................................................................................99

Table 3. Maturity Levels......................................................................................................................100

List of Figures

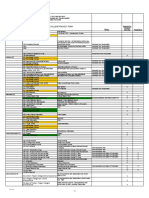

Figure 1. Hype Cycle for the Telecommunications Industry, 2014........................................................... 6

Figure 2. Priority Matrix for the Telecommunications Industry, 2014........................................................8

Figure 3. Hype Cycle for the Telecommunications Industry, 2013......................................................... 98

Analysis

What You Need to Know

Communications service providers (CSPs) find themselves at a crossroads between being efficient connectivity providers and enabling new revenue opportunities through innovation and collaboration. The change is largely driven by users gravitating toward convergence, content and a better user experience, often sourced as digital services from Internet-based or over-the-top (OTT) providers.

The migration to digital services is a fundamental shift and is having a transformational impact on CSP business and operating models. CSPs must prepare to face this challenge at all levels, starting with a forward-looking view of technology and its influence on market developments.

Use of digital technology among individual and enterprise consumers is becoming seamless, driving greater expectations for customer experience and for servicing client needs in real time. Access to new forms of information, in conjunction with technology (devices, social platforms and so on), influences customers' buying behavior, both their own and that of others, through network and social media interactions.

Digitization is also starting to blur traditional organizational boundaries between CSP IT and the network, creating internal struggles over the investment road map, ownership and skills. To achieve expected business outcomes, CSPs must create a more responsive organization powered by greater use of data, analytics and platforms, and by simplified user and partner interfaces. This will help underpin the CSP role as an enabler for new growth areas like machine-to-machine (M2M) communication and the Internet of Things (IoT), which demand seamless integration of network and IT in a business context.


The broader focus of the 2014 Hype Cycle is to highlight technologies that contribute to trends Gartner is observing in the market (see "Market Trends: Worldwide, Top Five Disruptive Trends for CSPs, 2014-2019").

This Hype Cycle is primarily intended for CSP CTOs who want to assess emerging technologies, their relative maturity and market adoption for use in solutions aimed at improving the end-user experience, creating competitive differentiation and monetizing new opportunities. The technology profiles in this Hype Cycle may also interest CIOs, CMOs and CFOs in CSP organizations who want to evaluate their implications across the organization.

The Hype Cycle

This year's Hype Cycle includes technologies that will enable CSPs to become providers of experience-led innovation and advanced technology solutions. The technologies included are key topics of discussion in the CSP arena and form the "building blocks" for CSPs to create new delivery and monetization capabilities. There have been no methodological changes to the selection process for technologies. Additions and deletions to the technology profiles are noted below.

Digitization will affect all parts of the CSP operational and business environment, including established processes for product and customer management and operations. The traditional model of delivering capabilities (process, applications and architecture) mapped to individual services will not be sustainable, and CSPs will have to adopt a more scalable, industrialized approach to supporting digital services.

As CSPs grapple with the technical challenges of building their own "digital technology factory," they must also deal with the paradoxical trend of personalization: end users demanding a customized experience from marketing through product support. The ability to deliver an individual experience over an industrial IT infrastructure will form the basis for CSPs' future existence.

To strike the right balance, CSPs must focus on:

Customer centricity. Retool internal processes to align them more closely with customer needs. Leverage customer data and analytics to deliver a consistent experience across channels and services.

Product leadership. Focus on reusable technology and process assets that allow CSPs not only to "succeed fast," but also to "fail fast" and "fail inexpensively." Reduce time to market for new ideas and leverage partners to co-innovate.

Operational excellence. Deliver seamless service in terms of coverage and quality, with the right levels of security and compliance for the business.

Cost leadership. Improve productivity by supporting self-service, automation and business process improvement. Leverage scalable infrastructure solutions that improve expense ratios and drive standardization.

The technology profiles in this Hype Cycle contribute to these goals. They also demonstrate the influence of the Nexus of Forces (cloud, mobility, social and information) on CSPs' IT architecture, and highlight technologies that can enable new business opportunities (see "How the Nexus of Forces Deeply Transforms Communications Service Providers' Strategy").

Several technologies on the Hype Cycle are new entrants this year, starting at the Innovation Trigger. Technologies such as cognizant computing, IoT platforms and DevOps are better suited to Type A organizations (aggressive technology adopters) and offer an early-bird advantage to CSPs seeking opportunities in new, related spaces.

Technologies like subscription billing, open APIs and IT/OT integration are nearing the peak of the Hype Cycle, indicating extensive interest from the media, the vendor community and technology evangelists, who highlight their potential benefits. Some CSPs are making early investments in these technologies as products and solutions mature and evidence of potential benefit emerges. Approaches like smart city frameworks allow CSPs to engage with local government stakeholders and be part of new, developing ecosystems early in the planning cycle.

Technology profiles in the Trough of Disillusionment are past the peak and are seeing gradual uptake, mostly by Type B and C organizations: CSPs that want to adopt new technology but are generally risk-averse or have smaller budgets. Technologies like 4G, HetNets, analytics to tap network intelligence, M2M services and the CSP role as a service broker are now well-understood, with some examples of successful outcomes. We expect these to see sustained investment over the next few years. Software-defined networking (SDN) and network function virtualization (NFV) will transition from field trials to implementations as standards evolve.

Many technologies on the Slope of Enlightenment are now considered hygiene factors for CSPs building effective products and operations. The use of service-oriented architecture (SOA) and reusable components is at the heart of all new architectures and packaged product offerings for CSPs. Likewise, the ability to offer location-based services and better content and infrastructure management capabilities is the basis for new opportunities with enterprise clients.

This Hype Cycle complements other Hype Cycles offering technology insight into specific areas of CSP strategy and operations; see "Hype Cycle for Communications Service Provider Infrastructure, 2014," "Hype Cycle for Communications Service Provider Operations, 2014," "Hype Cycle for Wireless Networking Infrastructure, 2014" and "Hype Cycle for Communications Service Provider Digital Services Enablement."


Figure 1. Hype Cycle for the Telecommunications Industry, 2014

[Chart, as of August 2014: expectations plotted over time, with this year's technology profiles positioned across the Innovation Trigger, Peak of Inflated Expectations, Trough of Disillusionment, Slope of Enlightenment and Plateau of Productivity. Legend: plateau will be reached in less than 2 years, 2 to 5 years, 5 to 10 years, more than 10 years, or obsolete before plateau.]

Source: Gartner (August 2014)


The Priority Matrix

CSPs' future success rests on their ability to formulate operational and business strategies that put more control in the hands of consumers to create their own experiences. This involves finding new ways to extract more information about consumers and their service habits, becoming agile and flexible in product development, collaborating with the broader ecosystem and being responsive to change.

In this section, we highlight several profiles that in our view are transformational or have a high benefit rating. These technologies and underlying processes strongly affect CSPs' ability to create differentiation through a superior user experience and to target new areas of growth.

Context-enriched services and network function virtualization (NFV) are two profiles that Gartner believes should be observed closely and that will play out in the next three to five years. Context-enriched services allow CSPs to leverage contextual elements about their customers' services and situations to better target new opportunities and improve real-time response. The underlying architectural constructs involving services and APIs allow context enrichment of business applications, platforms and support systems, enabling CSPs to support advanced use cases for marketing and customer-facing projects (often involving other big data initiatives).

NFV, on the other hand, will allow CSPs to achieve higher efficiency by incorporating the IT concepts of virtualization and elastic provisioning in a cloud environment. The success of such initiatives often lies in seamless integration of CSPs' IT and OT environments, something we highlight as having high benefit for CSPs over the next five years and beyond.

M2M, IoT and real-time capabilities present an opportunity for CSPs to layer enhanced value over connectivity and capture a higher share of enterprise and consumer spending in these areas. To compete with other emerging players, CSPs must make sustained investments to extend their operational capabilities closer to the end user at the edge of the network and offer services that enhance value in a business context.

Platforms that leverage the power of analytics and real-time infrastructure are required, alongside engagement with business stakeholders, to develop technology and market road maps for future growth. We expect "smart initiatives" to become part of the agenda for most enterprises, as well as government and urban planning committees, over the next five years, translating into multiple opportunities for players offering connectivity, IT infrastructure and associated services.

Other notable technologies that will make an impact are cognizant computing, which features interplay between smart devices (such as wearables), software and contextual information, and DevOps, for improved collaboration between operations and development teams.


Figure 2. Priority Matrix for the Telecommunications Industry, 2014

[Matrix, as of August 2014: benefit rating (transformational, high, moderate, low) plotted against years to mainstream adoption (less than 2 years, 2 to 5 years, 5 to 10 years, more than 10 years).]

Source: Gartner (August 2014)

Off the Hype Cycle

A number of profiles have been removed from this year's Hype Cycle for the Telecommunications Industry, and new ones added, to sharpen the focus for CSPs evolving as providers of experience-led innovation and advanced technology solutions. Additionally, some profiles have been renamed to better reflect their coverage and align with market nomenclature; for example, cross-channel analytics has been changed to customer journey analytics, and network intelligence to CSP network intelligence.

The profiles being removed are: behavioral economics, big data, bring-your-own-device services (consumer devices), browser client OS, business impact analysis, cloud computing, cloud management platforms, cloud master data management (MDM) hub services, cloud UC (UCaaS), cloud/Web platforms, context delivery architecture, convergent communications advertising platforms (CCAPs), telecom end-user experience monitoring, hybrid mobile development, MDM, mobile cloud, mobile data protection, mobile device management, mobile social networks, mobile virtual worlds, OneAPI (telecom), OpenFlow, open-source communications, open-source virtualization platforms, operations support system (OSS)/business support system (BSS) customer experience management, personal cloud, social IT management, SaaS, Web analytics, Web experience analytics, Web real-time communications, and Web-oriented architecture.

New profiles being added are: cognizant computing, IoT platforms, open-source telecom operations management systems, 5G, DevOps, in-memory computing, subscription billing, business capability modeling, managed mobility services, open APIs in CSPs' infrastructure, IT/OT integration, heterogeneous networks, capacity-planning and management tools, CSP network intelligence, 4G standards, mobile self-organizing networks, voice over LTE, cloud-based radio access network (RAN), hybrid cloud computing, software-defined networks, innovation management, mobile QoS for LTE, telecom analytics, next-generation service delivery platforms, retail mobile payments, mobile subscriber data management, location-based advertising/location-based marketing, application security as a service, mobile advertising, enterprise architecture, IP service assurance, and mobile DPI.

On the Rise

Cognizant Computing

Analysis By: Jessica Ekholm; Brian Blau

Definition: Cognizant computing is the next step in the evolution of personal cloud. It uses big data and simple rule sets to increase personal and commercial information about a consumer through four stages: "Sync Me," "See Me," "Know Me" and "Be Me."

Position and Adoption Speed Justification: In the next few years, cognizant computing and smart machines will become two of the strongest forces in consumer and business IT. Any company in the business of providing a service, using apps or selling devices will be affected by cognizant computing in some way. Gartner predicts that, by 2016, OSs such as iOS, Android and Windows will no longer define a consumer's choice of smartphone. Cognizant computing also heralds the next evolution of the personal cloud, as consumers switch their focus away from devices to apps and the cloud (with negative implications for smartphone vendors such as Apple and Samsung). We predict that, by 2015, most of the largest companies in the world will be using cognizant computing to fundamentally change the way they interact with their customers.


By amalgamating and analyzing data in the cloud from many sources (including apps, smartphones and wearable devices), cognizant computing will provide contextual insights into how people behave: what they watch, do and buy, who they meet, and where these activities take place. This will help companies increase the lifetime value of their increasingly fickle customers, improve customer care, boost their sales channels, and make their customer relationships more personal and relevant. In essence, this new development will help companies innovate and create new business opportunities.

Cognizant computing has four stages:

Sync Me: Apps, content and information are made available across devices and shared contextually.

See Me: Data is continuously collected about users and their devices to gain an understanding of users' context.

Know Me: Understanding users' wants and needs, and proactively offering products and services based on pattern recognition and other machine-learning approaches.

Be Me: Developing intelligent apps and services that act on users' behalf.

At the moment, most activity is around the first two stages. As big data and the Internet of Things (IoT) become more pervasive, the vast amounts of information produced will enable complex systems to become more "intelligent," offering brand-new opportunities in the latter two stages. This won't be without challenges or risk, however. Critical issues that will have to be addressed include consumer privacy, quality of execution and becoming a trusted vendor.

Cognizant computing is beginning to take shape via many mobile apps, smartphones and wearable devices that collect and sync information about users, their whereabouts and their social graph (mainly in the "Sync Me" and "See Me" stages of development). In addition, we are seeing the first personal digital assistants appear, such as Microsoft Cortana, calendaring apps such as Tempo AI and, to an extent, Apple's Siri and Google Now. In the next two to five years, the IoT and big data will meet analytics, and more data will make systems smarter. By 2017, smartphones will handle some tasks for us better than we can do ourselves. At that point, consumers' personal clouds will interact with their smartphones and other devices, and with the intricate app ecosystems they have created. The vast amount of very personal data that will flow between users and brands is likely to concern users who do not necessarily want to share this much data. Because this is a concern for all involved, it is advisable to build a trusted relationship between user and brand at an early stage and, of course, to add strong privacy and security controls at all consumer touchpoints.

User Advice:

Cognizant computing drives innovative analytics, apps, data and devices. Use its four-stage framework to stage new business models, to identify opportunities for supporting services and for app and device features, and as a link to smart machines and the digitalization of business.


Many cognizant computing services are still evolving, but look for companies that are developing deep learning, analytics, sophisticated algorithms and location-based technologies.

Evaluate which cognizant computing assets you currently have and which you can either create in-house or do without; also consider which partnerships you need to enter into to create a strong set of assets over the next 24 months to reap future revenue.

Business Impact: We predict that most of the world's 200 largest companies will use the full toolkit of big data and analytical tools to refine their offers and improve their customer experience by 2015. Thus, over the coming two to five years, we expect consumer-focused companies to use cognizant computing techniques to an increasing degree. This, in turn, will have a big impact on entire ecosystems and value chains across IT.

Technology and service providers that see opportunities within cognizant computing in the early years will create strong early-mover advantages: they will be better equipped to develop stronger, more reliable ecosystems and reap early revenue benefits. They will also be able to deal with threats and issues more easily than late movers. At present, in 2014, no vendor has a full set of cognizant computing capabilities. That said, vendors such as Amazon, Apple, Facebook, Google and Microsoft have considerable collections of individual capabilities, while a host of smaller, more niche players such as Anki, Medio and Tempo AI have some interesting propositions. The big vendors are already racing ahead, and consumer-focused businesses that have not yet entered this space risk falling behind quickly.

Benefit Rating: Transformational

Market Penetration: 1% to 5% of target audience

Maturity: Emerging

Sample Vendors: Amazon; Apple; Facebook; Google; Here

Recommended Reading:

"Market Trends: Cognizant Computing Will Reshape Mobile and App Market Revenue"

"Market Trends: Get Ahead in the Early Cognizant Computing Market by Smart Consumer Segmentation"

"Market Insight: Virtual Assistants Will Make Cognizant Computing Functional and Simplify Apps Usage"

"The Disruptive Era of Smart Machines Is Upon Us"

"Predicts 2014: Cognizant Computing Another Kind of Smart Device"

"Predicts 2014: Consumer Analytics and Personalized User Experiences Transform Competitive Advantage"

"Smart Machines Mean Big Impacts: Benefits, Risks and Mass Disruption"


IoT Platform

Analysis By: Alfonso Velosa

Definition: An Internet of Things (IoT) platform enables an enterprise to make better use of the information present in its devices. The platform obtains data from connected devices, aggregates it and passes it via a gateway and the cloud to the enterprise's systems, where enterprise employees and systems can analyze it and convert it into insights and action. An agile IoT platform should enable enterprises to build solutions with cloud-, edge- or gateway-centric IoT architectures.

Position and Adoption Speed Justification: There is significant opportunity for businesses to achieve greater value from the data located in devices that are, or will be, spread throughout the enterprise. Unfortunately, this data has been locked in the devices, mostly because of a lack of connectivity, but also because of a lack of standards, systems and processes to obtain it systematically, and even because of ignorance of the value of the information on those devices.

Enterprises continue to deploy IoT systems for benefits such as asset optimization and new revenue models. Examples include fleet management, maintenance optimization analysis of assets and the charge-per-use models seen in insurance. Thus, there is an increasing need, possibility and opportunity for IoT platforms to collect, process, analyze and disseminate device data in an integrated way. To gain the full value of the data in these systems, enterprises will need a system that incorporates:

Devices: The device may, or may not, be a part of the platform. It has sensors, processing

capabilities and connectivity to collect data and share it with other systems. It will collect data

with appropriate enterprise contextual elements, such as location and environmental

parameters.

Device Management: The device functionality or application logic and security need to be managed; it may also be necessary to format or process some of the data internally. This management may reside on the device, the gateway, the cloud or a combination thereof.

Connectivity: The data will need to be transmitted from the devices to enterprise systems. This

may occur via a gateway.

Application and API Layer: This layer enables enterprises to make the most of devices through

business rules, functions or applications, and/or APIs (to be consumed by other entities) that

the device will need to execute its core functions/applications. This application logic may reside

on the device, the gateway, the cloud or a combination thereof.

Security and Authentication: A comprehensive security and authentication process is required

to protect the integrity of the devices and the data. The process will need to be able to provide

scheduled and ad hoc updates on the security profile.

Analytics and Presentation Layer: The data will need to be analyzed and presented in a

format that facilitates the decision-making and action capabilities of enterprise IT and

operational technology employees and automated systems. This may reside on the device, the

gateway, the cloud or a combination thereof.
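To make the division of labor among these layers more concrete, here is a minimal Python sketch under stated assumptions: a hypothetical shared key (`GATEWAY_KEY`) stands in for credentials that the device management layer would provision, HMAC-SHA256 stands in for the security and authentication process, and a simple threshold check stands in for the analytics layer. The names `Reading`, `gateway_batch`, `cloud_ingest` and `analyze` are illustrative only; real IoT platforms use their own protocols and key management.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass
from statistics import mean

# Hypothetical key; in practice provisioned and rotated by device management.
GATEWAY_KEY = b"example-shared-secret"


@dataclass
class Reading:
    device_id: str
    metric: str
    value: float
    location: str  # contextual element collected along with the data


def gateway_batch(readings: list) -> dict:
    """Gateway role: aggregate device readings into one signed payload."""
    body = json.dumps([asdict(r) for r in readings], sort_keys=True)
    tag = hmac.new(GATEWAY_KEY, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "signature": tag}


def cloud_ingest(payload: dict) -> list:
    """Security layer: reject any payload whose signature does not verify."""
    expected = hmac.new(GATEWAY_KEY, payload["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, payload["signature"]):
        raise ValueError("payload failed authentication")
    return [Reading(**item) for item in json.loads(payload["body"])]


def analyze(readings: list, limit: float) -> dict:
    """Analytics layer: summarize each device and flag readings above a limit."""
    by_device = {}
    for r in readings:
        by_device.setdefault(r.device_id, []).append(r.value)
    return {dev: {"mean": mean(vals), "alert": max(vals) > limit}
            for dev, vals in by_device.items()}


readings = [
    Reading("pump-1", "temp_c", 61.0, "plant-A"),
    Reading("pump-1", "temp_c", 79.5, "plant-A"),
    Reading("pump-2", "temp_c", 55.0, "plant-B"),
]
summary = analyze(cloud_ingest(gateway_batch(readings)), limit=75.0)
print(summary)
```

Where each function runs (device, gateway or cloud) is deliberately left open, mirroring the point above that this logic may reside in any of those places.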


User Advice: System developers will want to look at aspects of the business that may benefit from

integration of the data from devices. Observe that while the technical elements may be quite

challenging, the developers and their management should also consider the cultural elements of the

enterprise just as thoroughly. For example, factor into your plans how the data from an IoT platform

will fit into the work processes for an enterprise, as well as how to incentivize employees to leverage

the data to its fullest potential.

Recognize that data format standards vary by industry, often by vendor and by legacy systems, so

any IoT platform that pulls in enough data will need to be capable of addressing multiple formats

and industry standards. Thus, ensure you understand the value and cost of the proper use of the

data in the system, and how it will cover the costs of any necessary consulting work to audit and

integrate all of your data sources and types.
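Handling multiple formats usually comes down to one adapter per source that maps incoming data onto a common schema. The sketch below assumes two hypothetical vendors, one sending JSON temperatures in Fahrenheit and one sending CSV in Celsius; the field names and the `ADAPTERS` registry are illustrative, not any platform's API.

```python
import csv
import io
import json


def from_vendor_a(raw: str) -> list:
    """Hypothetical vendor A: JSON records with Fahrenheit temperatures."""
    return [{"device_id": r["id"], "temp_c": round((r["tempF"] - 32) * 5 / 9, 2)}
            for r in json.loads(raw)]


def from_vendor_b(raw: str) -> list:
    """Hypothetical vendor B: CSV rows already in Celsius."""
    return [{"device_id": row["device"], "temp_c": float(row["celsius"])}
            for row in csv.DictReader(io.StringIO(raw))]


# One registered adapter per source format; downstream code sees only the common schema.
ADAPTERS = {"vendor_a": from_vendor_a, "vendor_b": from_vendor_b}


def normalize(source: str, raw: str) -> list:
    try:
        adapter = ADAPTERS[source]
    except KeyError:
        raise ValueError(f"no adapter registered for {source!r}") from None
    return adapter(raw)


rows = normalize("vendor_a", '[{"id": "a-1", "tempF": 212}]')
rows += normalize("vendor_b", "device,celsius\nb-7,21.5\n")
print(rows)
```

With this design, adding a data source means writing and registering one adapter rather than changing the analytics that consume the data, which is also where much of the audit and integration effort mentioned above tends to go.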

Business Impact: The value of an integrated system will depend on its ability to leverage industry-specific parameters. An IoT platform has the potential to help the enterprises that implement it outperform their peers in maximizing the use of information, as well as to extend operational elements, such as asset management, or to create new value chains that drive new revenue streams and deliver enhanced value to customers.

Benefit Rating: Transformational

Market Penetration: 1% to 5% of target audience

Maturity: Emerging

Sample Vendors: ARM; Axeda; Eurotech; Jasper; Microsoft; ThingWorx

Recommended Reading:

"Uncover Value From the Internet of Things With the Four Fundamental Usage Scenarios"

Open-Source Telecom Operations Management Systems

Analysis By: Norbert J. Scholz

Definition: This term describes how CSP back-office solutions such as billing, charging, revenue

assurance, fraud management, provisioning, network and inventory management can be

acquired through an open-source license process. Open-source software is available under license

and distribution conditions specified by the Open Source Initiative (http://www.opensource.org). An

open-source license typically permits free use, access to the source code, modification and

redistribution, subject to the conditions of the entity distributing it.

Position and Adoption Speed Justification: Open-source software plays a strong role in laying the

foundations of telecom operations management systems (TOMS) solutions, and many

communications service providers (CSPs) have dedicated internal resources to developing these

solutions in-house. Currently, open-source software is used mainly in the middleware layer to

integrate various platforms. Open-source software on the solution and application level remains in


the early stages of development. Commercial deployment of open-source TOMS solutions is

minimal and mainly by small CSPs. Among the main concerns about open-source TOMS is the lack

of dedicated product road maps, proven scalability and reliability, competitive differentiation, and

committed product support, all of which are offered under traditional licensing models.

CSPs that use a licensing model and are looking for cost reductions and business agility are likely to consider open APIs, service-oriented architecture, outsourcing and software as a service, or to use open-source software in conjunction with such models. Some CSPs are considering open-source TOMS to reduce high support and maintenance costs and to avoid vendor lock-in.

The CSPs most likely to adopt open-source TOMS are small ones that do not compete with each other, and over-the-top providers that are not burdened by legacy back-office infrastructure and do not consider TOMS solutions to be competitive differentiators. Some large CSPs with strong internal IT departments might prefer open-source TOMS solutions to avoid exposure to external suppliers. Finally, system integrators occasionally use open-source TOMS solutions in some engagements as an alternative to commercial off-the-shelf solutions. Billing and CRM are the most common open-source solutions.

Open-source TOMS may never reach maturity. It is likely to remain an alternative to commercial

licensing and outsourcing models for a small number of CSPs.

User Advice:

Start using open-source software internally on the middleware and foundational levels.

Assess the vendor's track record, based on reference checks.

Focus on the following categories:

Scalability

Integration

Overall issues of process integrity

Cost savings

Ability to support process modeling

Security
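When comparing candidate solutions, the evaluation categories above can be combined into a simple weighted score. The sketch below assumes hypothetical weights and 1-to-5 scores for two hypothetical candidates; in practice each CSP would set these from its own priorities and reference checks.

```python
# Categories taken from the advice above; all weights and scores are hypothetical.
CATEGORIES = (
    "scalability", "integration", "process integrity",
    "cost savings", "process modeling", "security",
)


def weighted_score(weights: dict, scores: dict) -> float:
    """Weighted average of per-category scores for one candidate solution."""
    missing = [c for c in CATEGORIES if c not in scores or c not in weights]
    if missing:
        raise ValueError(f"missing categories: {missing}")
    total_weight = sum(weights[c] for c in CATEGORIES)
    return sum(weights[c] * scores[c] for c in CATEGORIES) / total_weight


weights = {"scalability": 3, "integration": 2, "process integrity": 2,
           "cost savings": 1, "process modeling": 1, "security": 3}
candidate_x = {"scalability": 4, "integration": 3, "process integrity": 3,
               "cost savings": 5, "process modeling": 2, "security": 4}
candidate_y = {"scalability": 2, "integration": 4, "process integrity": 4,
               "cost savings": 5, "process modeling": 3, "security": 3}

for name, scores in (("X", candidate_x), ("Y", candidate_y)):
    print(name, round(weighted_score(weights, scores), 2))
```

Making the weights explicit exposes the trade-off: here, candidate Y's better integration and process scores do not offset its weaker scalability and security, because those two categories carry the most weight.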

Business Impact: Open-source software is used mainly in the foundational technology of TOMS

solutions. Reducing the "integration tax" remains high on CSP priority lists. But the dearth of

carrier-grade open-source TOMS solutions has compelled most CSPs to stick with conventional

license and outsourced TOMS solutions. There is little incentive for large CSPs to participate in

open-source initiatives because they often consider TOMS a competitive differentiator. Traditional software vendors benefit from the prevalence of traditional licensing models, which provide them with an annuity stream for customization and support. Some system integrators are working with

open-source TOMS suppliers to provide more customized solutions for CSPs than are available


from mainstream software vendors. These factors are likely to delay growth in open-source TOMS

for at least five to 10 years.

Benefit Rating: Moderate

Market Penetration: Less than 1% of target audience

Maturity: Emerging

Sample Vendors: Agileco; Freeside; jBilling; Open BRM; OpenNMS Group; OpenRate; ParaBill;

ProactiveRA; Zenoss

5G

Analysis By: Sylvain Fabre

Definition: 5G is a term used to describe the next stage of mobile network infrastructure technology beyond 4G (Long Term Evolution [LTE] and LTE Advanced [LTE-A]). However, 5G standards have not yet been defined, and some of the functionalities that have been defined beyond 4G are currently being appended to the existing 4G set of standards. 5G throughput may exceed 4G's theoretical maximum of 1 Gbps, but the difference may not be very large, because 4G has already approached the limits imposed by the laws of physics.
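The physical limit alluded to above is usually framed by the Shannon-Hartley theorem, which caps the error-free throughput of a single channel at C = B log2(1 + S/N), where B is the bandwidth in hertz and S/N is the signal-to-noise ratio. The short Python calculation below is an illustration with arbitrary example numbers, not figures from this research. It shows why per-link gains flatten out: capacity scales linearly with bandwidth but only logarithmically with signal power.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Shannon-Hartley upper bound C = B * log2(1 + S/N) for one channel."""
    snr_linear = 10 ** (snr_db / 10)
    return bandwidth_hz * math.log2(1 + snr_linear)


# Example: a 20 MHz channel at 20 dB SNR.
base = shannon_capacity_bps(20e6, 20)
# Doubling the bandwidth doubles the bound...
wider = shannon_capacity_bps(40e6, 20)
# ...while doubling the signal power (+3 dB) adds only about 1 bit/s/Hz.
louder = shannon_capacity_bps(20e6, 23)

print(f"base {base / 1e6:.1f} Mbps, wider {wider / 1e6:.1f} Mbps, louder {louder / 1e6:.1f} Mbps")
```

This is why, once link efficiency is close to the bound, further throughput gains tend to come from more spectrum or more parallel channels (more antennas and more cells) rather than from each individual link.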

Position and Adoption Speed Justification: Currently, because no standards actually exist for 5G,

various lab demonstrations are able to lay claim to some "5G-related functionality." LTE-A is being

worked into the 4G standards, as all standards in the Third Generation Partnership Project (3GPP)

R11 and R12 are still related to LTE-A. Additional working groups include:

Korea 5G Forum

China IMT 2020 (5G) Promotion Group (under MIIT, NDRC and MOST)

Japan 2020 and Beyond AdHoc Group (under ARIB)

Europe: METIS, 5GIC, ETSI

While it is still unclear which specific features will be included in, or should even be prioritized for, 5G, its technological basis will likely be laid down in the next three years or so, given the number of people who claim to be working on it and the amount of interest in 5G. Based on the 3GPP history of successive technology generations, deployment of 5G in networks will come seven to 10 years after the start of LTE-A, that is, around 2020 to 2023. In fact, NTT Docomo, the three Korean CSPs (SK Telecom, KT, LG U+) and China have declared their intention to launch 5G commercially in 2020. With cellular technologies, the definition in standards emerges long before mainstream adoption.

It may be that 5G is not defined as a single global standard. There seems to be a risk of competing approaches, just like the cellular situation in China, where the specific TD-SCDMA flavor of 3G was created, as well as the TDD variant of LTE; however, that may not necessarily matter by


then, as software-defined radio (SDR) maturity could mean that supporting multiple standards will

just be a programming issue.

Although 3GPP standardization (R14) has not yet started and the ITU has not yet made frequency recommendations for 5G, the race for the glory of being the first to launch 5G in Asia and/or worldwide would be a key motivation for some communications service providers (CSPs). Furthermore, the 2020 Tokyo Olympic Games should be a driver, as their timing would be perfect for a launch in Japan.

User Advice:

Focus mobile infrastructure planning on LTE, LTE-A, small cells and heterogeneous networks

(HetNet); 5G is much too embryonic to be a concern for CSPs' planning at least until the end

of the decade. Commercial network equipment could be available by 2020, with commercial

CSP rollouts expected around 2020 to 2023.

Be mindful of the risk of increased hype around 5G, and of products that are marketed as 5G but may not qualify as such, in the same way that 4G has been misconstrued in marketing over the last few years. Ask vendors to indicate which standard they are building to. Until a 5G standard is actually defined, vendors' efforts toward 5G are valuable but can at best be described as early lab prototypes.

Business Impact: Uses can be found and developed for increased bandwidth, but 5G's

incremental value on top of LTE and LTE-A, as well as a mature small cell layer and pervasive Wi-Fi,

may be limited with respect to the deployment costs involved (as is the case with every new

wireless network generation).

Rather, development of 5G standards may focus on the user's perception of unlimited capacity.

Some potential areas for 5G networks that have been considered in research include the following:

Pervasive networks

Cognitive radio

SDR

Internet Protocol version 6 (IPv6)

Wearable devices

5G could be a framework whereby many existing legacy technologies, such as 3G, 4G, Wi-Fi,

HetNet and the small cells layer, are able to better coexist and interwork, using both licensed and

unlicensed spectrum.

Benefit Rating: High

Market Penetration: Less than 1% of target audience

Maturity: Embryonic


Sample Vendors: Alcatel-Lucent; BMW; Ericsson; Huawei; Nokia Solutions and Networks; NTT Docomo; Samsung; Telecom Italia

DevOps

Analysis By: Ronni J. Colville; Jim Duggan

Definition: DevOps represents a change in IT culture, focusing on rapid IT service delivery through the adoption of agile, lean practices in the context of a system-oriented approach. DevOps emphasizes people (and culture), and seeks to improve collaboration between operations and development teams. DevOps implementations use technology, especially automation tools that can leverage an increasingly programmable and dynamic infrastructure from a life cycle perspective.

Position and Adoption Speed Justification: DevOps doesn't have a concrete set of mandates or standards, or a known framework (e.g., ITIL or Capability Maturity Model Integration [CMMI]), making it subject to more liberal interpretation. For many, it is elusive enough to make it difficult to know where to begin and how to measure success. This can accelerate adoption or potentially inhibit it. DevOps is primarily associated with continuous integration and delivery of IT services as a means of providing linkages across the application life cycle, from development to production.

DevOps concepts are becoming more widespread across cloud projects and in more traditional enterprise environments. The creation of DevOps teams brings development and operations staff together to more consistently manage an end-to-end view of an application or IT service. For some IT organizations, streamlining release deployments from development through production is the first area of attention; this is where the most acute service delivery pain exists.

DevOps practices include the creation of a common process for the developer and operations

teams; formation of teams to manage the end-to-end provisioning and practices for promotion and

release; a focus on high fidelity between environments; standard and automated practices for build

or integration; higher levels of test automation and test coverage; automation of manual process

steps and informal scripts; and more comprehensive simulation of production conditions throughout

the application life cycle in the release process.

Both Dev and Ops look to tools to replace custom scripting with consistent application or service

models, improving deployment success through more predictable configurations. The adoption of

these tools is not associated with development or production support staff, but rather with groups

that straddle development and production, and is typically instantiated to address specific Web

applications with a need for increased release velocity. To facilitate and improve testing and

continuous integration, tools that offer monitoring specific to testers and operations staff are also

beginning to emerge. Another challenge of DevOps adoption is the requirement for pluggability.

Toolchains are critical to DevOps to enable the integration of function-specific automation from one

part of the life cycle to another.
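The release practices described above (automated build and test, gated promotion and the same deployment step across environments) can be sketched as a minimal toolchain. This is a hypothetical Python illustration: the `Toolchain` class and the stage functions are placeholders for real build, test and release automation tools, not any vendor's API, and "deploying" here only records a log entry.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# Each stage wraps one function-specific tool and reports success or failure.
Stage = Callable[[str], bool]


@dataclass
class Toolchain:
    stages: List[Tuple[str, Stage]]
    environments: List[str]
    log: List[str] = field(default_factory=list)

    def release(self, version: str) -> Optional[str]:
        """Run every automated stage, then promote through each environment in order."""
        for name, run in self.stages:
            if not run(version):
                self.log.append(f"{version}: stopped at {name}")
                return None  # nothing is promoted if any stage fails
        for env in self.environments:
            # Placeholder for one automated deployment step reused in every environment.
            self.log.append(f"{version}: deployed to {env}")
        return self.environments[-1]


# Hypothetical stand-ins for real build and test tools.
def build(version: str) -> bool:
    return True


def unit_tests(version: str) -> bool:
    return not version.endswith("-broken")


def integration_tests(version: str) -> bool:
    return True


chain = Toolchain(
    stages=[("build", build), ("unit tests", unit_tests), ("integration tests", integration_tests)],
    environments=["test", "staging", "production"],
)
print(chain.release("1.4.0"))         # production
print(chain.release("1.5.0-broken"))  # None
print(chain.log)
```

The pluggability concern shows up in the `Stage` type: any tool that can be wrapped as a pass/fail function can join the chain without changing the promotion logic.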

DevOps implementation is not a formal process; therefore, adoption is somewhat haphazard. Many aspire to reach the promised fluidity and agility, but few do. IT organizations leveraging pace-layering techniques can stratify and categorize applications and find applications that could be


good targets for adoption. We expect this bifurcation (development focus and operations focus) to

continue for the next two years, but as more applications or IT services become agile-based or

customer-focused, the adoption of DevOps will quickly follow. DevOps does not preclude the use of

other frameworks or methodologies, such as ITIL. Incorporating some of these best-practice

approaches can enhance overall service delivery.

User Advice: DevOps hype is beginning to peak among tool vendors, with the term applied

aggressively and claims outrunning demonstrated capabilities. Many vendors are adapting their

existing portfolios and branding them DevOps to gain attention. Some vendors are acquiring smaller

point solutions specifically developed for DevOps to boost their portfolios. We expect this to

continue. IT organizations must establish key criteria that will differentiate DevOps traits (strong

toolchain integration, workflow, continuity, context, specificity, automation) from traditional

management tools.

Successful adoption or incorporation of this approach will not be achieved by a tool purchase, but

is contingent on a sometimes difficult organizational philosophy shift. Because DevOps is not

prescriptive, it will likely result in a variety of manifestations, making it more difficult to know

whether one is actually "doing" DevOps. However, the lack of a formal process framework should

not prevent IT organizations from developing their own repeatable processes for agility and control.

Because DevOps is emerging in definition and practice, IT organizations should approach it as a set

of guiding principles, not as process dogma. Select a project involving development and operations

teams to test the fit of a DevOps-based approach in your enterprise. Often, this is aligned with one

application environment. If adopted, consider expanding DevOps to incorporate technical

architecture. At a minimum, examine activities along the existing developer-to-operations

continuum, and look for opportunities where the adoption of more-agile communication processes

and patterns can improve production deployments.

Business Impact: DevOps is focused on improving business outcomes through continuous improvement and incremental release principles borrowed from agile methodologies. While agility often equates to speed, there is a somewhat paradoxical effect: smaller, more frequent updates to production can improve overall stability and control, thus reducing risk.

Benefit Rating: Transformational

Market Penetration: 5% to 20% of target audience

Maturity: Adolescent

Sample Vendors: Boundary; CFEngine; Chef; Circonus; Puppet Labs; SaltStack

Recommended Reading:

"Deconstructing DevOps"

"DevOps Toolchains Work to Deliver Integratable IT Process Management"

"Leveraging DevOps and Other Process Frameworks Requires Significant Investment in People and Process"


"DevOps and Monitoring: New Tools for New Environments"

"Catalysts Signal the Growth of DevOps"

"Application Release Automation Is a Key to DevOps"

At the Peak

In-Memory Computing

Analysis By: Massimo Pezzini

Definition: Gartner defines in-memory computing (IMC) as an architecture style that assumes all the

data required by applications for processing is located in the main memory of their computing

environments. In IMC-style applications, hard-disk drives (HDDs; or substitutes such as solid-state

drives [SSDs]) are used to persist in-memory data for recovery purposes, to manage overflow

situations, to archive historical data and to transport data to other locations, but not as the primary

location for the application data.
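A minimal Python sketch of the split described in this definition: main memory holds the primary copy of the data, and the disk is used only to persist changes for recovery. The append-only JSON log and the `InMemoryStore` class are assumptions chosen for illustration, not the design of any particular IMDBMS or IMDG product.

```python
import json
import os
import tempfile


class InMemoryStore:
    """Key-value store whose primary copy of the data lives in main memory.

    The append-only log on disk is used only to rebuild state after a restart.
    """

    def __init__(self, log_path: str) -> None:
        self.log_path = log_path
        self.data = {}
        self._recover()
        self._log = open(log_path, "a", encoding="utf-8")

    def _recover(self) -> None:
        # Replay the log, if present; later entries overwrite earlier ones.
        if os.path.exists(self.log_path):
            with open(self.log_path, encoding="utf-8") as f:
                for line in f:
                    key, value = json.loads(line)
                    self.data[key] = value

    def put(self, key: str, value) -> None:
        # Make the change durable on disk first, then apply it in memory.
        self._log.write(json.dumps([key, value]) + "\n")
        self._log.flush()
        os.fsync(self._log.fileno())
        self.data[key] = value

    def get(self, key: str):
        # Reads are served from memory only; the disk is never consulted.
        return self.data.get(key)

    def close(self) -> None:
        self._log.close()


path = os.path.join(tempfile.mkdtemp(), "store.log")
store = InMemoryStore(path)
store.put("acct:42", {"balance": 100})
store.put("acct:42", {"balance": 75})
store.close()

restarted = InMemoryStore(path)  # simulates a restart: memory is rebuilt from the log
print(restarted.get("acct:42"))  # {'balance': 75}
restarted.close()
```

Production systems add snapshots, log compaction and replication on top of this basic idea, which is where the new high-availability and disaster recovery challenges mentioned later in this profile come in.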

Position and Adoption Speed Justification: Technology advancements have dramatically reduced the cost of main memory (currently DRAM), to the point of making it technically feasible and economically affordable to store multiple terabytes of data in memory.

IMC-style applications, enabled by a range of software technologies including in-memory DBMSs (IMDBMSs), in-memory data grids (IMDGs), event-processing platforms, high-performance messaging infrastructures and in-memory analytics tools, deliver significant improvements in performance, scalability and analytic sophistication over traditional architectures. These technologies have reached a notable degree of maturity and adoption; some (for example, IMDGs) have been on the market for over a decade, and some (for example, in-memory analytics tools) boast installed bases in the tens of thousands of clients. However, the maturity of IMC-style packaged applications varies considerably by domain.

IMC opens up a number of opportunities that would simply be unthinkable with traditional architectures, including, but not limited to:

Web-scale applications that support global operations (for example, e-commerce, online

entertainment and travel reservation systems) and new digital business models (for example,

API economy and cloud services).

Improved situation awareness in business operations by injecting real-time analytics, stream

processing and other operational business intelligence capabilities in transactional business

processes.

Faster delivery of business intelligence reports, interactive and unconstrained data navigation,

and self-service analytics enablement.

Support for "fast and big" data analytics scenarios.


Therefore, IMC will have a long-term, disruptive impact by radically changing users' expectations,

application design principles, products' architectures and vendors' strategies.

Initially pioneered by leading-edge organizations in financial services, telecom, defense and the Web industry, IMC is rapidly moving onto the radar screen of mainstream organizations, which are more sensitive to new technologies' perceived business value than to their technical sophistication.

IMC adoption across vertical sectors, geographies and business sizes will notably grow, favored by

factors such as:

The insatiable demand for greater speed and scale driven by digital business requirements.

Eagerness for deeper and more timely analytics.

Dramatic reduction in main memory hardware costs.

Continuous maturation of the enabling technologies and their consolidation into broad and

better-integrated suites of IMC capabilities.

Endorsement of IMC architectures by packaged application vendors, SaaS providers and

application infrastructure (such as portal products, content management platforms, BPM tools,

integration platforms, application platforms) vendors.

Emergence and maturation of open-source IMC-enabling technologies.

However, several factors will continue to slow adoption:

Technology and vendor landscape fragmentation.

Lack of commonly agreed upon industry standards.

Scarcity of skills, and still not fully formalized industry best practices.

New security, high-availability, disaster recovery and IT operation challenges.

The efforts and costs associated with re-engineering established (custom or packaged)

applications for IMC.

The long time it will take application providers to release new native IMC versions of their products, which will then take several years to mature.

Consequently, overall IMC adoption and maturity still lag the hype and vendor marketing. For this reason, we see it approaching the Peak of Inflated Expectations.

User Advice: Application architects and other IT leaders in charge of defining and implementing application architectures to support strategic initiatives (such as Web-scale or digital business) should identify which of the following approaches is the best path for IMC adoption in their organizations, based on their desired business outcomes, risk profile, willingness to invest in IT innovation, technology environment and available skills:


Developing new custom applications (or purchasing packaged ones) conceived from inception on the basis of IMC design principles. This "native IMC" style may lead to transformational business benefits, but also exposes the organization to higher risks of failure.

Replatforming traditional applications on top of an in-memory data store (IMDBMSs or IMDGs). This "retrofitted for IMC" style is the least invasive, but usually leads to only incremental benefits.

Re-engineering established applications by adopting IMC design principles and technologies only in part, extending or partially reworking the application logic only in the sections where IMC can provide the improvements mandated by the business requirements.
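The "retrofitted for IMC" style keeps the application logic intact and swaps the data store underneath it. As a small-scale illustration only, SQLite's `:memory:` mode stands in for an IMDBMS below; a real replatforming would target a dedicated IMDBMS or IMDG, and the `orders` table and function names are hypothetical.

```python
import os
import sqlite3
import tempfile


def open_orders_total(conn: sqlite3.Connection) -> float:
    """Existing application logic; it depends only on a DB-API connection."""
    row = conn.execute(
        "SELECT COALESCE(SUM(amount), 0) FROM orders WHERE status = 'open'"
    ).fetchone()
    return row[0]


def load_sample_data(conn: sqlite3.Connection) -> None:
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, status TEXT)")
    conn.executemany(
        "INSERT INTO orders (amount, status) VALUES (?, ?)",
        [(120.0, "open"), (80.5, "open"), (40.0, "closed")],
    )
    conn.commit()


results = {}
disk_db = os.path.join(tempfile.mkdtemp(), "orders.db")
# Only the connection target changes: a file on disk versus SQLite's in-memory mode.
for label, target in (("disk", disk_db), ("memory", ":memory:")):
    conn = sqlite3.connect(target)
    load_sample_data(conn)
    results[label] = open_orders_total(conn)
    conn.close()
print(results)  # {'disk': 200.5, 'memory': 200.5}
```

Because the query logic is untouched, this style is cheap to apply but cannot exploit capabilities that native IMC designs build in from the start, which is why its benefits tend to be incremental.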

Many providers will retrofit and/or rearchitect their established products and cloud services for IMC. Therefore, IT leaders should monitor vendor road maps to identify how this might affect their investment plans.

For many mainstream organizations, entering IMC through a native IMC style may prove too complex and risky. Therefore, unless business imperatives set different priorities, it may be advisable to become familiar incrementally with IMC design principles, technologies, and the new governance and management challenges by successfully deploying a few IMC applications based on less-challenging IMC styles before embarking on more ambitious, native-style projects.

Business Impact: IMC-style applications may drive transformational business benefits by enabling

IT leaders to:

Deliver orders-of-magnitude-faster performance for analytical and transaction processing

applications.

Support Web-scale/global-scale business models (for example, mobile banking, e-commerce,

online gaming, travel reservation and API-enabled businesses) supporting hundreds of

thousands or millions of globally distributed, possibly mobile-enabled users (clients, patients,

citizens, business partners) interacting in real time.

Provide deeper and greater real-time business insights and situation awareness.

Organizations leveraging IMC are better positioned to build defensible business differentiation than those sticking with traditional architectures. Organizations that fail to embrace IMC risk falling behind in the race for leadership in the digital era.

Benefit Rating: Transformational

Market Penetration: 5% to 20% of target audience

Maturity: Adolescent

Sample Vendors: GigaSpaces Technologies; IBM; Magic Software Enterprises; Microsoft; Oracle;

Pivotal; Qlik; Relex; SanDisk; SAP; Software AG; Tibco Software; Workday; YarcData

Recommended Reading:

"Taxonomy, Definitions and Vendor Landscape for In-Memory Computing Technologies"

"The Spectrum of IMC Styles Meets the Spectrum of Business Needs"

"Hybrid Transaction/Analytical Processing Will Foster Opportunities for Dramatic Business Innovation"

Subscription Billing

Analysis By: Norbert J. Scholz

Definition: Subscription billing is an extension of service billing. It is also known as "recurring billing," "cloud billing," "software-as-a-service (SaaS) billing," "activity-based billing," "dynamic revenue management" and "revenue life cycle management." It is more complex than accounting- or payment-processing-based service billing in that it allows for usage-based billing together with recurring billing for subscriptions, rather than charging for one-time transactions or static subscriptions.

Position and Adoption Speed Justification: Subscription billing tools differ from IT chargeback and IT financial management tools by using resource usage data (similar to call detail records [CDRs] in the communications industry) to calculate the costs for chargeback and aggregate them for a service. Alternatively, they may offer service-pricing options (such as per employee or per transaction) independent of resource usage. When pricing is based on usage, these tools can gather resource-based data across various infrastructure components, including servers, networks, storage, databases and applications. Service-billing tools perform allocation based on the amount of resources (including virtualized and cloud-based resources) allocated to and used by the service, for accounting and chargeback purposes.
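The usage-based allocation described above can be illustrated with a minimal Python sketch. It assumes CDR-like usage records tagged with a service and a resource type, and a flat unit rate per resource type; the record fields, resource names and rates are hypothetical and are not drawn from any vendor's product.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal, ROUND_HALF_UP
from typing import Dict, Iterable


@dataclass(frozen=True)
class UsageRecord:
    """A CDR-like resource usage record (hypothetical field names)."""
    service: str        # service the usage is attributed to
    resource: str       # resource type, e.g. "server_hours"
    quantity: Decimal   # amount consumed, in the resource's unit


def chargeback_by_service(
    records: Iterable[UsageRecord], rates: Dict[str, Decimal]
) -> Dict[str, Decimal]:
    """Price each usage record and aggregate the cost per service."""
    totals: Dict[str, Decimal] = defaultdict(Decimal)
    for rec in records:
        if rec.resource not in rates:
            raise KeyError(f"no unit rate defined for {rec.resource!r}")
        totals[rec.service] += rec.quantity * rates[rec.resource]
    # Round each service total to cents for accounting and chargeback reports.
    cent = Decimal("0.01")
    return {svc: cost.quantize(cent, rounding=ROUND_HALF_UP) for svc, cost in totals.items()}


# Hypothetical unit rates across infrastructure components.
RATES = {
    "server_hours": Decimal("0.12"),
    "storage_gb_month": Decimal("0.03"),
    "network_gb": Decimal("0.05"),
}

usage = [
    UsageRecord("e-commerce", "server_hours", Decimal("100")),
    UsageRecord("e-commerce", "storage_gb_month", Decimal("500")),
    UsageRecord("crm", "network_gb", Decimal("200")),
]
print(chargeback_by_service(usage, RATES))
```

Decimal arithmetic is used rather than floats so that monetary totals round predictably; a usage-independent pricing option (per employee or per transaction) would simply replace the per-record pricing step.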

Service-billing costs are based on service definitions and include infrastructure and other resource use costs (such as people-related costs). As a result, service-billing tools usually integrate with IT financial management tools and IT chargeback tools. These tools will evolve to work with service governors to set billing policies that use cost as a parameter, and to ensure that resource allocation is managed based on cost and service levels. Because of their importance to businesses, these tools have been deployed by service providers, in cloud environments and by IT organizations that use or deploy applications such as e-commerce applications.

For communications service providers (CSPs), subscription billing for voice has always been part of their billing systems. Subscription billing for content is becoming increasingly important. However, many existing billing systems cannot adapt to the requirements of recurring content billing or dynamically combine voice and data subscriptions.

Subscription billing is usually provided on a SaaS basis, and solutions increasingly resemble subscription management solutions for e-commerce. Subscription billing can contain the following elements: real-time rating, mediation, allowance management, product catalogs, analytics and dashboards, order management and provisioning, customer self-service, invoicing, payments, promotion and campaign management, product management, settlement, collections and others.

Subscription billing enables activities such as multitier pricing, multiple revenue streams per customer, service and product bundling, usage caps, entitlements, personalization, cross-product discounts, and promotions.
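How a subscription biller combines a recurring charge with usage-based, multitier pricing, an allowance and a usage cap can be sketched as a single rating function for one billing period. This minimal Python example assumes a plan made of a fixed recurring fee, an included usage allowance, graduated price tiers for usage beyond the allowance, and an optional cap on usage charges; all names and figures are hypothetical, and production rating engines typically also deal with proration, taxes, discounts and multiple meters.

```python
from dataclasses import dataclass
from decimal import Decimal, ROUND_HALF_UP
from typing import Optional, Tuple


@dataclass(frozen=True)
class Tier:
    # Cumulative upper bound of billable units priced in this tier;
    # None means unbounded and should be used for the last tier.
    up_to: Optional[Decimal]
    unit_price: Decimal


@dataclass(frozen=True)
class Plan:
    recurring_fee: Decimal               # fixed charge per billing period
    included_units: Decimal              # allowance covered by the recurring fee
    tiers: Tuple[Tier, ...]              # ascending graduated tiers for overage
    usage_cap: Optional[Decimal] = None  # maximum usage charge per period


def monthly_charge(plan: Plan, units_used: Decimal) -> Decimal:
    """Rate one period: recurring fee plus tiered overage, limited by the cap."""
    billable = max(units_used - plan.included_units, Decimal(0))
    usage_charge = Decimal(0)
    lower = Decimal(0)
    for tier in plan.tiers:
        upper = billable if tier.up_to is None else min(billable, tier.up_to)
        if upper > lower:
            usage_charge += (upper - lower) * tier.unit_price
        if tier.up_to is None or billable <= tier.up_to:
            break
        lower = tier.up_to
    if plan.usage_cap is not None:
        usage_charge = min(usage_charge, plan.usage_cap)
    total = plan.recurring_fee + usage_charge
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Hypothetical data plan: 20.00 per month including 5,000 MB,
# then 0.01 per MB for the next 1,000 MB and 0.005 per MB beyond that.
plan = Plan(
    recurring_fee=Decimal("20.00"),
    included_units=Decimal("5000"),
    tiers=(Tier(Decimal("1000"), Decimal("0.01")), Tier(None, Decimal("0.005"))),
)
print(monthly_charge(plan, Decimal("6500")))  # prints 32.50 (20.00 + 10.00 + 2.50)
```

A billing system that supports only the `recurring_fee` part of this plan would be the "recurring charges only" case that the advice below flags as usually inappropriate for CSPs.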

User Advice: Consider two scenarios for subscription billing:

Scenario 1: Bill for your own and third-party services.

Evaluate the scalability of subscription-billing solutions and their ability to combine usage-based billing with recurring charges. Billing systems that support only recurring charges are usually inappropriate for CSPs' requirements.

Ensure that the solution integrates well with other front- and back-office solutions.

Ascertain that nontechnical staff can easily use the solution.

Have a contingency plan in case the vendor no longer exists in its current form; many vendors are small and funded by venture capital.

Scenario 2: Make subscription billing available to your small and midsize enterprise clients.

Offer subscription billing just like any other IT or value-added service in a cloud-based environment, for a monthly charge.

Business Impact: In general, subscription billing tools are critical to running IT as a business. They provide the means to determine the financial effect of sharing IT and other resources in the context of services. They also feed billing data back to IT financial management tools and chargeback tools to help businesses understand the costs of IT and budget appropriately. These tools also provide better cost transparency and governance in a public cloud environment.

CSPs already have specific billing systems in place that can handle many of the requirements met by subscription-billing solutions. In general, subscription-billing solutions are desirable, but not crucial unless CSPs' existing systems cannot adapt to recurring billing requirements. Subscription-billing vendors might challenge established CSP billing vendors in the medium to long term because they tend to offer more coherent and flexible solutions.

Benefit Rating: Moderate

Market Penetration: 1% to 5% of target audience

Maturity: Emerging

Sample Vendors: Accumulus; Aria Systems; Cerillion; Comarch; MetraTech; Monexa; Omniware; Recurly; Redknee; SAP; Transverse; Vindicia; Zuora

Business Capability Modeling

Analysis By: Neil Osmond


Definition: Business capability modeling is a technique for representing the ways in which resources, competencies, information, processes and their environments can be combined to deliver consistent value to customers. Business capability models are one way to represent the future-state capabilities of a business, as well as to provide a platform for illustrating how current capabilities and business assets (people, processes, information and technologies) may need to change in response to strategic challenges and opportunities.

Position and Adoption Speed Justification: Communications service provider (CSP) leaders face significant business challenges as the decline of their companies' core revenue accelerates due to commoditization, substitution by over-the-top services and unfavorable regulatory environments. Although data revenue is growing, margins are declining due to falling prices. To survive, CSPs will need to grow through business diversification and consolidation. They will also need to grow their data business profitably, while improving the customer experience and customer loyalty.

Many CSPs are looking to use a new set of digital services to drive growth. To do this successfully, they will need to establish relevant synergies across their organizations. The premise is that digital services models differ from traditional CSP service models for service design, implementation, delivery and support, in which services are enabled from deep within the network layer. This has significant implications for a CSP's IT organization, which will require different skills, competencies and capabilities.

The concept of expressing business capabilities and business capability modeling is not new. In fact, business academics and practitioners have been talking about modeling business capabilities for years, and as a result there are many definitions. However, this approach is mainly being adopted by enterprise architects who are proactively trying to mature their enterprise architecture efforts to engage business leaders.

Over the past year, Gartner has engaged with CSP clients during inquiry sessions, one-to-one meetings and workshops on how to use business capability modeling as a platform to inform and guide decision making between business and IT executives, especially as they look to transform IT to support digital business. We therefore position business capability modeling slightly before the Peak of Inflated Expectations.

User Advice: Create a future-state business anchor model as part of the development of a business outcome statement and an enterprise context (see "Define the Business Outcome Statement to Guide Enterprise Architecture Efforts").

Consider using business capability modeling as a technique for representing the organization's future state (see "Eight Business Capability Modeling Best Practices"), along with other possible models (such as business process, operating and functional models).

Once a future-state model exists, CSPs can use business capability modeling as a platform for creating both diagnostic and actionable deliverables (see "Use Business Capability Modeling to Execute CSP Digital Services IT Strategies"). More detailed business capability models may be used to illustrate specific decisions within the information, business, solution and technology architecture viewpoints (see "Toolkit: Using Business Capability Modeling to Execute CSP Digital Services IT Strategies").
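One simple diagnostic deliverable of this kind is a capability gap list that compares each capability's current-state maturity with the maturity the future-state model requires, then ranks the gaps to inform investment discussions. The Python sketch below is illustrative only: the 1-to-5 maturity scale, the capability names and the ranking rule are assumptions, not part of the research cited here.

```python
from dataclasses import dataclass
from typing import List

MIN_MATURITY, MAX_MATURITY = 1, 5  # assumed maturity scale


@dataclass(frozen=True)
class Capability:
    name: str
    current_maturity: int  # assessed current-state maturity
    target_maturity: int   # maturity required by the future-state model

    def __post_init__(self) -> None:
        # Reject scores outside the assumed scale.
        for level in (self.current_maturity, self.target_maturity):
            if not MIN_MATURITY <= level <= MAX_MATURITY:
                raise ValueError(f"{self.name}: maturity {level} outside scale")

    @property
    def gap(self) -> int:
        """How far the capability falls short of its future state (0 if none)."""
        return max(self.target_maturity - self.current_maturity, 0)


def prioritize_gaps(capabilities: List[Capability]) -> List[Capability]:
    """Return capabilities that need to change, largest gap first."""
    needing_change = [c for c in capabilities if c.gap > 0]
    return sorted(needing_change, key=lambda c: (-c.gap, c.name))


# Hypothetical CSP capabilities for a digital services strategy.
model = [
    Capability("Digital service design", current_maturity=1, target_maturity=4),
    Capability("Network operations", current_maturity=4, target_maturity=4),
    Capability("Partner settlement", current_maturity=2, target_maturity=4),
]
for cap in prioritize_gaps(model):
    print(f"{cap.name}: gap {cap.gap}")
```

Ranking purely by gap size keeps the sketch short; weighting each gap by strategic priority would be an equally reasonable design choice.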


Business Impact: Business capability modeling is of "high" benefit because it enables CSPs' business and IT strategic planners to engage in business planning and understand the impact of associated decisions on business and IT. The value of this modeling is principally that it helps them focus on and explore business direction and plans. It can also help them focus on and illustrate investment decisions, which they can then link to architectural changes.

An equally important benefit is that this modeling enables enterprise architecture practitioners to have objective discussions about business capabilities without drilling down into technology, people, processes and information details. Drilling down into details too early can derail discussions about business direction and strategy, and organizational optimization.

Benefit Rating: High

Market Penetration: 5% to 20% of target audience

Maturity: Adolescent

Recommended Reading:

"Business Capability Modeling Brings Clarity and Insight to Strategy and Execution"

"Business Capability Modeling Helps Mercy Execute on Business Transformation"

"Eight Business Capability Modeling Best Practices Enhance Business and IT Collaboration"

"Starter Kit: Business Capability Modeling Workshop"

"Toolkit: Business Capability Modeling Starter Kits for Multiple Industries"

"Use Business Capability Modeling to Illustrate Strategic Business Priorities"

"To Assess the Impact of Change, Connect Process Models With Business Capability Models"

Managed Mobility Services

Analysis By: Eric Goodness

Definition: Managed mobility services (MMS) encompass the IT and process services, provided by an external service provider, required to plan, procure, provision, activate, manage and support