SIMULATION
http://sim.sagepub.com/

Generalized Transport Mean Shift algorithm for ubiquitous intelligence
Khamron Sunat, Panida Padungweang and Sirapat Chiewchanwattana
SIMULATION 2012 88: 1202, originally published online 22 May 2012
DOI: 10.1177/0037549712445233

The online version of this article can be found at:
http://sim.sagepub.com/content/88/10/1202

Published by:
http://www.sagepublications.com

On behalf of:
Society for Modeling and Simulation International (SCS)


OnlineFirst Version of Record: May 22, 2012
Version of Record: Oct 8, 2012

at Bibliotheques de l'Universite Lumiere Lyon 2 on November 4, 2012 sim.sagepub.com Downloaded from

Simulation: Transactions of the Society for Modeling and Simulation International
88(10) 1202–1215
© 2012 The Society for Modeling and Simulation International
DOI: 10.1177/0037549712445233
sim.sagepub.com

Generalized Transport Mean Shift algorithm for ubiquitous intelligence

Khamron Sunat¹, Panida Padungweang² and Sirapat Chiewchanwattana¹

Abstract

Much research has recently been conducted on ubiquitous intelligent computing. Ubiquitous intelligence-enabled techniques, such as clustering and image segmentation, have focused on the development of intelligence methodologies. In this paper, a simultaneous mode-seeking and clustering algorithm called the Generalized Transport Mean Shift (GTMS) is introduced. The data points are assigned transporter-trailer roles. The concept of transportation is used to remove the redundant computations of mode-seeking algorithms. The time complexity of the GTMS algorithm is much lower than that of the Mean Shift (MS) algorithm, so it can be applied to problems with a very large number of data points, in particular the segmentation of images containing green vegetation. The proposed algorithm was tested on clustering and image-segmentation problems. The experimental results showed that the GTMS algorithm improves upon the existing algorithms in terms of both accuracy and time consumption. At its fastest, the GTMS algorithm is 333.98 times faster than the standard MS algorithm. Redundant computation can be reduced by omitting more than 90% of the data points by the third iteration of the mode-seeking process, because the GTMS algorithm mainly reduces the data in the mode-seeking process. Thus, use of the GTMS algorithm would allow an intelligent portable device for surveying green vegetables to be built for a ubiquitous environment.

Keywords

Mean Shift algorithm, agglomerative mean shift clustering, Generalized Transport Mean Shift algorithm, image segmentation, clustering, mode seeking, ubiquitous intelligence

1. Introduction

Ubiquitous intelligence computing is largely dedicated to research on the technologies used to improve the intelligence capability of multimedia devices. In this setting, the capacity to elaborate, extract information, and improve the quality of the information extracted from the environment is crucial. The development of ubiquitous intelligence, such as data analysis, image analysis, pattern analysis, and computer vision, is also addressed. Clustering is an important process in data analysis. Computer vision problems, such as video and motion estimation, require an appropriate area of support for correspondence operations. The area of support can be identified using segmentation techniques. Pattern recognition problems can also make use of segmentation results in matching. Consequently, image segmentation and clustering are important processes in ubiquitous intelligence computing and are used for analyzing and investigating the nature of the given data. A powerful technique for solving this problem is to automatically find the modes of the density of the given data. Normally the algorithm is guided by the data density. The local maxima of the density surface are assumed to be the modes, or the centers of clusters. The mode of every data point is computed, and data points that have the same mode are assigned to the same cluster. The algorithm is useful for clustering,¹,² image segmentation,³,⁴ and tracking.⁵ However, finding the mode of all of the data points requires a repeated process, which is very time consuming. The Generalized Transport Mean Shift (GTMS) algorithm and its variation are proposed to overcome this difficulty.

The Mean Shift (MS) algorithm is a powerful technique for seeking the modes of any given data. The standard MS algorithm is an iterative procedure that can automatically find the mode of the density for a data point. It begins by computing the weight of all points as a function of their distances from the point under consideration. The next step is to compute the weighted mean point to get the shift position. The position is shifted iteratively toward higher density until the shift position no longer changes or changes by less than an acceptable distance. This position is assumed to be the mode of the point under consideration, and all data points that converge to the same mode are assumed to belong to the same cluster. However, the standard MS algorithm is very slow because its time complexity is $O(kn^2m)$.⁶ This makes it unsuitable for use, especially in image-segmentation applications, such as the segmentation of images containing green vegetation, which have a very large number of data points. Improving the speed of the MS algorithm by reducing the computational complexity is, therefore, very important.

¹ Department of Computer Science, Khon Kaen University, Thailand
² Department of Mathematics, Statistic and Computer, Ubon Ratchathani University, Thailand

Corresponding author:
Khamron Sunat, Department of Computer Science, Khon Kaen University, Khon Kaen, 40002, Thailand.
Email: khamron_sunat@yahoo.com

Techniques to speed up the MS algorithm have frequently been proposed. A speed-up technique from neural network learning was applied to the MS algorithm by Padungweang et al.;⁷ this allowed it to run faster than the previous version while retaining the accuracy of the original. Following this, a speed up of the Gaussian Blurring Mean Shift (GBMS) algorithm was proposed⁶ that clusters the data in each iteration and removes any cluster in which some data have converged to its mode. Thus, the next iteration has less data, allowing for faster computing. However, the GBMS algorithm can produce results that differ from those of the standard MS algorithm. This is because its density estimate is computed using the current positions in each iteration, whereas that of the standard algorithm is usually computed using the initial positions of the given data set. Several methods that improve the speed of the MS algorithm for image segmentation have been proposed.⁸ In the first, neighboring pixels are grouped into the same cell and the MS algorithm is then applied. A cell that is shifted into a cell already shifted in the previous iteration stops being computed and is assumed to belong to the same cluster. In the second, neighboring pixels in the spatial domain, identified by a specific distance, are grouped into the same cluster and the process continues as in the first method. Both methods produced excellent speed ups. However, a wrong clustering result can occur, even in the first step of clustering the group of points. The remaining two methods approximate the E (Expectation) and M (Maximization) steps in each iteration using a subset of the data and a quadratic convergence technique, which helped to decrease the number of iterations. However, this requires heavy computation, so the speed up is smaller than hoped. The Improved Fast Gaussian Transform Mean Shift (IFGT-MS) algorithm⁹ adopts the improved Gaussian transform for numerical approximation. It is very efficient for large-scale and high-dimensional data sets. However, the IFGT-MS algorithm is not only limited to the Gaussian kernel function but also fails on moderate-scale data.¹⁰,¹¹ To the best of the authors' knowledge, the most recently proposed algorithm is Agglomerative Mean Shift (Agglo-MS) clustering.¹⁰,¹¹ Covering hyper-ellipsoids are used to cluster data iteratively, which leads to hierarchical clustering via the MS process. The covering hyper-ellipsoids require the inverse of the covariance matrix, which is an extra cost and is biased by the data dimension. The algorithm can also produce a poor result if its parameter is not properly selected. However, this algorithm inspired us by demonstrating the benefit of exploiting the hill-climbing behavior, in which many data points are shifted in the same direction.

In this paper, the GTMS algorithm is proposed for intelligence modeling. The basic idea of the MS algorithm, which is the shift process, is retained in this algorithm. However, instead of finding the mode of all points, the GTMS algorithm requires only a few points, called transporters, which represent their trailers. Moreover, finding the transporters does not incur any extra cost, because the GTMS algorithm uses the distance values that must be computed in the shifting process anyway. The trailers are the data points that are shifted into the same mode as a transporter. The transporter-trailer relationship is established by considering the direction of the transporter's trajectory and the trailer's shift direction. The trailers are excluded from the next iteration and only the transporters are computed. In addition, a transporter can be assigned as a trailer in a later iteration; this not only reduces the number of transporters to be computed, but also performs a simultaneous hierarchical clustering.

In Section 2, we briefly explain the nature of the MS algorithm and the Agglo-MS algorithm. The GTMS algorithm is introduced in Section 3. The experimental results on real-world clustering and image-segmentation problems, together with a discussion, are described in Section 4. Section 5 is the conclusion.

2. Standard Mean Shift algorithm and Agglomerative Mean Shift algorithm

Let $X \subset \mathbb{R}^m$ be a data set of $n$ data points in an $m$-dimensional Euclidean space, with $X = (x_1, x_2, \ldots, x_n)$ and $x_i = [x_1, x_2, \ldots, x_m]^T$. A probability density estimate at a given point $x$ is defined by

\[ p(x) = \frac{1}{n} \sum_{i=1}^{n} K\left( \left\| \frac{x - x_i}{\sigma} \right\|^2 \right), \tag{1} \]

where $K(t)$ is a kernel function and $\sigma$ is a constant bandwidth such that $\sigma > 0$. A mode of the density is a position $x$ having zero gradient, $\nabla p(x) = 0$. The MS algorithm is an iterative procedure for seeking the mode of the density estimate by repeatedly shifting the position $x$ towards higher density and is written as

\[ x^{(\tau+1)} = f(x^{(\tau)}), \tag{2} \]

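with

\[ f(x) = \sum_{i=1}^{n} \frac{K'\left( \left\| (x - x_i)/\sigma \right\|^2 \right)}{\sum_{j=1}^{n} K'\left( \left\| (x - x_j)/\sigma \right\|^2 \right)} \, x_i, \tag{3} \]

where $K'(t) = dK/dt$ and $\tau$ is the iteration index. Using the Gaussian function $K(t) = e^{-t/2}$, (2) and (3) can be reduced¹² to

\[ x^{(\tau+1)} = \sum_{i=1}^{n} p(i \mid x^{(\tau)}) \, x_i \tag{4} \]

and

\[ p(i \mid x^{(\tau)}) = \frac{\exp\left( -\tfrac{1}{2} \left\| (x^{(\tau)} - x_i)/\sigma \right\|^2 \right)}{\sum_{j=1}^{n} \exp\left( -\tfrac{1}{2} \left\| (x^{(\tau)} - x_j)/\sigma \right\|^2 \right)}. \tag{5} \]

The algorithm terminates if the shift distance is equal to zero or is less than a tolerance threshold, as follows:

\[ \left\| x^{(\tau)} - x^{(\tau-1)} \right\| \le \text{threshold}. \tag{6} \]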
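The Gaussian update in (4) and (5) with the stopping rule (6) can be sketched in a few lines of plain Python. This is only an illustrative sketch, not the authors' MATLAB code: the function name `gaussian_mean_shift`, the `max_iter` safety cap, and the toy one-dimensional data are assumptions introduced here.

```python
import math

def gaussian_mean_shift(x, data, sigma, tol=1e-7, max_iter=1000):
    """Iterate (4)-(5) from x until the shift is at most tol, as in (6)."""
    x = list(x)
    for _ in range(max_iter):  # max_iter is a safety cap (assumption)
        # Unnormalised weights of (5): exp(-0.5 * ||(x - x_i) / sigma||^2).
        weights = [
            math.exp(-0.5 * sum((a - b) ** 2 for a, b in zip(x, xi)) / sigma ** 2)
            for xi in data
        ]
        total = sum(weights)
        # Weighted mean of the data points, i.e. the shifted position of (4).
        new_x = [
            sum(w * xi[d] for w, xi in zip(weights, data)) / total
            for d in range(len(x))
        ]
        shift = math.sqrt(sum((a - b) ** 2 for a, b in zip(new_x, x)))
        x = new_x
        if shift <= tol:
            break
    return x

# Toy data (assumption): two well-separated one-dimensional groups.
data = [[0.0], [0.1], [0.2], [10.0], [10.1], [10.2]]
mode_a = gaussian_mean_shift(data[0], data, sigma=1.0)
mode_b = gaussian_mean_shift(data[5], data, sigma=1.0)
```

Each call costs one pass over all $n$ points per iteration, which is where the quadratic dependence on $n$ comes from when every point is shifted separately.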

The clustering is performed by representing each mode of the kernel density estimate as a cluster, with the data points converging to their corresponding modes. This idea is depicted for two clusters in Figure 1(a). Figure 1(b) shows the data points being shifted upward toward their modes. The solid black lines represent the trajectory of each data point.

The Agglo-MS¹⁰,¹¹ is an agglomerative MS clustering algorithm. It is built upon an iterative query set compression mechanism motivated by the quadratic bounding optimization characteristic of the MS algorithm. It performs well on the segmentation of images and the clustering of moderate-scale data sets. Since space is limited, the interested reader is directed to Yuan et al.¹⁰,¹¹

3. Generalized Transport Mean Shift algorithm

In general, there are many positions shifting along the same trajectory and trying to place themselves at their mode, as the example in Figure 1 shows. Considering Figure 2, the $i$th data is shifted to a position that is close to the original position of the $k$th data at iteration $\tau$. Also, the direction of the shift vector of the $i$th data is parallel to the trajectory vector of the $k$th data. Therefore, the $i$th data should be considered as a trailer of the $k$th data, which is assumed to be the transporter of the $i$th data. Hence, the shifting of the $i$th data need not be computed in the next iteration.

Even though the $j$th data at iteration $\tau$ is also shifted to a position near the original position of the $k$th data, its mode is different from the mode of the $k$th data. One of the main ideas of this work is that the nearest point assigned as the transporter should have a trajectory vector with the same direction as the shift vector of the trailer.

In order to obtain the solution, four matrices are introduced. The first matrix holds the trajectory vectors of all the data points. The second matrix stores the indexes of the transporters. The last two matrices are logical, indicating the convergence status and the present status of the data points. The details of each matrix are as follows.

Let $U \in \mathbb{R}^{m \times n}$. The $i$th column of $U$, denoted by $u_i$, is the unit trajectory vector of the $i$th data at the first iteration and can be computed as

\[ u_i = \frac{x_i^{1} - x_i^{0}}{\left\| x_i^{1} - x_i^{0} \right\|}. \tag{7} \]

Figure 1. (a) The plotting of two data clusters. (b) The trajectory of data points obtained by applying the Mean Shift algorithm to a two-dimensional data set. The third axis denotes the density of the data.

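Let $T \in \mathbb{R}^{1 \times n}$ be a transporter matrix, where the $i$th column of $T$, denoted by $t_i$, is the index of the transporter of the $i$th data, such that

\[ t_i = \begin{cases} \arg\min_{j} \left\| x_i^{\tau} - x_j \right\|^2 & \text{if } \delta_{ij} \le \alpha, \\ i & \text{otherwise,} \end{cases} \tag{8} \]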

where $\alpha$ is a constant threshold. $\delta_{ij}$ denotes the generalized angle between the trajectory vector ($u_j$) and the shift vector ($v_i$). $\delta_{ij}$ is in the range $[0, 1]$ and is defined by

\[ \delta_{ij} = \frac{1}{2} \left( 1 - \frac{v_i \cdot u_j}{|v_i| \, |u_j|} \right). \tag{9} \]

The vectors are parallel and have the same direction if $\delta_{ij} = 0$. If $\delta_{ij} = 1$, the vectors are still parallel but point in opposite directions (180 degrees apart).
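As a quick check of (9), the generalized angle can be computed directly from two vectors. The sketch below is illustrative only; the function name `generalized_angle` is hypothetical, and returning 0.5 when one vector has zero length is an assumption made here, since the text does not define that case.

```python
import math

def generalized_angle(v, u):
    """Generalized angle of (9): 0 for same direction, 1 for opposite."""
    dot = sum(a * b for a, b in zip(v, u))
    norm = math.sqrt(sum(a * a for a in v)) * math.sqrt(sum(b * b for b in u))
    if norm == 0.0:
        # Assumption: an undefined direction is treated like an orthogonal one.
        return 0.5
    return 0.5 * (1.0 - dot / norm)

same = generalized_angle([1.0, 0.0], [2.0, 0.0])        # parallel, same direction
opposite = generalized_angle([1.0, 0.0], [-3.0, 0.0])   # parallel, opposite
orthogonal = generalized_angle([1.0, 0.0], [0.0, 5.0])  # 90 degrees apart
```

Orthogonal vectors land at the midpoint 0.5, so a threshold $\alpha$ below 0.5 only accepts transporters whose trajectory points into the same half-space as the shift vector.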

The last two matrices are $C_{1 \times n}$, the matrix of the convergence status, and $A_{1 \times n}$, the matrix of the present status. $a_i$ is the $i$th column of $A$ and $c_i$ is the $i$th column of $C$, expressed as follows:

\[ c_i = \begin{cases} 1 & \text{if the } i\text{th data has converged,} \\ 0 & \text{otherwise;} \end{cases} \tag{10} \]

\[ a_i = \begin{cases} 1 & \text{if the } i\text{th data should be present in the next iteration,} \\ 0 & \text{otherwise.} \end{cases} \tag{11} \]

The GTMS algorithm is shown as pseudo-code in Algorithm 1. To reiterate, the trajectory vector is computed in step 2 and is assigned in step 5. Steps 6, 9, and 10 are the ones normally performed by the MS algorithm. If, however, a transporter of the $i$th data is found in step 8, then step 9, which computes the exponential of all the distance values from step 6 using (5), need not be carried out. Furthermore, the transporter found for the $i$th data is recorded in $t_i$. We expect that the more trailers are found, the less data needs to be computed in the next iteration.

Algorithm 1 (GTMS)

Initialization:
    C is initialized to false.
    A is initialized to true.
    Initialize the value of parameter $\alpha$.
for each $x_i \in X$ do
    1. Compute $x_i^{1}$
    2. Calculate the shift distance and the trajectory vector
           Set $z = x_i^{\tau} - x_i^{\tau-1}$   (with $\tau = 1$ in this first pass)
           Set $s = \|z\|^2$
           Set $v_i = z / s^{0.5}$
    3. Consider the convergence
           if $s \le$ threshold then
               $c_i$ = true, $a_i$ = false
           end if
    4. Set the $i$th data to be its own transporter, $t_i = i$
end for
5. Set $\tau = 1$, $U = V$   // $u_i$ is a unit trajectory vector
while there is any $a_i$ = true do
    for each $x_i$ with $a_i$ = true do
        6. Calculate the distance ($d_k$) from $x_i^{\tau}$ to $x_k \in X$, $1 \le k \le n$
        7. Find the $j$th data that is nearest to the $i$th data
               $j = \arg\min_k d_k$
        8. Investigate the transporter
               Compute $\delta = \frac{1}{2}\left(1 - v_i^{\tau} \cdot u_j / (|v_i^{\tau}| \, |u_j|)\right)$
               if $i \ne j$ and $t_j \ne i$ and $\delta \le \alpha$ then
                   set $t_i = j$, $a_i$ = false   // assign the transporter and deactivate the trailer
               else
                   9. Compute $x_i^{\tau+1}$
                   10. Follow steps 2 and 3
               end if
    end for
    Increase the number of iterations by setting $\tau = \tau + 1$.
end while
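One possible reading of Algorithm 1 is sketched below in plain Python, assuming a Gaussian kernel and a brute-force nearest-point search. It is a hedged illustration rather than the authors' MATLAB implementation: the names `gtms`, `_shift`, `_unit_step`, and `_root` are hypothetical, the `max_iter` cap is an assumption, convergence is tested on the shift length rather than its square, and the guard against cycles follows the whole transporter chain instead of making a single comparison.

```python
import math

def _shift(x, data, sigma):
    """One Gaussian mean-shift step: the weighted mean of (4)-(5)."""
    w = [math.exp(-0.5 * sum((a - b) ** 2 for a, b in zip(x, p)) / sigma ** 2)
         for p in data]
    total = sum(w)
    return [sum(wi * p[d] for wi, p in zip(w, data)) / total
            for d in range(len(x))]

def _unit_step(new, old):
    """Return (shift length, unit shift vector); zero vector if no movement."""
    z = [a - b for a, b in zip(new, old)]
    s = math.sqrt(sum(c * c for c in z))
    return s, ([c / s for c in z] if s > 0.0 else [0.0] * len(z))

def _root(t, i):
    """Follow transporter links from i up to a self-transporting point."""
    while t[i] != i:
        i = t[i]
    return i

def gtms(data, sigma, alpha, tol=1e-7, max_iter=1000):
    n = len(data)
    pos = [list(p) for p in data]   # current positions
    t = list(range(n))              # step 4: every point transports itself
    active = [True] * n             # present-status matrix A
    v = [None] * n                  # latest unit shift vectors
    for i in range(n):              # steps 1-3: first mean-shift pass
        new = _shift(pos[i], data, sigma)
        s, v[i] = _unit_step(new, pos[i])
        pos[i] = new
        active[i] = s > tol
    u = [list(vi) for vi in v]      # step 5: first-iteration trajectories
    for _ in range(max_iter):       # max_iter is a safety cap (assumption)
        if not any(active):
            break
        for i in range(n):
            if not active[i]:
                continue
            # Steps 6-7: original data point nearest to the shifted position.
            j = min(range(n), key=lambda k: sum(
                (a - b) ** 2 for a, b in zip(pos[i], data[k])))
            # Step 8: generalized angle (9); v and u hold unit vectors.
            delta = 0.5 * (1.0 - sum(a * b for a, b in zip(v[i], u[j])))
            if j != i and _root(t, j) != i and delta <= alpha:
                t[i] = j            # i becomes a trailer of j
                active[i] = False
            else:                   # steps 9-10: ordinary mean-shift update
                new = _shift(pos[i], data, sigma)
                s, v[i] = _unit_step(new, pos[i])
                pos[i] = new
                active[i] = s > tol
    return t, pos

# Toy data (assumption): two well-separated one-dimensional groups.
data = [[0.0], [0.1], [0.2], [10.0], [10.1], [10.2]]
t_fast, _ = gtms(data, sigma=1.0, alpha=2.0)      # nearest point always accepted
t_ms, pos_ms = gtms(data, sigma=1.0, alpha=-1.0)  # no transporter is ever accepted
```

With a threshold above 1 the outer points of each toy group attach to the central point after a single shift, while a negative threshold leaves every point as its own transporter and the loop reduces to ordinary mean shift.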

According to step 8, the transporter of the $i$th data is found and assigned to $t_i$. This means that the $i$th data should move toward the same mode as its transporter. Hierarchical clustering is simultaneously performed in this step using matrix $T$. At the convergence step, the depth-first search algorithm¹³ is used for retrieving all the trailers of the remaining transporters. At this point, each cluster may contain several transporters. It is therefore easy to cluster those transporters by using a distance threshold. An appropriate value of the threshold is a proportion of the bandwidth of the density estimator. In this paper we use half of the bandwidth as the threshold.
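The final grouping step can be illustrated with a short sketch. Note the swapped-in technique: instead of running a depth-first search downward from each transporter, as the text describes, this version follows the transporter links upward from each point, which yields the same membership. The function name `clusters_from_transporters`, the greedy merge against one representative per cluster, and the toy inputs are all assumptions made here.

```python
import math

def clusters_from_transporters(t, positions, bandwidth):
    """Label each point by its final transporter, merging nearby transporters."""
    n = len(t)

    def root(i):
        # Follow transporter links until a self-transporting point is reached.
        while t[i] != i:
            i = t[i]
        return i

    roots = [root(i) for i in range(n)]
    merge_dist = 0.5 * bandwidth  # half of the bandwidth, as stated in the text
    representatives = []          # one remaining transporter per cluster
    root_label = {}
    for r in sorted(set(roots)):
        for label, rep in enumerate(representatives):
            gap = math.sqrt(sum((a - b) ** 2
                                for a, b in zip(positions[r], positions[rep])))
            if gap <= merge_dist:
                root_label[r] = label
                break
        else:
            # No existing cluster is close enough, so start a new one.
            root_label[r] = len(representatives)
            representatives.append(r)
    return [root_label[r] for r in roots]

# Hypothetical inputs: transporter indexes and converged positions.
labels = clusters_from_transporters(
    [1, 1, 1, 4, 4, 4],
    [[0.1], [0.1], [0.1], [10.1], [10.1], [10.1]],
    bandwidth=1.0,
)
merged = clusters_from_transporters([0, 1, 2], [[0.0], [0.3], [5.0]], bandwidth=1.0)
```

The second call shows the merge rule: the first two points are their own transporters but lie within half a bandwidth of each other, so they share a label.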

Although there is an additional parameter ($\alpha$) in our algorithm, this parameter makes the algorithm more flexible. It is used for choosing a suitable transporter-trailer relationship. Normally, $\alpha$ lies in $[0, 1]$. For $\alpha = 0$, the trailer is transported by the transporter only if the shift vector of the trailer and the trajectory vector of the transporter are parallel and point in the same direction. For $\alpha = 1$, any angle between the vectors in the range of 0–180 degrees is acceptable. Hence, the value of (9) spans the range $[0, 1]$. However, the parameter can be assigned outside this range. In the case of $\alpha > 1$, the point nearest to the shift position of the $i$th data is always assigned to be the transporter of the $i$th data. In this case, the GTMS algorithm is at its fastest. When $\alpha < 0$, no transporter is ever assigned. In this case, the GTMS algorithm performs as the standard MS algorithm. Therefore, the MS algorithm can be considered as a special case of the proposed GTMS algorithm.

Figure 2. Trajectory vector of $x_k$, which is assigned to be the transporter, and shift directions of $x_i$ and $x_j$ at iteration $\tau$.

The value of $\alpha$ can be adapted in each iteration. According to the nature of a mode-seeking algorithm, data that belong to different modes are shifted farther from each other as the number of iterations increases. A technique for adjusting $\alpha$ is also provided in this paper. Let $\alpha_0$ be an initial value and $\alpha_{\max}$ be the maximum acceptable threshold, reached at iteration $\tau_{\max}$. The value of $\alpha$ can be assigned as

\[ \alpha = \min\left( \alpha_{\max},\; \alpha_0 + \frac{\alpha_{\max} - \alpha_0}{\tau_{\max}} \, i \right), \tag{12} \]

where $i$ denotes the number of iterations. The value of $\alpha$ thus increases linearly from $\alpha_0$ to $\alpha_{\max}$ as the number of iterations reaches $\tau_{\max}$.
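The linear ramp described above is small enough to state as a one-line function. The sketch assumes the ramp has slope (maximum minus initial value) divided by the iteration at which the maximum is reached, which is what the description of a linear increase implies; the name `alpha_schedule` and its argument names are hypothetical, and the example values are those listed for GTMS2 in Section 4.

```python
def alpha_schedule(i, alpha_0, alpha_max, tau_max):
    """Threshold at iteration i: linear ramp from alpha_0, capped at alpha_max."""
    return min(alpha_max, alpha_0 + (alpha_max - alpha_0) * i / tau_max)

# GTMS2-style setting: start at 0 and reach 0.5 at iteration 10.
ramp = [alpha_schedule(i, 0.0, 0.5, 10) for i in (0, 5, 10, 25)]
```

Starting strict and loosening the threshold lets early iterations accept only well-aligned transporters, while later iterations, where points of different modes have already drifted apart, can accept more of them.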

Our claim is that the GTMS process is much faster than the MS algorithm because the trailers are excluded from the next iteration. The time complexity of the GTMS algorithm is $O\left(nm \sum_{i=1}^{p} q_i\right)$, where $p$ is the number of iterations, $q_p \le \ldots \le q_2 \ll q_1 = n$, and $q_i$ denotes the number of active data points at iteration $i$. The space complexity of the GTMS algorithm is $O(nm)$.

4. Experimental results

The GTMS algorithm was tested on clustering and image-segmentation problems. In general, density estimator-based algorithms need a suitable bandwidth; choosing it is still an open problem and is beyond the scope of this paper. Consequently, we experimentally selected some suitable bandwidths for comparison. The algorithms were tested with the same parameters and in the same environment. Some short names are introduced. MS1 denotes the standard MS algorithm, as in Yang et al.⁹ MS2 denotes the standard MS algorithm with converged points excluded from the next iteration. GTMS1 denotes the fastest GTMS algorithm, obtained by choosing $\alpha > 1$, and GTMS2 denotes a GTMS algorithm with initial threshold $\alpha_0 = 0$ and maximum threshold $\alpha_{\max} = 0.5$ reached at iteration $\tau_{\max} = 10$. The tolerance threshold is set to $10^{-7}$ for all experiments. MS1, MS2, GTMS1, and GTMS2 are coded in MATLAB. The MS and Agglo-MS algorithms were downloaded from the website of Yuan (Yuan et al.¹¹) at http://sites.google.com/site/xtyuan1980/publications. They are coded in C++.

4.1 Clustering

Experiments were conducted to evaluate the accuracy of

the proposed algorithm. The algorithm was tested using

real-world data sets from the University of California,

Irvine (UCI) machine learning repository,

14

such as iris,

soybean, and blood. The bandwidth of the density estimator

was selected by repeatedly applying the standard MS algo-

rithm with different bandwidths until the number of clusters

produced by the algorithm was equal to the real one. Every

algorithm was now governed using the same bandwidth.

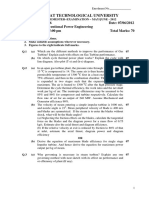

The average accuracies of the clustering results are shown

in Table 1 and the speed ups are shown in Figure 3. It can

be observed that the GTMS algorithm was not only a fast

algorithm, but it also achieved more accurate clustering

results than the standard MS algorithm. For the blood data

set, every algorithm achieved the same level of accuracy

results; GTMS1, however, took less time than the other

algorithms. GTMS1 archived 100% clustering accuracy for

the soybean data set, showing an increased speed of up to

8.31 times faster than MS1.

Figure 3. The average speed up over 10 experiments of the GTMS algorithm compared with MS1 and MS2, respectively.

Table 1. Average accuracy results (%) on 10 experiments of the clustering.

| Data set | MS1 | MS2 | GTMS1 | GTMS2 |
|---|---|---|---|---|
| Iris | 62.67 | 62.67 | 84 | 82 |
| Soybean | 98.87 | 98.87 | 100 | 98.87 |
| Blood | 76.47 | 76.47 | 76.47 | 76.47 |

4.2 Image segmentation

The algorithms were evaluated on image segmentation using six well-known images (Hand, Lena, Hawk, Houses, Cameraman, and Small bowel loops) and three green vegetable images,¹⁵ Vegetable (F), Vegetable (D), and Vegetable (B). A pixel of the first four images was decomposed into a five-dimensional vector (x, y, R, G, B). The first two dimensions were normalized to the range [1, 100] in order to reduce the effect of the image's size. The last three images were decomposed as in Liying et al.¹⁵ into (x, y, G-R, G-B, H, S, I), with (x, y) normalized to the range [1, 20]. The average times consumed over the 10 experiments and the speed up of the GTMS algorithm with different bandwidths of the density estimator are shown in Table 2. For the comparison with the MS and Agglo-MS algorithms, all seven images are used and preprocessed using the script provided by Yuan (Yuan et al.¹¹). Their running times cannot be compared with those of the proposed algorithms because they were run on different systems.
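The five-dimensional pixel decomposition can be illustrated as follows. The text gives the target range [1, 100] for the coordinates but not the exact mapping, so the linear min-max scaling used here is an assumption, as are the function names `scale_coordinate` and `pixel_features` and the tiny example image.

```python
def scale_coordinate(index, size, low=1.0, high=100.0):
    """Map a 0-based pixel index linearly onto [low, high] (assumed mapping)."""
    if size == 1:
        return low
    return low + (high - low) * index / (size - 1)

def pixel_features(image):
    """Turn rows of (R, G, B) tuples into [x, y, R, G, B] feature vectors."""
    height, width = len(image), len(image[0])
    features = []
    for row in range(height):
        for col in range(width):
            r, g, b = image[row][col]
            features.append([
                scale_coordinate(col, width),   # x
                scale_coordinate(row, height),  # y
                r, g, b,
            ])
    return features

# Hypothetical 2 x 2 colour image.
tiny = [[(255, 0, 0), (0, 255, 0)],
        [(0, 0, 255), (10, 20, 30)]]
feats = pixel_features(tiny)
```

Scaling the coordinates to a fixed range keeps the spatial part of the distance comparable across images of different sizes, so the same bandwidth has a similar meaning for each image.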

The results in Table 2 show that GTMS1 achieved a speed up of 81–333 times over MS1 and 8–53 times over MS2. Moreover, GTMS2, which produced a result approximating that of the MS algorithm, achieved speed ups of up to 223 and 23 times over MS1 and MS2, respectively. Because the Vegetable (F), (D), and (B) images consist of many pixels (more than 30,000) and the progress of MS1 was very slow, we did not run MS1 on these images. Looking at Table 3, for the Vegetable (F) image, only 15 data points remained active for GTMS1 in the second iteration. This means that a huge number of trailers were shifted in the same direction as their transporters. The GTMS algorithm excluded those points from the next iteration, while the MS algorithm still computed a shift direction for them. All points remained for MS2, since there were no converged points in this iteration. The numbers of active data points in the first five iterations of all images using the different algorithms are shown in Table 3. It can be seen that GTMS1 gave the fewest active data points at iteration 5 for all images. The speed ups of the proposed GTMS algorithm compared with MS1 and MS2 are shown in Figure 4. The results show that GTMS1 gave a better speed up than GTMS2 for almost all of the images. Figure 5 shows that the accuracy of GTMS1 is comparable to that of GTMS2. Finally, it can be observed that GTMS1 significantly reduced unnecessary computation as the iterations progressed. Less than 10% of the data points of all images remained at the end of the second iteration using GTMS1. For the Vegetable (F) image, only 0.04% of the data points were still active at the end of the second iteration.

Table 2. The average time (seconds) consumed by image segmentation over 10 experiments and the speed up of the Generalized Transport Mean Shift (GTMS) algorithm compared with MS1 and MS2.

| Data set | Bandwidth (σ) | MS1 time | MS2 time | GTMS1 time | GTMS1 speed up vs MS1 | GTMS1 speed up vs MS2 | GTMS2 time | GTMS2 speed up vs MS1 | GTMS2 speed up vs MS2 |
|---|---|---|---|---|---|---|---|---|---|
| Hand | 500 | 747.73 | 73.19 | 2.98 | 250.53 | 24.52 | 12.92 | 57.84 | 5.66 |
| Hand | 200 | 643.89 | 64.35 | 3.15 | 204.13 | 20.40 | 9.20 | 69.98 | 6.99 |
| Lena | 500 | 304.60 | 49.20 | 1.84 | 165.51 | 26.74 | 6.30 | 48.33 | 7.81 |
| Lena | 200 | 577.83 | 57.29 | 2.42 | 238.41 | 23.64 | 6.97 | 82.85 | 8.22 |
| Hawk | 500 | 1442.80 | 134.22 | 4.32 | 333.81 | 31.05 | 12.05 | 119.73 | 11.14 |
| Hawk | 200 | 1477.17 | 252.16 | 4.72 | 312.62 | 53.37 | 10.62 | 139.00 | 23.73 |
| Houses | 500 | 500.00 | 75.61 | 3.82 | 130.66 | 19.76 | 13.20 | 37.85 | 5.72 |
| Houses | 200 | 1215.16 | 154.81 | 4.15 | 292.38 | 37.25 | 12.11 | 100.29 | 12.78 |
| Cameraman | 400 | 124.07 | 25.51 | 1.53 | 81.21 | 16.70 | 3.78 | 32.85 | 6.75 |
| Cameraman | 200 | 174.04 | 36.10 | 1.57 | 110.91 | 23.00 | 3.84 | 45.35 | 9.41 |
| Small bowel loops | 100 | 456.48 | 36.49 | 2.16 | 211.51 | 16.91 | 2.46 | 185.64 | 14.84 |
| Small bowel loops | 90 | 501.23 | 38.06 | 2.21 | 226.72 | 17.22 | 2.24 | 223.80 | 17.00 |
| Vegetable (F) | 100 | | 5327.93 | 8.81 | | 604.82 | 1822.47 | | 2.92 |
| Vegetable (F) | 50 | | 8499.35 | 14.02 | | 606.04 | 1525.82 | | 5.57 |
| Vegetable (D) | 100 | | 13,274.20 | 16.88 | | 476.49 | 1085.72 | | 7.41 |
| Vegetable (D) | 50 | | 13,274.20 | 27.92 | | 475.38 | 709.43 | | 18.71 |
| Vegetable (B) | 100 | | 3964.05 | 8.64 | | 458.62 | 796.45 | | 4.98 |
| Vegetable (B) | 50 | | 6729.63 | 14.79 | | 455.16 | 662.88 | | 10.15 |

Table 3. Sample numbers of active data points in the first five iterations for the three algorithms MS2, GTMS1, and GTMS2.

| Data set | Bandwidth (σ) | MS2 | GTMS1 | GTMS2 |
|---|---|---|---|---|
| Hand | 500 | 3100, 3100, 3100, 3100, 3100 | 3100, 82, 19, 10, 7 | 3100, 1822, 1352, 1224, 1094 |
| Lena | 500 | 2500, 2500, 2500, 2500, 2500 | 2500, 211, 42, 17, 6 | 2500, 1556, 985, 720, 593 |
| Hawk | 500 | 3750, 3750, 3750, 3750, 3750 | 3750, 157, 29, 16, 9 | 3750, 1432, 991, 829, 749 |
| Houses | 500 | 3750, 3750, 3750, 3750, 3750 | 3750, 104, 20, 13, 10 | 3750, 2163, 1254, 1066, 955 |
| Vegetable (F) | 100 | 33,908, 33,908, 33,908, 33,908, 33,879 | 33,908, 15, 7, 3, 3 | 33,908, 23,797, 20,194, 19,456, 19,352 |
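The active-point counts in Table 3 can be related to the complexity expression of Section 3, in which the cost grows with the sum of the active points over the iterations. The sketch below simply adds up the Hand row for the first five iterations; it is an illustration of that sum only, not a reproduction of the measured speed ups in Table 2, and the variable names are hypothetical.

```python
# Active data points of the Hand image (bandwidth 500) from Table 3.
active_points = {
    "MS2": [3100, 3100, 3100, 3100, 3100],
    "GTMS1": [3100, 82, 19, 10, 7],
    "GTMS2": [3100, 1822, 1352, 1224, 1094],
}

# Number of shift computations over the five listed iterations.
work = {name: sum(counts) for name, counts in active_points.items()}

# Ratio of shift computations, MS2 relative to GTMS1.
ratio_gtms1 = work["MS2"] / work["GTMS1"]
```

Over these five iterations GTMS1 performs 3218 shift computations against 15,500 for MS2, and the gap keeps widening in later iterations because MS2 stays near its full point count until points converge.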


The comparison of the image-segmentation results of the proposed algorithms and the competing algorithm, Agglo-MS, is shown in Table 4. The experiment was conducted on seven images. The images segmented by the MS algorithm serve as the reference. The metric for comparison is the percentage of pixels that have the same color at the same location in the two images. A higher percentage represents a higher agreement between the two algorithms. The Agglo-MS algorithm wins on two out of seven images. The proposed algorithms win on five out of seven. Furthermore, we can observe that when the Agglo-MS algorithm wins, its score is only a little higher than that of the proposed algorithms. When the proposed algorithm wins, some of its scores are much higher than those of the Agglo-MS algorithm. Thus, the proposed algorithm produces a segmented image that is more similar to the result of conventional MS clustering than Agglo-MS clustering does. Examples of those images are depicted in Table 5.
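The agreement metric described above, the percentage of pixels with the same color at the same location, can be written as a short function. This is a minimal sketch assuming both segmentations are given as equally sized grids of color labels; the function name `agreement_percentage` and the example grids are hypothetical.

```python
def agreement_percentage(seg_a, seg_b):
    """Percentage of pixel locations where two segmented images agree."""
    if len(seg_a) != len(seg_b) or any(
            len(ra) != len(rb) for ra, rb in zip(seg_a, seg_b)):
        raise ValueError("segmented images must have the same size")
    total = 0
    same = 0
    for row_a, row_b in zip(seg_a, seg_b):
        for pixel_a, pixel_b in zip(row_a, row_b):
            total += 1
            same += pixel_a == pixel_b  # True counts as 1
    return 100.0 * same / total

# Hypothetical 2 x 2 segmentations that differ in one pixel.
reference = [[1, 1], [2, 2]]
candidate = [[1, 1], [2, 3]]
score = agreement_percentage(reference, candidate)
```

Because the comparison is on colors at fixed locations, it presumes both segmentations paint each segment with comparable colors, for example the mean color of the segment.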

Table 5. Examples of the results of image segmentation using the bandwidths in Table 3.

Columns: 1. Hand (62 × 50); 2. Lena (50 × 50); 3. Hawk (75 × 50); 4. Houses (75 × 50); 5. Vegetable (176 × 173).
Rows: Original image; MS; GTMS1; GTMS2; Agglo-MS (default parameters).

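Table 4. The percentage of agreement of segmented image comparisons between MS1 versus GTMS1, Mean Shift (MS) versus GTMS2, and MS versus Agglomerative Mean-Shift (Agglo-MS).

| Data set | Bandwidth (σ) | GTMS1 | GTMS2 | Agglo-MS (default parameters) |
|---|---|---|---|---|
| Hand | 500 | 98.35 | 98.74 | 99.31 |
| Hand | 200 | 97.13 | 98.77 | |
| Hawk | 500 | 97.44 | 99.57 | 99.63 |
| Hawk | 200 | 95.20 | 99.57 | |
| Houses | 500 | 97.77 | 98.64 | 89.41 |
| Houses | 200 | 99.52 | 99.87 | |
| Lena | 500 | 96.04 | 96.36 | 80.13 |
| Lena | 200 | 94.12 | 95.6 | |
| Vegetable (F) | 100 | 99.77 | 99.99 | 99.33 |
| Vegetable (F) | 50 | 99.96 | 99.96 | |
| Vegetable (D) | 100 | 99.43 | 99.85 | 99.47 |
| Vegetable (D) | 50 | 99.60 | 99.68 | |
| Vegetable (B) | 100 | 99.87 | 99.94 | 87.79 |
| Vegetable (B) | 50 | 99.77 | 99.89 | |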

Figure 4. The scatter plots of the speed up of the proposed GTMS algorithm compared with MS1 and MS2.

Figure 5. The scatter plot of the percentage accuracy of image segmentation using the proposed GTMS1 and GTMS2 for nine images.

4.3 Challenging application

It is clear that the main advantage of the GTMS algorithm is its ability to reduce the number of active data points that need to be computed. In the testing environment, it successfully segmented images with a large number of data points, that is, Vegetable (F), Vegetable (D), and Vegetable (B). A challenging issue is actually implementing the model and presenting an example of a ubiquitous application. In an existing system,¹⁵ the segmentation rate of images containing green vegetation was improved by introducing an MS procedure into the image segmentation. A back-propagation neural network was then used to classify the image into two parts, that is, green and non-green vegetation. A similar application driven by the proposed GTMS algorithm was built, and the results are shown in Figure 6. This result indicates that it would be feasible to build an intelligent portable device for surveying green vegetable sites.

Figure 6. The examples of the application of color segmentation of images containing green vegetable using the proposed

Generalized Transport Mean Shift algorithm are shown.


4.4 Discussion

One concern was which characteristics of an image affect the speed-up of the proposed GTMS algorithm. To investigate this, all images were analyzed by principal component analysis (PCA) in order to reduce the data dimensionality. The data density of each image was then characterized along the directions of the first and second components. The scatter plots of the first and second components and the corresponding probability density functions are shown in Figures 7–15. For Hawk, Vegetable (B), Vegetable (D), and Vegetable (F), the data density shows two clearly separated peaks (Figures 11 and 13–15). The remaining images, Cameraman, Lena, Hand, Small bowel loops, and Houses, show several density peaks (Figures 7–10 and 12), which indicates that each of these images contains many clusters. The density peaks of Cameraman, Lena, Hand, Houses, and Small bowel loops also differ less from one another than those of Hawk, Vegetable (F), Vegetable (B), and Vegetable (D). Considering the speed-up of the proposed GTMS algorithm reported in Table 2, GTMS1 on Hawk achieved the highest speed-up: 333.98 times faster than MS1 and 53.42 times faster than MS2. These results support the claim that the greater the differences in data density, the greater the speed-up the GTMS algorithm can achieve. However, this conclusion is a subjective reading of the experimental results, and a more detailed investigation of this issue is left for future work.

Figure 7. The original image of Cameraman (gray level; size 50 × 50 pixels) is shown in (a). The scatter plot of the principal component analysis (PCA)-projected data is shown in (b). The three-dimensional surfaces showing the data density computed from the first and second components with bandwidth 200 (c) and bandwidth 400 (d) are shown.

Figure 8. The original image of Lena (color; size 50 × 50 pixels) is shown in (a). The scatter plot of the principal component analysis (PCA)-projected data is shown in (b). The three-dimensional surfaces showing the data density computed from the first and second components with bandwidth 200 (c) and bandwidth 500 (d) are shown.

Figure 9. The original image of Hand (color; size 50 × 50 pixels) is shown in (a). The scatter plot of the principal component analysis (PCA)-projected data is shown in (b). The three-dimensional surfaces showing the data density computed from the first and second components with bandwidth 200 (c) and bandwidth 500 (d) are shown.

Figure 10. The original image of Small bowel loops (gray level; size 65 × 50 pixels) is shown in (a). The scatter plot of the principal component analysis (PCA)-projected data is shown in (b). The three-dimensional surfaces showing the data density computed from the first and second components with bandwidth 90 (c) and bandwidth 100 (d) are shown.

Figure 11. The original image of Hawk (color; size 75 × 50 pixels) is shown in (a). The scatter plot of the principal component analysis (PCA)-projected data is shown in (b). The three-dimensional surfaces showing the data density computed from the first and second components with bandwidth 200 (c) and bandwidth 500 (d) are shown.

Figure 12. The original image of Houses (color; size 75 × 50 pixels) is shown in (a). The scatter plot of the principal component analysis (PCA)-projected data is shown in (b). The three-dimensional surfaces showing the data density computed from the first and second components with bandwidth 200 (c) and bandwidth 500 (d) are shown.

Figure 13. The original image of Vegetable (B) (color; size 200 × 150 pixels) is shown in (a). The scatter plot of the principal component analysis (PCA)-projected data is shown in (b). The three-dimensional surfaces showing the data density computed from the first and second components with bandwidth 50 (c) and bandwidth 100 (d) are shown.

Figure 14. The original image of Vegetable (D) (color; size 200 × 150 pixels) is shown in (a). The scatter plot of the principal component analysis (PCA)-projected data is shown in (b). The three-dimensional surfaces showing the data density computed from the first and second components with bandwidth 50 (c) and bandwidth 100 (d) are shown.
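The analysis in the Discussion (project the image data onto its first two principal components, then estimate the data density at a given bandwidth) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the feature layout of each pixel, the Gaussian kernel, and the function names `pca_project` and `gaussian_density` are all assumptions of this sketch.

```python
import numpy as np


def pca_project(data, n_components=2):
    """Project data onto its leading principal components.

    data : (N, d) array, one feature vector per pixel (layout assumed).
    """
    data = np.asarray(data, dtype=float)
    centered = data - data.mean(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


def gaussian_density(points, grid, bandwidth):
    """Gaussian kernel density estimate of `points`, evaluated at `grid`.

    points    : (N, d) projected data
    grid      : (M, d) locations at which to evaluate the density
    bandwidth : kernel width h (a Gaussian kernel is assumed here)
    """
    points = np.asarray(points, dtype=float)
    grid = np.asarray(grid, dtype=float)
    d = points.shape[1]
    # Squared distance between every grid location and every data point
    sq_dist = ((grid[:, None, :] - points[None, :, :]) ** 2).sum(axis=2)
    norm = (2.0 * np.pi * bandwidth ** 2) ** (d / 2.0)
    kernel = np.exp(-sq_dist / (2.0 * bandwidth ** 2))
    return kernel.sum(axis=1) / (len(points) * norm)
```

Evaluating `gaussian_density` on a regular grid over the two projected components, once per bandwidth, gives surfaces of the kind shown in panels (c) and (d) of Figures 7–15. A larger bandwidth smooths the surface and merges nearby peaks.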


5. Conclusion

In this paper, we have proposed an intelligence modeling algorithm called the GTMS algorithm. It is an unsupervised algorithm that performs mode seeking and clustering simultaneously, based on the idea of transportation. Its main advantage is reduced computing time: for image-segmentation applications, the GTMS algorithm ran 3 to 333 times faster than MS1. The GTMS algorithm rapidly reduces the number of active data points as the iterations proceed. Finally, the proposed GTMS algorithm can be incorporated into software running on a small device for ubiquitous intelligence applications, such as the segmentation of images containing green vegetation.
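To illustrate why a shrinking set of active data points saves computation, the sketch below runs plain Gaussian mean shift and stops updating any point whose shift falls below a tolerance. It is explicitly not the GTMS algorithm, whose transport and merging rules are defined earlier in the paper. The freezing rule, the tolerance, and the function name `mean_shift_with_freezing` are assumptions made for illustration only.

```python
import numpy as np


def mean_shift_with_freezing(data, bandwidth, tol=1e-3, max_iter=100):
    """Plain Gaussian mean shift that freezes points once they stop moving.

    Returns the final position of every point and the number of points
    that were still active at the start of each iteration.
    """
    data = np.asarray(data, dtype=float)
    modes = data.copy()
    active = np.ones(len(data), dtype=bool)
    active_counts = []
    for _ in range(max_iter):
        idx = np.flatnonzero(active)
        if idx.size == 0:
            break
        active_counts.append(int(idx.size))
        # Gaussian weights between each active point and all data points
        diff = modes[idx, None, :] - data[None, :, :]
        weights = np.exp(-(diff ** 2).sum(axis=2) / (2.0 * bandwidth ** 2))
        # Mean-shift update: weighted mean of the data
        new_pos = (weights @ data) / weights.sum(axis=1, keepdims=True)
        shift = np.linalg.norm(new_pos - modes[idx], axis=1)
        modes[idx] = new_pos
        # Points that barely moved are treated as converged and frozen
        active[idx[shift < tol]] = False
    return modes, active_counts
```

The cost of one iteration is proportional to the number of active points times the number of data points, so every point that leaves the active set reduces the work of all later iterations.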

Acknowledgment

We would like to thank Mr James McCloskey for proofreading

the manuscript of this paper.

Funding

This work was partially supported by the Computational Science

Research Group (COSRG), Faculty of Science, Khon Kaen

University (COSRG-SCKKU) and the Higher Education Research

Promotion and National Research University Project of Thailand,

Office of the Higher Education Commission, through the Cluster

of Research to Enhance the Quality of Basic Education.

References

1. Fukunaga K and Hostetler LD. The estimation of the gradient of a density function, with applications in pattern recognition. IEEE Trans Inf Theory 1975; 21: 32–40.

2. Cheng Y. Mean shift, mode seeking, and clustering. IEEE Trans Pattern Anal Mach Intell 1995; 17: 790–799.

3. Comaniciu D and Meer P. Mean shift analysis and applications. In: proceedings of the international conference on computer vision, Kerkyra, Corfu, Greece. 1999. pp.1197–1203.

4. Comaniciu D and Meer P. Mean shift: a robust approach toward feature space analysis. IEEE Trans Pattern Anal Mach Intell 2002; 24: 603–619.

5. DeMenthon D. Spatio-temporal segmentation of video by hierarchical mean shift analysis. In: statistical methods in video processing workshop, Copenhagen, Denmark, 2002.

6. Carreira-Perpiñán MÁ. Fast nonparametric clustering with Gaussian blurring mean-shift. In: proceedings of the 23rd international conference on machine learning, 2006, Pittsburgh, Pennsylvania, USA. pp.153–160.

7. Padungweing P, Chiewchanwattana S and Sunat K. Resilient mean shift algorithm. In: proceedings of the 13th international conference on systems, signals and image processing, Budapest, Hungary; 21–23 September 2006. pp.181–184.

8. Carreira-Perpiñán MÁ. Acceleration strategies for Gaussian mean-shift image segmentation. In: proceedings of the 2006 IEEE Computer society conference on computer vision and pattern recognition, New York, NY, USA. 2006. pp.1160–1167.

9. Yang C, Duraiswami R, Gumerov NA, et al. Improved fast Gauss transform and efficient kernel density estimation. In: proceedings of the IEEE international conference on computer vision, vol. 1, Nice, France. 2009, pp.644–671.

10. Yuan X-T, Hu B-G and He R. Agglomerative mean-shift clustering via query set compression. In: proceedings of the ninth SIAM international conference on data mining, 2009; Sparks, Nevada, USA. pp.221–232.

Figure 15. The original image of Vegetable (F) (color; size 196 × 175 pixels) is shown in (a). The scatter plot of the principal component analysis (PCA)-projected data is shown in (b). The three-dimensional surfaces showing the data density computed from the first and second components with bandwidth 50 (c) and bandwidth 100 (d) are shown.


11. Yuan X-T, Hu B-G and He R. Agglomerative mean-shift clustering. IEEE Trans Knowl Data Eng 2012; 24: 209–219.

12. Carreira-Perpiñán MÁ. Mode-finding for mixtures of Gaussian distributions. IEEE Trans Pattern Anal Mach Intell 2000; 22: 1318–1323.

13. Tarjan RE. Depth first search and linear graph algorithms. SIAM J Comput 1972; 1: 146–160. Accessed date: April 22, 2012.

14. Frank A and Asuncion A. UCI machine learning repository. Irvine, CA: University of California, School of Information and Computer Science, http://archive.ics.uci.edu/ml (2010). Accessed date: April 22, 2012.

15. Liying Z, Jingtao Z and Qianyu W. Mean-shift-based color segmentation of images containing green vegetation. Comput Electron Agric 2009; 65: 93–98.

Author Biographies

Khamron Sunat is a lecturer at the Department of Computer

Science, Faculty of Science, Khon Kaen University, Thailand.

His main research areas are nature-inspired computing,

ubiquitous-enabled algorithms, and artificial intelligence.

Panida Padungweang is a lecturer at the Department of

Mathematics, Statistic and Computer, Faculty of Science, Ubon

Ratchatani University, Thailand.

Sirapat Chiewchanwattana is a lecturer at the Department of Computer Science, Faculty of Science, Khon Kaen University, Thailand. Her main research areas are clever algorithms for decision support systems, ubiquitous-enabled algorithms, and knowledge management.

