Huffman source coding of discrete memoryless source

Uploaded by

Manoj Kumar

Advanced Digital Communication Source Coding

1. Consider a discrete memoryless source whose alphabet consists of K equiprobable symbols. What conditions have to be satisfied by K and the code-word length for the coding efficiency to be 100 percent? [Haykin 9.9b]

2. Consider the four codes listed below:

| Symbol | Code I | Code II | Code III | Code IV |
|---|---|---|---|---|
| s0 | 0 | 0 | 0 | 00 |
| s1 | 10 | 01 | 01 | 01 |
| s2 | 110 | 001 | 011 | 10 |
| s3 | 1110 | 0010 | 110 | 110 |
| s4 | 1111 | 0011 | 111 | 111 |

(a) Two of these four codes are prefix codes. Identify them, and construct their individual decision trees.

(b) Apply the Kraft-McMillan inequality to codes I, II, III, and IV. Discuss your results in light of those obtained in part (a). [Haykin 9.10]

3. A discrete memoryless source has an alphabet of seven symbols whose probabilities of occurrence are as described here:

| Symbol | Probability |
|---|---|
| s0 | 0.25 |
| s1 | 0.25 |
| s2 | 0.125 |
| s3 | 0.125 |
| s4 | 0.125 |
| s5 | 0.0625 |
| s6 | 0.0625 |

Compute the Huffman code for this source, moving a "combined" symbol as high as possible. Explain why the computed source code has an efficiency of 100 percent. [Haykin 9.12]

4. Consider a discrete memoryless source with alphabet {s0, s1, s2} and statistics {0.7, 0.15, 0.15} for its output.

(a) Apply the Huffman algorithm to this source. Hence, show that the average code-word length of the Huffman code equals 1.3 bits/symbol.

(b) Let the source be extended to order two. Apply the Huffman algorithm to the resulting extended source, and show that the average code-word length of the new code equals 1.1975 bits/symbol.

(c) Compare the average code-word length calculated in part (b) with the entropy of the original source. [Haykin 9.13]

5. A computer executes four instructions that are designated by the code words (00, 01, 10, 11). Assuming that the instructions are used independently with probabilities (1/2, 1/8, 1/8, 1/4), calculate the percentage by which the number of bits used for the instructions may be reduced by use of an optimum source code. Construct a Huffman code to realize the reduction. [Haykin 9.15]

6. Consider the following binary sequence:

11101001100010110100

Use the Lempel-Ziv algorithm to encode this sequence. Assume that the binary symbols 0 and 1 are already in the codebook.

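Solution

1. Source entropy is $H(X) = \log_2 K$ bits/symbol.

For 100% efficiency, we have $L_{av} = H(X) = \log_2 K$.

To satisfy this equation, we choose $K = 2^{L_{av}}$, that is, K is a power of 2 and every code word has length $\log_2 K$.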

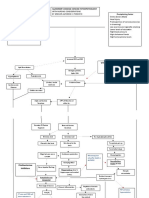
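As a numeric check for Problem 1, the following sketch computes the efficiency of a fixed-length binary code for K equiprobable symbols. It assumes the efficiency is defined as H/L with H = log2 K and L = ceil(log2 K) bits per symbol; the function name `fixed_length_efficiency` is hypothetical.

```python
from math import ceil, log2

def fixed_length_efficiency(k):
    """Efficiency H/L of a fixed-length binary code for k >= 2 equiprobable symbols."""
    entropy = log2(k)          # H = log2 K bits/symbol for equiprobable symbols
    length = ceil(entropy)     # shortest fixed length that can index k symbols
    return entropy / length

for k in (4, 5, 8, 10, 16):
    print(k, round(fixed_length_efficiency(k), 4))
```

Under these assumptions the efficiency reaches 1.0 only when K is a power of 2, which is the condition asked for in the problem.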

2. (a)
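Both parts of Problem 2 can be cross-checked numerically. The sketch below tests the prefix condition by brute force and evaluates the Kraft-McMillan sum for each of the four codes in the problem statement; the helper names `is_prefix_code` and `kraft_sum` are hypothetical.

```python
def is_prefix_code(words):
    """True if no code word is a prefix of a different code word."""
    return not any(a != b and b.startswith(a) for a in words for b in words)

def kraft_sum(words):
    """Kraft-McMillan sum: the sum of 2^(-length) over all code words."""
    return sum(2 ** -len(w) for w in words)

codes = {
    "I":   ["0", "10", "110", "1110", "1111"],
    "II":  ["0", "01", "001", "0010", "0011"],
    "III": ["0", "01", "011", "110", "111"],
    "IV":  ["00", "01", "10", "110", "111"],
}

for name, words in codes.items():
    print(name, is_prefix_code(words), kraft_sum(words))
# I   True  1.0
# II  False 1.0
# III False 1.125
# IV  True  1.0
```

Codes I and IV pass the prefix test. Code II satisfies the inequality without being a prefix code, which illustrates that the Kraft-McMillan inequality constrains only the code-word lengths, while Code III violates it.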

3.
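A minimal sketch for Problem 3, assuming a heap-based Huffman construction that tracks only code-word lengths. The function name `huffman_lengths` is hypothetical, and ties are broken by insertion order rather than by the "as high as possible" rule in the problem statement; for this source the resulting lengths are the same either way, because every probability is a negative power of 2.

```python
import heapq
from math import log2

def huffman_lengths(probs):
    """Return binary Huffman code-word lengths, one per probability."""
    lengths = [0] * len(probs)
    # Heap items: (probability, tie-breaker, indices of the symbols in this subtree)
    heap = [(p, i, [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    counter = len(probs)
    while len(heap) > 1:
        p1, _, group1 = heapq.heappop(heap)
        p2, _, group2 = heapq.heappop(heap)
        for i in group1 + group2:
            lengths[i] += 1  # each merge adds one bit to every symbol below it
        heapq.heappush(heap, (p1 + p2, counter, group1 + group2))
        counter += 1
    return lengths

probs = [0.25, 0.25, 0.125, 0.125, 0.125, 0.0625, 0.0625]
lengths = huffman_lengths(probs)
avg = sum(p * l for p, l in zip(probs, lengths))
entropy = -sum(p * log2(p) for p in probs)

print(lengths)             # [2, 2, 3, 3, 3, 4, 4]
print(avg, entropy)        # 2.625 2.625
print(entropy / avg)       # 1.0
```

Each length equals -log2 of the symbol probability, so the average length coincides with the entropy and the efficiency is 100 percent.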

4. (a)

The average codeword length is

$L_{av} = \sum_{i=0}^{2} p_i L_i = 0.7 \times 1 + \dots = 1.3$ bits/symbol

(b) For the extended source, we have

$p(s_0 s_0) = 0.7 \times 0.7 = 0.49$, $p(s_0 s_1) = 0.105$, $p(s_0 s_2) = 0.105$,

$p(s_1 s_0) = 0.105$, $p(s_1 s_1) = 0.0225$, $p(s_1 s_2) = 0.0225$,

$p(s_2 s_0) = 0.105$, $p(s_2 s_1) = 0.0225$, $p(s_2 s_2) = 0.0225$

Applying the Huffman algorithm to the extended source, we obtain the following source code:

| Symbol pair | Code word |
|---|---|
| s0 s0 | 1 |
| s0 s1 | 001 |
| s0 s2 | 010 |
| s1 s0 | 011 |
| s1 s1 | 000100 |
| s1 s2 | 000101 |
| s2 s0 | 0000 |
| s2 s1 | 000110 |
| s2 s2 | 000111 |

The average codeword length is

$L_{av} = 0.49 \times 1 + \dots = 2.395$ bits per extended symbol (pair of source symbols)

$L_{av}/2 = 1.1975$ bits/symbol

(c) The source entropy is

$H(X) = -0.7 \log_2 0.7 - 0.15 \log_2 0.15 - 0.15 \log_2 0.15 = 1.18$ bits/symbol

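For a Huffman code on the extended source of order $n$,

$\frac{L_{av}}{n} < H(X) + \frac{1}{n}$

Therefore, with $n = 2$, $H(X) \le \frac{L_{av}}{2} < H(X) + \frac{1}{2}$, that is, $1.18 \le 1.1975 < 1.68$ bits/symbol.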
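The figures quoted for Problem 4 can be reproduced with a short sketch, assuming a heap-based Huffman construction that tracks only code-word lengths. The function name `huffman_lengths` is hypothetical, and tie-breaking may yield different individual code words from those listed in the solution, though the average length is the same for any valid Huffman code.

```python
import heapq
from itertools import product
from math import log2

def huffman_lengths(probs):
    """Return binary Huffman code-word lengths, one per probability."""
    lengths = [0] * len(probs)
    heap = [(p, i, [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    counter = len(probs)
    while len(heap) > 1:
        p1, _, group1 = heapq.heappop(heap)
        p2, _, group2 = heapq.heappop(heap)
        for i in group1 + group2:
            lengths[i] += 1  # one more bit for every symbol in the merged subtree
        heapq.heappush(heap, (p1 + p2, counter, group1 + group2))
        counter += 1
    return lengths

def average_length(probs):
    return sum(p * l for p, l in zip(probs, huffman_lengths(probs)))

source = [0.7, 0.15, 0.15]
# Second-order extension: all ordered pairs of independent source symbols
extended = [a * b for a, b in product(source, repeat=2)]

avg1 = average_length(source)            # part (a)
avg2 = average_length(extended)          # part (b), bits per pair
entropy = -sum(p * log2(p) for p in source)

print(round(avg1, 4))        # 1.3
print(round(avg2, 4))        # 2.395
print(round(avg2 / 2, 4))    # 1.1975
print(round(entropy, 4))     # 1.1813
```

Extending the source to order two brings the average length per source symbol from 1.3 down to 1.1975 bits, closer to the entropy of about 1.18 bits/symbol.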

5.
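A minimal sketch for Problem 5, assuming a heap-based Huffman construction that builds the code words explicitly. The function name `huffman_code` is hypothetical, and the 0/1 labelling of branches is an arbitrary choice, so other equally valid Huffman codes exist.

```python
import heapq

def huffman_code(probs):
    """Return {symbol: code word} for a dict mapping symbols to probabilities."""
    # Heap items: (probability, tie-breaker, partial code words of this subtree)
    heap = [(p, i, {sym: ""}) for i, (sym, p) in enumerate(probs.items())]
    heapq.heapify(heap)
    counter = len(heap)
    while len(heap) > 1:
        p0, _, code0 = heapq.heappop(heap)
        p1, _, code1 = heapq.heappop(heap)
        merged = {s: "0" + w for s, w in code0.items()}
        merged.update({s: "1" + w for s, w in code1.items()})
        heapq.heappush(heap, (p0 + p1, counter, merged))
        counter += 1
    return heap[0][2]

# Instructions are named by their original 2-bit code words
probs = {"00": 1 / 2, "01": 1 / 8, "10": 1 / 8, "11": 1 / 4}
code = huffman_code(probs)
avg = sum(probs[s] * len(w) for s, w in code.items())
reduction = 100 * (2 - avg) / 2   # relative to the fixed 2-bit representation

print(code)        # {'00': '0', '11': '10', '01': '110', '10': '111'}
print(avg)         # 1.75
print(reduction)   # 12.5
```

Because the probabilities are negative powers of 2, the average length of 1.75 bits equals the source entropy, so under these assumptions the 12.5 percent saving is the most that any source code can achieve.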

6.
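A sketch of the Lempel-Ziv parsing step for Problem 6, assuming the textbook variant in which the sequence is split into the shortest phrases not yet in the codebook and each new phrase is described by the 1-based codebook position of its prefix plus one innovation bit. The function name `lz_parse` is hypothetical, and the bit width used to write the pointers in binary is left open.

```python
def lz_parse(bits, codebook=("0", "1")):
    """Split `bits` into the shortest phrases not yet in the codebook.

    Returns (codebook, pairs, remainder), where each pair is
    (1-based position of the phrase prefix, innovation bit).
    Assumes the initial codebook contains every single symbol.
    """
    book = list(codebook)
    index = {phrase: pos for pos, phrase in enumerate(book, start=1)}
    pairs = []
    start = 0
    while start < len(bits):
        end = start + 1
        # Grow the candidate phrase until it is new or the input runs out
        while end <= len(bits) and bits[start:end] in index:
            end += 1
        if end > len(bits):
            break  # the tail is already in the codebook; no new phrase
        phrase = bits[start:end]
        pairs.append((index[phrase[:-1]], phrase[-1]))
        index[phrase] = len(book) + 1
        book.append(phrase)
        start = end
    return book, pairs, bits[start:]

book, pairs, remainder = lz_parse("11101001100010110100")
print(book)       # ['0', '1', '11', '10', '100', '110', '00', '101', '1010']
print(pairs)      # [(2, '1'), (2, '0'), (4, '0'), (3, '0'), (1, '0'), (4, '1'), (8, '0')]
print(remainder)  # 0
```

The final 0 is already in the codebook, so this sketch leaves it as an unencoded remainder; how that tail is transmitted depends on the convention adopted.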
