International Journal of Electronics, Communication & Soft Computing Science and Engineering (IJECSCSE) Volume 1, Issue 1

Microcontroller Implementation of a Voice Command Recognition System for Human Machine Interface in Embedded System

Sunpreet Kaur Nanda, Akshay P. Dhande

Abstract - The speech recognition system described here is a fully assembled, easy-to-use programmable speech recognition circuit. It is programmable in the sense that the words (or vocal utterances) the circuit should recognize can be trained by the user. The board makes it possible to experiment with many facets of speech recognition technology. It provides an 8-bit data output that can be interfaced with any microcontroller (ATMEL/PIC) for further development. Possible interfacing applications include authentication, controlling the mouse of a computer (and hence the many other devices connected to it), controlling home appliances, robot movement, speech-assisted technologies, speech-to-text translation and many more.

Keywords - ATMEL, Train, Voice, Embedded System

I. INTRODUCTION

Speech recognition is expected to become a method of choice for controlling appliances, toys, tools and computers. At its most basic level, speech-controlled appliances and tools allow the user to perform parallel tasks (i.e. while hands and eyes are busy elsewhere) while working with the tool or appliance. The heart of the circuit is the HM2007 speech recognition IC. The IC can recognize 20 words, each up to 1.92 seconds long. This document is based on using the speech recognition kit SR-07 from Images SI Inc. in CPU mode with an ATmega128 as host controller. Problems were encountered when using the SR-07 in CPU mode. In addition, the HM2007 booklet (DS-HM2007) gives a missing or incorrect description of using the HM2007 in CPU mode. This addendum reports our experience in solving the problems that arise when operating the HM2007 in CPU mode. A generic implementation of an HM2007 driver is appended as reference [12].

II. OVERVIEW

The keypad and digital display are used to communicate with and program the HM2007 chip. The keypad is made up of 12 normally open momentary contact switches. When the circuit is turned on, 00 is shown on the digital display, the red LED (READY) is lit and the circuit waits for a command [23].

Figure 1: Basic Block Diagram

A. Training Words for Recognition

Press 1 on the keypad (the display will show 01 and the LED will turn off), then press the TRAIN key (the LED will turn on) to place the circuit in training mode for word one. Say the target word clearly into the headset microphone. The circuit signals acceptance of the voice input by blinking the LED off and then on. The word (or utterance) is now identified as word 01. If the LED did not flash, start over by pressing 1 and then the TRAIN key. You may continue training new words in the circuit: press 2 then TRAIN to train the second word, and so on. The circuit will accept and recognize up to 20 words (numbers 1 through 20). It is not necessary to train all word spaces. If you only require 10 target words, that is all you need to train.

B. Testing Recognition

Repeat a trained word into the microphone. The number of the word should be shown on the digital display. For instance, if the word "directory" was trained as word number 20, saying the word "directory" into the microphone will cause the number 20 to be displayed [5].

C. Error Codes

The chip provides the following error codes:
55 = word too long
66 = word too short
77 = no match

D. Clearing Memory

To erase all words in memory, press 99 and then CLR. The numbers will quickly scroll by on the digital display as the memory is erased [11].

E. Changing & Erasing Words

Trained words can easily be changed by overwriting the original word. For instance, suppose word six was the word "Capital" and you want to change it to the word "State". Simply retrain the word space by pressing 6, then the TRAIN key, and saying the word "State" into the microphone. To erase a word without replacing it with another word, press the word number (in this case 6) and then press the CLR key. Word six is now erased.

F. Voice Security System

This circuit is not designed as a voice security system for commercial applications, but that should not prevent anyone from experimenting with it for that purpose. A common approach is to use three or four keywords that must be spoken and recognized in sequence in order to open a lock or allow entry [13].
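The error codes and the keyword-sequence idea described above can be sketched in a few lines of C. This is only an illustration under stated assumptions: the value `shown` is taken to be the decimal number that would appear on the digital display (how it is read from the 8-bit output is outside the sketch), and the names `classify_result`, `passphrase`, `passphrase_init` and `passphrase_feed` are hypothetical, not part of the SR-07 kit or any vendor library.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Codes shown on the display, as listed under "Error Codes". */
enum { ERR_TOO_LONG = 55, ERR_TOO_SHORT = 66, ERR_NO_MATCH = 77 };

/* Word slots available in the 20-word configuration (numbers 1..20). */
enum { MAX_WORDS = 20 };

typedef enum {
    RESULT_WORD,       /* a trained word number, 1..20 */
    RESULT_TOO_LONG,   /* code 55 */
    RESULT_TOO_SHORT,  /* code 66 */
    RESULT_NO_MATCH,   /* code 77 */
    RESULT_UNKNOWN     /* anything else, e.g. the idle value 00 */
} result_kind;

/* Map a displayed number to its meaning. */
result_kind classify_result(uint8_t shown)
{
    if (shown >= 1 && shown <= MAX_WORDS) {
        return RESULT_WORD;
    }
    switch (shown) {
    case ERR_TOO_LONG:  return RESULT_TOO_LONG;
    case ERR_TOO_SHORT: return RESULT_TOO_SHORT;
    case ERR_NO_MATCH:  return RESULT_NO_MATCH;
    default:            return RESULT_UNKNOWN;
    }
}

/* Hypothetical tracker for a sequence of keywords (assumption, not from the kit). */
typedef struct {
    const uint8_t *expected;  /* word numbers that must be heard, in order */
    size_t length;            /* number of keywords in the sequence */
    size_t matched;           /* how many have been heard so far */
} passphrase;

void passphrase_init(passphrase *p, const uint8_t *expected, size_t length)
{
    p->expected = expected;
    p->length = length;
    p->matched = 0;
}

/* Feed one displayed number. Returns true once the whole sequence has been
   recognized in order. A wrong word or an error code restarts the sequence
   from the first keyword. */
bool passphrase_feed(passphrase *p, uint8_t shown)
{
    if (p->length == 0) {
        return false;
    }
    if (classify_result(shown) == RESULT_WORD && shown == p->expected[p->matched]) {
        p->matched++;
        if (p->matched == p->length) {
            p->matched = 0;  /* ready for the next attempt */
            return true;
        }
        return false;
    }
    p->matched = 0;
    return false;
}
```

With a sequence such as words 3, 7 and 12, `passphrase_feed` returns true only on the call that delivers 12 after 3 and 7 were heard in that order. Restarting on every miss keeps the logic simple; a stricter or more forgiving policy is a design choice the text leaves open.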

III. MORE ON THE HM2007 CHIP

The HM2007 [25] is a CMOS voice recognition LSI (Large Scale Integration) circuit. The chip contains an analog front end, voice analysis, regulation, and system control functions. The chip may be used in stand-alone mode or connected to a CPU.

Features:
- Single-chip voice recognition CMOS LSI
- Speaker dependent
- External RAM support
- Maximum 40-word recognition (0.96 second word length)
- Maximum word length 1.92 seconds (20 words)
- Microphone support
- Manual and CPU modes available
- Response time less than 300 milliseconds
- 5 V power supply

The following conditions must be met before and during power-up of the HM2007:
- Pin CPUM must be logic high (PDIP pin 14, PLCC pin 15); this selects CPU mode.
- Pin WLEN must be logic low (PDIP pin 13, PLCC pin 14); this selects the 0.96 second word length.

This is very important; otherwise the HM2007 will lock up or return a wrong command answer when the result data is read.

A. Pin Configuration:
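As a compact restatement of the mode-select conditions for the HM2007, the sketch below records the CPUM and WLEN pin numbers for both packages and checks the power-up levels required for CPU mode. All identifiers (`hm2007_mode_pins`, `hm2007_cpu_mode_straps_ok`, `hm2007_max_words`, `hm2007_word_length_ms`) are hypothetical names chosen for illustration and are not part of any vendor library. The mapping of WLEN high to the 1.92 second, 20-word configuration is inferred from the feature list rather than stated explicitly in the text, and the WLEN-low word length uses the 0.96 second value given in the feature list.

```c
#include <stdbool.h>

/* Mode-select pin numbers per package, as quoted in the text. */
typedef struct {
    int cpum_pin;  /* CPUM: logic high selects CPU mode */
    int wlen_pin;  /* WLEN: logic low selects the 0.96 s word length */
} hm2007_mode_pins;

const hm2007_mode_pins HM2007_PDIP = { 14, 13 };
const hm2007_mode_pins HM2007_PLCC = { 15, 14 };

/* Levels required before and during power-up for CPU mode:
   CPUM high and WLEN low. */
bool hm2007_cpu_mode_straps_ok(bool cpum_high, bool wlen_high)
{
    return cpum_high && !wlen_high;
}

/* Vocabulary size implied by WLEN (assumption: high = 20 words, low = 40). */
int hm2007_max_words(bool wlen_high)
{
    return wlen_high ? 20 : 40;
}

/* Maximum word length in milliseconds implied by WLEN
   (assumption: high = 1.92 s, low = 0.96 s). */
int hm2007_word_length_ms(bool wlen_high)
{
    return wlen_high ? 1920 : 960;
}
```

Under these assumptions both settings cover the same total of 38.4 seconds of speech (40 x 0.96 s = 20 x 1.92 s), which suggests that the two configurations simply divide one fixed external RAM budget differently.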

IV. CODING

void main() { TRISB = 0xFF; // Set PORTB as input Usart_Init(9600);// Initialize UART module at 9600 Delay_ms(100);// Wait for UART module to stabilize Usart_Write('S'); Usart_Write('T'); Usart_Write('A'); Usart_Write('R'); Usart_Write('T'); // and send data via UART Usart_Write(13); while(1) { if(PORTB==0x01) { Usart_Write('1'); // and send data via UART while(PORTB==0x01) {} } if(PORTB==0x02) { Usart_Write('2'); // and send data via UART while(PORTB==0x02) {} } if(PORTB==0x03) { Usart_Write('3'); // and send data via UART while(PORTB==0x03) {} } if(PORTB==0x04) { Usart_Write('4'); // and send data via UART while(PORTB==0x04) {} } if(PORTB==0x05) { Usart_Write('5'); // and send data via UART while(PORTB==0x05) {} } if(PORTB==0x06) { Usart_Write('6'); // and send data via UART while(PORTB==0x06) {} } if(PORTB==0x07) { Usart_Write('7'); // and send data via UART while(PORTB==0x07) {} 6

Figure 2 : Pin configuration of HM2007P

International Journal of Electronics, Communication & Soft Computing Science and Engineering (IJECSCSE) Volume 1, Issue 1

} if(PORTB==0x08) { Usart_Write('8'); // and send data via UART while(PORTB==0x08) {} } if(PORTB==0x09) { Usart_Write('9'); // and send data via UART while(PORTB==0x09) {} } if(PORTB==0x0A) { Usart_Write('1'); // and send data via UART Usart_Write('0'); while(PORTB==0x0A) {} } if(PORTB==0x0B) { Usart_Write('1'); // and send data via UART Usart_Write('1'); while(PORTB==0x0B) {} } if(PORTB==0x0C) { Usart_Write('1'); // and send data via UART Usart_Write('2'); // and send data via UART while(PORTB==0x0C) {} } if(PORTB==0x0D) { Usart_Write('1'); // and send data via UART Usart_Write('3'); // and send data via UART while(PORTB==0x0D) {}

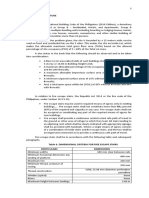

Experiment conducted in following circumstances 1. The room should be sound proof. 2. Humidity should not be greater than 50%. 3. The person speaking, should be close to microphone. 4. It does not depend on temperature.

VI. ANALYSIS A. Sensitivity of system Testing signal : impulse of 10 Hz Testing on : DSO 100 Hz bandwidth Response time : 50 ms

Figure 3 : Impulse response graph B. Response to sinusoidal Testing signal:- sinusoidal of 3.9999 Mhz Response time:- 10 ms

} if(PORTB==0x0E) { Usart_Write('1'); / and send data via UART Usart_Write('4'); // and send data via UART while(PORTB==0x0E) {} } if(PORTB==0xFF) { Usart_Write('1'); // and send data via UART Usart_Write('5'); // and send data via UART while(PORTB==0xFF) {} } }

Figure 4 : Response graph C. Voice recognition analysis Testing Signal:- human voice Error produced:- 0.4 % Response time:- 15 ms

V. RESULT

7
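The paper does not state how the 0.4 % error figure for the voice test was obtained. One plausible reading is the share of test utterances for which the number shown on the display differed from the number under which the word was trained, with the codes 55, 66 and 77 counting as misses. The sketch below tallies that ratio; the type `trial`, the function `error_percent` and any trial data passed to it are hypothetical and are not taken from the paper.

```c
#include <stddef.h>
#include <stdint.h>

/* One test utterance (hypothetical record layout). */
typedef struct {
    uint8_t trained;  /* word number the utterance was trained as */
    uint8_t shown;    /* number shown on the display after speaking */
} trial;

/* Percentage of trials in which the displayed number differs from the
   trained word number. Error codes (55, 66, 77) never equal a valid word
   number, so they are counted as misses automatically. */
double error_percent(const trial *trials, size_t count)
{
    size_t misses = 0;
    size_t i;

    if (count == 0) {
        return 0.0;  /* no data: report zero rather than divide by zero */
    }
    for (i = 0; i < count; i++) {
        if (trials[i].shown != trials[i].trained) {
            misses++;
        }
    }
    return 100.0 * (double)misses / (double)count;
}
```

Under this reading, a figure of 0.4 % would correspond to, for example, one miss in 250 utterances; the actual number of trials behind the reported value is not given.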

Figure 5: Voice recognition

VII. CONCLUSION

From the above explanation we conclude that the HM2007 can be used to detect voice signals accurately. Once detected, these signals can be used to operate the mouse, as explained earlier. Thus, a microcontroller can be used to implement a voice recognition system for human machine interface in an embedded system.

REFERENCES

1. H. Dudley, The Vocoder, Bell Labs Record, Vol. 17, pp. 122-126, 1939.
2. H. Dudley, R. R. Riesz, and S. A. Watkins, A Synthetic Speaker, J. Franklin Institute, Vol. 227, pp. 739-764, 1939.
3. J. G. Wilpon and D. B. Roe, AT&T Telephone Network Applications of Speech Recognition, Proc. COST232 Workshop, Rome, Italy, Nov. 1992.
4. C. G. Kratzenstein, Sur la naissance de la formation des voyelles, J. Phys., Vol. 21, pp. 358-380, 1782.
5. H. Dudley and T. H. Tarnoczy, The Speaking Machine of Wolfgang von Kempelen, J. Acoust. Soc. Am., Vol. 22, pp. 151-166, 1950.
6. Sir Charles Wheatstone, The Scientific Papers of Sir Charles Wheatstone, London: Taylor and Francis, 1879.
7. J. L. Flanagan, Speech Analysis, Synthesis and Perception, Second Edition, Springer-Verlag, 1972.
8. H. Fletcher, The Nature of Speech and its Interpretations, Bell Syst. Tech. J., Vol. 1, pp. 129-144, July 1922.
9. K. H. Davis, R. Biddulph, and S. Balashek, Automatic Recognition of Spoken Digits, J. Acoust. Soc. Am., Vol. 24, No. 6, pp. 627-642, 1952.
10. H. F. Olson and H. Belar, Phonetic Typewriter, J. Acoust. Soc. Am., Vol. 28, No. 6, pp. 1072-1081, 1956.
11. J. W. Forgie and C. D. Forgie, Results Obtained from a Vowel Recognition Computer Program, J. Acoust. Soc. Am., Vol. 31, No. 11, pp. 1480-1489, 1959.
12. J. Suzuki and K. Nakata, Recognition of Japanese Vowels Preliminary to the Recognition of Speech, J. Radio Res. Lab, Vol. 37, No. 8, pp. 193-212, 1961.
13. J. Sakai and S. Doshita, The Phonetic Typewriter, Information Processing 1962, Proc. IFIP Congress, Munich, 1962.
14. K. Nagata, Y. Kato, and S. Chiba, Spoken Digit Recognizer for Japanese Language, NEC Res. Develop., No. 6, 1963.
15. D. B. Fry and P. Denes, The Design and Operation of the Mechanical Speech Recognizer at University College London, J. British Inst. Radio Engr., Vol. 19, No. 4, pp. 211-229, 1959.
16. T. B. Martin, A. L. Nelson, and H. J. Zadell, Speech Recognition by Feature Abstraction Techniques, Tech. Report AL-TDR-64-176, Air Force Avionics Lab, 1964.
17. T. K. Vintsyuk, Speech Discrimination by Dynamic Programming, Kibernetika, Vol. 4, No. 2, pp. 81-88, Jan.-Feb. 1968.
18. H. Sakoe and S. Chiba, Dynamic Programming Algorithm Optimization for Spoken Word Recognition, IEEE Trans. Acoustics, Speech and Signal Proc., Vol. ASSP-26, No. 1, pp. 43-49, Feb. 1978.
19. A. J. Viterbi, Error Bounds for Convolutional Codes and an Asymptotically Optimal Decoding Algorithm, IEEE Trans. Information Theory, Vol. IT-13, pp. 260-269, April 1967.
20. B. S. Atal and S. L. Hanauer, Speech Analysis and Synthesis by Linear Prediction of the Speech Wave, J. Acoust. Soc. Am., Vol. 50, No. 2, pp. 637-655, Aug. 1971.
21. F. Itakura and S. Saito, A Statistical Method for Estimation of Speech Spectral Density and Formant Frequencies, Electronics and Communications in Japan, Vol. 53A, pp. 36-43, 1970.
22. F. Itakura, Minimum Prediction Residual Principle Applied to Speech Recognition, IEEE Trans. Acoustics, Speech and Signal Proc., Vol. ASSP-23, pp. 57-72, Feb. 1975.
23. L. R. Rabiner, S. E. Levinson, A. E. Rosenberg and J. G. Wilpon, Speaker Independent Recognition of Isolated Words Using Clustering Techniques, IEEE Trans. Acoustics, Speech and Signal Proc., Vol. ASSP-27, pp. 336-349, Aug. 1979.
24. B. Lowerre, The HARPY Speech Understanding System, Trends in Speech Recognition, W. Lea, Editor, Speech Science Publications, 1986, reprinted in Readings in Speech Recognition, A. Waibel and K. F. Lee, Editors, pp. 576-586, Morgan Kaufmann Publishers, 1990.
25. M. Mohri, Finite-State Transducers in Language and Speech Processing, Computational Linguistics, Vol. 23, No. 2, pp. 269-312, 1997.
26. Dennis H. Klatt, Review of the DARPA Speech Understanding Project (1), J. Acoust. Soc. Am., Vol. 62, pp. 1345-1366, 1977.
27. F. Jelinek, L. R. Bahl, and R. L. Mercer, Design of a Linguistic Statistical Decoder for the Recognition of Continuous Speech, IEEE Trans. on Information Theory, Vol. IT-21, pp. 250-256, 1975.
28. C. Shannon, A Mathematical Theory of Communication, Bell System Technical Journal, Vol. 27, pp. 379-423 and 623-656, July and October 1948.
29. S. K. Das and M. A. Picheny, Issues in Practical Large Vocabulary Isolated Word Recognition: The IBM Tangora System, in Automatic Speech and Speaker Recognition: Advanced Topics, C. H. Lee, F. K. Soong, and K. K. Paliwal, Editors, pp. 457-479, Kluwer, Boston, 1996.
30. B. H. Juang, S. E. Levinson and M. M. Sondhi, Maximum Likelihood Estimation for Multivariate Mixture Observations of Markov Chains, IEEE Trans. Information Theory, Vol. IT-32, No. 2, pp. 307-309, March 1986.

AUTHORS PROFILE

Sunpreet Kaur Nanda

Department of Electronics and Telecommunication, Sipnas College of Engineering & Technology, Amravati, Maharashtra, India, sunpreetkaurbedi@gmail.com

Akshay P. Dhande

Department of Electronics and Telecommunication, SSGM College of Engineering, Shegaon, Maharashtra, India, akshaydhande126@gmail.com
