The Role of Context in Concept Learning


Stan MATWIN and Miroslav KUBAT

Department of Computer Science, University of Ottawa, 150 Louis Pasteur, Ottawa, Ontario, K1N 6N5, Canada

{stan,mkubat}@csi.uottawa.ca

Abstract

Many practical, real-life applications of concept learning are impossible to address without taking into consideration the background of the concept, its frame of reference, and the particular situation and circumstances of its occurrence: in short, its context. Even though the phenomenon of context has been treated by philosophers and cognitive scientists, it deserves more attention in the machine-learning community.

1 Introduction

Suppose that we are dealing with a classification task, and that the concept model involves a set A of attributes. The context is a set C of attributes that are not contained in A (i.e., we may have to acquire additional attributes). C has the property that this set alone does not facilitate good classification, but when added to the attribute set A it yields a classification performance that outstrips the performance obtained with A alone. Taking context into account raises many questions, of which one stands out as the most prominent: how should we account for a change of context between the training set and the testing set, or, later, between the learning task and the performance task?

The importance of context can be illustrated with three machine-learning applications in which taking it into account is either necessary or at least highly beneficial. The best known among them is the Calendar Apprentice (Mitchell et al., 1994), in which personalized rules for scheduling the meetings of a university professor are learned from the ongoing use of an appointment calendar. Their system, CAP, has been tested on a small group of users working in an academic environment, and the authors observed that "...the periods of the poorest performance correlate strongly with the semester boundaries in the academic year ... [A]s the new semester begins and old scheduling priorities are replaced by new ones, CAP's performance typically recovers following these [performance] dips, as it learns new rules reflecting the user's new scheduling regularities." Knowing in which semester a given meeting or appointment takes place provides a useful context for the scheduling task. It seems obvious that CAP could potentially benefit from contextual classifier selection rules.

The second application is our own work on learning to detect oil spills on the sea surface from satellite radar images. In this task, the only reasonable way to assess the learner is to learn from one set of images and test on a different set of images. The learning task involves approximately 30 attributes that describe the objects in the images in terms of characteristics produced by a set of vision modules: e.g., the average brightness of the pixels in the object, the jaggedness of the object's contour, the sharpness of its edge, etc. Early experimentation has shown that these vision attributes are not sufficient to classify objects into spills and non-spills with adequate accuracy. The domain experts tell us that other data, such as meteorological conditions, the angle of the radar beam that produces the image, and even the proximity of the object to land, can improve predictive accuracy. Such data are another typical example of context that has to be taken into account. At present, we propose to use a variant of contextual classifier selection, in which the images are clustered according to the context and each cluster has its own classifier.

The third study comes from the area of sleep analysis as presented by Kubat, Pfurtscheller, and Flotzinger (1994).
A long-term study has shown that biological signals such as EEG are strongly subject-dependent, and therefore knowledge induced from example measurements on a single person is not easy to transfer to another person. The cited work hence used a different strategy: the expert classifies a subset of examples obtained from a single sleeper, the system learns from them, and then classifies all the remaining measurements on the same sleeper. Even this weaker scenario provides significant savings in expensive human expertise. Here, the context is the particular sleeper. Kubat (1996) has proposed a strategy for this kind of application: a decision tree is induced from a single sleeper and remains exactly the same across all sleepers. What is learned for each sleeper separately is the second tier, which provides the interpretation of the tree in the context of that particular sleeper.

All these applications strongly indicate that context is useful in the learning cycle, and that the learning task often cannot be resolved satisfactorily if context is ignored. In this paper, we review some of the philosophical, cognitive, and linguistic work on context. We then present current machine-learning work on this issue, and finish with an appeal to the community to pay more attention to context in current research.

To come to terms with the technical aspects of the issue, let us divide concepts into three groups based on their sensitivity to context:

1. Absolute concepts do not depend on any context. This is the case of unambiguously defined notions such as 'even number', 'abelian group', or 'quadratic equation'.
2. Relative concepts do not possess any property that is present under all circumstances; their meaning is totally different in different contexts. 'Poverty', 'beauty', and 'high speed' seem to represent relative concepts.
3. Partially relative concepts can be characterized by a set of kernel properties that are always present, plus some context-sensitive properties that vary across contexts. For instance, a swimsuit will always consist of the same basic components, but its color, shape, size, and material will follow the dictates of fashion.
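The contextual classifier selection proposed above for the oil-spill images can be illustrated with a minimal sketch. It is not the system described in the text: the context is assumed to be a single discrete label (for instance, a hypothetical sea-state category) rather than a cluster found automatically, the nearest-centroid base learner is only a stand-in, and the class names `NearestCentroid` and `ContextualSelector`, the fallback to a global classifier for unseen contexts, and the toy data are all our own assumptions.

```python
from collections import defaultdict
from math import dist


class NearestCentroid:
    """Stand-in base learner: predicts the class whose mean attribute vector is closest."""

    def fit(self, xs, ys):
        sums, counts = {}, defaultdict(int)
        for x, y in zip(xs, ys):
            if y not in sums:
                sums[y] = [0.0] * len(x)
            sums[y] = [s + v for s, v in zip(sums[y], x)]
            counts[y] += 1
        self.centroids = {y: [s / counts[y] for s in sums[y]] for y in sums}
        return self

    def predict(self, x):
        return min(self.centroids, key=lambda y: dist(x, self.centroids[y]))


class ContextualSelector:
    """One classifier per context value; a global classifier handles unseen contexts."""

    def fit(self, xs, contexts, ys):
        groups = defaultdict(lambda: ([], []))
        for x, c, y in zip(xs, contexts, ys):
            groups[c][0].append(x)
            groups[c][1].append(y)
        self.by_context = {c: NearestCentroid().fit(gx, gy) for c, (gx, gy) in groups.items()}
        self.fallback = NearestCentroid().fit(xs, ys)
        return self

    def predict(self, x, context):
        # Select the classifier by context, then classify on the primary attributes only.
        return self.by_context.get(context, self.fallback).predict(x)


# Invented toy data: one primary attribute (e.g. a brightness score) under two sea states.
xs = [[0.2], [0.25], [0.5], [0.55], [0.6], [0.65], [0.9], [0.95]]
contexts = ["calm"] * 4 + ["windy"] * 4
ys = ["spill", "spill", "other", "other"] * 2

model = ContextualSelector().fit(xs, contexts, ys)
print(model.predict([0.55], "calm"))   # other
print(model.predict([0.55], "windy"))  # spill
```

The same attribute value receives different labels in the two contexts, which is exactly the situation in which a single classifier trained on the primary attributes alone would struggle.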

2 Philosophy and Cognitive Science on Context

"One can never step twice into the same river, as it does not remain the same," the ancient philosopher Heraclitus said. Everything alters, the only permanence being the law of change. The entire cosmos is in flux, and only the universal principle that everything changes remains unaltered. Even the words of our language are subject to constant flux, and so are the concepts that our minds use to represent and process information. Next time you step into the river, the context is different.

In any case, the meaning of a word, a concept, or an expression in natural language depends, sometimes fairly strongly, on a given context and/or subjective interpretation. This is well known to those thinkers who investigate the possibility of extracting true meaning from texts (hermeneutics). For instance, religious sources acquire different interpretations in different cultures, which raises a frequently asked question: can their meaning be determined in some absolute, context-independent sense? Whereas objectivists believe that a meaning independent of any particular interpretation will be found if all pre-understandings, prejudices, biases, and cultural contexts are stripped from the text, many influential philosophers are rather skeptical. Gadamer (1975, 1976) and Heidegger (1962) maintain that meaning is inseparable from the act of interpretation: every reading or hearing of a text constitutes an act of giving meaning to it. Searle, in his famous treatise on the essence of natural language (Searle, 1979), openly doubts that there is any sense in trying to develop formal or symbolic descriptions of concepts¹ in natural languages, simply because of the concepts' tendency to take on different meanings in different contexts. A large body of similar reservations has been put forward in the writings of many other contemporary philosophers, too numerous to be listed here; readers interested in a more comprehensive account are referred to Bechtel (1988).

Much of the literature dealing with concept learning from examples assumes the availability of a predefined set of descriptors (attributes, interim concepts, functions, or relations) that are expected to permit, to a certain extent, induction of the concept representation. Whether this scenario also applies to human recognition is uncertain.
Winograd and Flores (1985) present a nice illustration of context dependency. Asked whether there is some water in the refrigerator, a person answers "yes, there is some water condensed on the bottom of the cooling coils." The appropriateness of this answer depends on the specific circumstances. For a thirsty person, the information is certainly uninteresting, but a photographer searching for the source of the humidity that damaged some photographic plates stored in the refrigerator will find the answer satisfactory. Apparently, even such a plain concept as H2O is, from the concept-recognition point of view, highly context dependent. This example, however illustrative, is somewhat distant from typical machine-learning problems, and one can rightly question its actual relevance.

¹ Searle calls them 'expressions'.

But still, context is inextricable from many classification tasks. For instance, medical doctors rarely work with all the information that is necessary to specify a diagnosis of, say, flu or yellow fever. Rather, they exploit just a few attributes (body temperature, character of pains) and supplement them with contextual information: yellow fever is uncommon in the Ottawa region, so it can perhaps be excluded; recently, several patients with flu have appeared, so perhaps an epidemic is pending; and the like.

Smith and Medin (1981) investigate the cognitive-science point of view. They point out that context has a different impact on concept learning depending on the particular kind of learning mechanism and concept representation. The main approaches to concept representation identified in their work are: classical (a single concept description applies to all instances, and all features apply to all members of the concept); probabilistic featural (individual features occur with a probability conditional on the particular instance); probabilistic dimensional (the value of a feature in a concept definition is the average of that value over all the instances of the concept); and finally the exemplar view, which closely resembles the k-nearest-neighbor approach. Smith and Medin discuss context effects in each of these representations. They do not say much about accommodating context in the classical representation, as the basic tenet of classical concepts is that their definitions are immutable, and hence there is no mechanism that could accommodate context. In the probabilistic model, the authors account for temporary feature changes using the spreading-activation model, but they are unable to explain how concept ambiguity can be handled in the probabilistic approach. The probabilistic dimensional approach, in turn, is not conducive to the explanation of context phenomena.
In the exemplar model, effects of context are easy to handle, both in terms of salient features and in terms of ambiguity, if enough exemplars exist. It is assumed that the existence of a prior context skews the distribution of examples towards the instances that exhibit the salient features. If, however, there are only a few typical exemplars, a context-dependent exemplar of lower typicality will not be retrieved.

Context is discussed as having two effects. The first effect concerns temporary changes in features. These occur when the context influences the salience of different features: e.g., the sentence context "John lifted the piano" emphasizes the heaviness of the piano, while "John tuned the piano" emphasizes the fact that the piano is a musical instrument. The second effect occurs when concepts are ambiguous, so that there are two or more different concepts for the same term. Concept ambiguity often occurs for general concepts. For instance, in the sentence "There are lots of animals on this planet" the concept of animal is very general, while the same concept in the sentence "There are lots of animals in the zoo" is normally circumscribed to mammals, birds, and reptiles.
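The first context effect, a change in feature salience, can be pictured in exemplar (nearest-neighbor) terms. The sketch below is our own illustration, not Smith and Medin's formalization: it assumes that salience can be modelled as per-feature weights in a weighted Euclidean distance, and the two features, the stored exemplars, and the weights attached to each sentence are all hypothetical.

```python
from collections import Counter
from math import sqrt


def weighted_distance(a, b, weights):
    """Euclidean distance with each feature scaled by its contextual salience weight."""
    return sqrt(sum(w * (x - y) ** 2 for x, y, w in zip(a, b, weights)))


def knn_classify(query, exemplars, weights, k=1):
    """Majority label among the k stored exemplars nearest to `query` under `weights`."""
    ranked = sorted(exemplars, key=lambda e: weighted_distance(query, e[0], weights))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical features: (heaviness, musicality), both scaled to [0, 1].
EXEMPLARS = [
    ((0.9, 0.0), "heavy object"),        # e.g. a refrigerator
    ((0.1, 1.0), "musical instrument"),  # e.g. a flute
]
# Hypothetical salience weights induced by the sentence context.
CONTEXT_WEIGHTS = {
    "John lifted the piano": (1.0, 0.1),
    "John tuned the piano": (0.1, 1.0),
}
PIANO = (0.9, 1.0)

for sentence, weights in CONTEXT_WEIGHTS.items():
    print(sentence, "->", knn_classify(PIANO, EXEMPLARS, weights))
```

With the same stored exemplars, the piano is retrieved as closest to the heavy object in the lifting context and to the instrument in the tuning context; only the weights, i.e. the assumed salience, differ.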

3 Related Research in Machine Learning

The opinion that concepts can be too flexible to be captured by a single logical description is by no means novel to the machine-learning community. Michalski (1987) was one of the first to call attention to context dependency, and he proposed the method of two-tiered characterization of concepts: the 'base core description' is stored as a kind of first approximation to the concept, whereas an 'inferential concept recognition' scheme is suggested to cover less typical and context-dependent aspects. The approach is discussed at length by Bergadano et al. (1992). In the terminology of Section 1, the two-tiered representation addresses partially relative concepts.

The attitude advanced by Kubat (1989) and later elaborated by Widmer and Kubat (1996) allows for the extreme case of relative concepts, lacking any common kernel. The work builds on the assumption that the contexts are unknown to learner and teacher alike. Their system, FLORA, assumes that the context is not likely to change too often. The agent gradually develops the concept description on-line from a stream of examples and flexibly adjusts the current description. In the process, it keeps track of the successes and failures of its classification attempts, as well as of the lexical complexity of the concept descriptions. An increased percentage of failures and/or sudden outbursts in complexity are used as heuristics signaling a possible shift in context and, consequently, a change in the concept description. In that case, the old description is stored and a new one is developed as fast as possible. Typically, several descriptions of the same concept (each relevant in a different context) are kept in memory in case the same or a similar context reappears in the future. This conception is close to the somewhat older understanding of concept drift employed in the system STAGGER, developed by Schlimmer and Granger (1986).
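The windowing idea behind FLORA can be illustrated with a deliberately simplified sketch. It is not FLORA itself: the memorizing description (majority label per attribute value), the fixed error trigger (accuracy below `threshold` over the last `check` predictions), the number of examples `keep` retained after a detected shift, and the rule for reinstating a stored description are all assumptions made for this example, and the lexical-complexity heuristic is omitted.

```python
from collections import Counter, deque


class WindowedLearner:
    """Simplified sketch in the spirit of FLORA (not its actual algorithm): learn from
    a sliding window, treat a burst of errors as a possible context change, shrink the
    window, and keep old descriptions in case their context reappears."""

    def __init__(self, max_window=20, check=4, threshold=0.5, keep=2):
        if keep < 1:
            raise ValueError("keep must be at least 1")
        self.window = deque(maxlen=max_window)  # recent (x, label) examples
        self.outcomes = deque(maxlen=check)     # correctness of recent predictions
        self.threshold = threshold              # drift if recent accuracy falls below this
        self.keep = keep                        # examples kept after a detected drift
        self.stored = []                        # descriptions from earlier contexts
        self.reused = None                      # stored description currently reinstated

    @staticmethod
    def describe(examples):
        """Concept description: the majority label for each attribute value seen."""
        votes = {}
        for x, y in examples:
            votes.setdefault(x, Counter())[y] += 1
        return {x: c.most_common(1)[0][0] for x, c in votes.items()}

    def predict(self, x):
        if self.reused is not None and x in self.reused:
            return self.reused[x]
        return self.describe(self.window).get(x)  # None if x is not covered yet

    def update(self, x, y):
        self.outcomes.append(self.predict(x) == y)
        self.window.append((x, y))
        if self.reused is not None and self.reused.get(x) != y:
            self.reused = None  # the reinstated description no longer fits
        full = len(self.outcomes) == self.outcomes.maxlen
        if full and sum(self.outcomes) / len(self.outcomes) < self.threshold:
            self.handle_drift()

    def handle_drift(self):
        examples = list(self.window)
        old, recent = examples[:-self.keep], examples[-self.keep:]
        candidates = list(self.stored)  # descriptions from contexts before this one
        if old:
            self.stored.append(self.describe(old))
        self.window = deque(recent, maxlen=self.window.maxlen)
        self.outcomes.clear()
        # Reinstate an earlier description that agrees with all the recent examples.
        self.reused = next(
            (d for d in candidates if all(d.get(x) == y for x, y in recent)), None
        )


def context_a(x):
    return x


def context_b(x):
    return 1 - x


learner = WindowedLearner()
for concept in (context_a, context_b, context_a):  # context A, then B, then A again
    for x in [0, 1] * 4:
        learner.update(x, concept(x))

print(len(learner.stored), learner.reused)  # 2 {0: 0, 1: 1}
```

In this toy stream the label flips when the hidden context switches from A to B and back. Each switch produces a run of errors, the window is cut down to the most recent examples, and on the return to A the description stored for A is reinstated because it agrees with the latest examples. Reinstatement matters most when the stored description covers attribute values that the shrunken window has not yet seen again.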
The notion of concept drift has received some attention in the literature on computational learning theory. For instance, Helmbold and Long (1991, 1994) and Kuh et al. (1991, 1992) have explicitly investigated various conditions under which drift tracking is possible. They start from the observation that drift tracking is strictly impossible if there are no restrictions on the type of concept changes allowed (as an extreme example, consider a sequence of concepts that randomly alternates between the constant function 1 and the constant function 0 after every example). They then go on to study various restrictions on the severity (extent) or the frequency (rate) of concept changes. In particular, Helmbold and Long (1994) assume a permanent, though very slight, drift. They show that to be able to track the context shift, it is enough for the learner to consider only a subset of recent examples: a window sliding over the input stream. Their analysis leads to rough estimates of the window size needed for effective tracking. For instance, for one of their algorithms they show that a window size $m = (c_0 d/\epsilon)\log(1/\epsilon)$ (together with a specific restriction on the allowable amount of drift) guarantees trackability. Kuh et al. (1991) introduce the notion of PAC-tracking as a straightforward extension of Valiant's (1984) PAC framework. Their general results relate to the batch-learning approach to on-line learning, where a hypothesis is recomputed from the entire window after each instance. Unlike Helmbold and Long, they set out to determine the maximum rate of drift, that is, how frequently a concept may change if the change is still to be tolerable by a learner.

Work trying to do justice to context dependency can also be found in the literature on artificial neural networks. For instance, the research reported by Pratt, Mostow, and Kamm (1991) and Pratt (1993) focuses on the following issue: a speech-recognition network that has been trained on speakers with an American accent is to be used in an environment where a British accent prevails. The recognition performance of the network will obviously degrade, and retraining with examples generated by British speakers will be necessary. However, retraining the network from scratch, with randomly initialized weights and an uninformed architecture, would be unnecessarily expensive.
Rather, the previous knowledge, encoded in the network that was trained on American speakers, should somehow be utilized for the initialization of the new network or, in other words, transferred to it. After all, the two groups of speakers have a lot in common. The problems of transfer to a novel context are further treated by Pratt (1996) and, in the framework of decision-tree induction, by Kubat (1996).

Katz, Gately, and Collins (1990) and Turney (1993) provide definitions of contextual attributes and systematize various strategies for utilizing information about context in the process of learning. In their experiments, they demonstrate that the use of context can result in substantially more accurate classification. A fundamental assumption of their work is that information about the different contexts is provided prior to learning. This information then enables the learner to properly normalize the context-sensitive attributes, or to adopt some other strategy that reflects the different contexts a priori.

Importantly, several other authors allude to the implicit presence of context in the particular problems they attacked, even though they do not address the issue explicitly. The papers by Dent et al. (1992), Drummond, Holte, and Ionescu (1993), and Holte and Drummond (1994) emphasize the need for learning in time-varying and context-sensitive domains.
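The normalization of context-sensitive attributes mentioned for Katz, Gately, and Collins (1990) and Turney (1993) can be sketched as follows. We assume, for illustration only, that normalization means expressing each attribute value in standard deviations from the mean of its own context (a per-context z-score); the function names, the two hypothetical radar-beam angles, and the readings are invented.

```python
from collections import defaultdict
from statistics import mean, pstdev


def fit_context_stats(values, contexts):
    """Mean and population standard deviation of one attribute within each context."""
    groups = defaultdict(list)
    for value, context in zip(values, contexts):
        groups[context].append(value)
    return {c: (mean(vs), pstdev(vs)) for c, vs in groups.items()}


def normalize(value, context, stats):
    """Express `value` in standard deviations from the mean of its own context.
    Raises KeyError for a context that was not seen when the statistics were fitted."""
    mu, sigma = stats[context]
    return (value - mu) / sigma if sigma > 0 else 0.0


# Invented readings of one context-sensitive attribute under two hypothetical
# radar-beam angles ("steep" and "shallow").
values = [10, 14, 30, 34]
contexts = ["steep", "steep", "shallow", "shallow"]
stats = fit_context_stats(values, contexts)

print(normalize(14, "steep", stats), normalize(34, "shallow", stats))  # 1.0 1.0
```

The raw readings 14 and 34 are far apart, yet each lies one standard deviation above its own context's mean, so a learner working on the normalized values treats them alike. This only works if the context of every example is known, which is exactly the assumption the text attributes to this line of work.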

4 Conclusion

We have argued in this paper that context is often an inherent component of concept description and classification. We have briefly summarized relevant work in philosophy and cognitive science, as well as the early inroads into context-sensitive systems in Machine Learning. We believe that many practical learning applications necessitate the use of context both in learning and in the performance task. Nevertheless, context has been addressed only marginally in the Machine Learning community. One reason for this may be that the data sets in the Irvine repository often obliterate the context with other available data, or disregard the context altogether. (Would diagnostic rules learned on a data set from one group of patients transfer well to another group of patients, in a different country and a different environment? Our experience tells us that this is not always the case.) Since many authors believe (falsely) that running their algorithm on a selection of Irvine data sets is equivalent to a real-life application, the context problem is often overlooked. Anyone, however, who has ever been involved in a realistic application, in which data have to be engineered to provide input to a learning system, will likely confront the context problem sooner or later.

Acknowledgements

The work described here is carried out at the Ottawa Machine Learning Group (OMLG). Research at OMLG is supported by the Natural Sciences and Engineering Research Council of Canada, by Precarn, Inc. and Macdonald Dettwiler, Inc., and by the Ontario Information Technology Research Centre. Special thanks are due to Gerhard Widmer, whose discussions with us were greatly helpful in writing Section 3 of this paper.

References

Bechtel, W. (1988). Philosophy of Mind: An Overview for Cognitive Science. Lawrence Erlbaum Associates, Hillsdale, New Jersey.

Bergadano, F., Matwin, S., Michalski, R.S., and Zhang, J. (1992). Learning Two-Tiered Descriptions of Flexible Concepts: The POSEIDON System. Machine Learning, 8, 5–43.

Dent, L., Boticario, J., McDermott, J., Mitchell, T., and Zabowski, D. (1992). A Personal Learning Apprentice. Proceedings of the 1992 National Conference on Artificial Intelligence.

Drummond, C., Holte, R., and Ionescu, D. (1993). Accelerating Browsing by Automatically Inferring a User's Goal. Proceedings of the 8th Knowledge-Based Software Engineering Conference (pp. 160–167).

Gadamer, H.-G. (1975). Truth and Method (translated and edited by G. Barden and J. Cumming). Seabury Press, New York.

Gadamer, H.-G. (1976). Philosophical Hermeneutics (translated by D.E. Linge). University of California Press, Berkeley.

Heidegger, M. (1962). Being and Time (translated by J. Macquarrie and E. Robinson). Harper & Row, New York.

Holte, R. and Drummond, C. (1994). A Learning Apprentice for Browsing. AAAI Spring Symposium on Software Agents.

Katz, A.J., Gately, M.T., and Collins, D.R. (1990). Robust Classifiers without Robust Features. Neural Computation, 2, 472–479.

Kubat, M. (1996). Recycling Decision Trees in Numeric Domains. Machine Learning (submitted).

Kubat, M., Pfurtscheller, G., and Flotzinger, D. (1994). AI-Based Approach to Automatic Sleep Classification. Biological Cybernetics, 79, 443–448.

Michalski, R.S. (1987). How to Learn Imprecise Concepts: A Method Employing a Two-Tiered Knowledge Representation for Learning. Proceedings of the 4th International Workshop on Machine Learning (pp. 50–58), Irvine, CA.

Mitchell, T., Caruana, R., Freitag, D., McDermott, J., and Zabowski, D. (1994). Experience with a Learning Personal Assistant. Communications of the ACM, 37, 80–91.

Pratt, L.Y. (1993). Discriminability-Based Transfer Between Neural Networks. In S.J. Hanson, C.L. Giles, and J.D. Cowan (eds.): Advances in Neural Information Processing Systems 5 (pp. 204–211), Morgan Kaufmann, San Mateo, California.

Pratt, L.Y. (1996). Transfer Between Neural Networks to Speed Up Learning. Journal of Artificial Intelligence Research.

Pratt, L.Y., Mostow, J., and Kamm, C.A. (1991). Direct Transfer of Learned Information among Neural Networks. Proceedings of the 9th National Conference on Artificial Intelligence (AAAI-91) (pp. 584–580), Anaheim, California.

Salganicoff, M. (1993). Density-Adaptive Learning and Forgetting. Proceedings of the 10th International Conference on Machine Learning (pp. 276–283), Amherst, MA.

Schlimmer, J.C. and Granger, R.H. (1986). Incremental Learning from Noisy Data. Machine Learning, 1, 317–354.

Searle, J.R. (1979). Expression and Meaning: Studies in the Theory of Speech Acts. Cambridge University Press, Cambridge.

Smith, E.E. and Medin, D.L. (1981). Categories and Concepts. Harvard University Press, Cambridge, MA.

Turney, P.D. (1993). Robust Classification with Context-Sensitive Features. Proceedings of the Sixth International Conference on Industrial and Engineering Applications of Artificial Intelligence and Expert Systems (pp. 268–276), Edinburgh, Scotland.

Widmer, G. and Kubat, M. (1996). Learning in the Presence of Concept Drift and Hidden Contexts. Machine Learning, 23, 69–101.

Winograd, T. and Flores, F. (1988). Understanding Computers and Cognition. Addison-Wesley, Reading, Massachusetts.

You might also like

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Information Brochure: (Special Rounds)Document35 pagesInformation Brochure: (Special Rounds)Praveen KumarNo ratings yet

- All Papers of Thermodyanmics and Heat TransferDocument19 pagesAll Papers of Thermodyanmics and Heat TransfervismayluhadiyaNo ratings yet

- Manual - Rapid Literacy AssessmentDocument16 pagesManual - Rapid Literacy AssessmentBaldeo PreciousNo ratings yet

- Ge 6 Art Appreciationmodule 1Document9 pagesGe 6 Art Appreciationmodule 1Nicky Balberona AyrosoNo ratings yet

- Company Profile PT. Geo Sriwijaya NusantaraDocument10 pagesCompany Profile PT. Geo Sriwijaya NusantaraHazred Umar FathanNo ratings yet

- Describe an English lesson you enjoyed.: 多叔逻辑口语,中国雅思口语第一品牌 公共微信: ddielts 新浪微博@雅思钱多多Document7 pagesDescribe an English lesson you enjoyed.: 多叔逻辑口语,中国雅思口语第一品牌 公共微信: ddielts 新浪微博@雅思钱多多Siyeon YeungNo ratings yet

- Postmodernism in Aha! MovieDocument2 pagesPostmodernism in Aha! MovieSayma AkterNo ratings yet

- Email ID: Contact No: +971562398104, +917358302902: Name: R.VishnushankarDocument6 pagesEmail ID: Contact No: +971562398104, +917358302902: Name: R.VishnushankarJêmš NavikNo ratings yet

- Report Painter GR55Document17 pagesReport Painter GR55Islam EldeebNo ratings yet

- FmatterDocument12 pagesFmatterNabilAlshawish0% (2)

- Package-Related Thermal Resistance of Leds: Application NoteDocument9 pagesPackage-Related Thermal Resistance of Leds: Application Notesalih dağdurNo ratings yet

- Burn Tests On FibresDocument2 pagesBurn Tests On Fibresapi-32133818100% (1)

- Group 2 - BSCE1 3 - Formal Lab Report#6 - CET 0122.1 11 2Document5 pagesGroup 2 - BSCE1 3 - Formal Lab Report#6 - CET 0122.1 11 2John Eazer FranciscoNo ratings yet

- 3.1 MuazuDocument8 pages3.1 MuazuMon CastrNo ratings yet

- Research Paper On N Queen ProblemDocument7 pagesResearch Paper On N Queen Problemxvrdskrif100% (1)

- Yumemiru Danshi Wa Genjitsushugisha Volume 2Document213 pagesYumemiru Danshi Wa Genjitsushugisha Volume 2carldamb138No ratings yet

- Online Dynamic Security Assessment of Wind Integrated Power System UsingDocument9 pagesOnline Dynamic Security Assessment of Wind Integrated Power System UsingRizwan Ul HassanNo ratings yet

- Slip Ring Motor CheckDocument3 pagesSlip Ring Motor CheckRolando LoayzaNo ratings yet

- Chapter 2 Axial and Torsional ElementsDocument57 pagesChapter 2 Axial and Torsional ElementsAhmad FaidhiNo ratings yet

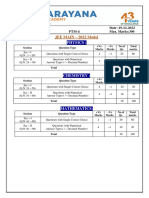

- Xii - STD - Iit - B1 - QP (19-12-2022) - 221221 - 102558Document13 pagesXii - STD - Iit - B1 - QP (19-12-2022) - 221221 - 102558Stephen SatwikNo ratings yet

- Yz125 2005Document58 pagesYz125 2005Ignacio Sanchez100% (1)

- Bosch Injectors and OhmsDocument6 pagesBosch Injectors and OhmsSteve WrightNo ratings yet

- Hooke's LawDocument1 pageHooke's LawAnan BarghouthyNo ratings yet

- Gilbert Cell Design PDFDocument22 pagesGilbert Cell Design PDFvysNo ratings yet

- Handbook+for+Participants+ +GCC+TeenDocument59 pagesHandbook+for+Participants+ +GCC+Teenchloe.2021164No ratings yet

- Identification of PolymersDocument11 pagesIdentification of PolymersßraiñlĕsšȜĭnšteĭñNo ratings yet

- Chapter 07Document16 pagesChapter 07Elmarie RecorbaNo ratings yet

- Astn/Ason and Gmpls Overview and Comparison: By, Kishore Kasi Udayashankar Kaveriappa Muddiyada KDocument44 pagesAstn/Ason and Gmpls Overview and Comparison: By, Kishore Kasi Udayashankar Kaveriappa Muddiyada Ksrotenstein3114No ratings yet

- Each Life Raft Must Contain A Few ItemsDocument2 pagesEach Life Raft Must Contain A Few ItemsMar SundayNo ratings yet

- Relay G30 ManualDocument42 pagesRelay G30 ManualLeon KhiuNo ratings yet