TEXAS DEPARTMENT OF INFORMATION RESOURCES

Test Scenario

Instructions

Version 1.3 14 JAN 2008

Texas Project Delivery Framework

TEST SCENARIOS INSTRUCTIONS

Version History

This and other Framework Extension tools are available on the Framework Web site.

| Release Date | Description |
| --- | --- |
| 14-Jan-2008 | Version 1.3 released. Modified the "Using this Template" section of the Template and italicized all section instructions to align with the Framework and Change Request (CR) #34. CR #34 was recommended by the Framework Change Advisory Board (CAB) and approved by DIR. |
| 13-Mar-2007 | Version 1.2 released. Made minor modifications to indicate Framework Extension. |
| 08-Dec-2006 | Version 1.1 released. Revised Appendix B (Test Identifier Naming Conventions, Alternative 1). |
| 24-May-2006 | Version 1.0 Instructions and Template released. |

DIR Document 25TS-N1-3

Contents

- Introduction
- Use of the Test Scenario
- Section 1. Overview
- Section 2. Test Identifier
- Section 3. Requirements Traceability Matrix
- Section 4. Test Scenario Summary
- Section 5. Test Scenarios
- Section 6. References
- Section 7. Glossary
- Section 8. Revision History
- Section 9. Appendices
- Appendix A. Test Scenarios Organization
- Appendix B. Test Identifier Naming Conventions

Introduction

The Test Scenario Template is included within the System Development Life Cycle (SDLC) Extension of the Texas Project Delivery Framework (Framework) to establish a consistent method for documenting test scenarios, descriptions, procedures, and other testing information. Consistency in documenting test scenarios, descriptions, and procedures creates consistency in planning and executing testing of information technology (IT) systems.

A test scenario describes the details required to test a major function of a system and consists of one or more test descriptions. A test description is a documented set of steps for testing a subset of the function documented within the scenario, and consists of one or more test procedures. A test procedure is the set of steps that must be performed to execute the test.

Test scenarios, descriptions, and procedures are used in conjunction with the Test Plan to evaluate the correctness, completeness, and quality of an IT system. Testing involves any activity performed to evaluate an attribute or capability of a system or system component and to determine whether it meets its expected and required results. A well-planned and well-executed test effort reduces project risk by reducing uncertainty in the implementation of the system or system component.

Use of the Test Scenario

Within the Framework, test scenarios are initiated in the Project Planning Review Gate; completed, reviewed, and approved at the project level; and executed in the Implementation Review Gate. The Test Scenario Template should be used to develop and document the test scenarios, descriptions, and procedures for each project. The format of the Test Scenario Template serves as a basis for creating an actual project document.

Alternative methods for organizing test scenarios are provided in the appendix. Refer to Test Scenarios Organization in the appendix, then select and use one of the alternatives described. The Test Scenario Instructions and Template use Alternative 1.

Test scenarios should be developed in coordination with, and be accessible by, the appropriate project team and stakeholder entities. In addition, they should be updated to maintain consistency with the Test Plan and other project information and documents throughout the life of the project.

Section 1. Overview

Provide high-level introductory information on the test scenarios for the product being tested.

A test scenario provides a complete test for a major function or use case for a system and consists of one or more test descriptions. Each test description supports a subset of major functionality, as defined by the System Requirements Specification (SyRS) and/or the Software Requirements Specification (SRS). A test description consists of one or more test procedures. A test procedure is a documented step-by-step process that supports a subset of major functionality, as described by the scenario. The successful completion of all of the test procedures and test descriptions in the test scenario constitutes the successful completion of the test scenario.

The steps in a test procedure must be executed in a particular order. A set of test procedures may need to be performed in a particular order to complete a test description successfully. Many systems may require test descriptions to be executed in a particular order. For some systems, test descriptions may be executed in any order; however, the documented test scenarios may still recommend a particular order of execution to support more efficient completion of the scenario.
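The hierarchy and completion rule described in this section can be illustrated with a small data model. The following Python sketch is only one possible representation: the class names `Scenario`, `Description`, and `Procedure` are hypothetical, and it assumes that an item with no recorded results is incomplete and therefore has not passed.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Procedure:
    identifier: str
    # One boolean per executed step; an empty list means "not yet executed".
    step_results: List[bool] = field(default_factory=list)

    def passed(self) -> bool:
        return bool(self.step_results) and all(self.step_results)


@dataclass
class Description:
    identifier: str
    procedures: List[Procedure] = field(default_factory=list)

    def passed(self) -> bool:
        return bool(self.procedures) and all(p.passed() for p in self.procedures)


@dataclass
class Scenario:
    identifier: str
    descriptions: List[Description] = field(default_factory=list)

    def passed(self) -> bool:
        # A scenario passes only when every description (and so every procedure) passes.
        return bool(self.descriptions) and all(d.passed() for d in self.descriptions)


scenario = Scenario("ProjName100000", [
    Description("ProjName100100", [
        Procedure("ProjName100101", [True, True]),
        Procedure("ProjName100102", [True, False]),  # second step failed
    ]),
])
print(scenario.passed())  # False
```

Because the pass status is computed from the bottom up, a single failed step is enough to keep the enclosing description and scenario from being reported as passed.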

Section 2. Test Identifier

Specify the method used for identifying test scenarios, procedures, descriptions, and other test information. A guideline for identifying these items is provided below.

Each of the following should have a unique reference number:

- test scenario
- test description
- test procedure

In addition, data sets and other similar information used during testing should have a unique reference number. Test descriptions should share a prefix identifier with their associated test scenario. If a test procedure is used for multiple test descriptions, the test procedure should share a prefix or middle identifier with its test descriptions.

Alternatives for naming test identifiers are provided in the appendix. Refer to Test Identifier Naming Conventions. Select, specify, and use one of the alternatives specified, or specify and use a different method. This template uses Alternative 1.

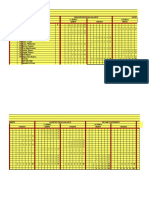

Section 3. Requirements Traceability Matrix

In this section, provide a reference to the location of the matrix that specifies the traceability of requirements (as documented in the SyRS and SRS) to design components, code components, test scenarios, test descriptions, and test procedures. If data sets or other test-related information are identified, then show the traceability of these items as well.

A sample Requirements Traceability Matrix (RTM) template is provided as an additional tool in the appendix of the SyRS Template Instructions. The RTM is initiated in the SyRS and is updated appropriately during the life of the project to indicate traceability to the design elements documented in the System Design Description (SyDD), the software requirements documented in the SRS, and the design elements documented in the Software Design Description (SDD). The completed RTM assures that every requirement has been addressed in the design and that every design element addresses a requirement. The RTM also provides the necessary traceability for integration, acceptance, regression, and performance testing.

The RTM referenced in the test scenario should:

- Indicate traceability of the system requirements to the system design elements, software requirements, and software design elements
- Contain the columns necessary to illustrate traceability for integration, acceptance, regression, and performance testing, including test scenario reference, test description reference, and test procedure reference
- Indicate traceability from the system and software design elements to the appropriate test scenarios, test descriptions, and test procedures
- Indicate the source or origin of each requirement

Note: Maintaining the RTM as a separate document and performing appropriate updates in a controlled fashion is more efficient than including it within the SyRS and requiring that the SyRS be revised each time the RTM is modified. Separating the RTM into two matrices, rather than maintaining one large matrix that contains all traceability information, may enhance the ability to maintain the traceability information.

If the requirements traceability information that indicates the source and traceability of the system requirements to the system design elements, software requirements, and software design elements is comprehensive and baselined, the matrix referenced in the test scenarios may address traceability to testing scenarios, descriptions, and procedures. The traceability matrix may contain information indicating traceability from the system and software design elements to the appropriate test scenarios, test descriptions, and test procedures.

| Design Element | Test Scenario Reference | Test Description Reference | Test Procedure Reference |
| --- | --- | --- | --- |
| ProjNameReq10000 | ProjName100000 | ProjName100100 | ProjName100101, ProjName100102 |
| | | ProjName100200 | ProjName100201, ProjName100202, ProjName100203 |
| | ProjName200000 | ProjName200100 | ProjName200101, ProjName200102, ProjName200103 |

Table 1. Example of traceability matrix indicating traceability from design elements to test scenarios, descriptions, and procedures
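For teams that keep the matrix in electronic form, the rows of Table 1 can be held as simple records and queried. The sketch below is illustrative only: it assumes the single design element shown in Table 1 applies to every row, and the names `RTM_ROWS`, `procedures_for`, `untested_elements`, and the identifier `ProjNameReq20000` are hypothetical.

```python
# Each row: (design element, test scenario, test description, test procedures).
# Assumption: the design element listed once in Table 1 applies to all three rows.
RTM_ROWS = [
    ("ProjNameReq10000", "ProjName100000", "ProjName100100",
     ["ProjName100101", "ProjName100102"]),
    ("ProjNameReq10000", "ProjName100000", "ProjName100200",
     ["ProjName100201", "ProjName100202", "ProjName100203"]),
    ("ProjNameReq10000", "ProjName200000", "ProjName200100",
     ["ProjName200101", "ProjName200102", "ProjName200103"]),
]


def procedures_for(design_element, rows):
    """Return every test procedure traced to the design element, in row order."""
    found = []
    for element, _scenario, _description, procedures in rows:
        if element == design_element:
            found.extend(procedures)
    return found


def untested_elements(design_elements, rows):
    """Return the design elements that no row traces to at least one procedure."""
    covered = {row[0] for row in rows if row[3]}
    return [element for element in design_elements if element not in covered]


print(len(procedures_for("ProjNameReq10000", RTM_ROWS)))  # 8
# ProjNameReq20000 is a made-up element used to show a traceability gap.
print(untested_elements(["ProjNameReq10000", "ProjNameReq20000"], RTM_ROWS))
```

A check such as `untested_elements` is one way to confirm that every design element is addressed by at least one test procedure.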

Section 4. Test Scenario Summary

Provide a summary of the test effort, listing the total number of test scenarios to be executed, the total number of test descriptions for each test scenario, and the total number of test procedures to be executed for each test description. Increment the totals appropriately to account for test procedures repeated in multiple test descriptions for a scenario. Derive this overview from the traceability matrix and summarize it in the table provided in the template. An example is included below. The PASS/FAIL column should be used to indicate the status of the associated test scenario once it is executed. Unless otherwise indicated, the successful completion of all rows in this table shall constitute the successful completion of the test scenario. If any item within the scenario is incomplete or fails, the PASS/FAIL column should not indicate that the scenario has passed.

| PASS/FAIL | Test Scenario ID | Total Test Descriptions for this Scenario | Total Test Procedures for this Scenario |
| --- | --- | --- | --- |
| | ProjName100000 | 2 | 10 |
| | ProjName200000 | 3 | 17 |
| | ProjName300000 | 5 | 52 |

Table 2. Example of Test Scenario Summary
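The totals in a summary of this kind can be derived mechanically from the traceability information. The following Python sketch shows one way to do so under stated assumptions: the function name `summarize` and the sample rows are hypothetical, the shared procedure `ProjName100001` is invented for illustration, and the output is not intended to reproduce the figures in Table 2. A procedure used by more than one description is counted once for each use.

```python
def summarize(rows):
    """Count descriptions and procedure executions per scenario.

    rows: iterable of (scenario_id, description_id, [procedure_ids]).
    A procedure that appears under several descriptions is counted each time.
    """
    summary = {}
    for scenario_id, _description_id, procedures in rows:
        totals = summary.setdefault(scenario_id, {"descriptions": 0, "procedures": 0})
        totals["descriptions"] += 1
        totals["procedures"] += len(procedures)
    return summary


# Hypothetical rows: ProjName100001 is shared by both descriptions of scenario 10.
rows = [
    ("ProjName100000", "ProjName100100", ["ProjName100101", "ProjName100001"]),
    ("ProjName100000", "ProjName100200", ["ProjName100201", "ProjName100001"]),
    ("ProjName200000", "ProjName200100", ["ProjName200101"]),
]

for scenario_id, totals in summarize(rows).items():
    print(scenario_id, totals["descriptions"], totals["procedures"])
# ProjName100000 2 4
# ProjName200000 1 1
```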

Section 5. Test Scenarios

Customize the following sections to contain the test scenarios and their associated test descriptions and test procedures. Each subsection should be labeled sequentially and titled appropriately for a specific test scenario, description, and procedure.

5.1 Test Scenario PROJNAME100000

Describe the specific test scenario to be tested. Include in the description a reference to the requirements to be satisfied and the functionality to be tested.

5.1.1 Test Description PROJNAME100100

Describe the test to be performed to assure the correctness of a specific functionality contained within its associated test scenario. The test description shall reference all test procedures required to satisfy the test description and the order in which they will be performed (when there is a need to perform them in a particular order). The test description should also reference associated test information, including any setups or data shared across test procedures.

An example test description table is included below. The PASS/FAIL column should be used to indicate the status of the associated test once it is executed. Unless otherwise indicated, the successful completion of all rows in this table shall constitute the successful completion of the test description. If any item within the description is incomplete or fails, the PASS/FAIL column should not indicate that the description has passed.

The test procedures for this description should be documented in the sequence of execution that is specified for the test description, including any repetition of procedures, setups, or data. This method assures that the execution of the test description can be performed exactly according to procedures, in order, and with no need to reference more than one section of the document.

An example of a test description is included below. Only the first two steps are depicted in the test procedures.

| PASS / FAIL | Sequence of Execution # | Procedure | Setup / Initialization | Data |
| --- | --- | --- | --- | --- |
| | 1 | Projname100101 | ProjnameSetup000001 | Projnamedata000012 |
| | 2 | Projname100102 | State resulting from success of procedure Projname100101 | Projnamedata000001 |
| | 3 | Projname100103 | ProjnameSetup000001 | None |
| | 4 | Projname100102 | State resulting from success of procedure Projname100103 | ProjNamedata000004 |
| | 5 | Projname100104 | ProjnameSetup000004 | Data set resulting from success of step 4 |
| | 6 | Projname100105 | ProjnameSetup000005 | Data set resulting from success of step 5 |

Table 3. Example of a Test Description

5.1.1.1 Test for Test Description 100100 Sequence Item 1 Test Procedure 100101 Summary

Provide an overview of the Test Procedure used in Step 1 of the testing to satisfy Test Description 100100.

5.1.1.2 Setup/Initialization/Special Instructions for ProjNameSetup000001 for Test Description 100100 Sequence Item 1 Test Procedure 100101

Describe the setup, initialization, and other special instructions specific to this test. Include in the description:

- configuration of the hardware and software which provides the infrastructure for the item under test
- the initial settings and conditions for the hardware and software which provides the infrastructure for the item under test
- the test tools, their configuration, initial settings, and conditions for this test
- special instructions to the tester (e.g., the expected results onscreen and in the database must match)

5.1.1.3 Data for ProjNameData000012 for Test Description 100100 Sequence Item 1 Test Procedure 100101

Specify file names for data or the actual data required to execute Step 1 Test Procedure 100101. Files or other data available in electronic formats should be placed under configuration control. Textual data within this document may be provided in the form of tables or other means. Data may include:

- initial inputs
- data provided during a particular step
- databases that may be accessed by the application
- erroneous data intended to challenge application integrity, performance, or availability
- data required for test tools
- other data used to prepare for testing or during testing

5.1.1.4 Steps for Test Description 100100 Sequence Item 1 Test Procedure 100101

Specify the steps for executing Test Procedure 100101. The steps must be executed in the order described and, unless otherwise designated, each step should be considered critical to the success of the procedure. The execution of this procedure should result in a step-by-step pass/fail result.

The PASS/FAIL column should be used to indicate the status of the associated step once it is executed. Unless otherwise indicated, the successful completion of all rows in this table shall constitute the successful completion of the test procedure. If any step within the procedure is incomplete or fails, the PASS/FAIL column should not indicate that the step has passed.

An example of a test procedure is included below.

| PASS / FAIL | TEST PROCEDURE 100101 STEP |
| --- | --- |
| | 1. Ensure that the configuration described in section 5.1.1.2 is correct. |
| | 2. Ensure that the initialization data described in 5.1.1.3 is correct and in the following directory c:/xxx. |
| | 3. Launch Microsoft Internet Explorer Version 6.xx. |
| | 4. Open URL c:/xxxx/yyy/zz.htm |
| | 5. Enter the name John Smith in the field titled Name and hit enter. |
| | 6. Enter valid password in the field titled Password. (This is a case-sensitive password.) |
| | 7. Ensure that the application now displays a new screen with banner title Registration. |
| | 8. Ensure that this screen displays the name (from step 5) in the Name field and an associated address in the address fields. |
| | 9. Ensure that the address displayed for the name matches the database contents for that name. |
| | 10. In the field titled Action, choose the Update all action. |
| | 11. Ensure that the display shows Update Completed at the bottom of the page, upon completion of the action. |
| | 12. Inspect the database table to ensure the information described in file c:xxx.txt has been deleted. |
| | END OF TEST PROCEDURE |

Table 4. Example of a Test Procedure
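Where step results are recorded electronically, the step-by-step pass/fail rule for a test procedure can be sketched as follows. This is a minimal illustration, not a prescribed tool: `Step` and `run_procedure` are hypothetical names, the `check` callables stand in for verifications that a tester would normally perform by hand, and the choice to continue after a failed step (rather than stop) is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Step:
    text: str
    check: Callable[[], bool]  # returns True when the step succeeds
    critical: bool = True      # steps are critical unless otherwise designated


def run_procedure(steps: List[Step]) -> Tuple[List[str], bool]:
    """Execute steps in order; return per-step results and the overall outcome."""
    results = []
    procedure_passed = True
    for step in steps:
        try:
            ok = bool(step.check())
        except Exception:
            ok = False  # a step that cannot be completed is recorded as failed
        results.append("PASS" if ok else "FAIL")
        if not ok and step.critical:
            procedure_passed = False
    return results, procedure_passed


# Stand-in checks; the step texts are abbreviated for illustration.
steps = [
    Step("Launch the browser", lambda: True),
    Step("Optional cosmetic check", lambda: False, critical=False),
    Step("Verify the Registration banner", lambda: True),
]
results, passed = run_procedure(steps)
print(results, passed)  # ['PASS', 'FAIL', 'PASS'] True
```

Every step receives its own PASS or FAIL entry, and only a failed critical step prevents the procedure as a whole from passing.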

5.1.1.5 Expected Results for Test Description 100100 Sequence Item 1 Test Procedure 100101

Specify the results that indicate that the test of the item is successful. Unless otherwise indicated, the success of a test procedure requires that each step be executed successfully. This subsection should specify this or other modified criteria. The criteria may include values within tolerance levels (e.g., numbers in a range from five to ten are acceptable).

5.1.2 Test Description PROJNAME100200

Describe the test to be performed to assure the correctness of a specific functionality contained within its associated test scenario. The test description shall reference all test procedures required to satisfy the test description and the order in which they will be performed (when there is a need to perform them in a particular order). The test description should also reference associated test information, including any setups or data shared across test procedures.

An example test description table is included below. The PASS/FAIL column should be used to indicate the status of the associated test once it is executed. Unless otherwise indicated, the successful completion of all rows in this table shall constitute the successful completion of the test description. If any item within the description is incomplete or fails, the PASS/FAIL column should not indicate that the description has passed.

The test procedures for this description should be documented in the sequence of execution that is specified for the test description, including any repetition of procedures, setups, or data. This method assures that the execution of the test description can be performed exactly according to procedures, in order, and with no need to reference more than one section of the document.

An example of a test description is included below. Only the first two steps are depicted in the test procedures.

| PASS / FAIL | Sequence of Execution # | Procedure | Setup / Initialization | Data |
| --- | --- | --- | --- | --- |
| | 1 | Projname100201 | ProjnameSetup000001 | Projnamedata000012 |
| | 2 | Projname100202 | State resulting from success of procedure Projname100201 | Projnamedata000001 |
| | 3 | Projname100203 | ProjnameSetup000001 | None |
| | 4 | Projname100202 | State resulting from success of procedure Projname100203 | ProjNamedata000004 |
| | 5 | Projname100204 | ProjnameSetup000004 | Data set resulting from success of step 4 |
| | 6 | Projname100205 | ProjnameSetup000005 | Data set resulting from success of step 5 |

Table 5. Example of a Test Description

5.1.2.1 Test for Test Description 100200 Sequence Item 1 Test Procedure 100201 Summary

Provide an overview of the Test Procedure used in Step 1 of the testing to satisfy Test Description 100200.

5.1.2.2 Setup/Initialization/Special Instructions for ProjNameSetup000001 for Test Description 100200 Sequence Item 1 Test Procedure 100201

Describe the setup, initialization, and other special instructions specific to this test. Include in the description:

- configuration of the hardware and software which provides the infrastructure for the item under test
- the initial settings and conditions for the hardware and software which provides the infrastructure for the item under test
- the test tools, their configuration, initial settings, and conditions for this test
- special instructions to the tester (e.g., the expected results onscreen and in the database must match)

5.1.2.3 Data for ProjNameData000012 for Test Description 100200 Sequence Item 1 Test Procedure 100201

Specify file names for data or the actual data required to execute Step 1 Test Procedure 100201. Files or other data available in electronic formats should be placed under configuration control. Textual data within this document may be provided in the form of tables or other means. Data may include:

- initial inputs
- data provided during a particular step
- databases that may be accessed by the application
- erroneous data intended to challenge application integrity, performance, or availability
- data required for test tools
- other data used to prepare for testing or during testing

5.1.2.4 Steps for Test Description 100200 Sequence Item 1 Test Procedure 100201

Specify the steps for executing Test Procedure 100201. The steps must be executed in the order described and, unless otherwise designated, each step should be considered critical to the success of the procedure. The execution of this procedure should result in a step-by-step pass/fail result.

The PASS/FAIL column should be used to indicate the status of the associated step once it is executed. Unless otherwise indicated, the successful completion of all rows in the table shall constitute the successful completion of the test procedure. If any step within the procedure is incomplete or fails, the PASS/FAIL column should not indicate that the step has passed.

An example of a test procedure is included below.

| PASS / FAIL | TEST PROCEDURE 100201 STEP |
| --- | --- |
| | 1. Ensure that the configuration described in section 5.1.2.2 is correct. |
| | 2. Ensure that the initialization data described in 5.1.2.3 is correct and in the following directory c:/xxx. |
| | 3. Launch Microsoft Internet Explorer Version 6.xx. |
| | 4. Open URL c:/xxxx/yyy/zz.htm |
| | 5. Enter the name John Smith in the field titled Name and hit enter. |
| | 6. Enter valid password in the field titled Password. (This is a case-sensitive password.) |
| | 7. Ensure that the application now displays a new screen with banner title Registration. |
| | 8. Ensure that this screen displays the name (from step 5) in the Name field and an associated address in the address fields. |
| | 9. Ensure that the address displayed for the name matches the database contents for that name. |
| | 10. In the field titled Action, choose the Update all action. |
| | 11. Ensure that the display shows Update Completed at the bottom of the page, upon completion of the action. |
| | 12. Inspect the database table to ensure that the information described in file c:xxx.txt has been deleted. |
| | END OF TEST PROCEDURE |

Table 6. Example of Test Procedure

5.1.2.5 Expected Results for Test Description 100200 Sequence Item 1 Test Procedure 100201

Specify the results that indicate that the test of the item is successful. Unless otherwise indicated, the success of a test procedure requires that each step be executed successfully. This subsection should specify this or other modified criteria. The criteria may include values within tolerance levels (e.g., numbers in a range from five to ten are acceptable).
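Tolerance-based criteria of the kind mentioned above can be expressed as simple range checks. The sketch below is illustrative only: the function `evaluate` and the measurement name `rows_updated` are hypothetical, the range bounds are assumed to be inclusive, and a missing measurement is treated as a failure.

```python
def evaluate(measurements, tolerances):
    """Compare measured values with (low, high) ranges.

    Returns {name: "PASS" | "FAIL"}. Bounds are inclusive (an assumption),
    and a value that was never measured is reported as FAIL.
    """
    outcome = {}
    for name, (low, high) in tolerances.items():
        value = measurements.get(name)
        ok = value is not None and low <= value <= high
        outcome[name] = "PASS" if ok else "FAIL"
    return outcome


# "Numbers in a range from five to ten are acceptable."
tolerances = {"rows_updated": (5, 10)}
print(evaluate({"rows_updated": 7}, tolerances))   # {'rows_updated': 'PASS'}
print(evaluate({"rows_updated": 11}, tolerances))  # {'rows_updated': 'FAIL'}
```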

Section 6. References

Identify the information sources referenced in the test scenarios and utilized in developing the test scenarios. Include for each the document number, title, date, and author.

Section 7. Glossary

Define all terms and acronyms required to interpret the test scenarios properly.

Section 8. Revision History

Identify changes to the test scenarios.

Section 9. Appendices

Include any relevant appendices.

Appendix A. Test Scenarios Organization

Two alternatives for specifying test scenarios are specified below. Select one of the following alternatives.

Alternative 1

The document may be organized with a test scenario followed by its specific test description(s). Test procedures, in the order of their execution and including any repetition, would then follow each test description. This approach is the most direct and the easiest to follow during the testing process; however, it may involve a great deal of redundancy, since test setup, test data, and some specific test procedures may be reused multiple times across the various test descriptions and scenarios. In this case, the information would have to be repeated, and any update to the information would have to be made in each place where the information is repeated.

If the document is organized in this fashion, each test scenario (Section 5.1, 5.2, etc.) will be followed by one or more test descriptions (Section 5.1.1, 5.1.2) for its associated test scenario (in this case, Section 5.1), and a given set of test procedures (Section 5.1.1.1, 5.1.1.2, 5.1.1.3, etc.) will be associated with a particular test description (in this case, Section 5.1.1).

Alternative 2

The document may be organized with a test scenario followed by its specific test description(s). After describing all test scenarios and their associated test descriptions, a separate section can describe every test procedure. This approach minimizes redundancy and the chance of errors in the document by describing the test procedures only once (as well as the test data sets and other information) and then referencing them in the appropriate test descriptions. However, this approach requires some form of additional test documentation, or another means, to ensure that the tester can follow the step-by-step process for a specific test. Additional test documentation is necessary, for example, because the test procedures are all grouped together regardless of their associations with a specific test description and are referred to only in the test descriptions.

If the document is organized in this manner, then each test scenario (Section 5.1, 5.2, etc.) will be followed by one or more test descriptions (Section 5.1.1, 5.1.2) for its associated test scenario (in this case, Section 5.1), and each test description (Section 5.1.1, 5.1.2, etc.) will refer to the test procedures to be used to satisfy that test description; however, the test procedures will be described in a separate section regardless of the test descriptions or test scenarios that use them.

Appendix B. Test Identifier Naming Conventions

Two alternative methods for identifying test scenarios, procedures, descriptions, and other test information are described below. Select one of the alternatives specified, or specify another method. Note: When using any identification method, consider including project name in the naming convention.

Alternative 1

Test scenarios are identified with a project name prefix and numeric identifier, for example: ProjName100000, ProjName200000, etc. Test descriptions are numbered using the third and fourth digits of these identifiers. For example, test descriptions for scenario ProjName100000 are designated ProjName100100, ProjName100200, etc. Test procedures are numbered using the fifth and sixth digits. For example, test procedures for Test Description ProjName100100 are designated ProjName100101, ProjName100102, etc.

If any of the test procedures (or other data sets or related information) is reused and associated with more than one scenario and/or description, use zeros in the appropriate digits to indicate the association with more than one scenario and/or description. For example, ProjName000001 indicates that procedure 01 is not associated with a single scenario or description. ProjName100001 indicates that procedure 01 of scenario 10 is not associated with a particular description.

Additional examples of Alternative 1 are provided below. In the following illustrations:

- ProjName represents the name of the project
- ss represents the portion of the identifier that references scenario
- dd represents the portion of the identifier that references description
- pp represents the portion of the identifier that references procedure

| Description | Naming Convention | Example |
| --- | --- | --- |
| Name of Scenario 10 of the HR Upgrade project | ProjNamess0000 | HR Upgrade 100000 |
| Name of Description 01 of Scenario 10 of the HR Upgrade project | ProjNamessdd00 | HR Upgrade 100100 |
| Name of Procedure 01 of Scenario 10, Description 01 of the HR Upgrade project | ProjNamessddpp | HR Upgrade 100101 |
| Name of Procedure 10 of the HR Upgrade project. Procedure 10 is not associated with a particular scenario or description | ProjName0000pp | HR Upgrade 000010 |
| Name of Procedure 30 of Scenario 21 of the HR Upgrade project. Procedure 30 of Scenario 21 is not associated with a particular description | ProjNamess00pp | HR Upgrade 210030 |

Table 7. Example of Test Identifier Alternative 1
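The Alternative 1 convention lends itself to simple tooling. The following Python sketch builds and parses identifiers of the form ProjNamessddpp; the function names `make_id`, `parse_id`, and `kind` are hypothetical, and the parser assumes exactly six trailing digits, optionally separated from the project name by a space.

```python
import re

# Project name, optional whitespace, then two digits each for ss, dd, and pp.
_PATTERN = re.compile(r"^(?P<project>.*?)\s*(?P<ss>\d{2})(?P<dd>\d{2})(?P<pp>\d{2})$")


def make_id(project, scenario=0, description=0, procedure=0):
    """Build an identifier; zero means 'not associated with a particular one'."""
    for part in (scenario, description, procedure):
        if not 0 <= part <= 99:
            raise ValueError("each part must fit in two digits")
    return f"{project}{scenario:02d}{description:02d}{procedure:02d}"


def parse_id(identifier):
    """Split an identifier into (project, scenario, description, procedure)."""
    match = _PATTERN.match(identifier)
    if match is None:
        raise ValueError(f"not an Alternative 1 identifier: {identifier!r}")
    return (match["project"], int(match["ss"]), int(match["dd"]), int(match["pp"]))


def kind(identifier):
    """Classify an identifier by its lowest-level non-zero part."""
    _project, ss, dd, pp = parse_id(identifier)
    if pp:
        return "procedure"
    if dd:
        return "description"
    if ss:
        return "scenario"
    raise ValueError("identifier has no scenario, description, or procedure number")


print(make_id("ProjName", 10, 1, 1))               # ProjName100101
print(make_id("ProjName", scenario=10, procedure=1))  # ProjName100001
print(parse_id("HR Upgrade 210030"))               # ('HR Upgrade', 21, 0, 30)
print(kind("ProjName100100"))                      # description
```

Note that `make_id` concatenates the project name and digits without a space, as in the body text examples; the space shown in the HR Upgrade examples is accepted by `parse_id` but not produced.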

Alternative 2

Each test scenario, test description, and test procedure will have a reference number that uniquely identifies the scenario/description/procedure and also indicates the parent-child relationship between the two, if applicable. The naming convention consists of a numeric identifier in the scenario_description_procedure format. Using this convention, the first test scenario identified is 1_0_0. The first test description in the first scenario is identified as 1_1_0. The first test procedure in the first test description of the first scenario is identified as 1_1_1. Test procedures not associated with a scenario or description are identified as 0_0_x, where x is an assigned sequence number.

Examples of Alternative 2 are provided below. In the following illustrations:

- ProjName represents the name of the project
- s represents the portion of the identifier that references scenario
- d represents the portion of the identifier that references description
- p represents the portion of the identifier that references procedure

| Description | Naming Convention | Example |
| --- | --- | --- |
| Scenario | ProjNames_0_0 | ProjName1_0_0 |
| Description | ProjNames_d_0 | ProjName1_1_0 |
| Procedure associated with scenario and/or description | ProjNames_d_p | ProjName1_1_1 |
| Procedure not associated with scenario or description | ProjName0_0_p | ProjName0_0_1 |

Table 8. Example of Test Identifier Alternative 2
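Because Alternative 2 encodes the parent-child relationship directly in the identifier, the parent of any item can be computed from its name. The sketch below is one possible reading of the convention: `parse_alt2` and `parent_of` are hypothetical names, and treating a procedure with a scenario number but no description number (s_0_p) as a child of the scenario is an assumption.

```python
import re

# Optional project prefix followed by scenario_description_procedure numbers.
_ALT2 = re.compile(r"^(?P<project>.*?)(?P<s>\d+)_(?P<d>\d+)_(?P<p>\d+)$")


def parse_alt2(identifier):
    """Split an identifier into (project, scenario, description, procedure)."""
    match = _ALT2.match(identifier)
    if match is None:
        raise ValueError(f"not an Alternative 2 identifier: {identifier!r}")
    return (match["project"], int(match["s"]), int(match["d"]), int(match["p"]))


def parent_of(identifier):
    """Return the parent identifier, or None for scenarios and unassociated procedures."""
    project, s, d, p = parse_alt2(identifier)
    if p and d:
        return f"{project}{s}_{d}_0"  # procedure -> its description
    if (p or d) and s:
        return f"{project}{s}_0_0"    # description (or s_0_p procedure) -> its scenario
    return None


print(parent_of("ProjName1_1_1"))  # ProjName1_1_0
print(parent_of("ProjName1_1_0"))  # ProjName1_0_0
print(parent_of("ProjName1_0_0"))  # None
print(parent_of("ProjName0_0_1"))  # None
```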
