SM 1010 Introduction

1010 INTRODUCTION

1010 A. Scope and Application of Methods

The procedures described in these standards are intended for the examination of waters of a wide range of quality, including water suitable for domestic or industrial supplies, surface water, ground water, cooling or circulating water, boiler water, boiler feed water, treated and untreated municipal or industrial wastewater, and saline water. The unity of the fields of water supply, receiving water quality, and wastewater treatment and disposal is recognized by presenting methods of analysis for each constituent in a single section for all types of waters.

An effort has been made to present methods that apply generally. Where alternative methods are necessary for samples of different composition, the basis for selecting the most appropriate method is presented as clearly as possible. However, samples with extreme concentrations or otherwise unusual compositions or characteristics may present difficulties that preclude the direct use of these methods. Hence, some modification of a procedure may be necessary in specific instances. Whenever a procedure is modified, the analyst should state plainly the nature of modification in the report of results.

Certain procedures are intended for use with sludges and sediments. Here again, the effort has been to present methods of the widest possible application, but when chemical sludges or slurries or other samples of highly unusual composition are encountered, the methods of this manual may require modification or may be inappropriate.

Most of the methods included here have been endorsed by regulatory agencies. Procedural modification without formal approval may be unacceptable to a regulatory body.

The analysis of bulk chemicals received for water treatment is not included herein. A committee of the American Water Works Association prepares and issues standards for water treatment chemicals.

Part 1000 contains information that is common to, or useful in, laboratories desiring to produce analytical results of known quality, that is, of known accuracy and with known uncertainty in that accuracy. To accomplish this, apply the quality assurance methods described herein to the standard methods described elsewhere in this publication. Other sections of Part 1000 address laboratory safety, sampling procedures, and method development and validation, all of which provide necessary information.

1010 B. Statistics

1. Normal Distribution

deviation because the number of observations made is nite; the estimate of is denoted by s and is calculated as follows:

s [(x x )2/(n 1)]

1/2

If a measurement is repeated many times under essentially identical conditions, the results of each measurement, x, will be distributed randomly about a mean value (arithmetic average) because of uncontrollable or experimental error. If an innite number of such measurements were to be accumulated, the individual values would be distributed in a curve similar to those shown in Figure 1010:1. The left curve illustrates the Gaussian or normal distribution, which is described precisely by the mean, , and the standard deviation, . The mean, or average, of the distribution is simply the sum of all values divided by the number of values so summed, i.e., (ixi)/n. Because no measurements are repeated an innite number of times, an estimate of the mean is made, using the same summation procedure but with n equal to a nite number of repeated measurements (10, or 20, or. . .). This estimate of is denoted by x. The standard deviation of the normal distribution is dened as [(x)2/n]1/2. Again, the analyst can only estimate the standard

1-1

The standard deviation xes the width, or spread, of the normal distribution, and also includes a xed fraction of the values making up the curve. For example, 68.27% of the measurements lie between 1, 95.45% between 2, and 99.73% between 3. It is sufciently accurate to state that 95% of the values are within 2 and 99% within 3. When values are assigned to the multiples, they are condence limits. For example, 10 4 indicates that the condence limits are 6 and 14, while values from 6 to 14 represent the condence interval. Another useful statistic is the standard error of the mean, , which is the standard deviation divided by the square root of the number of values, or /n. This is an estimate of the accuracy

1-2

INTRODUCTION (1000)

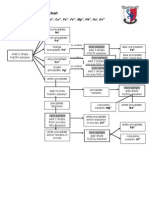

Figure 1010:1. Normal (left) and skewed (right) distributions.

of the mean and implies that another sample from the same population would have a mean within some multiple of this. Multiples of this statistic include the same fraction of the values as stated above for . In practice, a relatively small number of average values is available, so the condence intervals of the mean are expressed as x ts/n where t has the following values for 95% condence intervals:

n 2 3 4 t 12.71 4.30 3.18 n 5 10 t 2.78 2.26 1.96
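As an illustration of the confidence interval of the mean described above, the following is a minimal Python sketch, not part of the original method. The function name `summarize`, the dictionary `T_95`, and the replicate values are hypothetical; the t values are those tabulated above, keyed by the number of measurements n.

```python
import math
import statistics

# 95% t values from the table above, keyed by the number of measurements n.
# math.inf stands for the limiting case of a very large n.
T_95 = {2: 12.71, 3: 4.30, 4: 3.18, 5: 2.78, 10: 2.26, math.inf: 1.96}


def summarize(values, t=None):
    """Return the mean, s, standard error, and 95% confidence limits of the mean."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two measurements")
    if t is None:
        if n not in T_95:
            raise ValueError(f"no tabulated t for n={n}; pass t explicitly")
        t = T_95[n]
    mean = statistics.fmean(values)
    s = statistics.stdev(values)      # sample estimate, divides by n - 1
    std_err = s / math.sqrt(n)        # standard error of the mean
    half_width = t * std_err          # t * s / sqrt(n)
    return {
        "n": n,
        "mean": mean,
        "s": s,
        "std_err": std_err,
        "lower": mean - half_width,
        "upper": mean + half_width,
    }


# Hypothetical replicate results in mg/L, made up for illustration.
replicates = [10.2, 9.8, 10.5, 9.9, 10.1]
result = summarize(replicates)
print(result)
```

For these five made-up values the mean is 10.1 mg/L, s is about 0.27 mg/L, and the 95% confidence limits are roughly 9.76 and 10.44 mg/L. For values of n that are not tabulated, the sketch expects the caller to supply t from a fuller table.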

3. Rejection of Data

Quite often in a series of measurements, one or more of the results will differ greatly from the other values. Theoretically, no result should be rejected, because it may indicate either a faulty technique that casts doubt on all results or the presence of a true variant in the distribution. In practice, reject the result of any analysis in which a known error has occurred. In environmental studies, extremely high and low concentrations of contaminants may indicate the existence of areas with problems or areas with no contamination, so they should not be rejected arbitrarily.

The use of t compensates for the tendency of a small number of values to underestimate uncertainty. For n 15, it is common to use t 2 to estimate the 95% condence interval. Still another statistic is the relative standard deviation, /, with its estimate s/ x, also known as the coefcient of variation (CV), which commonly is expressed as a percentage. This statistic normalizes the standard deviation and sometimes facilitates making direct comparisons among analyses that include a wide range of concentrations. For example, if analyses at low concentrations yield a result of 10 1.5 mg/L and at high concentrations 100 8 mg/L, the standard deviations do not appear comparable. However, the percent relative standard deviations are 100 (1.5/10) 15% and 100 (8/100) 8%, which indicate the smaller variability obtained by using this parameter.

2. Log-Normal Distribution

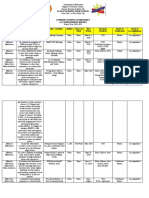

TABLE 1010:I. CRITICAL VALUES FOR 5% AND 1% TESTS OF DISCORDANCY FOR A SINGLE OUTLIER IN A NORMAL SAMPLE Number of Measurements n 3 4 5 6 7 8 9 10 12 14 15 16 18 20 30 40 50 60 100 120 Critical Value 5% 1.15 1.46 1.67 1.82 1.94 2.03 2.11 2.18 2.29 2.37 2.41 2.44 2.50 2.56 2.74 2.87 2.96 3.03 3.21 3.27 1% 1.15 1.49 1.75 1.94 2.10 2.22 2.32 2.41 2.55 2.66 2.71 2.75 2.82 2.88 3.10 3.24 3.34 3.41 3.60 3.66

In many cases the results obtained from analysis of environmental samples will not be normally distributed, i.e., a graph of the data will be obviously skewed, as shown at right in Figure 1010:1, with the mode, median, and mean being distinctly different. To obtain a nearly normal distribution, convert the results to logarithms and then calculate xand s. The antilogarithms of these two values are estimates of the geometric mean and the geometric standard deviation, xg and sg.

Source: BARNETT, V. & T. LEWIS. 1984. Outliers in Statistical Data. John Wiley & Sons, New York, N.Y.
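Two statistics from the preceding subsections, the percent relative standard deviation and the geometric mean and geometric standard deviation, can be sketched in Python as follows. This is an illustrative sketch only: the function names and the skewed data set are hypothetical, and base-10 logarithms are an assumption, since the text does not specify a base (the geometric mean and geometric standard deviation do not depend on the base chosen).

```python
import math
import statistics


def percent_rsd(mean, s):
    """Relative standard deviation (coefficient of variation) as a percentage."""
    return 100.0 * s / mean


def geometric_stats(values):
    """Geometric mean and geometric standard deviation via a log transform."""
    if len(values) < 2 or any(v <= 0 for v in values):
        raise ValueError("need at least two strictly positive values")
    logs = [math.log10(v) for v in values]   # assumed base 10
    log_mean = statistics.fmean(logs)        # mean of the logarithms
    log_s = statistics.stdev(logs)           # s of the logarithms (n - 1)
    return 10 ** log_mean, 10 ** log_s       # antilogarithms


# The two cases from the text: 10 +/- 1.5 mg/L and 100 +/- 8 mg/L.
low_rsd = percent_rsd(10, 1.5)
high_rsd = percent_rsd(100, 8)
print(low_rsd, high_rsd)

# Hypothetical skewed results, made up for illustration.
skewed = [1.0, 10.0, 100.0]
x_g, s_g = geometric_stats(skewed)
print(x_g, s_g)
```

The first two calls reproduce the 15% and 8% figures from the worked example in the text. The geometric standard deviation is a multiplicative factor, so it is applied by multiplying and dividing the geometric mean rather than by adding and subtracting.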


An objective test for outliers has been described.¹ If a set of data is ordered from low to high: $x_L, x_2 \ldots x_H$, and the average and standard deviation are calculated, then suspected high or low outliers can be tested by the following procedure. First, calculate the statistic $T$:

$$T = (x_H - \bar{x})/s \text{ for a high value, or } T = (\bar{x} - x_L)/s \text{ for a low value.}$$

Second, compare the value of $T$ with the value from Table 1010:I for either a 5% or 1% level of significance. If the calculated $T$ is larger than the table value for the number of measurements, $n$, then the $x_H$ or $x_L$ is an outlier at that level of significance.

Further information on statistical techniques is available elsewhere.²,³

4. References

1. BARNETT, V. & T. LEWIS. 1984. Outliers in Statistical Data. John Wiley & Sons, New York, N.Y.
2. NATRELLA, M.G. 1963. Experimental Statistics. National Bur. Standards Handbook 91, Washington, D.C.
3. SNEDECOR, G.W. & W.G. COCHRAN. 1980. Statistical Methods. Iowa State University Press, Ames.
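The two-step outlier test described in 1010 B.3 (calculate T, then compare it with Table 1010:I) can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the function name `discordancy_test`, the dictionary `CRITICAL_T`, and the sample data are hypothetical, and the sketch handles only the values of n that the table lists.

```python
import statistics

# Critical values transcribed from Table 1010:I: n -> (5% value, 1% value).
CRITICAL_T = {
    3: (1.15, 1.15), 4: (1.46, 1.49), 5: (1.67, 1.75), 6: (1.82, 1.94),
    7: (1.94, 2.10), 8: (2.03, 2.22), 9: (2.11, 2.32), 10: (2.18, 2.41),
    12: (2.29, 2.55), 14: (2.37, 2.66), 15: (2.41, 2.71), 16: (2.44, 2.75),
    18: (2.50, 2.82), 20: (2.56, 2.88), 30: (2.74, 3.10), 40: (2.87, 3.24),
    50: (2.96, 3.34), 60: (3.03, 3.41), 100: (3.21, 3.60), 120: (3.27, 3.66),
}


def discordancy_test(values, level="5%"):
    """Test the highest and lowest values against Table 1010:I."""
    if level not in ("5%", "1%"):
        raise ValueError("level must be '5%' or '1%'")
    n = len(values)
    if n not in CRITICAL_T:
        raise ValueError(f"n={n} is not tabulated in Table 1010:I")
    mean = statistics.fmean(values)
    s = statistics.stdev(values)
    if s == 0:
        raise ValueError("all values are identical; T is undefined")
    ordered = sorted(values)                  # low to high: x_L ... x_H
    t_high = (ordered[-1] - mean) / s         # T for the high value
    t_low = (mean - ordered[0]) / s           # T for the low value
    critical = CRITICAL_T[n][0 if level == "5%" else 1]
    return {
        "t_high": t_high,
        "t_low": t_low,
        "critical": critical,
        "high_is_outlier": t_high > critical,
        "low_is_outlier": t_low > critical,
    }


# Hypothetical results in mg/L, made up for illustration.
data = [10.1, 10.3, 9.9, 10.2, 10.0, 14.0]
outcome = discordancy_test(data, level="5%")
print(outcome)
```

For this made-up set of six values, T for the high value is about 2.03, which exceeds the 5% critical value of 1.82, so 14.0 would be flagged at that level. As the text cautions, a flagged value should not be rejected arbitrarily; the test only indicates discordancy at the chosen level of significance.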

1010 C. Glossary

1. Definition of Terms

The purpose of this glossary is to define concepts, not regulatory terms; it is not intended to be all-inclusive.

Accuracy: combination of bias and precision of an analytical procedure, which reflects the closeness of a measured value to a true value.

Bias: consistent deviation of measured values from the true value, caused by systematic errors in a procedure.

Calibration check standard: standard used to determine the state of calibration of an instrument between periodic recalibrations.

Confidence coefficient: the probability, %, that a measurement result will lie within the confidence interval or between the confidence limits.

Confidence interval: set of possible values within which the true value will lie with a specified level of probability.

Confidence limit: one of the boundary values defining the confidence interval.

Detection levels: Various levels in increasing order are:

- Instrumental detection level (IDL): the constituent concentration that produces a signal greater than five times the signal/noise ratio of the instrument. This is similar, in many respects, to critical level and criterion of detection. The latter level is stated as 1.645 times the s of blank analyses.
- Lower level of detection (LLD): the constituent concentration in reagent water that produces a signal 2(1.645)s above the mean of blank analyses. This sets both Type I and Type II errors at 5%. Other names for this level are detection level and level of detection (LOD).
- Method detection level (MDL): the constituent concentration that, when processed through the complete method, produces a signal with a 99% probability that it is different from the blank. For seven replicates of the sample, the mean must be 3.14s above the blank, where s is the standard deviation of the seven replicates. Compute MDL from replicate measurements one to five times the actual MDL. The MDL will be larger than the LLD because of the few replications and the sample processing steps and may vary with constituent and matrix.
- Level of quantitation (LOQ)/minimum quantitation level (MQL): the constituent concentration that produces a signal sufficiently greater than the blank that it can be detected within specified levels by good laboratories during routine operating conditions. Typically it is the concentration that produces a signal 10s above the reagent water blank signal.

Duplicate: usually the smallest number of replicates (two) but specifically herein refers to duplicate samples, i.e., two samples taken at the same time from one location.

Internal standard: a pure compound added to a sample extract just before instrumental analysis to permit correction for inefficiencies.

Laboratory control standard: a standard, usually certified by an outside agency, used to measure the bias in a procedure. For certain constituents and matrices, use National Institute of Standards and Technology (NIST) Standard Reference Materials when they are available.

Precision: measure of the degree of agreement among replicate analyses of a sample, usually expressed as the standard deviation.

Quality assessment: procedure for determining the quality of laboratory measurements by use of data from internal and external quality control measures.

Quality assurance: a definitive plan for laboratory operation that specifies the measures used to produce data of known precision and bias.

Quality control: set of measures within a sample analysis methodology to assure that the process is in control.

Random error: the deviation in any step in an analytical procedure that can be treated by standard statistical techniques.

Replicate: repeated operation occurring within an analytical procedure. Two or more analyses for the same constituent in an extract of a single sample constitute replicate extract analyses.

Surrogate standard: a pure compound added to a sample in the laboratory just before processing so that the overall efficiency of a method can be determined.

Type I error: also called alpha error, is the probability of deciding a constituent is present when it actually is absent.

Type II error: also called beta error, is the probability of not detecting a constituent when it actually is present.
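The multipliers quoted under Detection levels in this glossary can be collected into a short Python sketch. It is illustrative only and is not a regulatory procedure: the function names and the standard deviations in the example are hypothetical, each function returns the signal increment above the blank rather than a concentration, and the 3.14 factor is the one the glossary gives for seven replicates.

```python
def criterion_of_detection(s_blank):
    """Signal above the blank at the criterion of detection: 1.645 * s."""
    return 1.645 * s_blank


def lower_level_of_detection(s_blank):
    """Signal above the mean blank at the LLD: 2 * 1.645 * s."""
    return 2 * 1.645 * s_blank


def method_detection_level(s_replicates):
    """Signal above the blank at the MDL for seven replicates: 3.14 * s."""
    return 3.14 * s_replicates


def level_of_quantitation(s_blank):
    """Signal above the reagent water blank at the LOQ: 10 * s."""
    return 10 * s_blank


# Hypothetical standard deviations in signal units, made up for illustration.
# s_replicates is taken as larger than s_blank because the MDL replicates pass
# through the complete method, including the sample processing steps.
s_blank = 0.02
s_replicates = 0.03

lld = lower_level_of_detection(s_blank)
mdl = method_detection_level(s_replicates)
loq = level_of_quantitation(s_blank)
print(criterion_of_detection(s_blank), lld, mdl, loq)
```

With these made-up inputs the MDL increment exceeds the LLD increment only because a larger s was assumed for the processed replicates, which is consistent with the glossary's remark that the MDL will be larger than the LLD.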
