Stochastics

Uploaded by

Jaime1998

In probability theory, a stochastic process (/stoʊˈkæstɪk/), or sometimes random process (a widely used synonym), is a collection of random variables; it is often used to represent the evolution of some random value, or system, over time. It is the probabilistic counterpart to a deterministic process (or deterministic system). Instead of describing a process which can only evolve in one way (as in the case, for example, of solutions of an ordinary differential equation), in a stochastic or random process there is some indeterminacy: even if the initial condition (or starting point) is known, there are several (often infinitely many) directions in which the process may evolve.

In the simple case of discrete time, as opposed to continuous time, a stochastic process involves a sequence of random variables and the time series associated with these random variables (for example, see Markov chain, also known as discrete-time Markov chain). Another basic type of stochastic process is a random field, whose domain is a region of space; in other words, a random function whose arguments are drawn from a range of continuously changing values.

One approach to stochastic processes treats them as functions of one or several deterministic arguments (inputs, in most cases regarded as time) whose values (outputs) are random variables: non-deterministic (single) quantities which have certain probability distributions. Random variables corresponding to various times (or points, in the case of random fields) may be completely different. The main requirement is that these different random quantities all have the same type, where type refers to the codomain of the function. Although the random values of a stochastic process at different times may be independent random variables, in most commonly considered situations they exhibit complicated statistical correlations.
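The indeterminacy described above can be made concrete with a small simulation. The following is a minimal sketch, assuming a simple symmetric random walk as the discrete-time process; the function name `simple_random_walk` and the choice of seeds are illustrative and not part of the original text. Every path starts from the same initial condition, yet each seed produces a different evolution.

```python
import random


def simple_random_walk(n_steps, start=0, rng=None):
    """Return one sample path X_0, ..., X_n of a simple symmetric random walk."""
    rng = rng or random.Random()
    path = [start]
    for _ in range(n_steps):
        # Each increment is an independent step of +1 or -1 with equal probability.
        path.append(path[-1] + rng.choice((-1, 1)))
    return path


# Same starting point, different random seeds: the paths generally differ.
for seed in (1, 2, 3):
    print(seed, simple_random_walk(10, start=0, rng=random.Random(seed)))
```

Each printed list is one realization (sample sequence) of the process; the collection of random variables X_0, ..., X_10 is the process itself.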
Familiar examples of processes modeled as stochastic time series include stock market and exchange rate fluctuations, signals such as speech, audio and video, medical data such as a patient's EKG, EEG, blood pressure or temperature, and random movement such as Brownian motion or random walks. Examples of random fields include static images, random terrain (landscapes), wind waves or composition variations of a heterogeneous material.

Formal definition and basic properties

Definition

Given a probability space $(\Omega, \mathcal{F}, P)$ and a measurable space $(S, \Sigma)$, an $S$-valued stochastic process is a collection of $S$-valued random variables on $\Omega$, indexed by a totally ordered set $T$ ("time"). That is, a stochastic process $X$ is a collection $\{X_t : t \in T\}$ where each $X_t$ is an $S$-valued random variable on $\Omega$. The space $S$ is then called the state space of the process.

Finite-dimensional distributions

Let $X$ be an $S$-valued stochastic process. For every finite sequence $T' = (t_1, \ldots, t_k) \in T^k$, the $k$-tuple $X_{T'} = (X_{t_1}, X_{t_2}, \ldots, X_{t_k})$ is a random variable taking values in $S^k$. The distribution $P_{T'}(\cdot) = P(X_{T'}^{-1}(\cdot))$ of this random variable is a probability measure on $S^k$. This is called a finite-dimensional distribution of $X$.

Under suitable topological restrictions, a suitably "consistent" collection of finite-dimensional distributions can be used to define a stochastic process (see Kolmogorov extension in the next section).

History of stochastic processes

Stochastic processes were first studied rigorously in the late 19th century to aid in understanding financial markets and Brownian motion. The first person to describe the mathematics behind Brownian motion was Thorvald N. Thiele in a paper on the method of least squares published in 1880. This was followed independently by Louis Bachelier in 1900 in his PhD thesis "The theory of speculation", in which he presented a stochastic analysis of the stock and option markets. Albert Einstein (in one of his 1905 papers) and Marian Smoluchowski (1906) brought the solution of the problem to the attention of physicists, and presented it as a way to indirectly confirm the existence of atoms and molecules. Their equations describing Brownian motion were subsequently verified by the experimental work of Jean Baptiste Perrin in 1908.

An excerpt from Einstein's paper describes the fundamentals of a stochastic model:

"It must clearly be assumed that each individual particle executes a motion which is independent of the motions of all other particles; it will also be considered that the movements of one and the same particle in different time intervals are independent processes, as long as these time intervals are not chosen too small. We introduce a time interval $\tau$ into consideration, which is very small compared to the observable time intervals, but nevertheless so large that in two successive time intervals $\tau$, the motions executed by the particle can be thought of as events which are independent of each other".

Construction

In the ordinary axiomatization of probability theory by means of measure theory, the problem is to construct a sigma-algebra of measurable subsets of the space of all functions, and then put a finite measure on it. For this purpose one traditionally uses a method called Kolmogorov extension.[1]

There is at least one alternative axiomatization of probability theory by means of expectations on C*-algebras of random variables. In this case the method goes by the name of Gelfand-Naimark-Segal construction.

This is analogous to the two approaches to measure and integration, where one has the choice to construct measures of sets first and define integrals later, or construct integrals first and define set measures as integrals of characteristic functions.

Kolmogorov extension

The Kolmogorov extension proceeds along the following lines: assuming that a probability measure on the space of all functions $f$ exists, it can be used to specify the joint probability distribution of the finite-dimensional random variables $f(x_1), \dots, f(x_n)$. Now, from this $n$-dimensional probability distribution we can deduce an $(n-1)$-dimensional marginal probability distribution for $f(x_1), \dots, f(x_{n-1})$. Note that the obvious compatibility condition, namely, that this marginal probability distribution be in the same class as the one derived from the full-blown stochastic process, is not a requirement. Such a condition only holds, for example, if the stochastic process is a Wiener process (in which case the marginals are all Gaussian distributions of the exponential class) but not in general for all stochastic processes. When this condition is expressed in terms of probability densities, the result is called the Chapman-Kolmogorov equation.

The Kolmogorov extension theorem guarantees the existence of a stochastic process with a given family of finite-dimensional probability distributions satisfying the Chapman-Kolmogorov compatibility condition.
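The compatibility of marginals can be checked by hand for the Wiener process. The sketch below relies on two standard facts that are not stated in the text above: the finite-dimensional distributions of a standard Wiener process are zero-mean Gaussians with covariance Cov(W_s, W_t) = min(s, t), and integrating one coordinate out of a zero-mean Gaussian vector amounts to deleting the corresponding row and column of its covariance matrix. The helper names `wiener_cov` and `marginalize` are hypothetical.

```python
def wiener_cov(times):
    """Covariance matrix of (W_t1, ..., W_tn) for a standard Wiener process."""
    return [[min(s, t) for t in times] for s in times]


def marginalize(cov, drop):
    """Covariance of a zero-mean Gaussian vector after integrating out coordinate `drop`."""
    return [
        [value for j, value in enumerate(row) if j != drop]
        for i, row in enumerate(cov)
        if i != drop
    ]


times = [0.5, 1.0, 2.0]
full = wiener_cov(times)

# Dropping any time point from the 3-dimensional distribution must reproduce
# the 2-dimensional distribution built directly on the remaining time points.
for k in range(len(times)):
    remaining = times[:k] + times[k + 1:]
    assert marginalize(full, k) == wiener_cov(remaining)
print("marginals are consistent for", times)
```

A family of finite-dimensional distributions that passes this kind of check for every finite set of times is the input the Kolmogorov extension theorem requires.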

Separability, or what the Kolmogorov extension does not provide

Recall that in the Kolmogorov axiomatization, measurable sets are the sets which have a probability or, in other words, the sets corresponding to yes/no questions that have a probabilistic answer.

The Kolmogorov extension starts by declaring to be measurable all sets of functions where finitely many coordinates $[f(x_1), \dots, f(x_n)]$ are restricted to lie in measurable subsets of the $n$-fold product of the state space. In other words, if a yes/no question about $f$ can be answered by looking at the values of at most finitely many coordinates, then it has a probabilistic answer.

In measure theory, if we have a countably infinite collection of measurable sets, then the union and intersection of all of them are measurable sets. For our purposes, this means that yes/no questions that depend on countably many coordinates have a probabilistic answer.

The good news is that the Kolmogorov extension makes it possible to construct stochastic processes with fairly arbitrary finite-dimensional distributions. Also, every question that one could ask about a sequence has a probabilistic answer when asked of a random sequence. The bad news is that certain questions about functions on a continuous domain don't have a probabilistic answer. One might hope that the questions that depend on uncountably many values of a function would be of little interest, but the really bad news is that virtually all concepts of calculus are of this sort. For example:

1. boundedness
2. continuity
3. differentiability

all require knowledge of uncountably many values of the function.

One solution to this problem is to require that the stochastic process be separable. In other words, that there be some countable set of coordinates whose values determine the whole random function $f$.

The Kolmogorov continuity theorem guarantees that processes that satisfy certain constraints on the moments of their increments have continuous modifications and are therefore separable.
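For reference, the moment constraint in the Kolmogorov continuity theorem is commonly stated as below, followed by a check that the Wiener process satisfies it. This is a sketch of the usual textbook formulation; the symbols $\alpha$, $\beta$, $K$ and the time horizon $T_0$ are notation introduced here, not taken from the text above.

```latex
% Hypothesis: there exist constants \alpha, \beta, K > 0 such that
\[
  \mathbb{E}\,\lvert X_t - X_s \rvert^{\alpha} \;\le\; K\,\lvert t - s \rvert^{1+\beta}
  \qquad \text{for all } s, t \in [0, T_0].
\]
% Conclusion: X has a modification whose sample paths are continuous.

% Check for the Wiener process W: the increment W_t - W_s is Gaussian with
% mean 0 and variance |t - s|, and a centred Gaussian with variance
% \sigma^2 has fourth moment 3\sigma^4, so
\[
  \mathbb{E}\,\lvert W_t - W_s \rvert^{4} \;=\; 3\,\lvert t - s \rvert^{2},
\]
% which is the hypothesis with \alpha = 4, \beta = 1, K = 3.
```

Using the second moment instead would give only $\lvert t - s \rvert^{1}$, that is $\beta = 0$, which is why the fourth moment is the usual choice for this check.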
Filtrations

Given a probability space $(\Omega, \mathcal{F}, P)$, a filtration is a weakly increasing collection of sigma-algebras on $\Omega$, $\{\mathcal{F}_t : t \in T\}$, indexed by some totally ordered set $T$, and bounded above by $\mathcal{F}$, i.e. for $s, t \in T$ with $s < t$, $\mathcal{F}_s \subseteq \mathcal{F}_t \subseteq \mathcal{F}$.

A stochastic process $X$ on the same time set $T$ is said to be adapted to the filtration if, for every $t \in T$, $X_t$ is $\mathcal{F}_t$-measurable.[2]

Natural filtration

Given a stochastic process $X = \{X_t : t \in T\}$, the natural filtration for (or induced by) this process is the filtration where $\mathcal{F}_t$ is generated by all values of $X_s$ up to time $s = t$, i.e. $\mathcal{F}_t = \sigma(\{X_s^{-1}(A) : s \le t, A \in \Sigma\})$.

A stochastic process is always adapted to its natural filtration.

Classification

Stochastic processes can be classified according to the cardinality of their index set (usually interpreted as time) and state space.

Discrete time and discrete state space

If both $t$ and $X_t$ belong to $\mathbb{N}$, the set of natural numbers, then we have models which lead to Markov chains. For example:

(a) If $X_t$ means the bit (0 or 1) in position $t$ of a sequence of transmitted bits, then $X_t$ can be modelled as a Markov chain with two states. This leads to the error-correcting Viterbi algorithm in data transmission.

(b) If $X_t$ means the combined genotype of a breeding couple in the $t$th generation in an inbreeding model, it can be shown that the proportion of heterozygous individuals in the population approaches zero as $t$ goes to $\infty$.[3]

Continuous time and continuous state space

The paradigm of a continuous stochastic process is the Wiener process. In its original form the problem was concerned with a particle floating on a liquid surface, receiving "kicks" from the molecules of the liquid. The particle is then viewed as being subject to a random force which, since the molecules are very small and very close together, is treated as being continuous and, since the particle is constrained to the surface of the liquid by surface tension, is at each point in time a vector parallel to the surface. Thus, the random force is described by a two-component stochastic process: two real-valued random variables are associated to each point in the index set, time, each taking values in $\mathbb{R}$ and giving the $x$ and $y$ components of the force. (Since the liquid is viewed as being homogeneous, the force is independent of the spatial coordinates.) A treatment of Brownian motion generally also includes the effect of viscosity, resulting in an equation of motion known as the Langevin equation.[4]

Discrete time and continuous state space

If the index set of the process is $\mathbb{N}$ (the natural numbers), and the range is $\mathbb{R}$ (the real numbers), there are some natural questions to ask about the sample sequences of a process $\{X_i\}_{i \in \mathbb{N}}$, where a sample sequence is $\{X_i(\omega)\}_{i \in \mathbb{N}}$.

1. What is the probability that each sample sequence is bounded?
2. What is the probability that each sample sequence is monotonic?
3. What is the probability that each sample sequence has a limit as the index approaches $\infty$?
4. What is the probability that the series obtained from a sample sequence from $f(X_i)$ converges?
5. What is the probability distribution of the sum?

Main applications of discrete time continuous state stochastic models include Markov chain Monte Carlo (MCMC) and the analysis of time series.

Continuous time and discrete state space

Similarly, if the index space $I$ is a finite or infinite interval, we can ask about the sample paths $\{X_t(\omega)\}_{t \in I}$:

1. What is the probability that it is bounded/integrable...?
2. What is the probability that it has a limit at $\infty$?
3. What is the probability distribution of the integral?
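Questions like these about sample paths on an interval can be explored numerically. The sketch below looks at the last one, the distribution of the integral, using the Wiener process from the earlier section as the example (it has a continuous rather than discrete state space, but the question is posed the same way). It assumes a discretized path on [0, 1] and a trapezoidal rule; the function names, step count, sample size and seed are illustrative choices. A standard result, not derived in the text, is that the integral of a standard Wiener process over [0, 1] is Gaussian with mean 0 and variance 1/3, so the empirical values should land near those numbers.

```python
import math
import random
import statistics


def brownian_path(n_steps, t_max, rng):
    """Sample W at n_steps + 1 equally spaced times on [0, t_max], with W_0 = 0."""
    dt = t_max / n_steps
    path = [0.0]
    for _ in range(n_steps):
        # Increments over disjoint intervals are independent N(0, dt) variables.
        path.append(path[-1] + rng.gauss(0.0, math.sqrt(dt)))
    return path


def path_integral(path, t_max):
    """Trapezoidal approximation of the integral of a sampled path over [0, t_max]."""
    dt = t_max / (len(path) - 1)
    return dt * (sum(path) - 0.5 * (path[0] + path[-1]))


rng = random.Random(0)
samples = [path_integral(brownian_path(200, 1.0, rng), 1.0) for _ in range(2000)]

# Monte Carlo estimates; compare against mean 0 and variance 1/3.
print("empirical mean:    ", statistics.fmean(samples))
print("empirical variance:", statistics.pvariance(samples))
```

The same pattern (simulate many paths, evaluate a functional of each path, summarize the results) applies to the boundedness and limit questions, with the caveat from the separability section that a discretized path only ever inspects finitely many coordinates.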

You might also like

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5795)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1091)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Effect of Brownian Motion and Noise Strength On Solutions of Sto 2023 OpDocument15 pagesThe Effect of Brownian Motion and Noise Strength On Solutions of Sto 2023 Oppepito perezNo ratings yet

- UT Dallas Syllabus For Stat6329.501.11f Taught by Sam Efromovich (Sxe062000)Document6 pagesUT Dallas Syllabus For Stat6329.501.11f Taught by Sam Efromovich (Sxe062000)UT Dallas Provost's Technology GroupNo ratings yet

- Acteduk Ct6 Hand Qho v04Document58 pagesActeduk Ct6 Hand Qho v04ankitag612No ratings yet

- (Lecture Notes in Engineering 21) B. F. Spencer Jr. (Auth.) - Reliability of Randomly Excited Hysteretic Structures-Springer-Verlag Berlin Heidelberg (1986)Document151 pages(Lecture Notes in Engineering 21) B. F. Spencer Jr. (Auth.) - Reliability of Randomly Excited Hysteretic Structures-Springer-Verlag Berlin Heidelberg (1986)JUAN RULFONo ratings yet

- Markov ChainsDocument91 pagesMarkov Chainscreativechand100% (7)

- Hidraulica Aplicada Hec Ras Flujo UniformeDocument316 pagesHidraulica Aplicada Hec Ras Flujo UniformeElmer Freddy Torrico RodriguezNo ratings yet

- Mathematics of Financial Derivatives: An IntroductionDocument15 pagesMathematics of Financial Derivatives: An IntroductionVinh PhanNo ratings yet

- Thesis PDFDocument167 pagesThesis PDFMandeep kaurNo ratings yet

- Mat 8Document32 pagesMat 8drjjpNo ratings yet

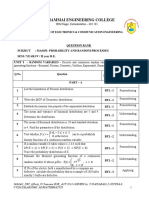

- 4 Ece Ma2261 Rp-IvDocument4 pages4 Ece Ma2261 Rp-IvBIBIN CHIDAMBARANATHANNo ratings yet

- Wood StructuresDocument390 pagesWood Structuresvladimir071No ratings yet

- Nakagami Distribution: Probability Density FunctionDocument7 pagesNakagami Distribution: Probability Density FunctionHani TaHaNo ratings yet

- Intermittent Demand ForecastingDocument6 pagesIntermittent Demand ForecastingagungbijaksanaNo ratings yet

- Poisson ProcessDocument10 pagesPoisson ProcessDaniel MwanikiNo ratings yet

- Lyapunov Functionals and Stability of Stochastic Functional Differential Equations PDFDocument351 pagesLyapunov Functionals and Stability of Stochastic Functional Differential Equations PDFAnonymous bZtJlFvPtp100% (1)

- MA6451-Probability and Random ProcessesDocument19 pagesMA6451-Probability and Random ProcessesmohanNo ratings yet

- Modul 7 MatrikDocument10 pagesModul 7 MatrikAida Nur FadhilahNo ratings yet

- Pottier 2010 NonEquilPhysicsDocument512 pagesPottier 2010 NonEquilPhysicsYin ChenNo ratings yet

- VTU EC 6TH SEM SyllabusDocument35 pagesVTU EC 6TH SEM Syllabuskeerthans_1No ratings yet

- Final 6711 F10Document4 pagesFinal 6711 F10Songya PanNo ratings yet

- StrurelDocument6 pagesStrurelamela0809No ratings yet

- Math 4511Document11 pagesMath 4511Solutions MasterNo ratings yet

- Liu Columbia 0054D 10924 PDFDocument148 pagesLiu Columbia 0054D 10924 PDF6doitNo ratings yet

- MartingalesDocument40 pagesMartingalesSidakpal Singh SachdevaNo ratings yet

- Process Analysis by Statistical Methods D. HimmelblauDocument474 pagesProcess Analysis by Statistical Methods D. HimmelblauSofia Mac Rodri100% (4)

- VolatilityDocument280 pagesVolatilityRAMESHBABU100% (1)

- Calcul Sochastique en Finance: Peter Tankov Peter - Tankov@polytechnique - Edu Nizar Touzi Nizar - Touzi@polytechnique - EduDocument238 pagesCalcul Sochastique en Finance: Peter Tankov Peter - Tankov@polytechnique - Edu Nizar Touzi Nizar - Touzi@polytechnique - EduordicompuuterNo ratings yet

- Control Engineering and Finance Lecture Notes in Control and Information Sciences PDFDocument312 pagesControl Engineering and Finance Lecture Notes in Control and Information Sciences PDFWui Kiong Ho100% (3)

- TS PartIIDocument50 pagesTS PartIIأبوسوار هندسةNo ratings yet

- Random Signals NotesDocument34 pagesRandom Signals NotesRamyaKogantiNo ratings yet