Documetatie Metaio

Uploaded by

Morena Rotarescu

http://docs.metaio.com/bin/view/Main/UnifeyeDesignSystemComponents

Unifeye graphical user interface

Using the Unifeye Design graphical user interface you can create, save, load and edit AR scenarios (called scenes) consisting of:

an image source (camera, video file, etc.)

3D/multimedia content

a tracking configuration (which defines the reference to the real world as detected by the image source)

a camera calibration

It can also be used for the playback of workflows created with the Workflow authoring tool.

You can start the Unifeye Design user interface via the corresponding Windows Start Menu entry. After startup, the Unifeye Design GUI comes up with an empty AR scene:

The standard user interface is divided into four different areas:

1. A menu and toolbar at the top

2. A big window in the middle containing the renderer window with the AR view

3. A toolbox on the right containing tools for editing your AR scene

4. A media player/image source selection tool at the bottom.

For an in-depth explanation of the Unifeye Design graphical user interface, please refer to this article.

You can now start working by loading one of the example scenes provided with the Unifeye Design installation package: select File -> Open or click the corresponding icon. The example scenes are located in the folder /examples/scenes. A scene has the file extension .scef. After loading the example scene, activate your webcam and put the corresponding tracking pattern or tracking reference image into the camera view. The tracking patterns are available as a PDF file via the Start Menu entry of Unifeye Design, in the submenu Example scenes. For a detailed description of the provided example scenes, please refer to this article.

Image Sources

With Augmented Reality technology, the real environment is expanded with virtual content, that is, virtual objects visualized within the real environment. Different so-called image sources can be used to capture the real environment. In Unifeye Design this can be a photograph, a video or a live camera.

Photo

Unifeye Design lets you load photos into the render window and then superimpose virtual objects on them. Supported image formats are .jpg and .bmp. If a folder contains multiple images, they are shown in the thumbnail browser at the bottom of Unifeye Design once the user loads the first image from that folder. The user can switch back and forth between the images.
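The thumbnail behaviour described above can be approximated outside the tool to preview which photos would show up. This is only a sketch: the function names `is_supported` and `sibling_images` are hypothetical and not part of Unifeye Design, and the extension filter is taken from the two formats named in the text.

```python
from pathlib import Path

# Image formats named in the text as supported photo sources.
SUPPORTED_EXTENSIONS = {".jpg", ".bmp"}


def is_supported(filename):
    """Return True if the file name has one of the supported extensions."""
    return Path(filename).suffix.lower() in SUPPORTED_EXTENSIONS


def sibling_images(first_image):
    """List all supported images in the folder of the first loaded image."""
    folder = Path(first_image).parent
    return sorted(
        p for p in folder.iterdir() if p.is_file() and is_supported(p.name)
    )
```

Calling `sibling_images` with the path of one photo returns every .jpg and .bmp file stored next to it, sorted by name.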

Photo-based AR scenarios can be used, for example, for space or facility planning in exhibition stand construction or architecture. Marker-based tracking should usually be used with photo-based AR, as it allows reliable detection of the tracking pattern in the photo even if the pattern is placed relatively far away from the camera. Planar markerless tracking, in contrast, is optimized for live camera mode.

Please note that in general a high-resolution image (4 megapixels or more) together with a camera calibration is recommended to allow a proper overlay.

Unifeye Design ships with sample image files for marker tracking in the folder:

<UnifeyeDesignInstallationFolder>/examples/images.

Video

Just like images, video data can also serve as an image source. Unifeye Design supports the formats .avi and .mpg/.mpeg. In the lower left area of the Unifeye Design user interface you will find a video player with basic features such as play, pause and stop. Video-based AR scenarios are useful if a live visualization on the spot does not make sense or is not possible. They allow you to integrate 3D animation into recorded footage of a live scene afterwards.

Please note that in order to use a video file, the corresponding video codec must be installed on your system. The codec must also not be protected by digital rights management and must allow access to the raw image frames of the video.

Unifeye Design ships with a sample video file in the folder:

<UnifeyeDesignInstallationFolder>/examples/videos.

Live camera

Using a live video image from a PC camera (typically a USB/FireWire webcam) offers the greatest flexibility and is the most common AR scenario (for example, a live product presentation in combination with a print catalog or product packaging). Unifeye Design supports every camera that has a proper Microsoft Windows DirectShow/Video for Windows driver (which most of today's webcams have). You can also connect camcorders and other image grabbing devices as long as you use a suitable frame grabber card with matching Windows drivers. DV camcorders can usually be connected directly to the FireWire port of your computer. If you are planning to use an HD camera, please contact support for more details, as this usually requires specific hardware.

To start a camera, click the icon for the camera image source at the bottom of the Unifeye Design GUI. This shows a list of connected cameras in the thumbnail browser. You can now start a camera by double-clicking it. Please note that a camera cannot be used by two applications at the same time. Before activating the camera, make sure that it is connected to the PC but not in use by any other application (otherwise you will get an error message).

After starting the camera you can alter the camera properties using two dialogs provided by the camera manufacturer:

The left button usually opens the general camera properties, with an interface for changing brightness, gain, shutter, exposure and so on. Please note that this dialog depends on the camera driver and differs from camera to camera. The right button ("XY fps") opens a dialog for changing the camera resolution and frame rate. This dialog also depends on the camera driver.

For a proper AR experience and tracking performance, a resolution of 640x480 at 25 or 30 frames per second is recommended. Increasing the resolution can give you a better image and more stable tracking, but it also impacts system performance, as a bigger image has to be tracked and visualized in real time. Please note that webcams usually start at 640x480 at 15 fps.

If you are experiencing a low frame rate, there are several possible causes and remedies:

1. The shutter/exposure time of the camera may be too long. Adjust it in the general settings dialog of your camera. If, for example, the exposure is set to 1/15 sec., the camera can't deliver more than 15 frames per second, even if the frames-per-second dialog indicates a higher value.

2. The frame rate delivered by the camera may be set too low. Adjust the frames per second in the camera dialog. A standard webcam should be capable of delivering 30 fps at 640x480.

3. The resolution might be too high and the PC not powerful enough to process images at that resolution. Try lowering the resolution delivered by the camera.

4. The 3D models shown may contain too many polygons and thus impact rendering and overall system performance. Try to reduce your 3D content (see also 3D content preparation).
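The relation between exposure time and frame rate in point 1, and the processing load in point 3, can be checked with simple arithmetic. The following is a minimal sketch; the function names are illustrative and do not correspond to any Unifeye Design or camera driver API.

```python
def max_fps_for_exposure(exposure_seconds):
    """Upper bound on the frame rate: one frame needs at least one exposure."""
    return 1.0 / exposure_seconds


def effective_fps(requested_fps, exposure_seconds):
    """Frame rate the camera can actually reach for a requested setting."""
    return min(requested_fps, max_fps_for_exposure(exposure_seconds))


def pixels_per_second(width, height, fps):
    """Rough measure of the image data that must be tracked and rendered."""
    return width * height * fps


# Requesting 30 fps with a 1/15 s exposure still yields only about 15 fps.
limited = effective_fps(30, 1 / 15)

# Doubling both image dimensions quadruples the per-second pixel load.
load_ratio = pixels_per_second(1280, 960, 30) / pixels_per_second(640, 480, 30)
```

The second calculation illustrates why a higher resolution can overload a slower PC: 1280x960 carries four times the pixels of 640x480 at the same frame rate.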

3D content

Besides the representation of the real environment, the content to be visualized is the second key element of Augmented Reality scenarios. A multitude of content options are possible here, for example 3D objects and 3D animations, as well as 2D graphics and 2D animations. Basically, almost any kind of multimedia content can be used. For example, texts, images and videos can be applied as a texture on a 3D geometry.

Unifeye Design allows you to load 3D content in the VRML 97/2.0 format, which most 3D modeling and content generation tools can create or export. The VRML files (extension .wrl) exported from a 3D content preparation tool can be loaded directly into Unifeye Design. However, some adjustments should be made before exporting models for Unifeye Design. In particular, the data structure, the surface materials (textures, light sources) and the model size (amount of data with regard to consumer-oriented hardware) should be taken into account. See the section 3D content preparation for more details.

Please note that Unifeye Design ships with a variety of sample models in the VRML format. They can be

found in the folders <UnifeyeDesignInstallationFolder>/examples/models and

<UnifeyeDesignInstallationFolder>/examples/scenarios.

Camera calibration

Every camera has several internal parameters (so-called intrinsic parameters) defined by the optical system (e.g. camera chip, camera lens, etc.). A camera calibration is needed and recommended for high-quality, accurate tracking and augmentation. As a calibration is only valid for exactly one camera system, it theoretically has to be performed for every physical camera system (which is done in industrial high-accuracy applications). But even for typical Unifeye Design use cases, such as kiosk/terminal systems, a camera calibration is recommended. It will usually improve tracking stability. When no camera calibration is available, Unifeye Design tries to guess the camera intrinsic parameters.

There are several camera intrinsic parameters:

1. The focal length describes the distance between the optical center of the lens and the image plane/sensor and also determines the field of view. If it is not set correctly, tracking values won't be accurate and you might get strange results, especially when the rendered 3D model is relatively far away from the tracking coordinate system it is bound to.

2. The principal point defines the center of projection on the camera image plane.

3. Several distortion parameters describe the distortion of the camera lens (e.g. radial and tangential distortion). In a distorted camera image, straight lines do not appear straight until you apply the corresponding undistortion (provided by the appropriate camera calibration):

distorted (left) and undistorted image (right)
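To make the role of the three kinds of intrinsic parameters concrete, here is a minimal sketch of a pinhole projection with radial distortion. The text does not state which camera model Unifeye Design uses internally, so this assumes the common textbook model with focal lengths `fx`, `fy` in pixels, principal point `cx`, `cy` and two radial coefficients `k1`, `k2`; all names are illustrative.

```python
import math


def project_point(point_cam, fx, fy, cx, cy, k1=0.0, k2=0.0):
    """Project a 3D point (camera coordinates) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must lie in front of the camera")
    # Normalized image coordinates.
    xn, yn = x / z, y / z
    # Radial distortion factor (assumed two-coefficient polynomial model).
    r2 = xn * xn + yn * yn
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    # Scale by the focal length and shift by the principal point.
    u = fx * xn * radial + cx
    v = fy * yn * radial + cy
    return u, v


def horizontal_fov_degrees(image_width_px, fx):
    """Horizontal field of view implied by the focal length in pixels."""
    return math.degrees(2.0 * math.atan(image_width_px / (2.0 * fx)))
```

A point on the optical axis always lands on the principal point, and a shorter focal length yields a wider field of view, which is why a wrongly guessed focal length shifts augmentations that are far from the tracking origin.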

Unifeye Design ships with a tool for calculating the camera intrinsic parameters (for both live cameras and still image cameras), called the Sextant camera calibration tool. You can start the tool either from the Windows start menu ("Calibration" -> "Standard camera calibration - Sextant") or from the Unifeye Design GUI menu ("Tools" -> "Sextant"). Before doing the calibration you need to print a calibration pattern, which you can find in the Windows start menu ("Calibration" -> "Calibration Pattern A4 18x12"). Print the PDF and place it on a planar surface (preferably glue it down). Now follow the process described here. After that you can load the camera calibration file into the Unifeye Design GUI or the Workflow Authoring GUI. In the Unifeye Design GUI use the tool "Camera Calibration" provided in the "Configuration" category. The calibration is applied directly to the currently loaded image source (e.g. webcam) and to every image source loaded or activated afterwards. A link to the calibration file is also stored in the Unifeye scene file, so the calibration is loaded automatically when you load the scene again.

Tracking Configuration

Tracking is the process of finding the pose (rotation and translation) of the camera relative to the real world and is an essential requirement for Augmented Reality. Only with a fast, robust and accurate tracking system can 3D content be superimposed on the real world with correct perspective and in real time. Unifeye Design lets you use and configure the following tracking systems:

Marker tracking

2D/Planar markerless tracking

Face Tracking

Extensible Tracking

The reference pattern/image is identified in the image input (e.g. coming from a webcam) by a built-in image recognition software component and is compared with the patterns stored in the corresponding configuration files. Once it is detected, the rotation and translation of the camera relative to the pattern/image can be calculated to allow correct superimposition of 3D content on the input image. For example, if the reference pattern is placed on a street, its perspective in the image is calculated and a virtual vehicle can be rendered into the image in the correct perspective.
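A pose made of a rotation and a translation can be applied to a point as shown in the following sketch. It assumes the pose maps coordinates given relative to the reference pattern into camera coordinates, with the rotation as a 3x3 matrix (list of rows) and units in millimeters; this convention and the function name are assumptions for illustration, not the documented Unifeye representation.

```python
def transform_point(rotation, translation, point):
    """Apply a rigid pose: rotate the point, then add the translation."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


# Pattern lying 500 mm in front of the camera, not rotated.
IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
corner_in_camera = transform_point(IDENTITY, (0, 0, 500), (70, 70, 0))

# Pattern rotated by 90 degrees about its z-axis.
ROT_Z_90 = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]
rotated_corner = transform_point(ROT_Z_90, (0, 0, 500), (70, 0, 0))
```

Every vertex of a 3D model bound to the pattern goes through this kind of transformation before it is projected into the camera image.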

Which tracking system you want to use is configured in so-called "Tracking Configuration" files, sometimes also referred to as "TrackingData" files. The tracking configuration file is an XML file. Every tracking system has specific parameters and options, which are reflected in the configuration file. You do not have to edit the XML file manually: there are tools that provide step-by-step, GUI-based configuration (see sections below). Once the configuration file has been created, you can use it directly with the Unifeye Design GUI or the Workflow Authoring tool/engine by simply loading it.
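Because tracking configuration files are plain XML, they can be located and sanity-checked with standard tools before loading them. The sketch below searches a folder for files following the TrackingData_*.xml naming used by the shipped planar markerless examples and reports the root element of each file. It makes no assumption about the XML schema, which is not described here; the function names and the folder passed in are hypothetical.

```python
from pathlib import Path
import xml.etree.ElementTree as ET


def find_tracking_configs(folder):
    """Return all TrackingData_*.xml files below the given folder."""
    return sorted(Path(folder).rglob("TrackingData_*.xml"))


def root_tag(config_path):
    """Parse the file and return the name of its root XML element.

    Raises xml.etree.ElementTree.ParseError if the file is not
    well-formed XML.
    """
    return ET.parse(config_path).getroot().tag


def summarize(folder):
    """Map each configuration file name to its root element name."""
    return {p.name: root_tag(p) for p in find_tracking_configs(folder)}
```

A file that fails to parse here would most likely also fail to load in the tool, so this can serve as a quick check after copying or moving configuration files.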

There is a multitude of other tracking systems and approaches available. If you want to use those, please refer to the Unifeye SDK or contact info@metaio.com.

Marker tracking

A marker is a 2-dimensional optical reference pattern similar to a 2D barcode:

Marker tracking is based on coded markers, which can be configured in arbitrary number and size (but you can't change the inner pattern). These markers were developed by metaio and provide robust tracking thanks to maximum contrast and an integrated error correction mechanism, which allows detection even in relatively low-quality images and from flat angles. The system determines the identity of the marker through the inner pattern of dark squares. Up to 512 different markers can be created using the Marker Generator tool. For tracking to work, the full marker must always be visible.

When you start the Unifeye Design GUI, it automatically loads a default marker tracking configuration file with 3 defined markers (ID 1 - 3) of size 140 mm. If you print the provided PDF file SquareMarker.pdf (available via the Windows start menu in the submenu Tracking Patterns), activate your camera, load a geometry and put the marker with ID 1 in front of the camera, the geometry will be rendered on top of the marker with ID 1. Please note that by default every loaded 3D model is assigned to the first configured tracking coordinate system (in the default case, the marker with ID 1). You can change that using the corresponding dropdown box in the Unifeye GUI:

You can find more information about coordinate systems here.

If you want to use a different marker tracking configuration (more markers, fewer markers, a different size), you need to create a corresponding configuration. Please note that you should not simply configure all 512 markers and always use that configuration, as this heavily impacts performance. It is recommended to always create the configuration file according to your needs.

The configuration is created with the "Marker Tracking Configuration" tool within the Unifeye Design GUI. The tool can be found in the tool category "Configuration".

1. The first step is to create a marker set, which defines the markers and the size of the markers you want to use. Usually you select "Add multiple markers of the same size", then define which markers to use and their size. After that, save this initial marker set.

2. This marker set can now be printed using the PDF button in the tool. This automatically opens the metaio "Marker Generator" tool:

Leave everything unchanged and simply press "OK". Select a folder where the output PDF file should be saved. The PDF file with your markers is then created and opened. Print the PDF file. In the print dialog, make sure that NO scaling of any kind (e.g. "fit to print area") is applied, as this will change the size and potentially the aspect ratio of the markers (which have to be square).

3. Now use your marker set to create the final tracking configuration file. Usually you will create a coordinate system for every defined marker. Do this and select all the defined markers:

4. Now save the marker tracking configuration file to disk.

5. The final step is to apply the newly created marker tracking configuration file by clicking the "Apply" button.

Your newly created configuration is now active within the Unifeye Design GUI. If you now save the scene, the saved scene file will contain a link to your marker tracking configuration file, and the configuration will be loaded automatically when you load the scene. Please note that this only works if you do not move the tracking configuration file to a different folder.

If you only want to load an existing tracking configuration file, e.g. when trying different configurations, you can use the Unifeye Design tool "Tracking Configuration". It provides a file dialog that allows you to load an arbitrary tracking configuration file (marker tracking, markerless tracking):

Notes:

You should not create one configuration file with 512 markers and use it all the time, as this drastically impacts overall system performance. Instead, create tracking configuration files that contain only the markers you actually use.

You should provide the correct dimensions in millimeters in the configuration file and not scale the marker PDF file up or down during or after printing.

If you need high precision you should provide an appropriate camera calibration.

You should always leave enough white space around the black border of the printed marker:

There are some more advanced configuration options available (e.g. the difference between the fast and robust mode), which are covered here.

2D/Planar markerless tracking

Planar markerless tracking uses arbitrary images, so-called reference images/patterns/patches, as the reference to the real world. Reference images can be photographs, illustrations, catalogue pages, print advertisements and so on. To be suitable as a tracking reference, they must be sufficiently well textured and contain enough features for the internal image processing and tracking algorithms. Good reference images usually contain a lot of contrast, various colors and shapes and/or color gradients. Large, monotonous surfaces and constantly repeating patterns should be avoided. Images that consist only of printed text are also not well suited for tracking.

The clear advantage of planar markerless tracking is that, in general, arbitrary images can be used. In addition, once tracking is initialized, the whole image does not have to be visible to the camera all the time; it can be partly occluded (e.g. by a person's hand holding the reference image). The disadvantage is lower accuracy. The hardware requirements are also higher (a dual-core CPU is strongly recommended), and you usually can't use more than about 20 patterns at the same time (this value depends very much on system performance). Planar markerless tracking is suited for live camera situations and does not work well with still images as the image source. The tracking pattern should also not be too far away from the camera.

There are several example tracking configurations for planar markerless tracking provided within the

Unifeye Design installation package which can be found in the subfolders of

<UnifeyeDesignInstallationPath>/examples/scenes/ using the filenames TrackingData_*.xml.

If you want to use your own tracking pattern, you have to create a corresponding tracking configuration file. A GUI-based configuration tool helps you create a suitable tracking configuration file for Unifeye Design: "Planar markerless configuration". It can be started either from the corresponding menu entry of the Unifeye Design GUI or via the link in the Windows start menu: "Configuration" -> "Planar markerless tracking configuration".

1. The tool is available in a standard (default) and an expert mode. Usually the standard mode is sufficient.

2. After startup, click the "Create patch" icon, select a well-suited reference image and enter the dimensions in millimeters of the real (printed) reference. Keep the aspect ratio: the aspect ratio of the image in pixels must match that of the dimensions given in millimeters. The image used as the tracking reference/tracking patch should also be rather low resolution (usually around 400x300 pixels):

3. After adding the reference image/patch, click "OK". You can now check how well your chosen image is suited for tracking by clicking the "Check quality" icon. This starts a simulation process that might take up to one minute. The output is a percentage value. Usually values above 70% indicate a well-suited image. Everything below 30% is bad.

4. You can now save your planar markerless tracking configuration file and exit the planar markerless configuration tool.
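Two of the checks in the steps above are easy to express in code: the aspect ratio comparison from step 2 and the interpretation of the quality percentage from step 3. This is a sketch with hypothetical function names. The 2% tolerance and the label "borderline" for values between 30% and 70% are assumptions, since the text only classifies values above 70% and below 30%.

```python
def aspect_ratio_matches(px_width, px_height, mm_width, mm_height,
                         tolerance=0.02):
    """Check that the pixel and millimeter aspect ratios agree.

    tolerance is the allowed relative deviation (assumed value).
    """
    pixel_ratio = px_width / px_height
    print_ratio = mm_width / mm_height
    return abs(pixel_ratio - print_ratio) <= tolerance * print_ratio


def rate_reference_image(quality_percent):
    """Translate the 'Check quality' percentage into a verdict."""
    if not 0 <= quality_percent <= 100:
        raise ValueError("quality must be between 0 and 100")
    if quality_percent > 70:
        return "well suited"
    if quality_percent < 30:
        return "bad"
    return "borderline"  # range not classified in the text
```

For instance, a 400x300 pixel image matches a 200 mm x 150 mm printout, but not a portrait A4 page of 210 mm x 297 mm.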

To use the newly created configuration file, simply load it, e.g. into Unifeye Design using the "Tracking Configuration" tool.

After you start the camera, load a 3D model and put the printout of the reference image in front of the camera, the 3D model will appear on the reference image. Please note that by default a loaded 3D model appears on the first defined coordinate system (see also coordinate systems). So if you configured several tracking patches, you might have to change the binding of the model to the coordinate system (COS), as described above, to see the model on your reference image. When you save the scene, a link to the currently used tracking configuration file is stored in the scene file itself. When you load the scene again, the corresponding tracking configuration file will be loaded automatically (if the file was not moved or renamed).

Notes

For a more detailed explanation of the planar markerless tracking configuration process please go here.

If you encounter unstable tracking, the following measures can be taken:

o You can define tracking regions within the planar markerless configuration tool. This is also described here.

o Make sure that the reference image is suitable with respect to its texture and content.

o Choose the resolution of the reference image used in the tracking configuration according to how closely the camera will look at the printed image. As a rule of thumb, the resolution of the reference image should be similar to the size at which the camera sees it from a "default position".

For example: If you want to track a DIN A4 (210 mm x 297 mm) sized printout and the default resolution of the camera is 640x480 pixels, the reference image should be scaled to approx. 350x495 pixels. When the camera looks at the whole page, the printed image in the current camera view will have approximately the same size as the reference image. The reference image should not be high-resolution unless you want to look at the printed image from very close up.

o The digital version should have the same aspect ratio as the printed version (e.g. no severe stretching of the image):

In this example the aspect ratio is the same in the reference image and the printed version: Rx/Ry = Px/Py. When measuring the dimensions of the printed version, make sure that it is not rotated by 90 degrees.
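The rule of thumb for the reference image resolution can be sketched as a small calculation. The function below assumes that the "default position" means the whole printout just fits into the camera frame and that the page is not rotated in the view; both are assumptions, and the function name is hypothetical.

```python
def reference_size_for_view(print_mm, camera_px):
    """Pixel size of the printout when it just fits the camera frame.

    print_mm:  (width, height) of the printed reference in millimeters
    camera_px: (width, height) of the camera image in pixels
    """
    print_w, print_h = print_mm
    cam_w, cam_h = camera_px
    # Pixels per millimeter, limited by the tighter of the two dimensions.
    scale = min(cam_w / print_w, cam_h / print_h)
    return round(print_w * scale), round(print_h * scale)


# Portrait DIN A4 page seen completely by a 640x480 camera.
a4_reference = reference_size_for_view((210, 297), (640, 480))
```

For the A4 example this yields 339x480 pixels, which is in the same range as the approximate 350x495 pixels quoted above and preserves the 210:297 aspect ratio.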

Tracking Performance: to get good tracking performance, choose a reference template size (A x B) that satisfies the following conditions:

1. min(A,B) = pow(2,n)*25, where n is a non-negative integer. Good sizes are (A x B) where min(A,B) is equal to 25, 50, 100, 200, 400, 800, 1600, 3200, ... or slightly above, i.e. (A x B) where min(A,B) is equal to 26, 52, 105, 1606, ... Avoid sizes in between or slightly below these values. Bad sizes would be (A x B) where min(A,B) is, for example, equal to 47, 98, 300, 1500, ...

2. For optimal performance, additionally choose min(A,B) close to max(A,B). Examples of optimal sizes: (100 x 100), (200 x 200), ... Condition 2 only makes sense when condition 1 is fulfilled.
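Condition 1 can be tested programmatically. The sketch below finds the largest value of the form 25 * 2^n that does not exceed min(A,B) and accepts the size if it lies at most 5% above that value. The 5% threshold is an assumption chosen so that all good and bad examples listed above are classified as stated; the text itself only says "slightly above". The function names are hypothetical.

```python
def base_size_at_or_below(value):
    """Largest 25 * 2**n (n >= 0) not exceeding value, or None if value < 25."""
    if value < 25:
        return None
    base = 25
    while base * 2 <= value:
        base *= 2
    return base


def is_good_template_size(a, b, tolerance_percent=5):
    """Check condition 1 for a reference template of a x b pixels."""
    smaller = min(a, b)
    base = base_size_at_or_below(smaller)
    if base is None:
        return False
    # Integer arithmetic avoids floating point edge cases at the threshold.
    return (smaller - base) * 100 <= base * tolerance_percent
```

With these definitions, 105 x 140 passes (105 is 5% above 100), while 98 x 140 fails because 98 is slightly below 100 and far above 50.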

Face Tracking (Unifeye Design 2.5)

Unifeye face tracking enables you to detect a face in the input image/video stream, follow its

movements and track it in six degrees of freedom.

For the face tracking to work properly, lighting conditions are important. The face should be well lit with

the lighting coming from the front and being homogeneous. If the use case allows, the autofocus of the

camera should be turned off.

You can test the face tracking by loading the file AS_Tracking_Data_FaceTracking.xml from the config

folder in the Tracking Configuration tool. Make sure to use a camera calibration to get the best result.

For a more detailed description please refer to

http://docs.metaio.com/bin/view/Main/UnifeyeSDKTrackingConfigurationFaceTracking.

Extensible Tracking (Unifeye Design 2.5)

3D Extensible Tracking in Unifeye allows the generation of a 3D map of the environment "on the fly". This means that a 3D map of the environment is created based on the pose provided by a starting tracking system and is then constantly extended while tracking. The starting tracking system is used for as long as it is available. If the starting tracking system is lost, the 3D map is used for tracking until the starting tracking system is available again. The 3D map can also be used for relocalisation after tracking has been lost entirely. This means that if you lose tracking while the 3D map is being used, you can, but do not have to, go back to the starting tracking system. Tracking will resume as soon as enough features stored in the 3D map are located again or the starting tracking system delivers valid tracking values again.
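The fallback behaviour described in this paragraph amounts to a simple priority rule, sketched below. The function and the returned labels are purely illustrative and not part of the Unifeye SDK; the two boolean inputs stand for whatever the real system uses to decide that the starting tracker delivers valid values and that enough map features are visible.

```python
def select_pose_source(starting_tracker_valid, map_has_enough_features):
    """Decide which source provides the pose for the current frame."""
    if starting_tracker_valid:
        # The starting tracking system is used whenever it is available.
        return "starting_tracker"
    if map_has_enough_features:
        # Otherwise the 3D map takes over, also for relocalisation.
        return "3d_map"
    return "lost"


# Marker leaves the view, the map takes over, everything is lost,
# then the map relocalises without the marker being visible again.
frames = [(True, True), (False, True), (False, False), (False, True)]
sources = [select_pose_source(s, m) for s, m in frames]
```

The last frame of the example shows the relocalisation case: tracking resumes from the map alone.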

3D Extensible Tracking is well suited if you want to use a certain tracking system (e.g. Marker Tracking or 3D Markerless Tracking) but need to move around a bit and want to concentrate on your main task rather than on not losing the tracking target. This is very useful in scenarios such as AR-supported maintenance. Extensible Tracking not only provides you with some extra room to move but also adapts the 3D map to changing lighting situations. Make sure to use a camera calibration to improve the tracking quality.

For a more detailed description please refer to

http://docs.metaio.com/bin/view/Main/UnifeyeSDKTrackingConfigurationExtensible.

Coordinate systems

When working with Augmented Reality you have to deal with coordinate systems (see e.g.

http://en.wikipedia.org/wiki/Coordinate_system), usually (right-handed) cartesian and 3D (see e.g.

http://en.wikipedia.org/wiki/Cartesian_coordinate_system). In Unifeye Design there are several

coordinate systems you should know about.

In the most common cases you define one coordinate system per tracking reference (marker or reference image). By default this is done automatically, and the origin of this coordinate system is placed in the middle of the corresponding tracking reference, with the z-axis pointing out of the tracking reference, the y-axis pointing upwards (north) and the x-axis pointing to the right (east). Every tracking reference has its own coordinate system, and the location and rotation of these coordinate systems are determined during image processing/tracking and are referred to as tracking values:

If you want to see where the coordinate systems of your current configuration (e.g. Unifeye scene) are located, you can activate the visualization of the tracking indicator using the button in the Unifeye GUI menu called "Show COS indicator and COS labels". This displays a COS 3D geometry for each defined tracking coordinate system (usually one per tracking reference) at the origin of the corresponding coordinate system (red bar x-axis, green bar y-axis, blue bar z-axis):

When loading a 3D geometry you assign (bind) it to a tracking coordinate system, which establishes a connection between the 3D model and the tracking reference and thus the real world. Please note that a 3D model also has its own coordinate system, whose origin might not be in the center of the model or which might be rotated. This causes the model to appear translated or rotated when it is placed on a tracking reference coordinate system.

Inside the Unifeye Design GUI and the Workflow Authoring GUI you can also move, rotate and scale models (with respect to the tracking coordinate system they are bound to). This is done using the "Translation", "Rotation" and "Scale" elements. In the Unifeye GUI these are located in the "Geometries/Planes" tool of the "Objects" category and become available once a model is loaded and selected.
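The effect of the "Translation", "Rotation" and "Scale" elements on a model vertex can be sketched as follows. The order in which Unifeye Design applies the three operations is not stated here, so this example assumes scale first, then rotation, then translation, and it only rotates about the z-axis of the tracking coordinate system to stay short. The function name is hypothetical.

```python
import math


def place_vertex(vertex, scale=1.0, rotation_z_deg=0.0,
                 translation=(0.0, 0.0, 0.0)):
    """Scale, rotate about z, then translate a vertex within its COS."""
    x, y, z = (coordinate * scale for coordinate in vertex)
    angle = math.radians(rotation_z_deg)
    rotated_x = x * math.cos(angle) - y * math.sin(angle)
    rotated_y = x * math.sin(angle) + y * math.cos(angle)
    tx, ty, tz = translation
    return rotated_x + tx, rotated_y + ty, z + tz


# Double the model size, turn it by 90 degrees and lift it 10 mm
# off the tracking reference along the z-axis.
moved = place_vertex((1.0, 0.0, 0.0), scale=2.0, rotation_z_deg=90.0,
                     translation=(0.0, 0.0, 10.0))
```

Because the z-axis points out of the tracking reference, a positive z translation raises the model above the marker or reference image.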

For more advanced configurations you can also define tracking coordinate system offsets and even combine several tracking references (e.g. markers) into a single coordinate system. This enables you to cover larger areas, because the coordinate system is tracked as soon as one of its tracking references (e.g. markers) is visible in the camera image:
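The idea of combining several markers into one coordinate system can be illustrated with a short sketch. Each marker carries an offset that gives the position of the shared origin in that marker's own coordinates, so any single visible marker is enough to locate the shared origin in camera coordinates. The data layout and the function name are assumptions for illustration and do not reflect the actual configuration format.

```python
def combined_origin(visible_poses, offsets):
    """Locate the shared COS origin from the first usable visible marker.

    visible_poses: {marker_id: (rotation 3x3 as list of rows, translation)}
                   poses of currently detected markers in camera coordinates
    offsets:       {marker_id: (x, y, z)} shared origin in marker coordinates
    Returns the origin in camera coordinates, or None if no marker is visible.
    """
    for marker_id, (rotation, translation) in visible_poses.items():
        if marker_id in offsets:
            offset = offsets[marker_id]
            return tuple(
                sum(rotation[i][j] * offset[j] for j in range(3))
                + translation[i]
                for i in range(3)
            )
    return None


IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
# Shared origin sits at marker 1 and 100 mm to the left of marker 2.
OFFSETS = {1: (0, 0, 0), 2: (-100, 0, 0)}
# Only marker 2 is visible, 100 mm right of the optical axis.
origin = combined_origin({2: (IDENTITY, (100, 0, 500))}, OFFSETS)
```

Even though marker 1 is out of view in the example, the shared origin is still found directly in front of the camera.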

Workflow engine and Authoring GUI

The Workflow Engine and its Authoring GUI form the most important module for creating individual AR scenarios, offering complete freedom in the creation and implementation of ideas by means of visual programming. The user can add virtually all functions and settings to a "workflow" via drag & drop and let them run automatically. In particular, this allows the creation of complete scenarios that run according to a well-defined sequence of operations.

An example is a live show with augmented reality, in which at the push of a button a virtual 3D car model appears on a real stage and, when the button is pushed again, a virtual hood opens. Further interactivity can be configured: for example, a 3D model can be moved or rotated, its colors and material characteristics can be changed, or an animation timer can be started, paused or stopped. The building blocks of a workflow are so-called "actions" that allow extensive configuration and interactivity. Unifeye Design already ships with a multitude of actions, and the set can also be extended by an experienced programmer.
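The notion of a workflow as a chain of actions can be pictured with the car example from above. The following is a conceptual sketch only: the `Workflow` class, the action functions and the dictionary used as a scene are all hypothetical and unrelated to the real Workflow Engine API, which is configured visually rather than in code.

```python
class Workflow:
    """Runs a fixed sequence of actions, one per button push."""

    def __init__(self, actions):
        self._actions = list(actions)
        self._next_index = 0

    def on_button_push(self, scene):
        """Execute the next action, if any remain."""
        if self._next_index < len(self._actions):
            self._actions[self._next_index](scene)
            self._next_index += 1


def show_car(scene):
    """Action: make the virtual car model visible."""
    scene["car_visible"] = True


def open_hood(scene):
    """Action: start opening the virtual hood."""
    scene["hood_open"] = True


scene = {"car_visible": False, "hood_open": False}
show = Workflow([show_car, open_hood])
show.on_button_push(scene)  # first push: the car appears
state_after_first_push = dict(scene)
show.on_button_push(scene)  # second push: the hood opens
```

Each action is an independent building block, so a scenario is changed by reordering or replacing actions rather than by rewriting the engine.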

Unifeye Design also ships with several example workflows and workflow scenarios which are available

in: <UnifeyeDesignInstallationPath>/examples/scenarios. Please also refer to the examples section for

more details.

The Workflow Authoring GUI can be started directly via the Windows start menu or from within the

Unifeye Design GUI. You can create/save and load workflows with the Workflow Authoring GUI and also

use the Unifeye Design GUI for playback of your created workflows (by using the "Action Recorder" in

the "Extras" category).

You might also like

- Webtoon Creator PDFDocument32 pagesWebtoon Creator PDFMorena RotarescuNo ratings yet

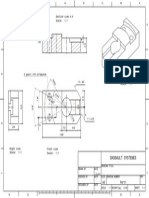

- Section View A-A Scale: 1:1: Dassault SystemesDocument1 pageSection View A-A Scale: 1:1: Dassault SystemesMorena RotarescuNo ratings yet

- Dassault Systemes: Drawn by Date Size Drawing Number REV Drawing TitleDocument1 pageDassault Systemes: Drawn by Date Size Drawing Number REV Drawing TitleMorena RotarescuNo ratings yet

- CT Image Segmentation Using Simulated AnnealingDocument4 pagesCT Image Segmentation Using Simulated AnnealingMorena RotarescuNo ratings yet

- 10 1 1 103Document84 pages10 1 1 103Morena RotarescuNo ratings yet

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5782)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (890)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (72)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- ArrayFire Graphics Tutorial: Optical Flow GFX ExampleDocument32 pagesArrayFire Graphics Tutorial: Optical Flow GFX ExampleIndira SavitriNo ratings yet

- PUCS MCA Univ Department Revd From EmailDocument91 pagesPUCS MCA Univ Department Revd From EmailSEENIVASNo ratings yet

- ETABS Software for Building Analysis and DesignDocument2 pagesETABS Software for Building Analysis and DesignJesus Jaimes ManceraNo ratings yet

- ISO - IEC - 14496 1 System 2010Document158 pagesISO - IEC - 14496 1 System 2010Ezequiel Escudero FrankNo ratings yet

- Pumpsim enDocument169 pagesPumpsim enbenson100% (1)

- Walz S. Autodesk Civil 3D 2024 From Start To Finish 2023Document743 pagesWalz S. Autodesk Civil 3D 2024 From Start To Finish 2023ibitubiNo ratings yet

- Insetting Objects in GraphicsDocument3 pagesInsetting Objects in GraphicsDennis La CoteraNo ratings yet

- Yun HJDocument83 pagesYun HJJaspreet SinghNo ratings yet

- Im Survey Reference GuideDocument853 pagesIm Survey Reference Guidecmm5477No ratings yet

- Unit 4 Three-Dimensional Geometric TransformationDocument15 pagesUnit 4 Three-Dimensional Geometric TransformationBino100% (1)

- CVPR 2020 Paper DigestsDocument169 pagesCVPR 2020 Paper DigestsharivinodnNo ratings yet

- Lesson: Explaining Key Features and Benefits of SAP S/4HANA Manufacturing For Production Engineering and Operations (PEO)Document1 pageLesson: Explaining Key Features and Benefits of SAP S/4HANA Manufacturing For Production Engineering and Operations (PEO)gobashaNo ratings yet

- 3D Printing Models Using CADDocument7 pages3D Printing Models Using CADFresnel FisicoNo ratings yet

- Boxford CNC Machine ToolDocument6 pagesBoxford CNC Machine ToolMuhammad IsmailNo ratings yet

- PM1D TutorialDocument70 pagesPM1D Tutorialkrackku kNo ratings yet

- Creative Technologies CG 20171009Document40 pagesCreative Technologies CG 20171009Jhan G Calate100% (2)

- Viz Weather Guide Old Version PDFDocument289 pagesViz Weather Guide Old Version PDFOnasis HNo ratings yet

- Am 2Document38 pagesAm 2Chandan KumarNo ratings yet

- Design a Simple Fan in 123D DesignDocument8 pagesDesign a Simple Fan in 123D Designمحمد الشيخاويNo ratings yet

- miniFACTORY 2021Document88 pagesminiFACTORY 2021PIXIDOUNo ratings yet

- Rencana Program Perubahan New 2Document11 pagesRencana Program Perubahan New 2Anang SetiawanNo ratings yet

- Rf2D Tutorials SMSDocument141 pagesRf2D Tutorials SMSCayo LopesNo ratings yet

- Basic Tool in Learning Lumion Module Provides Effective 3D Rendering InstructionDocument14 pagesBasic Tool in Learning Lumion Module Provides Effective 3D Rendering InstructionVanAnneNo ratings yet

- Strata 3D CX User GuideDocument322 pagesStrata 3D CX User GuideJuan Fernandez100% (1)

- Mini ProjectDocument22 pagesMini ProjectSarvesh yadavNo ratings yet

- 1module Common Competencies in Introduction To Industrial ArtsDocument12 pages1module Common Competencies in Introduction To Industrial ArtsJean AmocapNo ratings yet

- SM Accuriopress c2060lDocument41 pagesSM Accuriopress c2060lANINo ratings yet

- VideosDocument1 pageVideosrafael_moraes_11No ratings yet

- Hap v6 Brochure WebDocument4 pagesHap v6 Brochure WebHasham KhanNo ratings yet

- Essential Skills in Character RiggingDocument244 pagesEssential Skills in Character RigginggffgfgNo ratings yet