J Intell Robot Syst (2010) 57:217–231

DOI 10.1007/s10846-009-9382-2

A Vision-Based Automatic Landing Method for Fixed-Wing UAVs

Sungsik Huh · David Hyunchul Shim

Received: 1 February 2009 / Accepted: 1 August 2009 / Published online: 23 October 2009

© Springer Science + Business Media B.V. 2009

Abstract In this paper, a vision-based landing system for small-size fixed-wing unmanned aerial vehicles (UAVs) is presented. Since a single GPS without a differential correction typically provides position accuracy of a few meters at best, an airplane equipped with only a single GPS is not guaranteed to land at a designated location with sufficient accuracy. Therefore, a visual servoing algorithm is proposed to improve the accuracy of landing. In this scheme, the airplane is controlled to fly into the visual marker by directly feeding back the pitch and yaw deviation angles sensed by the forward-looking camera during the terminal landing phase. The visual marker is a monotone hemispherical airbag, which serves as the arresting device while providing a strong yet passive visual cue for the vision system. The airbag is detected by using color- and moment-based target detection methods. The proposed idea was tested in a series of experiments using a blended wing-body airplane and proven to be viable for landing of small fixed-wing UAVs.

Keywords Landing dome · Fixed-wing UAVs · Visual servoing · Automatic landing

S. Huh (✉) · D. H. Shim
Department of Aerospace Engineering, KAIST, Daejeon, South Korea
e-mail: hs2@kaist.ac.kr
URL: http://unmanned.kaist.ac.kr

D. H. Shim
e-mail: hcshim@kaist.ac.kr

1 Introduction

It is well known that landing is the most accident-prone stage for both manned and unmanned airplanes, since it is a delicate process of dissipating the large amount of kinetic and potential energy of the airplane in the presence of various dynamic and operational constraints. Therefore, commercial airliners rely heavily on the instrument landing system (ILS) wherever available. As for UAVs, a safe and sound retrieval of the airframe is still a significant concern. Typically, UAVs are landed manually by either internal or external human pilots on a conventional runway or into arresting mechanisms. For manual landing, the pilot obtains visual cues by naked eye or through the live images taken by the onboard camera. Piloting from outside the vehicle requires a lot of practice due to the limited situational awareness. As a consequence, a significant portion of mishaps happen during the landing phase. Nearly 50% of fixed-wing UAVs such as Hunter and Pioneer operated by the US military suffer accidents during landing. As for Pioneer UAVs, almost 70% of mishaps occur during landing due to human factors [1, 2]. Therefore, it is desirable to automate the landing process of UAVs in order to reduce the number of accidents while alleviating the pilot's load.

There are a number of automatic landing systems currently in service. Global Hawk relies on a high-precision differential GPS during take-off and landing [3]. Sierra Nevada Corporation's UCARS or TALS¹ are externally located aiding systems consisting of a tracking radar and communication radios. They have been successfully used for landing many military fixed-wing and helicopter UAVs such as Hunter or Fire Scout on runways or even on a ship deck. The information, including the relative position or altitude of the inbound aircraft, is measured by the ground tracking radar and relayed back for automated landing. Some UAVs are retrieved in a confined space using a special net, which arrests the vehicle flown manually by the external pilot. Scan Eagle is retrieved by a special arresting cable attached to a tall boom, to which the vehicle is precisely guided by a differential GPS.² The external aids listed above rely on special equipment and radio communication, which are not always available or applicable due to complexity, cost, or limits imposed by the operating environment. Therefore, automatic landing systems that are inexpensive, passive, and reliable are highly desired.

Vision-based landing has been found attractive since it is passive and does not require any special equipment other than a camera and a vision processing unit onboard. A vision-enabled landing system detects the runway or other visual markers and guides the vehicle to the touchdown point. There is a range of previous works, theoretical or experimental, for fixed-wing and helicopter UAVs [4–6]. Notably, Barber et al. [7] proposed a vision-based landing method for small fixed-wing UAVs, where a visual marker is used to generate the roll and pitch commands to the flight controller.

In this paper, a BWB-shaped fixed-wing UAV is controlled to land automatically by using vision algorithms and relatively simple landing aids. The proposed method is shown to be viable without resorting to expensive sensors and external devices. The overall approach, its component technologies, and the landing aids, including the landing dome and recovery net, are described in Section 2. The vision algorithms, including the detection and visual servoing algorithms that enable the airplane to land automatically, are described in Section 3. The experiment results of the vision-based landing tests are shown in Section 4 to validate the algorithm. In Section 5, the conclusion and closing remarks are given.

¹ http://www.sncorp.com

² http://www.insitu.com/scaneagle

2 System Description

The proposed landing system consists of three major components: an inflated dome as a visual marker, a vision processing unit, and a flight controller using a visual servoing algorithm. In order to develop a low-cost landing system without expensive sensors or special supporting systems, the vision system is integrated with a MEMS-based inertial measurement unit (IMU) and a single GPS. Without a differential correction, the positioning accuracy of GPS is known to be a few meters horizontally and twice as bad vertically. Therefore, even if the position of the dome is accurately known, it will still be difficult to hit the dome consistently with a navigation system whose position error is larger than the size of the landing dome.

For landing, given a roughly estimated location of the dome, the airplane starts flying along a glide slope leading to the estimated position of the dome. When the vision system locks on the dome, the flight control switches from glide slope tracking to direct visual servoing, where the offset of the dome from the center of the image taken by the onboard front-looking camera is used as the error signal for the pitch and yaw control loops. In the following, detailed descriptions of the proposed approach are presented (Fig. 1).
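The hand-over from glide slope tracking to visual servoing described above can be sketched as a small mode selector. This is only an illustrative sketch: the names `GuidanceMode` and `next_mode` are hypothetical, and latching the visual servoing mode once the dome has been locked is an assumption, since the text does not say what happens if the lock is lost.

```python
from enum import Enum


class GuidanceMode(Enum):
    GLIDE_SLOPE = "glide_slope"          # track the slope toward the estimated dome position
    VISUAL_SERVOING = "visual_servoing"  # steer on the dome's offset in the image


def next_mode(current: GuidanceMode, dome_locked: bool) -> GuidanceMode:
    """Switch to visual servoing when the vision system locks on the dome.

    Assumption (not stated in the paper): once visual servoing has started,
    the mode stays latched even if a later frame loses the dome.
    """
    if current is GuidanceMode.GLIDE_SLOPE and dome_locked:
        return GuidanceMode.VISUAL_SERVOING
    return current


if __name__ == "__main__":
    mode = GuidanceMode.GLIDE_SLOPE
    for locked in (False, False, True, False):
        mode = next_mode(mode, locked)
        print(locked, mode.name)
```

A real implementation would also need a policy for a lost lock (for example, falling back to the glide slope), which is left open here.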

2.1 Airframe and Avionics

The platform used in this research is a BWB-based UAV (Fig. 2). BWBs are known to carry a substantially large payload per airframe weight due to the extra lift generated by the airfoil-shaped fuselage. Our BWB testbed is constructed with sturdy expanded polypropylene (EPP), which is reinforced with carbon fiber spars embedded at strategic locations. The vehicle is resilient to shock and crash, a highly welcome trait for the landing experiments in this paper.

This BWB has only two control surfaces at the trailing edge of the wing, known as elevons, which serve as both aileron and elevator. At the wingtips, winglets are installed pointing down to improve the directional stability. The vehicle has a DC brushless motor mounted at the trailing edge of the airframe, powered by lithium-polymer cells. The same battery powers the avionics to lower the overall weight. The large payload compartment (30 × 15 × 7.5 cm) in the middle of the fuselage houses the flight computer, IMU, battery, and radio receiver. These parts are all off-the-shelf products.

Fig. 1 Proposed dome-assisted landing procedure of a UAV (stages labeled in the figure: initial estimation, beginning of glide slope, terminal visual servoing, air dome)

Fig. 2 The KAIST BWB (blended wing-body) UAV testbed

The avionics of the KAIST BWB UAV consists of a flight control computer (FCC), an inertial measurement unit (IMU), a GPS, and a servo controller module. The flight control computer is constructed using PC104-compatible boards due to their industry-grade reliability, compactness, and expandability. The navigation system is built around a GPS-aided INS. The U-blox Supersense 5 GPS is used for its excellent tracking capability even when the signal quality is low. Inertial Science's MEMS IMU showed robust performance during the flight. The vehicle communicates with the ground station through a high-bandwidth telemetry link at 900 MHz to reduce the communication latency (Table 1, Fig. 3).

The onboard vision system consists of a small forward-looking camera mounted at the nose of the airframe, a video overlay board, and a 2.4 GHz analog video transmitter with a dipole antenna mounted at a wingtip. Due to the payload limit, the image processing is currently done at the ground station, which also serves as the monitoring and command station. Therefore, the results of the image processing and vision algorithms are transmitted back to the airplane over the telemetry link. The image processing and vision algorithms run on a laptop computer with a Pentium Core2 2 GHz CPU and 2 GB of memory. The vision algorithm is written in MS Visual C++ using the OpenCV library.³ It takes 240 ms to run the vision algorithm on one image; the visual servoing command is then transmitted back to the vehicle at 4 Hz, which is shown to be sufficient for the visual servoing problem considered in this research.
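As a rough check of the quoted figures (a back-of-the-envelope estimate, not a result from the experiments), a processing time of 240 ms per image bounds the update rate at

$$
r_{\max} = \frac{1}{0.24\ \text{s}} \approx 4.2\ \text{Hz},
$$

which is consistent with the 4 Hz command rate. Assuming, purely for illustration, an approach airspeed of 20 m/s (the approach speed is not stated in this section), the vehicle travels about

$$
d = 20\ \text{m/s} \times 0.25\ \text{s} = 5\ \text{m}
$$

between two successive visual servoing commands.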

³ Intel Open Source Computer Vision Library

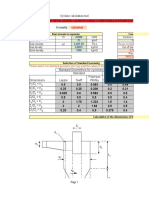

Table 1 Specification of BWB testbed

| Item | Specification |
| --- | --- |
| Base platform | StingRay 60 by Haak Works (a); blended wing-body (BWB) |
| Dimensions | Wing span: 1.52 m (W); wing area: 0.52 m²; aspect ratio: 4.43 |
| Weight | 2.6 kg (fully instrumented) |
| Powerplant | Axi 2217/16 DC brushless motor; 12 × 6 APC propeller; Hacker speed controller X-40-SB-Pro; lithium-ion-polymer (3S: 11.1 V, 5,350 mAh) |
| Operation time | Over 10 min |
| Avionics | Navigation: single GPS-aided INS; GPS: U-Blox Supersense 5; IMU: Inertial Science MEMS IMU; differential/absolute pressure gauges; flight computer: PC104 Pentium-M 400 MHz; communication: 900 MHz Ethernet bridge |
| Vision system | Color CCD camera (70 deg FOV); 2.4 GHz analog transmitter; frame grabber: USB 2.0 analog video capture kit |
| Operation | Catapult-launch, body landing |
| Autonomy | Speed, altitude, heading, altitude hold/command; waypoint navigation; automatic landing |

(a) http://www.haakworks.com

2.2 Landing Equipment

In order to land an airplane in a confined space, an arresting device is needed to absorb the kinetic energy in a relatively short time and distance. In this research, we propose a dome-shaped airbag, which offers many advantages for automatic landing scenarios.

The dome is constructed with sturdy nylon of a vivid red color, which provides a strong visual cue even at a long distance. It also absorbs the shock during the landing, and therefore the vehicle does not need to make any special maneuver but simply flies into the dome at a low speed with a reasonable incident angle. The vision system runs a fast and reliable color tracking algorithm, suitable for high-rate visual servoing under various light conditions.

For operation, the bag is inflated within a few minutes by a portable electric blower or possibly by a gas-generating charge, and it can be transported anywhere in a compact package once deflated. Using the hooks sewn around the bottom patch, it can be secured to the ground with pegs. Its dome shape allows landing from any direction without reinstallation, while conventional arresting nets have to be installed facing the wind to avoid crosswind landing. Since this approach is vision-based, the landing dome is not equipped with any instruments, signal sources, or communication devices. Therefore, it does not consume any power or emit energy that can be detected by adversaries.


Fig. 3 Avionics and hardware architecture

In addition, a recovery net can be used to capture larger vehicles. In this case,

the landing dome serves only as a visual cue and the recovery net is responsible for

arresting the vehicle.

3 Vision Algorithms

The proposed vision algorithm consists of a color-based detection, a moment-based

detection, and visual servoing. The color- and moment-based methods perform in

a complementary manner to detect the landing dome more reliably. In the terminal

stage of landing, the vehicle maneuvers by the visual servoing command only (Fig. 4).

3.1 Color-Based Target Detection

The dome's color is the strongest visual cue that distinguishes the target from other objects in the background. In RGB coding, a pixel at image coordinate (x, y) has three integers, $(I_R, I_G, I_B)$, each varying from 0 to 255. As the pixel values that belong to the red dome vary widely depending on lighting condition, shadow, color balance, or noise, a filtering rule is needed to determine whether a pixel belongs to the dome or not. Based on many images of the dome taken under various conditions, the following filtering rule is proposed:

$$
\begin{aligned}
a\,I_B(x, y) &< I_R(x, y) \le 255 \\
b\,I_G(x, y) &< I_B(x, y) \le 255 \\
0 \le c &< I_R(x, y)
\end{aligned}
\tag{1}
$$

where (a, b, c) = (1.5, 1.5, 20). The threshold levels are chosen rather inclusively so that a wider color range is detected. Figure 5 visualizes the colors that qualify as the dome under Eq. 1.

Fig. 4 Proposed landing dome and a recovery net
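The filtering rule can be sketched per pixel as follows. This is a minimal illustration, not the authors' Visual C++/OpenCV code: the function names `is_dome_pixel` and `dome_mask` are hypothetical, the image is assumed to be a list of rows of 8-bit (R, G, B) tuples, and the three inequalities are taken exactly as printed in Eq. 1 (including the comparison of green against blue in the second line).

```python
def is_dome_pixel(i_r, i_g, i_b, a=1.5, b=1.5, c=20):
    """Apply the three inequalities of Eq. 1 to one 8-bit RGB pixel."""
    first = a * i_b < i_r <= 255    # a * I_B < I_R <= 255
    second = b * i_g < i_b <= 255   # b * I_G < I_B <= 255 (as printed in Eq. 1)
    third = 0 <= c < i_r            # 0 <= c < I_R
    return first and second and third


def dome_mask(image):
    """Return a binary mask (1 = passes Eq. 1) for rows of (R, G, B) tuples."""
    return [[1 if is_dome_pixel(*pixel) else 0 for pixel in row] for row in image]


if __name__ == "__main__":
    # Hypothetical 2 x 2 test image; the pixel values are made up for illustration.
    image = [
        [(200, 20, 60), (100, 100, 100)],
        [(15, 0, 5), (90, 200, 40)],
    ]
    print(dome_mask(image))
```

Only the first pixel passes all three tests; the gray pixel fails the first inequality, the dark pixel fails the brightness threshold c, and the green pixel fails the second inequality.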

3.2 Moment-Based Target Detection

When multiple red objects are found in a given image by the color-based detection described above, another classification is needed to determine whether an object is indeed the landing dome or not. For this, an image moment-based filtering is devised. Among many algorithms such as template matching or image moment comparison, Hu's method [8, 9] is used since it is computationally efficient for the high-rate detection needed for vision-based landing (Table 1).

Fig. 5 Full RGB color space (left) and selected region by filtering rule (right); axes: RED, GREEN, BLUE, with the thresholds a, b, and c marked

Fig. 6 Moment-based image processing procedure (original image, thresholded image, filled by morphology, result image)

Hu's moments consist of seven moment invariants derived from the central moments up to the third order. In a gray image with pixel intensities $I(x, y)$, the raw image moments $M_{ij}$ are calculated by

$$
M_{ij} = \sum_x \sum_y x^i y^j I(x, y). \tag{2}
$$

Simple image properties derived from the raw moments include the area of the image, $M_{00}$, and its centroid $\{\bar{x}, \bar{y}\} = \{M_{10}/M_{00},\ M_{01}/M_{00}\}$.

The central moments of a digital image are defined as

$$
\mu_{ij} = \sum_x \sum_y (x - \bar{x})^i (y - \bar{y})^j I(x, y). \tag{3}
$$
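The definitions of the raw moments, the centroid, and the central moments translate almost literally into code. The sketch below is illustrative only: the function names are hypothetical, and the image is assumed to be a list of rows so that `img[y][x]` is the intensity at (x, y).

```python
def raw_moment(img, i, j):
    """Raw moment M_ij: sum of x^i * y^j * I(x, y) over all pixels (Eq. 2)."""
    return sum(x ** i * y ** j * value
               for y, row in enumerate(img)
               for x, value in enumerate(row))


def centroid(img):
    """Centroid (x_bar, y_bar) = (M10 / M00, M01 / M00)."""
    m00 = raw_moment(img, 0, 0)
    return raw_moment(img, 1, 0) / m00, raw_moment(img, 0, 1) / m00


def central_moment(img, i, j):
    """Central moment of order (i, j), taken about the centroid (Eq. 3)."""
    x_bar, y_bar = centroid(img)
    return sum((x - x_bar) ** i * (y - y_bar) ** j * value
               for y, row in enumerate(img)
               for x, value in enumerate(row))


if __name__ == "__main__":
    # A 2 x 2 blob of ones inside a 4 x 3 binary image.
    blob = [
        [0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 1, 1, 0],
    ]
    print("area M00:", raw_moment(blob, 0, 0))
    print("centroid:", centroid(blob))
    print("mu20:", central_moment(blob, 2, 0))
```

For this blob the area is 4 and the centroid is (1.5, 1.5); each of the four pixels lies 0.5 away from the centroid in x, so the second-order central moment in x is 4 × 0.25 = 1.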

Table 2 Hu's moment values of various shapes in Fig. 6

| | Roof | Road sign | Automobile | Landing dome |
| --- | --- | --- | --- | --- |
| Hu's moment 1 | 0.48560 | 0.20463 | 0.27423 | 0.21429 |
| Hu's moment 2 | 0.18327 | 0.00071 | 0.04572 | 0.01806 |
| Hu's moment 3 | 0.01701 | 0.00009 | 0.00193 | 0.00106 |
| Hu's moment 4 | 0.00911 | 0.00028 | 0.00098 | 0.00007 |

Fig. 7 Application of Hu's method to domes seen from various viewpoints

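The central moments of order up to three are given as

$$
\begin{aligned}
\mu_{01} &= \mu_{10} = 0 \\
\mu_{11} &= M_{11} - \bar{x} M_{01} = M_{11} - \bar{y} M_{10} \\
\mu_{20} &= M_{20} - \bar{x} M_{10} \\
\mu_{02} &= M_{02} - \bar{y} M_{01} \\
\mu_{21} &= M_{21} - 2\bar{x} M_{11} - \bar{y} M_{20} + 2\bar{x}^2 M_{01} \\
\mu_{12} &= M_{12} - 2\bar{y} M_{11} - \bar{x} M_{02} + 2\bar{y}^2 M_{10} \\
\mu_{30} &= M_{30} - 3\bar{x} M_{20} + 2\bar{x}^2 M_{10} \\
\mu_{03} &= M_{03} - 3\bar{y} M_{02} + 2\bar{y}^2 M_{01}
\end{aligned}
\tag{4}
$$

Fig. 8 Autopilot loops for longitudinal (top) and lateral dynamics (bottom) of airplane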
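The closed-form expressions of Eq. 4 can be checked numerically against the direct definition of the central moments. The sketch below does this on a small made-up image; the function names and the test image are hypothetical and serve only as a sanity check of the formulas.

```python
def raw_moment(img, i, j):
    """Raw moment M_ij, with img[y][x] holding the intensity I(x, y)."""
    return sum(x ** i * y ** j * value
               for y, row in enumerate(img)
               for x, value in enumerate(row))


def central_direct(img, i, j):
    """Central moment computed directly from its definition."""
    m00 = raw_moment(img, 0, 0)
    x_bar = raw_moment(img, 1, 0) / m00
    y_bar = raw_moment(img, 0, 1) / m00
    return sum((x - x_bar) ** i * (y - y_bar) ** j * value
               for y, row in enumerate(img)
               for x, value in enumerate(row))


def central_from_raw(img):
    """Central moments up to third order from raw moments, following Eq. 4."""
    def m(i, j):
        return raw_moment(img, i, j)

    x_bar = m(1, 0) / m(0, 0)
    y_bar = m(0, 1) / m(0, 0)
    return {
        (1, 1): m(1, 1) - x_bar * m(0, 1),
        (2, 0): m(2, 0) - x_bar * m(1, 0),
        (0, 2): m(0, 2) - y_bar * m(0, 1),
        (2, 1): m(2, 1) - 2 * x_bar * m(1, 1) - y_bar * m(2, 0) + 2 * x_bar ** 2 * m(0, 1),
        (1, 2): m(1, 2) - 2 * y_bar * m(1, 1) - x_bar * m(0, 2) + 2 * y_bar ** 2 * m(1, 0),
        (3, 0): m(3, 0) - 3 * x_bar * m(2, 0) + 2 * x_bar ** 2 * m(1, 0),
        (0, 3): m(0, 3) - 3 * y_bar * m(0, 2) + 2 * y_bar ** 2 * m(0, 1),
    }


if __name__ == "__main__":
    # Asymmetric gray-level patch with made-up intensities.
    patch = [
        [1, 0, 0],
        [2, 0, 0],
        [1, 3, 1],
    ]
    for order, value in central_from_raw(patch).items():
        print(order, value, central_direct(patch, *order))
```

Both columns of the printout should agree up to floating-point rounding, which is what makes the raw-moment form attractive: the raw moments can be accumulated in a single pass over the image before the centroid is known.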


In order to make the moments invariant to both translation and changes in scale, each central moment is divided by the properly scaled zeroth central moment $\mu_{00}$:

$$
\eta_{ij} = \frac{\mu_{ij}}{\mu_{00}^{(i+j+2)/2}} \tag{5}
$$

Since the dome is projected as a shape somewhere between a semicircle and a full circle, its Hu's moments do not vary much with the distance and the viewing angle. Therefore, Hu's moments can be a good invariant characterization of the target shape. Using the second- and third-order moments given in Eq. 5, the following Hu's image moments, which are invariant to translation, rotation, and scale, are derived:

$$
\begin{aligned}
\phi_1 &= \eta_{20} + \eta_{02} \\
\phi_2 &= (\eta_{20} - \eta_{02})^2 + (2\eta_{11})^2 \\
\phi_3 &= (\eta_{30} - 3\eta_{12})^2 + (3\eta_{21} - \eta_{03})^2 \\
\phi_4 &= (\eta_{30} + \eta_{12})^2 + (\eta_{21} + \eta_{03})^2
\end{aligned}
\tag{6}
$$

In this paper, only four image moments are used for detection.
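To illustrate Eqs. 5 and 6, the sketch below computes the first four Hu's moments of a rasterized full disc and half disc, the two limiting projections of the dome mentioned above. It is a plain-Python illustration under stated assumptions, not the authors' OpenCV implementation: the helper names `hu_first_four` and `disc` are hypothetical, and the shapes are synthetic binary images.

```python
import math


def hu_first_four(img):
    """Return (phi1, phi2, phi3, phi4) of Eq. 6 for rows of pixel intensities."""
    pts = [(x, y, v) for y, row in enumerate(img) for x, v in enumerate(row) if v]
    m00 = sum(v for _, _, v in pts)          # equals mu_00
    x_bar = sum(x * v for x, _, v in pts) / m00
    y_bar = sum(y * v for _, y, v in pts) / m00

    def eta(i, j):
        # Central moment followed by the scale normalization of Eq. 5.
        mu = sum((x - x_bar) ** i * (y - y_bar) ** j * v for x, y, v in pts)
        return mu / m00 ** ((i + j + 2) / 2)

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    n30, n03, n21, n12 = eta(3, 0), eta(0, 3), eta(2, 1), eta(1, 2)
    return (
        n20 + n02,
        (n20 - n02) ** 2 + (2 * n11) ** 2,
        (n30 - 3 * n12) ** 2 + (3 * n21 - n03) ** 2,
        (n30 + n12) ** 2 + (n21 + n03) ** 2,
    )


def disc(radius, half=False):
    """Rasterize a filled disc, or only its upper half when half=True."""
    size = 2 * radius + 1
    return [
        [
            1 if (x - radius) ** 2 + (y - radius) ** 2 <= radius ** 2
            and (not half or y <= radius) else 0
            for x in range(size)
        ]
        for y in range(size)
    ]


if __name__ == "__main__":
    print("full disc, r = 40:", hu_first_four(disc(40)))
    print("half disc, r = 40:", hu_first_four(disc(40, half=True)))
    print("half disc, r = 20:", hu_first_four(disc(20, half=True)))
    print("ideal full disc phi1:", 1 / (2 * math.pi))
```

For an ideal full disc the first moment is 1/(2π) ≈ 0.159, and for an ideal half disc it is about 0.204, so the rasterized shapes should land close to these values regardless of the radius. This is in line with the first moment of the landing dome listed in Table 2 (0.21429).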

Hu's moments approach is applied to various red objects seen from the air as shown in Fig. 6, and their moment values are listed in Table 2. The rooftop and the automobile in Fig. 6 have relatively larger Hu's moments, while the road sign and the landing dome have comparable first moments due to their round shapes. The higher-order moments of the road sign have much smaller values than those of the landing dome since the road sign has a smaller area for a given number of pixels. Although the landing dome seen from directly above would look very similar to the road sign, such cases are excluded because the camera is mounted to see only the side of the dome during landing.

In Fig. 7, the image processing results of landing domes seen from various viewpoints are shown. Once a database of Hu's moments of these domes was built, the average, standard deviation, and minimum/maximum values for the landing dome could be determined. Based on these values, the blob with the largest area among the candidates in a given image is finally declared as the landing dome. If none of the candidates falls within the proper range of Hu's moments, the algorithm concludes that a landing dome does not exist in that image.
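The decision rule described above can be sketched as a range check followed by a largest-area pick. The sketch is illustrative only: the Hu's moment values are those of Table 2, but the blob areas and the acceptance ranges in `HYPOTHETICAL_RANGES` are made up for this example, since the actual ranges from the dome database are not given.

```python
# Hu's moments from Table 2; the areas (in pixels) are hypothetical.
EXAMPLE_BLOBS = [
    {"name": "roof", "area": 5200, "hu": (0.48560, 0.18327, 0.01701, 0.00911)},
    {"name": "road sign", "area": 300, "hu": (0.20463, 0.00071, 0.00009, 0.00028)},
    {"name": "automobile", "area": 900, "hu": (0.27423, 0.04572, 0.00193, 0.00098)},
    {"name": "landing dome", "area": 1500, "hu": (0.21429, 0.01806, 0.00106, 0.00007)},
]

# Hypothetical (min, max) acceptance range for each of the four moments.
HYPOTHETICAL_RANGES = [
    (0.19, 0.25),
    (0.005, 0.03),
    (0.0003, 0.003),
    (0.0, 0.0005),
]


def select_dome(blobs, ranges):
    """Return the largest blob whose four moments all lie in range, else None."""
    candidates = [
        blob for blob in blobs
        if all(low <= value <= high
               for value, (low, high) in zip(blob["hu"], ranges))
    ]
    return max(candidates, key=lambda blob: blob["area"], default=None)


if __name__ == "__main__":
    print(select_dome(EXAMPLE_BLOBS, HYPOTHETICAL_RANGES))
    # Without the dome in view, no candidate passes and None is returned.
    print(select_dome(EXAMPLE_BLOBS[:3], HYPOTHETICAL_RANGES))
```

With these made-up ranges the roof, despite having the largest area, is rejected by the moment test, which is the point of applying the shape check before the area comparison.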

Fig. 9 Visual servoing scheme (labels include the camera axes $X_C$, $Y_C$, $Z_C$, the target offsets $x_T$, $y_T$, and $f$)

Fig. 10 Experiment result of vision-based landing: vehicle altitude (altitude [m] versus time [sec]; curves: Kalman filter, GPS, and reference trajectory; the automatic landing stage is followed by the vision-based landing stage, with landing at t = 13.2 sec)

3.3 Visual Servoing

Visual servoing is a method of controlling a robot using computer vision: the robot is driven by the error between the features in the desired image and those in the current image. The visual servoing method can be applied to a fixed-wing UAV such that the vehicle is controlled by the pitch and roll angle errors estimated from an image, as shown in Fig. 8.

As illustrated in Fig. 9, the offset of the landing dome from the center of the image represents the heading and pitch deviation. With the target offsets $x_T$ and $y_T$ and the focal length $f$ labeled in Fig. 9, the two deviation angles are

$$
\arctan\frac{y_T}{f}, \qquad \arctan\frac{x_T}{f} \tag{7}
$$

Fig. 11 Experiment result of vision-based landing: 3D trajectory (axes: LCC x, LCC y [m], LCC alt [m])

Fig. 12 Experiment result of vision-based landing: vehicle attitude and heading (panels: roll, pitch, and yaw [deg] versus time [sec]; curves: Ref. and A/C)

Fig. 13 Experiment result: body velocity, body acceleration and angular rate (panels: $v_x$, $v_y$, $v_z$; $a_x$, $a_y$, $a_z$; $p$, $q$, $r$; versus time [sec])

When the heading and pitch angle errors are both zero (the landing dome appears at the center of the image), the vehicle flies directly toward the landing dome.

If the camera is mounted at the nose of the airplane so that the $Z_C$-axis in Fig. 9 aligns with the direction of flight, any heading and pitch angle deviations indicate that the heading of the vehicle deviates from the target point. Therefore, in order to guide the vehicle to the target point, the controllers should minimize these angles. This can be done simply by sending the heading and pitch deviation angles to the heading and pitch angle controllers. Since the pitch and yaw deviation angles computed from an image are sent directly to the controllers, the INS output is not utilized at all, which makes the approach robust to any failure in the INS or GPS. This scheme is surprisingly simple but highly effective, as will be shown in the experimental result.

Fig. 14 Sequences of images during landing to the dome (left) and the recovery net (right)
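The conversion from a pixel offset to a deviation angle can be sketched with a pinhole camera model. Several assumptions are made here and are not taken from the paper: the 70 deg field of view in Table 1 is treated as the horizontal field of view, the image width of 640 pixels is hypothetical, the focal length is expressed in pixels, and the horizontal offset is assumed to drive the heading loop while the vertical offset drives the pitch loop.

```python
import math


def focal_length_px(image_width_px, horizontal_fov_deg):
    """Focal length in pixels of a pinhole camera with the given horizontal FOV."""
    return (image_width_px / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)


def deviation_angles(x_t, y_t, f):
    """Return (heading_dev, pitch_dev) in radians for a target offset in pixels.

    x_t, y_t: offset of the dome from the image center (pixels).
    f: focal length in pixels. For f > 0, atan2(offset, f) equals arctan(offset / f).
    Assumption: the horizontal offset maps to heading and the vertical one to pitch.
    """
    return math.atan2(x_t, f), math.atan2(y_t, f)


if __name__ == "__main__":
    f = focal_length_px(640, 70.0)  # hypothetical 640-pixel-wide image
    for offset in [(0, 0), (80, -40), (320, 0)]:
        heading_dev, pitch_dev = deviation_angles(*offset, f)
        print(offset, math.degrees(heading_dev), math.degrees(pitch_dev))
```

A target at the image center gives zero deviation in both axes, and a target at the right edge of the image gives a heading deviation of half the field of view, which is a convenient sanity check for the chosen focal length.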

4 Vision-Based Landing Experiment Result

Using the experiment setup described in Section 2, the proposed vision-based landing algorithm with landing aids was tested. First, the inflated landing dome was installed in the test field. After the GPS was locked on and the INS was initialized, the vehicle was launched using a catapult at an airspeed in excess of 20 m/s. Once airborne, the vehicle was commanded to look for the landing dome in its view. When the dome was located in an image, the vehicle generated a glide slope from its current location to the roughly estimated location of the dome. During descent, the aircraft flew by visual servoing, and finally, it landed at the dome or at the recovery net safely.

In Fig. 10, the vehicle altitude recorded in the flight test is shown. Before t = 6.6 s, the vehicle follows the glide slope leading to the roughly estimated location of the landing dome. From t = 6.6 s to t = 13.2 s, the vehicle flies in the direct visual servoing mode. At t = 13.2 s, the vehicle safely makes contact with the landing net.

The reference altitude and the actual one are plotted in Fig. 10. The altitude of the landing dome was initially estimated to be 45 m according to the reference trajectory, but it turned out to be 50 m according to the altitude from the Kalman filter at the time of impact. This observation supports our approach of using direct visual servoing instead of blindly following the initial trajectory, which does contain errors large enough to miss the landing net. Figures 11, 12, and 13 show the vehicle's 3D trajectory and states. In Fig. 14, the images taken from the onboard camera are shown, and the green rectangles in the onboard images show that the landing dome was reliably detected during flight. The result also shows that the landing method using both the landing dome and the recovery net is feasible for recovering the airplane safely.

5 Conclusion

In this paper, a vision-based automatic landing method for fixed-wing unmanned aerial vehicles is presented. The dome's distinctive color and shape provide an unmistakably strong yet passive visual cue for the onboard vision system. The vision algorithm detects the landing dome reliably from onboard images using color- and moment-based detection methods. Since the onboard navigation system based on low-cost sensors cannot provide sufficiently accurate navigation solutions, direct visual servoing is implemented for precision guidance to the dome and the recovery net. The proposed idea has been validated in a series of experiments using a BWB airplane, showing that the proposed system is a viable approach for landing.

Acknowledgement The authors gratefully acknowledge the financial support of the Korea Ministry of Knowledge and Economy.

References

1. Williams, K.W.: A summary of unmanned aircraft accident/incident data: human factors implications. U.S. Department of Transportation Report, No. DOT/FAA/AM-04/24 (2004)

2. Manning, S.D., Rash, C.E., LeDuc, P.A., Noback, R.K., McKeon, J.: The role of human causal factors in U.S. Army unmanned aerial vehicle accidents. USAARL Report No. 2004-11 (2004)

3. Loegering, G.: Landing dispersion results: Global Hawk auto-land system. In: AIAA's 1st Technical Conference and Workshop on Unmanned Aerial Vehicles (2002)

4. Saripalli, S., Montgomery, J.F., Sukhatme, G.S.: Vision-based autonomous landing of an unmanned aerial vehicle. In: Proceedings of the IEEE International Conference on Robotics & Automation (2002)

5. Bourquardez, O., Chaumette, F.: Visual servoing of an airplane for auto-landing. In: Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems (2007)

6. Trisiripisal, P., Parks, M.R., Abbott, A.L., Liu, T., Fleming, G.A.: Stereo analysis for vision-based guidance and control of aircraft landing. In: 44th AIAA Aerospace Science Meeting and Exhibit (2006)

7. Barber, B., McLain, T., Edwards, B.: Vision-based landing of fixed-wing miniature air vehicles. In: AIAA Infotech@Aerospace Conference and Exhibit (2007)

8. Hu, M.-K.: Visual pattern recognition by moment invariants. In: IRE Transactions on Information Theory (1962)

9. Flusser, J.: Moment invariants in image analysis. In: Proceedings of World Academy of Science, Engineering, and Technology (2006)

Reprinted from the journal 231

You might also like

- Weld Cracking PDFDocument5 pagesWeld Cracking PDFjuanNo ratings yet

- Een Oen 498 - Pre ProposalDocument14 pagesEen Oen 498 - Pre Proposalapi-296881750No ratings yet

- Presented By: Ranjeet Singh Sachin AnandDocument24 pagesPresented By: Ranjeet Singh Sachin AnandSachin AnandNo ratings yet

- As400 Subfile Programming Part II Basic CodingDocument13 pagesAs400 Subfile Programming Part II Basic Codingmaka123No ratings yet

- Skimming and ScanningDocument17 pagesSkimming and ScanningDesi NataliaNo ratings yet

- Compressed Air System Design ManualDocument26 pagesCompressed Air System Design ManualBadrul HishamNo ratings yet

- Vdocuments - MX Sedecal HF Series X Ray Generator Service ManualDocument426 pagesVdocuments - MX Sedecal HF Series X Ray Generator Service ManualAndre Dupount .0% (1)

- Design and Devlopment of MAVSDocument66 pagesDesign and Devlopment of MAVSMatthew SmithNo ratings yet

- Intelligent Control of A Ducted-Fan VTOL UAV With Conventional Control SurfacesDocument285 pagesIntelligent Control of A Ducted-Fan VTOL UAV With Conventional Control SurfacesTushar KoulNo ratings yet

- Image-Based Face Detection and Recognition Using MATLABDocument4 pagesImage-Based Face Detection and Recognition Using MATLABIjcemJournal100% (1)

- Design and Development of Unmanned Ground and Aerial Vehicle With The Concept of Integration of Drone and RoverDocument10 pagesDesign and Development of Unmanned Ground and Aerial Vehicle With The Concept of Integration of Drone and RoverkoftamNo ratings yet

- 2021 - Design of A Small Qadrotor UAV and Modeling of An MPC-based SimulatorDocument96 pages2021 - Design of A Small Qadrotor UAV and Modeling of An MPC-based SimulatorterenceNo ratings yet

- Unmanned Aircraft SystemsFrom EverandUnmanned Aircraft SystemsElla AtkinsNo ratings yet

- Design and Development of UAV For Medical Application - Final Report PDFDocument88 pagesDesign and Development of UAV For Medical Application - Final Report PDFNagendraNo ratings yet

- Development of Isr For QuadcopterDocument9 pagesDevelopment of Isr For QuadcopterInternational Journal of Research in Engineering and TechnologyNo ratings yet

- VHDL Test Bench For Digital Image Processing SystemsDocument10 pagesVHDL Test Bench For Digital Image Processing SystemsMoazh TawabNo ratings yet

- Final Report OMNI WING AIRCRAFTDocument51 pagesFinal Report OMNI WING AIRCRAFTPrithvi AdhikaryNo ratings yet

- Addis Ababa University Addis Ababa Institute of Technology School of Electrical and Computer EngineeringDocument23 pagesAddis Ababa University Addis Ababa Institute of Technology School of Electrical and Computer Engineeringbegziew getnetNo ratings yet

- Autopilot 31Document51 pagesAutopilot 31Pramod Kumar100% (1)

- Subnetting ItexampracticeDocument12 pagesSubnetting ItexampracticeMohammad Tayyab KhusroNo ratings yet

- Design and Analysis of Cyclone Dust SeparatorDocument5 pagesDesign and Analysis of Cyclone Dust SeparatorAJER JOURNALNo ratings yet

- Drone Project: Physics 118 - Final ReportDocument13 pagesDrone Project: Physics 118 - Final ReportMahdi MiriNo ratings yet

- Addis Ababa University Addis Ababa Institute of Technology School of Electrical and Computer EngineeringDocument22 pagesAddis Ababa University Addis Ababa Institute of Technology School of Electrical and Computer Engineeringbegziew getnetNo ratings yet

- Brock Technologies Antenna Pointing System V2 BrochureDocument1 pageBrock Technologies Antenna Pointing System V2 BrochureUAVs AustraliaNo ratings yet

- Cyclone Calculation ToolDocument6 pagesCyclone Calculation ToolAli YasinNo ratings yet

- Validation of A Quad-Rotor Helicopter Matlab-Simulink and Solidworks Models - ABDocument6 pagesValidation of A Quad-Rotor Helicopter Matlab-Simulink and Solidworks Models - ABNancy RodriguezNo ratings yet

- Vdocuments - MX - Explain Tromp Curve Theorypdf PDFDocument4 pagesVdocuments - MX - Explain Tromp Curve Theorypdf PDFAli YasinNo ratings yet

- Oxygen CleaningDocument2 pagesOxygen CleaningAli Yasin0% (1)
