Computer Arithmetic

Uploaded by

shankar_mn1743

1. There are two types of arithmetic operations available in a computer. These are:

a. Integer Arithmetic

b. Real or floating point Arithmetic

2. Integer arithmetic deals with integer operands i.e., numbers without fractional parts.

3. Integer arithmetic is used mainly in counting and as subscripts.

4. Real arithmetic uses numbers with fractional parts as operands and is used in most computations.

5. In the normalized floating point mode of representing and storing real numbers, a real number is expressed as a combination of a mantissa and an exponent.

6. The mantissa is made less than 1 and greater than or equal to 0.1, and the exponent is the power of 10 by which the mantissa is multiplied.

7. In .4485E8, mantissa is .4485 and the exponent is 8.

8. E8 is used to represent 10^8.

9. The shifting of the mantissa to the left till its most significant digit is non-zero is called normalization.

10. When numbers are stored using normalized floating point notation (here with a four-digit mantissa and a two-digit exponent), the magnitudes that may be stored range from .1000 x 10^-99 to .9999 x 10^99.
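
The representation described in points 5 to 10 can be sketched in a few lines of Python. This is only an illustration: the function name `to_normalized`, the four-digit mantissa, and the choice to chop (rather than round) extra digits are assumptions, not something the notes specify.

```python
from decimal import Decimal, ROUND_DOWN


def to_normalized(text, digits=4):
    """Split a decimal string into (mantissa, exponent) with 0.1 <= |mantissa| < 1."""
    value = Decimal(text)
    if value == 0:
        return Decimal(0), 0
    # adjusted() is the power of 10 of the leading digit; one more than that
    # moves the decimal point to just before the leading digit.
    exponent = value.adjusted() + 1
    mantissa = value.scaleb(-exponent)
    # Assumption: digits beyond the mantissa length are chopped, not rounded.
    mantissa = mantissa.quantize(Decimal(1).scaleb(-digits), rounding=ROUND_DOWN)
    return mantissa, exponent


print(to_normalized("44850000"))   # (Decimal('0.4485'), 8), i.e. .4485E8
print(to_normalized("0.004485"))   # (Decimal('0.4485'), -2), i.e. .4485E-2
```

The sketch does not check that the exponent stays within the -99 to 99 range mentioned in point 10.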

11. If two numbers in normalized floating point notation are to be added, their exponents must first be made equal: the mantissa of the number with the smaller exponent is shifted right by the difference between the exponents.

12. The operation of subtraction is nothing but adding a negative number.

13. Two numbers are multiplied in the normalized floating point mode by multiplying the mantissas and adding the exponents. After the multiplication of the mantissas, the result mantissa is normalized as in the addition/subtraction operation and the exponent appropriately adjusted.

14. In dividing a number by another, the mantissa of the numerator is divided by that of the denominator. The denominator exponent is subtracted from the numerator exponent. The quotient mantissa is normalized to make the most significant digit non-zero and the exponent appropriately adjusted.
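
The four operations in points 11 to 14 can be sketched as follows. Numbers are held as hypothetical `(mantissa, exponent)` pairs; the four-digit mantissa (`DIGITS`), the helper names, and chopping of excess digits are assumptions made for the illustration, and exponent overflow or underflow is not checked.

```python
from decimal import Decimal, ROUND_DOWN

DIGITS = 4  # assumed mantissa length, matching the .4485E8 example
STEP = Decimal(1).scaleb(-DIGITS)  # 0.0001, the smallest mantissa increment


def normalize(mantissa, exponent):
    """Shift the mantissa so that 0.1 <= |mantissa| < 1 and chop it to DIGITS digits."""
    if mantissa == 0:
        return Decimal(0), 0
    shift = mantissa.adjusted() + 1  # places the decimal point must move left
    mantissa = mantissa.scaleb(-shift).quantize(STEP, rounding=ROUND_DOWN)
    return mantissa, exponent + shift


def add(a, b):
    """Add two (mantissa, exponent) pairs after equalizing their exponents."""
    (ma, ea), (mb, eb) = a, b
    if ea < eb:  # make `a` the operand with the larger exponent
        ma, ea, mb, eb = mb, eb, ma, ea
    # Shift the smaller operand right; digits pushed past DIGITS are lost.
    mb = mb.scaleb(eb - ea).quantize(STEP, rounding=ROUND_DOWN)
    return normalize(ma + mb, ea)


def subtract(a, b):
    """Subtract by adding the negated second operand."""
    return add(a, (-b[0], b[1]))


def multiply(a, b):
    """Multiply the mantissas, add the exponents, then normalize."""
    return normalize(a[0] * b[0], a[1] + b[1])


def divide(a, b):
    """Divide the mantissas, subtract the exponents, then normalize."""
    return normalize(a[0] / b[0], a[1] - b[1])


print(add((Decimal("0.4546"), 5), (Decimal("0.5433"), 7)))        # .5478E7
print(multiply((Decimal("0.5543"), 12), (Decimal("0.4111"), -15)))  # .2278E-3
```

In the addition, .4546E5 becomes .0045E7 before the mantissas are added, so the digits 46 are lost. In the multiplication, the exact mantissa product .22787273 is chopped to .2278.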

15. In performing numerical calculations three types of errors are encountered.

16. Errors due to the finite representation of numbers. For example, 1/3 is not exactly representable using a finite number of digits.

17. Errors due to arithmetic operations using normalized floating point numbers. Such errors are called rounding errors.

18. Errors due to finite representation of an inherently infinite process. For example, the use of a finite number of terms in the infinite series expansion of sin x, cos x, etc. Such errors are called truncation errors.
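
Truncation error, as in point 18, can be observed by summing only the first few terms of the series for sin x and comparing against a library value. The function name `sin_series` is hypothetical, and `math.sin` is used here as a stand-in for the "correct" value.

```python
import math


def sin_series(x, terms):
    """Sum the first `terms` terms of x - x^3/3! + x^5/5! - ..."""
    total = 0.0
    term = x  # first term of the series
    for k in range(terms):
        total += term
        # Each term is the previous one times -x^2 / ((2k+2)(2k+3)).
        term *= -x * x / ((2 * k + 2) * (2 * k + 3))
    return total


for n in (1, 2, 4, 8):
    approx = sin_series(1.0, n)
    print(n, approx, abs(approx - math.sin(1.0)))
```

The printed difference shrinks as more terms are kept, but with any finite number of terms some truncation error remains (until it falls below the rounding error of the arithmetic itself).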

19. There are two measures of accuracy of the results: a measure of absolute error and a measure of relative error.

20. If m1 is the correct value of a variable and m2 the value obtained by computation, then |m1 - m2| = ea is called the absolute error.

21. A measure of relative error is defined as |(m1 - m2)/m1| = |ea/m1| = er.
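
The two error measures in points 20 and 21 translate directly into code. The sketch below uses exact fractions so the results are not themselves affected by rounding; the function names are hypothetical, and the example values (1/3 stored as .3333) are chosen to match point 16.

```python
from fractions import Fraction


def absolute_error(m1, m2):
    """ea = |m1 - m2|, with m1 the correct value and m2 the computed value."""
    return abs(m1 - m2)


def relative_error(m1, m2):
    """er = |(m1 - m2) / m1|; undefined when the correct value m1 is zero."""
    return abs((m1 - m2) / m1)


correct = Fraction(1, 3)
computed = Fraction(3333, 10000)  # 1/3 chopped to four digits
print(absolute_error(correct, computed))  # 1/30000
print(relative_error(correct, computed))  # 1/10000
```

The relative error is often the more informative figure because it does not depend on the magnitude of the quantity being measured.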

22. Computers store numbers in binary form.
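
One consequence of binary storage is that some short decimal fractions, like 1/3 in decimal, have no exact finite representation. A small check, assuming Python floats are IEEE 754 double-precision values (true on common platforms, but an assumption here):

```python
from fractions import Fraction

stored = Fraction(0.1)  # the exact value of the binary number stored for 0.1
print(stored == Fraction(1, 10))  # False: 0.1 is not exactly representable
print(0.1 + 0.2 == 0.3)           # False: the small representation errors differ
```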

You might also like

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (890)

- Fuather, That Smid Govern-: Such Time As It May Deem Proper: TeDocument18 pagesFuather, That Smid Govern-: Such Time As It May Deem Proper: Tencwazzy100% (1)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Marriage Gift PolicyDocument4 pagesMarriage Gift PolicyGanesh Gaikwad100% (3)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- Civil 3dDocument210 pagesCivil 3dCapacitacion Topografica100% (1)

- Spiral Granny Square PatternDocument1 pageSpiral Granny Square PatternghionulNo ratings yet

- p2 - Guerrero Ch13Document40 pagesp2 - Guerrero Ch13JerichoPedragosa88% (17)

- American Woodworker No 171 April-May 2014Document76 pagesAmerican Woodworker No 171 April-May 2014Darius White75% (4)

- O-L English - Model Paper - Colombo ZoneDocument6 pagesO-L English - Model Paper - Colombo ZoneJAYANI JAYAWARDHANA100% (4)

- Project On International BusinessDocument18 pagesProject On International BusinessAmrita Bharaj100% (1)

- Cs On RH IncompatibilityDocument17 pagesCs On RH IncompatibilityRupali Arora100% (2)

- Ericsson 3G Chapter 5 (Service Integrity) - WCDMA RAN OptDocument61 pagesEricsson 3G Chapter 5 (Service Integrity) - WCDMA RAN OptMehmet Can KahramanNo ratings yet

- Cert PortDocument3 pagesCert Portshankar_mn17430% (1)

- CADD Centre-A Brief ProfileDocument2 pagesCADD Centre-A Brief Profileshankar_mn1743No ratings yet

- CaseApp Precise MoldDocument1 pageCaseApp Precise Moldshankar_mn1743No ratings yet

- Computational AlgorithmsDocument1 pageComputational Algorithmsshankar_mn1743No ratings yet

- MotionManager Animation SolidworksDocument38 pagesMotionManager Animation SolidworksSudeep Rkp0% (1)

- FLUENT IC Tut 01 Hybrid ApproachDocument30 pagesFLUENT IC Tut 01 Hybrid ApproachKarthik Srinivas100% (2)

- AC Design CorkeDocument406 pagesAC Design CorkeAdrian ArasuNo ratings yet

- Experiment Vit CDocument4 pagesExperiment Vit CinadirahNo ratings yet

- Design of Steel Structures Handout 2012-2013Document3 pagesDesign of Steel Structures Handout 2012-2013Tushar Gupta100% (1)

- Working With Session ParametersDocument10 pagesWorking With Session ParametersyprajuNo ratings yet

- Raptor SQ2804 Users Manual English v2.12Document68 pagesRaptor SQ2804 Users Manual English v2.12JaimeNo ratings yet

- Homer Christensen ResumeDocument4 pagesHomer Christensen ResumeR. N. Homer Christensen - Inish Icaro KiNo ratings yet

- Ejemplo FFT Con ArduinoDocument2 pagesEjemplo FFT Con ArduinoAns Shel Cardenas YllanesNo ratings yet

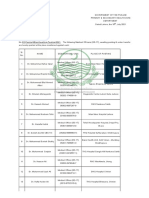

- Government of The Punjab Primary & Secondary Healthcare DepartmentDocument3 pagesGovernment of The Punjab Primary & Secondary Healthcare DepartmentYasir GhafoorNo ratings yet

- Module-1 STSDocument35 pagesModule-1 STSMARYLIZA SAEZNo ratings yet

- IS 2848 - Specition For PRT SensorDocument25 pagesIS 2848 - Specition For PRT SensorDiptee PatingeNo ratings yet

- Lending Tree PDFDocument14 pagesLending Tree PDFAlex OanonoNo ratings yet

- An Improved Ant Colony Algorithm and Its ApplicatiDocument10 pagesAn Improved Ant Colony Algorithm and Its ApplicatiI n T e R e Y eNo ratings yet

- EGMM - Training Partner MOUDocument32 pagesEGMM - Training Partner MOUShaik HussainNo ratings yet

- TSGE - TLGE - TTGE - Reduce Moment High Performance CouplingDocument6 pagesTSGE - TLGE - TTGE - Reduce Moment High Performance CouplingazayfathirNo ratings yet

- Cost Systems: TermsDocument19 pagesCost Systems: TermsJames BarzoNo ratings yet

- Robin Engine EH722 DS 7010Document29 pagesRobin Engine EH722 DS 7010yewlimNo ratings yet

- Irc SP 65-2005 PDFDocument32 pagesIrc SP 65-2005 PDFAjay Kumar JainNo ratings yet

- 3240-B0 Programmable Logic Controller (SIEMENS ET200S IM151-8)Document7 pages3240-B0 Programmable Logic Controller (SIEMENS ET200S IM151-8)alexandre jose dos santosNo ratings yet

- After EffectsDocument56 pagesAfter EffectsRodrigo ArgentoNo ratings yet

- MP & MC Module-4Document72 pagesMP & MC Module-4jeezNo ratings yet

- 2020 - Audcap1 - 2.3 RCCM - BunagDocument1 page2020 - Audcap1 - 2.3 RCCM - BunagSherilyn BunagNo ratings yet

- Introduction To Global Positioning System: Anil Rai I.A.S.R.I., New Delhi - 110012Document19 pagesIntroduction To Global Positioning System: Anil Rai I.A.S.R.I., New Delhi - 110012vinothrathinamNo ratings yet