Rajashree Y. Patil et al, / (IJCSIT) International Journal of Computer Science and Information Technologies, Vol. 3 (5), 2012, 5212 - 5214

A Review of Data Cleaning Algorithms for Data Warehouse Systems

Rajashree Y. Patil #, Dr. R.V. Kulkarni *

# Vivekanand College, Kolhapur, Maharashtra, India

* Shahu Institute of Business and Research (SIBER), Kolhapur, Maharashtra, India

Abstract: In today's competitive environment, there is a need for more precise information for better decision making. Yet inconsistency in the submitted data makes it difficult to aggregate data and analyze results, which may cause delays or compromise the data in the reporting of results. The purpose of this article is to study the different algorithms available to clean data, to meet the growing demand of industry and the need for more standardised data. Data cleaning algorithms can increase the quality of data while at the same time reducing the overall effort of data collection.

Keywords: ETL, FD, SNM-IN, SNM-OUT, ERACER

I. INTRODUCTION

Data cleaning is a method of correcting or removing information in a database that is wrong, incomplete, improperly formatted or duplicated. A business in a data-intensive field such as banking, insurance, trade, telecommunication or transportation might use a data cleaning algorithm to systematically inspect data for errors by using a set of rules, algorithms and look-up tables. A typical data cleaning tool consists of programs that are able to correct a number of specific types of errors or to detect duplicate records. Using an algorithm can save a database manager a substantial amount of time and can be less expensive than fixing errors manually.

Data cleaning is a vital undertaking for data warehouse experts, database managers and developers alike. Deduplication, validation and householding methods can be applied whether you are populating data warehouse components, incorporating recent data into an existing operational system or sustaining real-time deduplication efforts within an operational system. The objective is a high level of data accuracy and reliability that translates into enhanced customer service, lower expenses and peace of mind. Data is a priceless organisational asset that should be developed and refined to realise its full benefit. Deduplication guarantees that a single correct record is present for each business entity represented in a business transactional or analytic database. Validation guarantees that every attribute maintained for a specific record is accurate. Cleaning data before it is stored in a reporting database is essential to provide value to users of business intelligence applications. The cleaning procedure usually includes processes that prevent duplicate records from being reported by the system. Data analysis and data enrichment services can help improve the quality of data. These services include the aggregation, organisation and cleaning of data. They can ensure that your database-part and material files, product catalogue files, item information etc. are current, accurate and complete.

A data cleaning algorithm is a process used to identify inaccurate, incomplete or unreasonable data and then to improve quality by correcting the detected errors and omissions. The process may include format checks, completeness checks, reasonableness checks and limit checks; review of the data to identify outliers (geographic, statistical, temporal or environmental) or other errors; and assessment of data by subject area experts (e.g. taxonomic specialists). These processes usually result in flagging, documenting and subsequent checking and correction of suspect records. Validation checks may also involve checking for compliance against applicable standards, rules and conventions.
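The checks listed above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the record layout, the field names (`id`, `name`, `birth_date`, `postcode`), the six-digit postcode convention and the 120-year age limit are all hypothetical and do not come from any of the reviewed papers. The function flags suspect records rather than correcting them, in line with the flag, document, then correct workflow described above.

```python
from datetime import date

# Hypothetical rules for a customer record; field names and limits are assumptions.
REQUIRED_FIELDS = ("id", "name", "birth_date", "postcode")


def check_record(record, today=date(2012, 1, 1)):
    """Return a list of (check, message) flags raised for one record."""
    flags = []
    # Completeness check: every required field must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            flags.append(("completeness", f"missing {field}"))
    # Format check: the postcode must be exactly six digits (assumed convention).
    postcode = record.get("postcode") or ""
    if postcode and not (len(postcode) == 6 and postcode.isdigit()):
        flags.append(("format", f"bad postcode {postcode!r}"))
    # Reasonableness and limit checks on the birth date.
    birth = record.get("birth_date")
    if isinstance(birth, date):
        if birth > today:
            flags.append(("reasonableness", "birth date in the future"))
        elif today.year - birth.year > 120:
            flags.append(("limit", "age above 120 years"))
    return flags
```

A record that passes every check yields an empty list, so suspect records can be collected with a simple filter and handed to a subject area expert for review.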

Data cleaning approaches: In general, data cleaning involves several phases.

a. Data analysis: In order to detect which kinds of errors and inconsistencies are to be removed, a detailed data analysis is required. In addition to a manual inspection of the data or data samples, analysis programs should be used to gain metadata about the data properties and detect data quality problems.

b. Definition of transformation workflow and mapping rules: Depending on the number of data sources, their degree of heterogeneity and the dirtiness of the data, a large number of data transformation and cleaning steps may have to be executed.

c. Verification: The correctness and effectiveness of a transformation workflow and the transformation definitions should be tested and evaluated, e.g. on a sample or copy of the source data, to improve the definitions if necessary.

d. Transformation: Execution of the transformation steps, either by running the ETL workflow for loading and refreshing a data warehouse or while answering queries on multiple sources.

e. Backflow of cleaned data: After errors are removed, the cleaned data should also replace the dirty data in the original sources, in order to give legacy applications the improved data too and to avoid redoing the cleaning work from the data staging area.
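As an illustration of phase (a), an analysis program can derive simple metadata for each column. The sketch below is a minimal example, assuming rows are held as Python dictionaries; the function name `profile_column` and the choice of statistics are assumptions made for illustration only.

```python
from collections import Counter


def profile_column(rows, column):
    """Collect simple metadata about one column of a list of dict rows."""
    values = [row.get(column) for row in rows]
    # Treat None and the empty string as missing values.
    present = [v for v in values if v not in (None, "")]
    counts = Counter(present)
    return {
        "rows": len(values),
        "missing": len(values) - len(present),
        "distinct": len(counts),
        "most_common": counts.most_common(3),
    }
```

For a `city` column holding `"Kolhapur"`, `"kolhapur"`, `""` and `"Kolhapur"`, the profile reports one missing value and two distinct spellings, which hints at a case inconsistency to be handled in the transformation rules of phase (b).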

II. REVIEW OF LITERATURE

While a huge body of research deals with schema translation and schema integration, data cleaning has received only little attention in the research community. A number of authors have focused on the problem of data cleaning and suggested different algorithms to clean dirty data.

Weijie Wei, Mingwei Zhang, Bin Zhang, Xiaochun Tang

In this paper, a data cleaning method based on the

association rules is proposed. The new method adjusts the

basic business rules provided by the experts with

association rules mined from multi-data sources and

generates the advanced business rules for every data source.

Using this method, time is saved and the accuracy of the

data cleaning is improved.

Applied Brain and Vision Science: Data cleaning algorithm

This algorithm is designed for cleaning EEG data recorded during stationary behaviour, such as an eyes-open or eyes-closed data collection paradigm. The algorithm assumes that the useful information contained in the EEG data is stationary. That is, it assumes that there is very little change in the ongoing statistics of the signals of interest contained in the EEG data. Hence, this algorithm is optimal for removing momentary artifacts in EEG collected while a participant is in an eyes-closed or eyes-open state.

Yiqun Liu, Min Zhang, Liyun Ru, Shaoping Ma

They propose a learning-based algorithm for removing Web pages that are not likely to be useful for user requests. The results obtained show that retrieval target pages can be separated from low quality pages using query-independent features and cleansing algorithms. Their algorithm succeeds in removing 95% of web pages with less than 8% loss in retrieval target pages.

Helena Galhardas, Daniela Florescu, Dennis Shasha

This paper presents a language, an execution model and algorithms that enable users to express data cleaning specifications declaratively and perform the cleaning efficiently. As an example, they use a set of bibliographic references used to construct the Citeseer Web site. They propose a model to clean textual records so that meaningful queries can be performed.

Timothy Ohanekwu, C.I. Ezeife

This paper proposes a technique that eliminates the need to rely on a match threshold by defining smart tokens that are used for identifying duplicates. This approach also eliminates the need to compare entire long string records in multiple passes for duplicate identification.
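The general idea of comparing short tokens instead of long strings can be sketched as follows. The token rule used here (keep numeric parts as digits, reduce each word to its first letter, sort the parts) is an assumption made for illustration and is not claimed to be the exact token definition of the cited paper; `smart_token` and `find_duplicates` are hypothetical names.

```python
import re


def smart_token(value):
    """Build a short, order-insensitive token from a field value.

    Assumed rule (illustration only): numeric parts are kept as digits,
    alphabetic words are reduced to their first letter, and the parts
    are sorted so that word order does not matter.
    """
    parts = re.findall(r"[A-Za-z]+|\d+", value)
    tokens = [p if p.isdigit() else p[0].upper() for p in parts]
    return "".join(sorted(tokens))


def find_duplicates(records, fields):
    """Group record indices whose tokens agree on all chosen fields."""
    groups = {}
    for index, record in enumerate(records):
        key = tuple(smart_token(record[f]) for f in fields)
        groups.setdefault(key, []).append(index)
    # Only groups with more than one member are duplicate candidates.
    return [ids for ids in groups.values() if len(ids) > 1]
```

Because records are grouped by exact token equality, no similarity threshold has to be tuned, and each record is tokenised once rather than compared as a full string in several passes. The price of such a coarse rule is that unrelated values can collide on the same token, so the groups are best treated as candidates for review.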

Yu Qian, Kang Zhang

This article focuses on the role of visualization in effective data cleaning. It addresses a challenging issue in the use of visualization for data mining: choosing appropriate parameters for spatial data cleaning methods. On one hand, algorithm performance is improved through visualization. On the other hand, characteristics and properties of methods and features of data are visualised as feedback to the user.

Chris Mayfield, Jennifer Neville, Sunil Prabhakar

In the paper "A Statistical Method for Integrated Data Cleaning and Imputation" they focus on exploiting the statistical dependencies among tuples in relational domains such as sensor networks, supply chain systems and fraud detection. They identify potential statistical dependencies among the data values of related tuples and develop algorithms to automatically estimate these dependencies, using them to fill in missing values while at the same time identifying and correcting errors.

Data cleaning based on mathematical morphology: S. Tang

In the fields of bioinformatics and medical image understanding, data noise frequently occurs and degrades classification performance; therefore an effective data cleansing mechanism for the training data is often regarded as one of the major steps in real-world inductive learning applications. In this paper the related work on dealing with data noise is first reviewed, and then, based on the principles of mathematical morphology, morphological data cleansing algorithms are proposed, together with two concrete morphological algorithms.

Kazi Shah Nawaz Ripon, Ashiqur Rahman, G.M. Atiqur Rahaman

In this paper, they propose novel domain-independent techniques for better reconciling similar duplicate records. They also introduce new ideas for making similar-duplicate detection algorithms faster and more efficient. In addition, a significant modification of the transitive rule is proposed.

Payal Pahwa, Rajiv Arora, Garima Thakur

This paper addresses issues related to the detection and correction of duplicate records. It also analyses data quality and the various factors that degrade it. A brief analysis of existing techniques is presented, pointing out their major limitations, and a new framework is proposed that is an improvement over the existing technique.

R. Kavitha Kumar, Dr. RM. Chandrasekaran

In this paper they design two algorithms that use data mining techniques to correct attributes without an external reference. One is context-dependent attribute correction and the other is context-independent attribute correction.

Jie Gu

In this paper, they propose a combination of random forest based techniques and sampling methods to identify potential buyers. The method is mainly composed of two phases, data cleaning and classification, both based on random forest.

Surabhi Anand, Rinkle Rani

The World Wide Web is a monolithic repository of web pages that provides internet users with heaps of information. With the growth in the number and complexity of websites, the size of the web has become massive. This paper emphasizes the Web Usage Mining process and explores the field of data cleaning.

Li Zhao, Sung Sam Yuan, Sun Peng and Ling Tok Wang

They propose a new comparison method, LCSS, based on the longest common subsequence, and show that it possesses the desired properties. They also propose two new detection methods, SNM-IN and SNM-INOUT, which are variants of the popular detection method SNM.
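To make the two ingredients concrete, the sketch below combines a longest-common-subsequence similarity with the basic sorted neighbourhood method (SNM): records are sorted on a key and each record is compared only with its neighbours inside a sliding window. It does not implement SNM-IN or SNM-INOUT, and the normalisation of the LCS length, the window size and the threshold are assumptions chosen for illustration.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of strings a and b."""
    previous = [0] * (len(b) + 1)
    for ch_a in a:
        current = [0]
        for j, ch_b in enumerate(b, start=1):
            if ch_a == ch_b:
                current.append(previous[j - 1] + 1)
            else:
                current.append(max(previous[j], current[j - 1]))
        previous = current
    return previous[-1]


def lcs_similarity(a, b):
    """Normalise the LCS length to [0, 1]; this normalisation is an assumption."""
    if not a and not b:
        return 1.0
    return lcs_length(a, b) / max(len(a), len(b))


def sorted_neighbourhood(records, key, window=3, threshold=0.8):
    """Basic SNM: sort by key, then compare each record with the next window-1 records."""
    ordered = sorted(records, key=key)
    matches = []
    for i, left in enumerate(ordered):
        for right in ordered[i + 1 : i + window]:
            if lcs_similarity(key(left), key(right)) >= threshold:
                matches.append((left, right))
    return matches
```

With a window of size w, each record takes part in at most w - 1 comparisons after sorting, instead of being compared with every other record.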

Aye T.T.

This paper mainly focuses on the data pre-processing stage of the first phase of Web usage mining, with activities such as field extraction and data cleaning. The field extraction algorithm separates the fields of each single line of the log file. The data cleaning algorithm eliminates inconsistent or unnecessary items from the analysed data.
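A minimal sketch of these two steps is given below. It assumes the log uses the Common Log Format, and it treats failed requests and requests for images, style sheets and scripts as unnecessary items; both the format and the filtering rules are assumptions made for illustration, not details taken from the cited paper.

```python
import re

# Assumed input: Common Log Format, e.g.
# 10.0.0.1 - - [10/Oct/2011:13:55:36 +0530] "GET /index.html HTTP/1.1" 200 2326
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) \S+" (?P<status>\d{3}) (?P<size>\S+)'
)
# Hypothetical list of resource types that carry no usage information.
IGNORED_SUFFIXES = (".gif", ".jpg", ".png", ".css", ".js")


def extract_fields(line):
    """Field extraction: split one log line into named fields, or return None."""
    match = LOG_PATTERN.match(line)
    return match.groupdict() if match else None


def clean_log(lines):
    """Data cleaning: keep only successful requests for actual pages."""
    cleaned = []
    for line in lines:
        fields = extract_fields(line)
        if fields is None:
            continue  # unparseable line
        if fields["status"] != "200":
            continue  # failed or redirected request
        if fields["url"].lower().endswith(IGNORED_SUFFIXES):
            continue  # embedded resource, not a page view
        cleaned.append(fields)
    return cleaned
```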

Yan Cai-rong, Sun Gui-ning, Gao Nian-gao

By analysing the limitations of traditional knowledge base structures, an extended tree-like knowledge base is built by decomposing and recomposing the domain knowledge. Based on the knowledge base, a data cleaning algorithm is proposed. It first extracts atomic knowledge of the selected nodes, then analyses their relations, deletes

duplicate objects, and builds an atomic knowledge sequence based on weights.

Chris Mayfield, Jennifer Neville, Sunil Prabhakar

They present ERACER, an iterative statistical framework for inferring missing information and correcting errors automatically. Their approach is based on belief propagation and relational dependency networks, and includes an efficient approximate inference algorithm that is easily implemented in standard DBMSs using SQL and user-defined functions.

Mohamed, H.H.

They developed a system that uses the extract, transform and load (ETL) model as its main process model, to serve as a guideline for the implementation of the system. In addition, a parsing technique is used to identify dirty data. They selected the K-nearest neighbour algorithm for the data cleaning.
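The summary above does not say how the nearest-neighbour algorithm is applied, so the following is only one plausible use, sketched under assumptions: a missing numeric value is filled with the mean of that field over the k most similar complete records, with similarity measured by Euclidean distance on other numeric fields. The function name and the field names in the test data are hypothetical.

```python
import math


def knn_impute(records, target, features, k=3):
    """Fill missing `target` values with the mean of the k nearest complete records."""
    complete = [r for r in records if r.get(target) is not None]
    repaired = []
    for record in records:
        if record.get(target) is not None or not complete:
            repaired.append(dict(record))  # nothing to repair, copy as-is
            continue

        def distance(other):
            # Euclidean distance over the chosen feature fields.
            return math.sqrt(sum((record[f] - other[f]) ** 2 for f in features))

        nearest = sorted(complete, key=distance)[:k]
        estimate = sum(r[target] for r in nearest) / len(nearest)
        repaired.append({**record, target: estimate})
    return repaired
```

The function returns new dictionaries and leaves the input records untouched, so the dirty and repaired versions can be compared before the cleaned data is written back.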

Shawn R. Jeffery, Minos Garofalakis, Michael J. Franklin

In this paper they propose SMURF, the first declarative, adaptive smoothing filter for RFID data cleaning. SMURF models the unreliability of RFID readings by viewing RFID streams as a statistical sample of tags in the physical world, and exploits techniques grounded in sampling theory to drive its cleaning processes.

Manuel Castejon Limas, Joaquin B. Ordieres Mere, Francisco J.

A new method of outlier detection and data cleaning for both normal and non-normal multivariate data sets is proposed. It is based on an iterated local fit without a priori metric assumptions. They propose a new approach supported by finite mixture clustering, which provides good results with large data sets.

Kollayut Kaewbuadee, Yaowadee Temtanapat

In this research they developed a cleaning engine by combining an FD (functional dependency) discovery technique with a data cleaning technique, and used a query optimization feature called Selective Value to decrease the number of discovered FDs.
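To illustrate how a functional dependency can drive cleaning, the sketch below checks a single candidate dependency lhs -> rhs and reports the left-hand values that map to more than one right-hand value. It does not reproduce the FD discovery or the Selective Value technique of the cited work; the function name and the example attributes are assumptions.

```python
from collections import defaultdict


def fd_violations(rows, lhs, rhs):
    """Return the lhs values that violate the functional dependency lhs -> rhs.

    `lhs` is a list of attribute names and `rhs` a single attribute name.
    """
    seen = defaultdict(set)
    for row in rows:
        key = tuple(row[a] for a in lhs)
        seen[key].add(row[rhs])
    # A dependency holds only if each lhs value maps to exactly one rhs value.
    return {key: values for key, values in seen.items() if len(values) > 1}
```

For example, if `zip -> city` is expected to hold and the zip `416003` appears with both `Kolhapur` and `Kolapur`, that zip is reported together with the conflicting values, and the minority spelling becomes a candidate for correction.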

Data cleaning is a very young field of research. This review represents the current research and practices in data cleaning. One missing aspect in the research is the definition of a solid theoretical foundation that would support many of the existing approaches used in industrial settings. A number of data cleaning methods exist, each used to identify a particular type of error in data. Unfortunately, little basic research within the information systems and computer science communities has been conducted that directly relates to error detection and data cleaning. In-depth comparisons of data cleaning techniques and methods have not yet been published. Typically, much of the real data cleaning work is done in a customized, in-house manner. This behind-the-scenes process often results in the use of undocumented and ad hoc methods. Data cleaning is still viewed by many as a black art being done in the basement. Some concerted effort by the database and information systems groups is needed to address this problem. Future research directions include the investigation and integration of various methods to address error detection. The combination of knowledge-based techniques with more general approaches should be pursued. The ultimate goal of data cleaning research is to devise a set of general operators and theory that can be combined in well-formed statements to address data cleaning problems. This formal basis is necessary to design and construct high quality and useful software tools to support the data cleaning process.

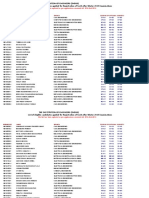

REFERENCES

[1] A Data Cleaning Method Based on Association Rules by Weijie Wei, Mingwei Zhang, Bin Zhang, www.atlantis-press.com

[2] Applied Brain and Vision Science: Data cleaning algorithm

[3] Data Cleansing for Web Information Retrieval using Query Independent Features by Yiqun Liu, Min Zhang, Liyun Ru, Shaoping Ma, www.thuir.cn

[4] An Extensible Framework for Data Cleaning by Helena Galhardas, Daniela Florescu, Dennis Shasha, Eric Simon

[5] A Token-Based Data Cleaning Technique for Data Warehouse by Timothy E. Ohanekwu, International Journal of Data Warehousing and Mining, Volume 1

[6] The role of visualisation in effective data cleaning by Yu Qian, Kang Zhang, Proceedings of the 2005 ACM Symposium on Applied Computing

[7] A Statistical Method for Integrating Data Cleaning and Imputation by Chris Mayfield, Jennifer Neville, Sunil Prabhakar, Purdue University (Computer Science report, 2009)

[8] Data cleansing based on mathematical morphology by Sheng Tang, published in ICBBE 2008, The Second International Conference, 2008

[9] A Domain Independent Data Cleaning Algorithm for detecting similar-duplicates by Kazi Shah Nawaz Ripon, Ashiqur Rahman and G.M. Atiqur Rahaman, Journal of Computer, Vol 5, No. 12, 2010

[10] P. Pahwa, An Efficient Algorithm for Data Cleaning, www.igiglobal.com, 2011.

[11] Attribute Correction-Data cleaning using Association Rule and Clustering Methods by R. Kavitha Kumar, Dr. RM. Chandrasekaran, IJDKP, Vol. 1, No. 2, March 2011.

[12] Random Forest Based Imbalanced Data Cleaning and Classification, Jie Gu, Lamda.nju.edu.cn

[13] Data Cleansing Based on Mathematical Morphology, S. Tang, 2008, ieeexplore.ieee.org. Bioinformatics and Biomedical Engineering, 2008. ICBBE 2008. The 2nd International Conference.

[14] An efficient Algorithm for Data Cleaning of Log File using File Extension, International Journal of Computer Applications 48(8):13-18, June 2012, Surabhi Anand, Rinkle Rani Aggarwal.

[15] A New Efficient Data Cleansing Method, Li Zhao, Sung Sam Yuan, Sun Peng and Ling Tok Wang, ftp10.us.freebsd.org

[16] Computer Research and Development (ICCRD), 2011, 3rd International Conference. Web log cleaning for mining of web usage patterns, T.T. Aye.

[17] Mass Data Cleaning Algorithm based on extended tree-like knowledge base, Yan Cai-rong, Sun Gui-ning, Gao Nian-gao, Computer Engineering and Application, 2010

[18] ERACER: A database approach for statistical inference and data cleaning, Chris Mayfield, Jennifer Neville, Sunil Prabhakar, w3.cs.jmu.edu

[19] Adaptive cleaning for RFID Data Streams by Shawn R. Jeffery, Minos Garofalakis, Michael J. Franklin, blg.itu.dk

[20] Outlier Detection and Data Cleaning in Multivariate Non-Normal Samples: The PAELLA Algorithm by Manuel Castejon Limas, Joaquin B. Ordieres Mere, Francisco J. Martinez de Pison Ascacibar and Eliseo P. Vergara Gonzalez

[21] Informatics and Computational Intelligence (ICI) 2011, Mohamed H.H., IEEE Xplore Digital Library. E-Clean: A Data Cleaning Framework for Patient Data
