CS771: GROUP-19

Project Report

Sentiment Analysis in Movie Reviews

Submitted in partial fulfillment of the requirements of the Machine Learning course

Submitted by

Jaimita Bansal (14111017), Dept. of CSE

Zahira Nasrin (14111047), Dept. of CSE

Rajat Kumar (14111028), Dept. of CSE

Sharin KG (14111033), Dept. of CSE

Under the guidance of

Dr. Harish Karnick

Department of Computer Science and Engineering

INDIAN INSTITUTE OF TECHNOLOGY KANPUR

Kanpur, Uttar Pradesh, India 208016

CS771 : Machine Learning Tools and

Techniques

Acknowledgments

We would like to express our sincere appreciation to our supervisor, Prof. Harish Karnick. This project would not have been possible without his guidance. We owe our knowledge of Machine Learning to his course CS771: Machine Learning Tools and Techniques.


Abstract

In this project, we tackle the problem of sentiment analysis, which has recently been an active area of research. We experiment with different text representations and machine learning algorithms, ranging from a simple Bag of Words model to more complex models such as GloVe, with the aim of predicting the sentiment of unseen reviews. We also discuss the performance of the different classifiers used and compare their results to find the best classifier configuration for this problem on the given dataset. To do this, we use the IMDB dataset, which provides a set of 25,000 highly polar movie reviews for training, 25,000 reviews for testing, and an additional 50,000 unlabeled reviews.


Table of Contents

1. Introduction

2. Dataset

3. Pre-processing

4. Approaches

4.1 Bag of Words

4.1.1 Results

4.2 Word2vec

4.2.1 Results

4.3 Doc2vec

4.3.1 Results

4.4 GloVe

4.4.1 Results

4.5 Perceptron

4.5.1 Results

5. References


1. Introduction

Sentiment analysis and classification is an area of text classification that has recently been receiving a lot of attention from researchers. It is formally defined as "the process of computationally identifying and categorizing opinions expressed in a piece of text, especially in order to determine whether the writer's attitude towards a particular topic, product, etc. is positive, negative, or neutral."

Sentiment analysis involves analyzing textual datasets that may contain opinions (e.g., social media posts or movie reviews) with the aim of classifying those opinions as positive, negative, or neutral. Classifying text by sentiment is considered more difficult than classifying it by content, because people often express their emotions in language obscured by sarcasm, ambiguity, and plays on words, all of which can be very misleading for both humans and computers.

Figure 1. Learning Task Diagram


2. Dataset

The labeled dataset consists of 50,000 IMDB movie reviews, specially selected for sentiment analysis. The sentiment of the reviews is binary: a review with an IMDB rating below 5 receives a sentiment score of 0, and a review with a rating of 7 or higher receives a sentiment score of 1. No individual movie has more than 30 reviews. The 25,000-review labeled training set does not include any of the same movies as the 25,000-review test set. In addition, another 50,000 IMDB reviews are provided without any rating labels.
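The labeling rule above can be written as a small function. This is a sketch, assuming (as the two thresholds imply) that reviews rated 5 or 6 receive no label; the function name `rating_to_sentiment` is hypothetical and not part of the dataset's tooling.

```python
from typing import Optional


def rating_to_sentiment(rating: int) -> Optional[int]:
    """Map an IMDB rating (1-10) to the binary sentiment label of the dataset."""
    if rating < 5:
        return 0  # negative review
    if rating >= 7:
        return 1  # positive review
    return None  # ratings 5 and 6 fall between the thresholds and get no label


# Usage: keep only the reviews that receive a label
ratings = [2, 5, 8, 10, 6]
labels = [rating_to_sentiment(r) for r in ratings]
labelled = [(r, s) for r, s in zip(ratings, labels) if s is not None]
```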

The data fields are:

| Field | Description |
|---|---|
| id | Unique ID of each review |
| sentiment | Sentiment of the review: 1 for positive, 0 for negative |
| review | Text of the review |

3. Pre-processing

As the reviews in the dataset contained many HTML tags, stop words, and punctuation symbols, we had to clean the data and pre-process the reviews to make them suitable for analysis.

We used the BeautifulSoup library to remove HTML tags and markup. Punctuation and other non-letter characters were removed with regular expressions from Python's re package. Finally, we obtained a list of English stop words from the Python Natural Language Toolkit (NLTK), filtered all of them out of our data corpus, and used stemming so that words with the same meaning are treated as one.

import re

from bs4 import BeautifulSoup
from nltk.corpus import stopwords
from nltk.stem import PorterStemmer, WordNetLemmatizer

def review_to_wordlist(review, remove_stopwords=False):
    # 1. Remove HTML tags and markup
    review_text = BeautifulSoup(review, "html.parser").get_text()
    # 2. Replace every non-letter character with a space
    review_text = re.sub("[^a-zA-Z]", " ", review_text)
    # 3. Convert words to lower case and split them
    words = review_text.lower().split()
    # 4. Drop tokens of one or two letters (this also removes single letters)
    newwords = [word for word in words if len(word) > 2]
    # 5. Optionally remove stop words (false by default)
    if remove_stopwords:
        stops = set(stopwords.words("english"))
        newwords = [w for w in newwords if w not in stops]
    # 6. Return a list of words
    return newwords

def stemPorter(review_text):
    # review_text is a list of tokenized reviews (each one a list of words)
    porter = PorterStemmer()
    lemmatizer = WordNetLemmatizer()
    preprocessed_docs = []
    for doc in review_text:
        # Lemmatize, then stem, so each word yields exactly one normalized token
        final_doc = [porter.stem(lemmatizer.lemmatize(word)) for word in doc]
        preprocessed_docs.append(final_doc)
    return preprocessed_docs

Code Snippet 1. Functions for Preprocessing

4. Approaches

4.1 Bag of Words

The bag-of-words model is a simplifying representation used in natural language processing and information retrieval. In this model, a text (such as a sentence or a document) is represented as the bag (multiset) of its words, disregarding grammar and even word order but keeping multiplicity.

The bag-of-words model is commonly used in document classification, where the occurrence of each word is used as a feature for training a classifier. The model focuses entirely on the words, or sometimes on short sequences of words, and usually pays no attention to context. It learns a vocabulary from all of the documents, then models each document by counting the number of times each vocabulary word appears in it. To get our bags of words, we count the number of times each word occurs in each review.

Because the IMDB data contains a very large number of reviews, it yields a large vocabulary. To limit the size of the feature vectors, we cap the vocabulary at a maximum size, trying several values, then build different models on these features and calculate their accuracy. We use these results as a baseline for further analysis.
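The two steps described above (learn a capped vocabulary, then count words per review) can be sketched in pure Python. This is a minimal sketch, assuming each review is already a list of tokens such as the output of `review_to_wordlist`; the function names `build_vocabulary` and `bag_of_words` are hypothetical.

```python
from collections import Counter


def build_vocabulary(documents, max_vocab_size):
    """Keep the max_vocab_size most frequent words over all documents."""
    counts = Counter(word for doc in documents for word in doc)
    return [word for word, _ in counts.most_common(max_vocab_size)]


def bag_of_words(document, vocabulary):
    """Count how many times each vocabulary word occurs in one document."""
    counts = Counter(document)
    return [counts[word] for word in vocabulary]


reviews = [["great", "movie", "great", "cast"], ["boring", "movie"]]
vocab = build_vocabulary(reviews, max_vocab_size=2)
features = [bag_of_words(review, vocab) for review in reviews]
```

In practice, scikit-learn's `CountVectorizer` offers the same vocabulary cap through its `max_features` parameter.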

4.1.1 Results

| S.No | Classifier | Accuracy (%) |
|---|---|---|
| 1 | Random Forest | 83.98 |
| 2 | Perceptron | 81.658 |
| 3 | SVM | 85.91 |

4.2 Word2vec

In 2013, Tomas Mikolov made some ripples by releasing word2vec, an unsupervised algorithm for learning vector representations of words. Word2Vec is a method inspired by deep learning, although the model itself is a shallow neural network, and it focuses on the meaning of words. It learns word vectors and word context vectors that capture meaning and semantic relationships among words.

How it works

The word2vec tool takes a text corpus as input and produces word vectors as output. It first constructs a vocabulary from the training text and then learns a vector representation for each word. The resulting word vectors can be used as features in many natural language processing and machine learning applications.

Word2Vec Algorithm

We use the documents to train a neural network model that maximizes the conditional probability of the context given the word. The goal is to find the parameters θ that maximize the conditional probability of a context c given a word w over the corpus:

$$\arg\max_{\theta} \prod_{(w, c) \in D} p(c \mid w; \theta)$$

where D is the set of all (w, c) pairs.

Then we apply the trained model to each word to get its corresponding vector.

Next, we calculate the vector of each sentence by averaging the vectors of its words. Comparing these sentence vectors pairwise gives a similarity matrix between the sentences, and PageRank is then used to score the sentences in the resulting graph.
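To make the word2vec objective concrete, the sketch below evaluates the logarithm of the product above, i.e. the sum of log p(c | w) over the pairs in D. It assumes a full softmax over dot products of word and context vectors; that parameterization is an assumption for illustration, since word2vec itself avoids the full softmax and uses hierarchical softmax or negative sampling. The function names and the dict-of-lists vector storage are hypothetical.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def log_prob_context(word_vec, context_vec, all_context_vecs):
    """log p(c | w) under an assumed full softmax over all context vectors."""
    scores = [dot(word_vec, c) for c in all_context_vecs]
    top = max(scores)  # subtract the maximum for numerical stability
    log_norm = top + math.log(sum(math.exp(s - top) for s in scores))
    return dot(word_vec, context_vec) - log_norm


def corpus_log_likelihood(pairs, word_vecs, context_vecs):
    """Sum of log p(c | w) over all (w, c) pairs in D; training maximizes this."""
    all_ctx = list(context_vecs.values())
    return sum(log_prob_context(word_vecs[w], context_vecs[c], all_ctx) for w, c in pairs)


word_vecs = {"good": [2.0, 0.0]}
context_vecs = {"movie": [1.0, 0.0], "plot": [0.0, 1.0]}
ll = corpus_log_likelihood([("good", "movie")], word_vecs, context_vecs)
```

Maximizing the sum of logs is equivalent to maximizing the product, and it avoids numerical underflow on large corpora.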


We used two different techniques on the vectors produced by the word2vec model:

1. Vector Averaging: since each word is represented as a vector in a 300-dimensional space, one method we tried was simply to average the word vectors in a given review.

2. Clustering: Word2Vec places semantically related words close to each other, so another approach we tried was to group the word vectors into clusters (with k-means) and exploit the similarity of words within a cluster.
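Both techniques above turn a variable-length review into a fixed-length feature vector. The sketch below assumes the word vectors are available as a plain dict mapping words to lists of floats, and that a word-to-cluster assignment has already been computed (for example by k-means); `average_word_vectors`, `bag_of_centroids`, and the toy 2-dimensional vectors are hypothetical.

```python
def average_word_vectors(words, model, dim):
    """Average the vectors of the words in a review that the model knows."""
    total = [0.0] * dim
    n_found = 0
    for word in words:
        if word in model:  # skip out-of-vocabulary words
            total = [t + v for t, v in zip(total, model[word])]
            n_found += 1
    if n_found == 0:
        return total  # no known words: fall back to the zero vector
    return [t / n_found for t in total]


def bag_of_centroids(words, word_to_cluster, n_clusters):
    """Count how many words of a review fall into each cluster."""
    counts = [0] * n_clusters
    for word in words:
        if word in word_to_cluster:
            counts[word_to_cluster[word]] += 1
    return counts


model = {"good": [1.0, 3.0], "film": [3.0, 1.0]}  # toy 2-d vectors
review = ["good", "film", "zzz"]
avg = average_word_vectors(review, model, dim=2)
centroid_counts = bag_of_centroids(review + ["good"], {"good": 0, "film": 1}, n_clusters=3)
```

Either output can be fed directly to the Random Forest, Perceptron, or SVM classifiers compared in the results.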

4.2.1 Results

1. Change in accuracy with number of trees (k-means)

[Figure: Random Forest, accuracy vs. number of trees]

2. Vector Averaging

| S.No | Classifier | Accuracy (%) |
|---|---|---|
| 1 | Random Forest | 84.748 |
| 2 | Perceptron | 83.456 |
| 3 | SVM | 86.424 |

3. Clustering

| S.No | Classifier | Accuracy (%) |
|---|---|---|
| 1 | Random Forest | 84.10 |
| 2 | Perceptron | 82.47 |
| 3 | SVM | 86.10 |

4. Change in accuracy with number of features (k-means)

[Figure: Random Forest, accuracy vs. number of features]

4.3 Doc2vec

Doc2vec (also known as paragraph2vec or sentence embeddings) modifies the word2vec algorithm for the unsupervised learning of continuous representations of larger blocks of text, such as sentences, paragraphs, or entire documents.

Algorithm

Our approach to learning paragraph vectors is inspired by the methods for learning word vectors. The inspiration is that the word vectors are asked to contribute to a prediction task about the next word in the sentence. So even though the word vectors are initialized randomly, they can eventually capture semantics as an indirect result of the prediction task. We use this idea for paragraph vectors in a similar manner: the paragraph vectors are also asked to contribute to the task of predicting the next word, given many contexts sampled from the paragraph.

In our Paragraph Vector framework, every paragraph is mapped to a unique vector, represented by a column in matrix D, and every word is also mapped to a unique vector, represented by a column in matrix W. The paragraph vector and word vectors are averaged or concatenated to predict the next word in a context. In the experiments, we use concatenation as the method to combine the vectors.
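A minimal sketch of the PV-DM prediction step described above, assuming plain Python lists for the matrices (one row per paragraph or word, rather than the columns used in the text) and a softmax layer without a bias term. The paragraph vector is concatenated with the context word vectors, and the softmax weights turn the result into a probability distribution over the vocabulary. The function name and argument layout are hypothetical.

```python
import math


def pv_dm_predict(D, W, U, paragraph_id, context_word_ids):
    """Softmax distribution over the next word for one PV-DM context.

    D: paragraph vectors, W: word vectors, U: softmax weights with one row per
    vocabulary word; each row is as long as the concatenated input.
    """
    # Concatenate the paragraph vector with the context word vectors
    h = list(D[paragraph_id])
    for word_id in context_word_ids:
        h.extend(W[word_id])
    scores = [sum(u * x for u, x in zip(row, h)) for row in U]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Toy model: p = q = 1, a two-word vocabulary, and a context of two words
D = [[math.log(3.0)]]
W = [[0.0], [1.0]]
U = [[1.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
probs = pv_dm_predict(D, W, U, paragraph_id=0, context_word_ids=[0, 1])
```

During training, the error of this distribution against the true next word is back-propagated into U, the context word vectors, and the paragraph vector.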


The paragraph token can be thought of as another word. It acts as a memory that remembers what is missing from the current context, or the topic of the paragraph. For this reason, the model is often called the Distributed Memory Model of Paragraph Vectors (PV-DM).

The contexts are fixed-length and sampled from a sliding window over the paragraph. The paragraph vector is shared across all contexts generated from the same paragraph but not across paragraphs. The word vector matrix W, however, is shared across paragraphs; for example, the vector for "powerful" is the same for all paragraphs.

The paragraph vectors and word vectors are trained using stochastic gradient descent, and the gradient is obtained via backpropagation. At every step of stochastic gradient descent, one can sample a fixed-length context from a random paragraph, compute the error gradient from the network, and use the gradient to update the parameters in our model.

At prediction time, an inference step is needed to compute the paragraph vector for a new paragraph. This vector is also obtained by gradient descent; in this step, the parameters for the rest of the model (the word vectors W and the softmax weights) are fixed. Suppose there are N paragraphs in the corpus and M words in the vocabulary, and we want to learn paragraph vectors such that each paragraph is mapped to p dimensions and each word is mapped to q dimensions. Then the model has a total of $N \cdot p + M \cdot q$ parameters (excluding the softmax parameters). Even though the number of parameters can be large when N is large, the updates during training are typically sparse and thus efficient.
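As a rough worked example of this count, take N = 100,000 paragraphs (one vector for each of the 25,000 training, 25,000 test, and 50,000 unlabeled reviews) and assume, purely for illustration, a vocabulary of M = 50,000 words and p = q = 100 dimensions:

$$N p + M q = 100{,}000 \times 100 + 50{,}000 \times 100 = 10^{7} + 5 \times 10^{6} = 1.5 \times 10^{7}$$

Under these assumed sizes, the paragraph vectors account for two-thirds of the 15 million parameters.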

After training, the paragraph vectors can be used as features for the paragraphs. We can feed these features directly to conventional machine learning techniques such as logistic regression, support vector machines, or random forests.

Advantages of paragraph vectors:

An important advantage of paragraph vectors is that they are learned from unlabeled data and thus can work well for tasks that do not have enough labeled data.

4.3.1 Results

1. Change in accuracy with the Random Forest classifier (Vector Averaging)

[Figure: Random Forest, accuracy vs. number of trees]

2. Vector Averaging

| S.No | Classifier | Accuracy (%) |
|---|---|---|
| 1 | Random Forest | 67.824 |
| 2 | Perceptron | 67.356 |
| 3 | SVM | 67.332 |

3. Vector Averaging

| S.No | Classifier | Accuracy (%) |
|---|---|---|
| 1 | Random Forest | 67.723 |
| 2 | Perceptron | 67.351 |
| 3 | SVM | 68.91 |

4.4 GloVe

GloVe (Global Vectors for Word Representation) is a tool released by Stanford NLP Group researchers Jeffrey Pennington, Richard Socher, and Chris Manning for learning continuous-space vector representations of words.

The GloVe authors present results suggesting that their tool is competitive with Google's popular word2vec package.

Theory

The GloVe model learns word vectors by examining word co-occurrences within a text corpus. Before we train the actual model, we need to construct a co-occurrence matrix X, where each cell $X_{ij}$ is a "strength" that represents how often word i appears in the context of word j.

We will produce vectors with a soft constraint that, for each pair of words i and j,

$$w_i^\top w_j + b_i + b_j = \log X_{ij}$$

where $w_i$ and $w_j$ are the vectors of words i and j, and $b_i$ and $b_j$ are scalar bias terms associated with words i and j, respectively. Intuitively speaking, we want to build word vectors that retain some useful information about how every pair of words i and j co-occur.

We do this by minimizing an objective function J, which evaluates the sum of all squared errors based on the above equation, weighted with a function f:

$$J = \sum_{i=1}^{V} \sum_{j=1}^{V} f(X_{ij}) \left( w_i^\top w_j + b_i + b_j - \log X_{ij} \right)^2$$

where V is the size of the vocabulary. We choose an f that helps prevent common word pairs (i.e., those with large $X_{ij}$ values) from skewing our objective too much:

$$f(X_{ij}) = \begin{cases} \left( X_{ij} / x_{\max} \right)^{\alpha} & \text{if } X_{ij} < x_{\max} \\ 1 & \text{otherwise} \end{cases}$$

When we encounter extremely common word pairs (where $X_{ij} \ge x_{\max}$), this function cuts off its normal output and simply returns 1. For all other word pairs, it returns some weight in the range (0, 1), where the distribution of weights in this range is decided by α.
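The weighting function f and the objective J above translate directly into code. This sketch assumes the co-occurrence matrix is stored sparsely as a dict from (i, j) to $X_{ij}$, and it uses the default values x_max = 100 and α = 3/4 reported in the GloVe paper; the function names are hypothetical. Zero entries are skipped because f(0) = 0 and log 0 is undefined.

```python
import math


def glove_weight(x, x_max=100.0, alpha=0.75):
    """Weighting function f(X_ij): (x / x_max) ** alpha below x_max, 1 otherwise."""
    return (x / x_max) ** alpha if x < x_max else 1.0


def glove_loss(cooc, vectors, biases, x_max=100.0, alpha=0.75):
    """Weighted least-squares objective J over the non-zero entries of X.

    cooc maps (i, j) -> X_ij; vectors[i] and biases[i] belong to word i.
    """
    J = 0.0
    for (i, j), x in cooc.items():
        dot = sum(a * b for a, b in zip(vectors[i], vectors[j]))
        diff = dot + biases[i] + biases[j] - math.log(x)
        J += glove_weight(x, x_max, alpha) * diff ** 2
    return J


X = {(0, 1): 100.0, (1, 0): 100.0}
loss_at_zero = glove_loss(X, [[0.0], [0.0]], [0.0, 0.0])
```

With all vectors and biases at zero, each entry contributes $f(X_{ij}) (\log X_{ij})^2$, which is why frequent pairs would dominate J without the cap at $x_{\max}$.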

For example, for a small set of nine preprocessed sentences with window=5, the resulting co-occurrence matrix is very sparse and symmetric. The GloVe algorithm then transforms such raw integer counts into a matrix where the co-occurrences are weighted based on their distance within the window (word pairs farther apart get less co-occurrence weight).
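The distance weighting described above can be sketched as follows: a pair of words that are d positions apart within the window contributes 1/d, which is the weighting used by the GloVe authors. This sketch assumes sentences are already tokenized lists and stores the sparse, symmetric matrix as a dict keyed by word pairs; `build_cooccurrence` is a hypothetical name.

```python
from collections import defaultdict


def build_cooccurrence(sentences, window=5):
    """Symmetric co-occurrence weights: a pair d positions apart adds 1/d.

    sentences: list of token lists. Returns a dict (word_i, word_j) -> weight.
    """
    X = defaultdict(float)
    for tokens in sentences:
        for center, word in enumerate(tokens):
            # Look only to the left; adding both (a, b) and (b, a) keeps X symmetric
            for dist in range(1, window + 1):
                left = center - dist
                if left < 0:
                    break
                X[(word, tokens[left])] += 1.0 / dist
                X[(tokens[left], word)] += 1.0 / dist
    return dict(X)


sentences = [["great", "acting", "great", "plot"], ["boring", "plot"]]
X = build_cooccurrence(sentences, window=5)
```

Only pairs that actually co-occur get an entry, so the dict stays as sparse as the matrix it represents.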

GloVe vs. Word2Vec

Word2vec is a bit hazy about what is going on underneath, whereas GloVe explicitly names the objective matrix, identifies the factorization, and provides some intuitive justification as to why this should give us working similarities.

Basically, GloVe precomputes the large word × word co-occurrence matrix in memory and then quickly factorizes it, whereas word2vec sweeps through the sentences in an online fashion, handling each co-occurrence separately. So there is a tradeoff between taking more memory (GloVe) and taking longer to train (word2vec).

GloVe can also re-use the co-occurrence matrix to quickly factorize with any dimensionality, whereas word2vec has to be trained from scratch after changing its embedding dimensionality.

Implementation of GloVe

Maciej Kula implemented GloVe in Python, using Cython for performance. Using his implementation, we can evaluate its performance and accuracy ourselves.

Fig. Sample Code to train GloVe in Python
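Independently of any particular library, the training step itself can be sketched in pure Python. This is a minimal sketch under stated assumptions: it uses the single-vector form of the constraint given earlier (one vector and one bias per word) and plain stochastic gradient descent, whereas the GloVe paper trains separate word and context vectors with AdaGrad. The function `train_glove` and its parameters are hypothetical, and this is not Maciej Kula's implementation.

```python
import math
import random


def train_glove(cooc, vocab_size, dim=5, epochs=100, lr=0.05,
                x_max=100.0, alpha=0.75, seed=0):
    """Fit vectors w_i and biases b_i so that w_i . w_j + b_i + b_j ~ log X_ij.

    cooc maps (i, j) -> X_ij > 0. Plain SGD on the weighted squared error.
    Returns (vectors, biases, loss accumulated over the last epoch).
    """
    rng = random.Random(seed)
    W = [[rng.uniform(-0.5, 0.5) / dim for _ in range(dim)] for _ in range(vocab_size)]
    b = [0.0] * vocab_size
    entries = list(cooc.items())
    epoch_loss = 0.0
    for _ in range(epochs):
        rng.shuffle(entries)
        epoch_loss = 0.0
        for (i, j), x in entries:
            weight = (x / x_max) ** alpha if x < x_max else 1.0  # f(X_ij)
            diff = sum(p * q for p, q in zip(W[i], W[j])) + b[i] + b[j] - math.log(x)
            epoch_loss += weight * diff ** 2
            step = lr * weight * diff  # the constant factor 2 is folded into lr
            for k in range(dim):
                wi, wj = W[i][k], W[j][k]  # read both before updating (i may equal j)
                W[i][k] -= step * wj
                W[j][k] -= step * wi
            b[i] -= step
            b[j] -= step
    return W, b, epoch_loss


cooc = {(0, 1): 4.0, (1, 0): 4.0, (0, 2): 1.0, (2, 0): 1.0, (1, 2): 2.0, (2, 1): 2.0}
_, _, first_epoch_loss = train_glove(cooc, vocab_size=3, epochs=1)
_, _, last_epoch_loss = train_glove(cooc, vocab_size=3, epochs=300)
```

Because both runs start from the same seed, comparing the loss of the first epoch with the loss after many epochs is a quick check that the updates reduce J.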

4.4.1 Results

The GloVe paper (http://nlp.stanford.edu/projects/glove/glove.pdf) suggests that, for the same corpus, vocabulary, window size, and training time, GloVe consistently outperforms word2vec. Our findings suggest that GloVe does not really outperform the original word2vec.

| S.No | Method Used | Classifier | Accuracy (%) |
|---|---|---|---|
| 1 | Clustering | Random Forest | 69.66 |
| 2 | Clustering | Perceptron | 79.9 |
| 3 | Clustering | SVM | 73.16 |
| 4 | Vector Avg | Random Forest | 74.71 |
| 5 | Vector Avg | Perceptron | 79.1 |
| 6 | Vector Avg | SVM | 79.59 |

Comparing Word2Vec with GloVe.

4.5 Perceptron

Invented in 1957 by cognitive psychologist Frank Rosenblatt, the perceptron algorithm was the first artificial neural network implemented in hardware. It is an algorithm for supervised classification of an input into one of two possible outputs. It is a type of linear classifier, i.e., a classification algorithm that makes its predictions based on a linear predictor function combining a set of weights with the feature vector. The algorithm allows for online learning, in that it processes the elements of the training set one at a time.

Weights

A perceptron works by assigning weights to its incoming connections. With the McCulloch-Pitts neuron, we took the sum of the values from the incoming connections and checked whether it was over or below a certain threshold. With the perceptron, we instead take the dot product of the input values and the weights.

def dot_product(features, weights):
    # features: sparse list of (index, value) pairs; weights: indexable by feature index
    dotp = 0
    for index, value in features:
        dotp += weights[index] * value
    return dotp

Code Snippet: Function for Dot Product

Learning

A perceptron is a supervised classifier. It learns by first making a prediction: is the dot product over or below the threshold? If it is over the threshold, the perceptron predicts 1; if it is below, it predicts 0.

Then the perceptron looks at the label of the sample. If the prediction was correct, the error is 0 and the weights are left alone. If the prediction was wrong, the error is either -1 or 1, and the perceptron updates the weights accordingly.

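from math import log

def train_one_pass(data, weights, opts):
    # One online pass over (features, label) pairs; features are sparse (index, value) pairs
    error_counter = 0
    for features, label in data:
        # Predict 1 if the dot product is over the 0.5 threshold, else 0
        dp = int(dot_product(features, weights) > 0.5)
        error = label - dp  # error is 1 if misclassified as 0, -1 if misclassified as 1
        if error != 0:
            error_counter += 1
            # Update the weights, normalizing feature values with log(1 + value)
            for index, value in features:
                weights[index] += opts["learning_rate"] * error * log(1. + value)
    return error_counter

Code Snippet: Updating Weights

Online learning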

The perceptron is capable of online learning (learning from samples one at a time). This is useful for larger datasets, since the entire dataset does not need to be held in memory.

Hashing trick

The vectorizing hashing trick originated with Vowpal Wabbit. This trick fixes the number of incoming connections to the perceptron at a fixed size: we hash every raw feature to an index lower than this fixed size.
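A minimal sketch of the hashing trick, assuming CRC32 from Python's zlib module as the hash function and 2**20 buckets; both choices, like the function name `hashed_features`, are illustrative assumptions rather than the configuration used in this project. Python's built-in `hash()` is avoided because string hashing is salted per process, which would change the feature indices between runs. The `use_bigrams` flag switches between the 1-gram and 2-gram settings compared in the results.

```python
import zlib


def hashed_features(tokens, n_buckets=2 ** 20, use_bigrams=True):
    """Map a token list to sparse (index, count) pairs with the hashing trick.

    Every unigram (and, optionally, every bigram) is hashed to an index in
    [0, n_buckets); colliding features simply share a bucket.
    """
    grams = list(tokens)
    if use_bigrams:
        grams += [first + " " + second for first, second in zip(tokens, tokens[1:])]
    counts = {}
    for gram in grams:
        # crc32 is stable across runs, unlike Python's salted built-in hash()
        index = zlib.crc32(gram.encode("utf-8")) % n_buckets
        counts[index] = counts.get(index, 0) + 1
    return sorted(counts.items())


review = ["not", "a", "good", "movie"]
unigram_features = hashed_features(review, use_bigrams=False)
bigram_features = hashed_features(review)
```

Bigrams such as "not good" let a linear model capture some negation, which unigrams alone cannot express.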

Progressive Validation Loss

Learning from samples one at a time also gives us a progressive training loss. When the model encounters a sample, it first makes a prediction without looking at the target. We then compare this prediction with the target label and calculate an error rate. A low error rate tells us we are close to a good model.

Normalization

We use a simple log(feature value + 1) for online normalization of feature values, just like Vowpal Wabbit.

Multiple passes

We can also run through the dataset multiple times, for as long as the error rate keeps decreasing. If the training data is linearly separable, the perceptron should eventually converge to 0 errors on the training set.
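The pieces above (online updates, progressive validation, log(1 + value) normalization, and multiple passes) can be combined into one short training loop. This is a sketch, assuming sparse (index, value) features such as those produced by a hashing step, zero weight initialization, the 0.5 threshold from the earlier snippets, and an early stop once a full pass makes no mistakes; `train_perceptron`, its parameters, and the toy data are hypothetical.

```python
import math


def dot_product(features, weights):
    """Dot product of sparse (index, value) features with a dense weight list."""
    return sum(weights[index] * value for index, value in features)


def train_perceptron(samples, n_weights, learning_rate=0.1, max_passes=10):
    """Online perceptron with progressive validation over several passes.

    samples: list of (features, label) with sparse features and label 0 or 1.
    Returns the weights and the progressive error rate of each pass.
    """
    weights = [0.0] * n_weights  # zero initialization
    history = []
    for _ in range(max_passes):
        mistakes = 0
        for features, label in samples:
            # Progressive validation: predict before the label is used for learning
            prediction = 1 if dot_product(features, weights) > 0.5 else 0
            error = label - prediction  # +1, 0 or -1
            if error != 0:
                mistakes += 1
                for index, value in features:
                    # log(1 + value) normalizes the raw feature value
                    weights[index] += learning_rate * error * math.log(1.0 + value)
        history.append(mistakes / len(samples))
        if mistakes == 0:  # a full pass without mistakes: further passes change nothing
            break
    return weights, history


# Toy data: feature 0 ~ "great", feature 1 ~ "awful", feature 2 ~ "plot"
samples = [([(0, 1.0)], 1), ([(1, 1.0)], 0),
           ([(0, 1.0), (2, 1.0)], 1), ([(1, 1.0), (2, 1.0)], 0)]
weights, history = train_perceptron(samples, n_weights=3, learning_rate=0.5)
```

On this linearly separable toy set, the progressive error rate drops from 0.5 in the first pass to 0 in the second, and training stops.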

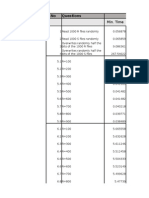

4.5.1 Results

| S.No | Weight Initialization | N-Gram | Accuracy (%) |
|---|---|---|---|
| 1 | Random | 1-gram | 93.24 |
| 2 | Zero | 1-gram | 93.67 |
| 3 | Random | 2-gram | 95.10 |
| 4 | Zero | 2-gram | 95.67 |

5. References

1. Kaggle Tutorials. http://www.kaggle.com/c/word2vec-nlp-tutorial

2. Word2Vec implementation tutorials. http://radimrehurek.com/2014/12/making-sense-of-word2vec/ and http://deeplearning4j.org/word2vec.html

3. Quoc Le and Tomas Mikolov. Distributed Representations of Sentences and Documents.

4. Perceptron tutorial. mlwave.com/online-learning-perceptron/

5. Doc2Vec implementation tutorials. radimrehurek.com/2014/12/doc2vec-tutorial/
