Ant Colony Optimisation and the Traveling Salesperson Problem: Hardness, Features and Parameter Settings

[Extended Abstract]

Samadhi Nallaperuma

Markus Wagner

Frank Neumann

School of Computer Science

The University of Adelaide

Adelaide, SA 5005, Australia

School of Computer Science

The University of Adelaide

Adelaide, SA 5005, Australia

School of Computer Science

The University of Adelaide

Adelaide, SA 5005, Australia

ABSTRACT

Our study on ant colony optimisation (ACO) and the Travelling Salesperson Problem (TSP) attempts to understand the effect of parameters and instance features on performance, using statistical analysis of the hard, easy and average problem instances for an algorithm instance.

2.

PRELIMINARIES

The TSP is one of the most famous NP-hard combinatorial optimisation problems. Given a set of $n$ cities $\{1, \ldots, n\}$ and a distance matrix $d = (d_{ij})$, $1 \le i, j \le n$, the goal is to compute a tour of minimal length that visits each city exactly once and returns to the origin.

In general, the TSP is not only NP-hard, but also hard to approximate. Therefore, we consider the still NP-hard Euclidean TSP, where cities are given by points in the plane and the Euclidean distance is used for distance computations.
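The definition above can be made concrete with a minimal Python sketch. The function names `distance_matrix` and `tour_length` and the four-city example are illustrative assumptions, not part of the study, and cities are indexed from 0 rather than from 1.

```python
import math

def distance_matrix(points):
    """Return d with d[i][j] the Euclidean distance between cities i and j."""
    return [[math.dist(p, q) for q in points] for p in points]

def tour_length(tour, d):
    """Length of the closed tour: visit each city once, then return to the start."""
    n = len(tour)
    return sum(d[tour[i]][tour[(i + 1) % n]] for i in range(n))

# Hypothetical example: four cities on the corners of the unit square.
points = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
d = distance_matrix(points)
square = tour_length([0, 1, 2, 3], d)   # walks around the perimeter
crossed = tour_length([0, 2, 1, 3], d)  # uses both diagonals, so it is longer
```

The perimeter tour has length 4, while the crossing tour has length 2 + 2√2, which shows how the visiting order alone determines the objective value.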

This study focuses on a well-known ACO algorithm called Max-Min Ant System [9]. In each iteration, every ant in the colony constructs an individual solution tour. These tours are constructed by visiting cities sequentially, according to a probabilistic formula that combines heuristic information and pheromone trails. Assume that ant $k$ is at node $i$ and needs to select the next city $j$ to visit. The selection formula applied is called the random proportional rule and is defined as

$$p_{ij} = \frac{[\tau_{ij}]^{\alpha} [\eta_{ij}]^{\beta}}{\sum_{h \in N_k} [\tau_{ih}]^{\alpha} [\eta_{ih}]^{\beta}}.$$

Here $N_k$ is the set of nodes not yet visited by ant $k$, and $\tau_{ih}$ and $\eta_{ih}$, raised to the exponents $\alpha$ and $\beta$, represent the pheromone and heuristic information respectively. A detailed description and analysis of this algorithm on the TSP can be found in the textbook of Dorigo and Stützle (Chapter 3) [2].
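As an illustration of the random proportional rule, the following Python sketch computes the selection probabilities for one ant and samples the next city. It is a sketch under stated assumptions, not the implementation used in the study: the heuristic value is assumed to be the inverse distance, eta = 1/d (the usual choice for the TSP, but not stated in this abstract), and the names `selection_probabilities` and `choose_next_city` are hypothetical.

```python
import random

def selection_probabilities(i, unvisited, tau, eta, alpha, beta):
    """Random proportional rule: probability of moving from city i to each unvisited j."""
    weights = {j: (tau[i][j] ** alpha) * (eta[i][j] ** beta) for j in unvisited}
    total = sum(weights.values())
    return {j: w / total for j, w in weights.items()}

def choose_next_city(i, unvisited, tau, eta, alpha, beta, rng=random):
    """Sample the next city according to the probabilities above."""
    probs = selection_probabilities(i, unvisited, tau, eta, alpha, beta)
    cities = list(probs)
    return rng.choices(cities, weights=[probs[j] for j in cities], k=1)[0]

# Hypothetical 3-city example: the ant is at city 0; cities 1 and 2 are unvisited.
d = [[0.0, 1.0, 2.0], [1.0, 0.0, 1.5], [2.0, 1.5, 0.0]]
# Assumption: heuristic information is the inverse distance (diagonal unused).
eta = [[0.0 if a == b else 1.0 / d[a][b] for b in range(3)] for a in range(3)]
tau = [[1.0] * 3 for _ in range(3)]  # uniform pheromone, as at the start of a run
probs = selection_probabilities(0, [1, 2], tau, eta, alpha=1, beta=2)
```

With uniform pheromone and beta = 2, the city at distance 1 receives weight 1 and the city at distance 2 receives weight 0.25, so the nearer city is chosen with probability 0.8.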

Categories and Subject Descriptors

D.2.8 [Software Engineering]: Metrics - complexity measures, performance measures; F.2 [Theory of Computation]: Analysis of Algorithms and Problem Complexity

Keywords

Ant Colony Optimisation, Traveling Salesperson Problem,

Features, Parameters

1.

INTRODUCTION

Throughout the history of heuristic optimisation, attempts have been made to analyse ACO algorithm performance theoretically [3, 8] and experimentally [6, 10]. We study ACO on hard problems from a novel perspective based on problem hardness features. Our study presents a hardness analysis for one of the most famous ACO algorithms, the Max-Min Ant System, on the well-studied Travelling Salesperson Problem (TSP). The aim of this research is to understand the impact of problem features and of algorithm parameters on algorithm performance. An evolutionary algorithm approach similar to the one by Mersmann et al. [4] is used to generate easy and hard instances for different parameter settings. Statistical features of these instances are investigated in order to determine the impact of problem structure on performance for a particular algorithm instance with a specified configuration. Furthermore, hard and easy instances of algorithm instances with different parameter settings are compared in order to understand the impact of parameters on performance. With this understanding, we will contribute to ACO research in the areas of algorithm design and parameter prediction.

3.

EXPERIMENTAL INVESTIGATIONS

We use an evolutionary algorithm to evolve easy and hard instances for the ant algorithms, similar to previous work by Smith-Miles et al. [7] on the Lin-Kernighan heuristic, by Mersmann et al. [4] on the local search 2-opt, and by Nallaperuma et al. [5] on two approximation algorithms. Evolutionary algorithms are based to a large extent on random decisions, so we use several runs of the algorithm to create a diverse set of hard and easy instances. The search is guided by the approximation quality of the solution: minimising this fitness evolves easy instances, and maximising it evolves hard instances.

The approximation ratio $\alpha_A(I)$ of an algorithm $A$ for a given instance $I$ is defined as

$$\alpha_A(I) = \frac{A(I)}{OPT(I)},$$

where $A(I)$ is the tour length produced by algorithm $A$ for the given instance $I$ and $OPT(I)$ is the value of an optimal solution of $I$. $OPT(I)$ is obtained by using the exact TSP solver Concorde [1].

Copyright is held by the author/owner(s).

GECCO'13 Companion, July 6–10, 2013, Amsterdam, The Netherlands.

ACM 978-1-4503-1964-5/13/07.

Our experimental setup is as follows. For each parameter setting, a set of random TSP instances is generated, and an evolutionary algorithm runs on them for 5000 generations to generate extreme sets of instances. In each iteration, the ACO algorithm is run several times on a single instance, and the average tour length is divided by the optimal tour length to obtain the approximation ratio. This entire process is carried out for instances of sizes 25, 50, 100 and 200, once with the goal of generating easy instances and once with the goal of generating hard instances.
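The loop described above can be sketched in Python as follows. This is a heavily simplified illustration, not the setup of the study: a (1+1)-style search with a single-city Gaussian mutation stands in for the evolutionary algorithm (whose operators are not given in this abstract), a randomised nearest-neighbour heuristic stands in for the ACO runs, and a brute-force search stands in for Concorde, which restricts the sketch to very small instances. All function names and the values of `runs`, `sigma`, `n` and `generations` are assumptions.

```python
import itertools
import math
import random

def tour_length(tour, pts):
    n = len(tour)
    return sum(math.dist(pts[tour[k]], pts[tour[(k + 1) % n]]) for k in range(n))

def optimal_length(pts):
    """Brute-force optimum; a stand-in for Concorde, feasible only for tiny n."""
    rest = range(1, len(pts))
    return min(tour_length((0,) + p, pts) for p in itertools.permutations(rest))

def randomised_solver(pts, rng):
    """Stand-in for one ACO run: nearest-neighbour tour from a random start city."""
    start = rng.randrange(len(pts))
    tour, unvisited = [start], set(range(len(pts))) - {start}
    while unvisited:
        nxt = min(unvisited, key=lambda j: math.dist(pts[tour[-1]], pts[j]))
        tour.append(nxt)
        unvisited.remove(nxt)
    return tour_length(tour, pts)

def approximation_ratio(pts, rng, runs=5):
    """Average tour length over several runs, divided by the optimal tour length."""
    mean_len = sum(randomised_solver(pts, rng) for _ in range(runs)) / runs
    return mean_len / optimal_length(pts)

def mutate(pts, rng, sigma=0.05):
    """Move one random city by Gaussian noise, clipped to the unit square."""
    new = list(pts)
    k = rng.randrange(len(new))
    x, y = new[k]
    new[k] = (min(1.0, max(0.0, x + rng.gauss(0, sigma))),
              min(1.0, max(0.0, y + rng.gauss(0, sigma))))
    return new

def evolve(n, generations, rng, hard=True):
    """Maximise the ratio for hard instances, minimise it for easy ones."""
    pts = [(rng.random(), rng.random()) for _ in range(n)]
    fit = approximation_ratio(pts, rng)
    for _ in range(generations):
        cand = mutate(pts, rng)
        cand_fit = approximation_ratio(cand, rng)
        accept = cand_fit >= fit if hard else cand_fit <= fit
        if accept:
            pts, fit = cand, cand_fit
    return pts, fit

rng = random.Random(42)  # fixed seed only to make the sketch repeatable
hard_pts, hard_ratio = evolve(n=6, generations=50, rng=rng, hard=True)
easy_pts, easy_ratio = evolve(n=6, generations=50, rng=rng, hard=False)
```

Because the stand-in solver is randomised, the fitness is noisy, which is why the ratio is averaged over several runs before an instance is accepted or rejected.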

The comprehensive feature set introduced by Mersmann et al. [4] is used in this study to analyse the generated hard and easy TSP instances. These features include distance features (edge cost distribution), angles between neighbours, nearest-neighbour statistics, mode, cluster and centroid features, as well as features representing minimum spanning tree heuristics and the convex hull. The parameters considered in this study are the most popular and critical ones in any ACO algorithm, namely the exponents $\alpha$ and $\beta$, which represent the influence of the pheromone trails and of the heuristic information respectively. We consider three parameter settings in our analysis: setting 1 with $\alpha = 1$ and $\beta = 2$, setting 2 with $\alpha = 0$ and $\beta = 4$, and setting 3 with $\alpha = 4$ and $\beta = 0$. The feature values of each instance set are analysed in detail with reference to the parameter setting it is based on. By comparing feature values of instances generated under different parameter settings, it is possible to understand the impact of the parameter settings on the performance of the algorithm. Moreover, a cross-comparison of different parameter configurations can reveal complementary capabilities of parameter settings. This is possible by running algorithm instances on each other's hard and easy instances.
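To give a feel for the kind of instance features analysed, the following Python sketch computes a few of them: nearest-neighbour distance statistics, the angle at each city between its two nearest neighbours, and the mean distance to the centroid. It is an illustrative approximation only; the exact feature definitions of Mersmann et al. [4] may differ, and the function and key names are assumptions.

```python
import math
import statistics

def nearest_neighbour_distances(pts):
    """Distance from each city to its closest other city."""
    return [min(math.dist(p, q) for j, q in enumerate(pts) if j != i)
            for i, p in enumerate(pts)]

def angles_to_two_nearest(pts):
    """Angle (radians) at each city between the directions to its two nearest neighbours."""
    angles = []
    for i, p in enumerate(pts):
        others = sorted((q for j, q in enumerate(pts) if j != i),
                        key=lambda q: math.dist(p, q))
        a, b = others[0], others[1]
        ang = abs(math.atan2(a[1] - p[1], a[0] - p[0])
                  - math.atan2(b[1] - p[1], b[0] - p[0]))
        angles.append(min(ang, 2 * math.pi - ang))  # keep the angle in [0, pi]
    return angles

def instance_features(pts):
    nn = nearest_neighbour_distances(pts)
    ang = angles_to_two_nearest(pts)
    cx = statistics.fmean([x for x, _ in pts])
    cy = statistics.fmean([y for _, y in pts])
    return {
        "nn_dist_mean": statistics.fmean(nn),
        "nn_dist_sd": statistics.stdev(nn),
        "angle_mean": statistics.fmean(ang),
        "angle_sd": statistics.stdev(ang),
        "centroid_dist_mean": statistics.fmean([math.dist(p, (cx, cy)) for p in pts]),
    }

# Hypothetical instance: the corners of the unit square.
features = instance_features([(0, 0), (1, 0), (1, 1), (0, 1)])
```

Computing such a feature dictionary for every evolved easy and hard instance, grouped by parameter setting and instance size, gives the values that are then compared across the instance sets.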

Our initial experimental results for the Max-Min algorithm with default parameters ($\alpha = 1$ and $\beta = 2$) show the following. The distances between cities on the optimal tour are more uniform in the hard instances than in the easy ones. The approximation ratio is very close to 1 for all the generated easy instances, whereas for the hard instances it is higher, ranging from 1.04 to 1.29. Optimal tours for the easy instances lead to higher angles than optimal tours of the hard instances. The standard deviation of the angles is significantly smaller for the easy instances than for the hard instances. These values decrease slightly with the instance size for both the hard and the easy instances. The shapes of small instances differ structurally from the shapes of larger instances.

Furthermore, the results for the second parameter setting ($\alpha = 0$, $\beta = 4$) support the above patterns. For example, the standard deviation of the angles to the next two nearest neighbours follows a similar pattern for the second parameter combination. However, for the third combination ($\alpha = 4$, $\beta = 0$) the standard deviation values increase with instance size, in contrast to the decreasing pattern under the other parameter settings. This clearly shows that the heuristic information parameter has a significant influence, as $\beta = 0$ in the third setting.

We have compared the performance of ACO with the three considered parameter settings on each other's hard and easy instances. In summary, our comparisons suggest that the first and second parameter settings complement each other, each performing better on the other's hard instances. The third parameter setting performed poorly compared to the other two settings. This provides insights into the impact of the heuristic information through the parameter $\beta$, as $\beta = 0$ effectively disables the heuristic information. These results reveal that, compared to pheromone information, heuristic information tends to play a more important role in ant tour construction. The second parameter setting, which lacks the parameter $\alpha$ representing pheromone information, could still perform competitively against the default parameter setting. However, we cannot argue that the missing parameter is the sole reason for the performance. For example, the extremely large value of $\alpha$ could impact tour construction significantly, thus causing the worse results of the third parameter setting.
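The effect of setting one exponent to zero can be read off directly from the random proportional rule. The short derivation below is added for illustration; it substitutes the second setting (alpha = 0, beta = 4) and the third setting (alpha = 4, beta = 0) into the general rule and uses only the symbols already defined in the preliminaries.

```latex
\begin{align*}
\text{general rule:}\quad
  p_{ij} &= \frac{[\tau_{ij}]^{\alpha}\,[\eta_{ij}]^{\beta}}
                 {\sum_{h \in N_k} [\tau_{ih}]^{\alpha}\,[\eta_{ih}]^{\beta}} \\[4pt]
\alpha = 0,\ \beta = 4:\quad
  p_{ij} &= \frac{[\eta_{ij}]^{4}}{\sum_{h \in N_k} [\eta_{ih}]^{4}}
  && \text{(pheromone trails have no influence)} \\[4pt]
\alpha = 4,\ \beta = 0:\quad
  p_{ij} &= \frac{[\tau_{ij}]^{4}}{\sum_{h \in N_k} [\tau_{ih}]^{4}}
  && \text{(heuristic information has no influence)}
\end{align*}
```

In the second case tour construction is a purely heuristic-driven randomised greedy choice, while in the third case it depends on the pheromone values alone, and the fourth power amplifies any differences between them.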

4.

FUTURE WORK

We have carried out an evolutionary algorithm approach to generate easy and hard instances for several parameter settings of a standard ACO algorithm on the Travelling Salesperson Problem. Hard and easy instances for several parameter settings have been analysed and compared. Future work will concentrate on using our insights for algorithm design and parameter prediction in ACO.

References

[1] D. Applegate, W. J. Cook, S. Dash, and A. Rohe. Solution of a Min-Max Vehicle Routing Problem. INFORMS Journal on Computing, 14(2):132–143, 2002.

[2] M. Dorigo and T. Stützle. Ant Colony Optimization. Bradford Company, 2004.

[3] T. Kötzing, F. Neumann, H. Röglin, and C. Witt. Theoretical analysis of two ACO approaches for the traveling salesman problem. Swarm Intelligence, 6:1–21, 2012.

[4] O. Mersmann, B. Bischl, H. Trautmann, M. Wagner, J. Bossek, and F. Neumann. A novel feature-based approach to characterize algorithm performance for the traveling salesperson problem. Annals of Mathematics and Artificial Intelligence, pages 1–32, 2013.

[5] S. Nallaperuma, M. Wagner, F. Neumann, B. Bischl, O. Mersmann, and H. Trautmann. A Feature-based Comparison of Local Search and the Christofides Algorithm for the Travelling Salesperson Problem. In Proceedings of the International Conference on Foundations of Genetic Algorithms (FOGA), 2013. To appear.

[6] P. Pellegrini, D. Favaretto, and E. Moretti. On MAX-MIN ant system's parameters. In Proceedings of the 5th International Conference on Ant Colony Optimization and Swarm Intelligence (ANTS), pages 203–214. Springer, 2006.

[7] K. Smith-Miles, J. van Hemert, and X. Y. Lim. Understanding TSP difficulty by learning from evolved instances. In Proceedings of the 4th International Conference on Learning and Intelligent Optimization (LION), pages 266–280. Springer, 2010.

[8] T. Stützle and M. Dorigo. A short convergence proof for a class of Ant Colony Optimization algorithms. IEEE Trans. on Evolutionary Computation, pages 358–365, 2002.

[9] T. Stützle and H. H. Hoos. MAX-MIN Ant system. Future Generation Computer Systems, 16(9):889–914, 2000.

[10] T. Stützle, M. López-Ibáñez, P. Pellegrini, M. Maur, M. Montes de Oca, M. Birattari, and M. Dorigo. Parameter Adaptation in Ant Colony Optimization. In Autonomous Search, pages 191–215. Springer, 2012.

You might also like

- Applying Kalman Filtering in Solving SSM Estimation Problem by The Means of EM Algorithm With Considering A Practical ExampleDocument8 pagesApplying Kalman Filtering in Solving SSM Estimation Problem by The Means of EM Algorithm With Considering A Practical ExampleJournal of ComputingNo ratings yet

- 90 102 PDFDocument15 pages90 102 PDFanon_962931433No ratings yet

- An Alternative Ranking Problem For Search Engines: 1 MotivationDocument22 pagesAn Alternative Ranking Problem For Search Engines: 1 MotivationbghermidaNo ratings yet

- SPE-172432-MS Guidelines For Uncertainty Assessment Using Reservoir Simulation Models For Green-And Brown-Field SituationsDocument15 pagesSPE-172432-MS Guidelines For Uncertainty Assessment Using Reservoir Simulation Models For Green-And Brown-Field SituationsDuc Nguyen HoangNo ratings yet

- OPTIMIZING BUILDING SIMULATIONDocument11 pagesOPTIMIZING BUILDING SIMULATIONtamann2004No ratings yet

- Université Libre de Bruxelles: Tuning MAX-MIN Ant System With Off-Line and On-Line MethodsDocument23 pagesUniversité Libre de Bruxelles: Tuning MAX-MIN Ant System With Off-Line and On-Line Methodslcm3766lNo ratings yet

- Framework For Particle Swarm Optimization With Surrogate FunctionsDocument11 pagesFramework For Particle Swarm Optimization With Surrogate FunctionsLucas GallindoNo ratings yet

- Solving Unique Shortest Path Problems Using Ant Colony OptimisationDocument26 pagesSolving Unique Shortest Path Problems Using Ant Colony Optimisationiskon_jskNo ratings yet

- Optimizatsiya Vnutrennih Poter V Elementah Konstruktsii Osnovannaya Na Statisticheskom Energeticheskom Metode Rascheta Vibratsii IDocument11 pagesOptimizatsiya Vnutrennih Poter V Elementah Konstruktsii Osnovannaya Na Statisticheskom Energeticheskom Metode Rascheta Vibratsii IKirill PerovNo ratings yet

- SMS Call PredictionDocument6 pagesSMS Call PredictionRJ RajpootNo ratings yet

- Approximation Models in Optimization Functions: Alan D Iaz Manr IquezDocument25 pagesApproximation Models in Optimization Functions: Alan D Iaz Manr IquezAlan DíazNo ratings yet

- Bearing Defect Identification Using Acoustic Emission SignalsDocument7 pagesBearing Defect Identification Using Acoustic Emission Signalsmhsgh2003No ratings yet

- Algorithms For Game MetricsDocument27 pagesAlgorithms For Game Metricsjmcs83No ratings yet

- 43 PDFDocument9 pages43 PDFarindamsinharayNo ratings yet

- Extended Process Algebra For Cost AnalysisDocument14 pagesExtended Process Algebra For Cost AnalysisijfcstjournalNo ratings yet

- An Algorithmic Approach To The OptimizatDocument22 pagesAn Algorithmic Approach To The OptimizatTodor GospodinovNo ratings yet

- Some Studies of Expectation Maximization Clustering Algorithm To Enhance PerformanceDocument16 pagesSome Studies of Expectation Maximization Clustering Algorithm To Enhance PerformanceResearch Cell: An International Journal of Engineering SciencesNo ratings yet

- PSO AlgorithmDocument8 pagesPSO AlgorithmMaahiSinghNo ratings yet

- Monte Carlo Techniques For Bayesian Statistical Inference - A Comparative ReviewDocument15 pagesMonte Carlo Techniques For Bayesian Statistical Inference - A Comparative Reviewasdfgh132No ratings yet

- Fuzzy C-Regression Model With A New Cluster Validity CriterionDocument6 pagesFuzzy C-Regression Model With A New Cluster Validity Criterion105tolgaNo ratings yet

- Christophe Andrieu - Arnaud Doucet Bristol, BS8 1TW, UK. Cambridge, CB2 1PZ, UK. EmailDocument4 pagesChristophe Andrieu - Arnaud Doucet Bristol, BS8 1TW, UK. Cambridge, CB2 1PZ, UK. EmailNeil John AppsNo ratings yet

- Hardware Implementation of Finite Fields of Characteristic ThreeDocument11 pagesHardware Implementation of Finite Fields of Characteristic ThreehachiiiimNo ratings yet

- Machine Learning With CaeDocument6 pagesMachine Learning With CaeHarsha KorlapatiNo ratings yet

- On Unbounded Path-Loss Models: Effects of Singularity On Wireless Network PerformanceDocument15 pagesOn Unbounded Path-Loss Models: Effects of Singularity On Wireless Network PerformanceSefineh KetemawNo ratings yet

- Multi-Dimensional Model Order SelectionDocument13 pagesMulti-Dimensional Model Order SelectionRodrigo RozárioNo ratings yet

- Efficient Calculation of SEA Input Parameters Using A Wave Based Substructuring TechniqueDocument16 pagesEfficient Calculation of SEA Input Parameters Using A Wave Based Substructuring Techniquereek_bhatNo ratings yet

- Paper 7-Analysis of Gumbel Model For Software Reliability Using Bayesian ParadigmDocument7 pagesPaper 7-Analysis of Gumbel Model For Software Reliability Using Bayesian ParadigmIjarai ManagingEditorNo ratings yet

- Egg Et Al 13Document5 pagesEgg Et Al 13Aya NofalNo ratings yet

- 03 0121Document6 pages03 0121trossouwNo ratings yet

- Coupling Mesh Morphing and Parametric Shape Optimization Using SimuoptiDocument7 pagesCoupling Mesh Morphing and Parametric Shape Optimization Using SimuoptiVikram MangaloreNo ratings yet

- Determination of Modal Residues and Residual Flexibility For Time-Domain System RealizationDocument31 pagesDetermination of Modal Residues and Residual Flexibility For Time-Domain System RealizationSamagassi SouleymaneNo ratings yet

- LIPIcs ESA 2018 1Document14 pagesLIPIcs ESA 2018 1Ibrahim MlaouhiNo ratings yet

- Modeling and Prediction of Wear Rate of Aluminum Alloy (Al 7075) Using Power Law and AnnDocument9 pagesModeling and Prediction of Wear Rate of Aluminum Alloy (Al 7075) Using Power Law and AnnSravan Kumar50No ratings yet

- Meeting RecognisionDocument4 pagesMeeting RecognisionpeterijNo ratings yet

- Parametric Query OptimizationDocument12 pagesParametric Query OptimizationJoseph George KonnullyNo ratings yet

- CosineSimilarityMetricLearning ACCV10Document13 pagesCosineSimilarityMetricLearning ACCV10iwei2005No ratings yet

- Chapter 22 Approximate Data StructuresDocument8 pagesChapter 22 Approximate Data StructuresAbdirazak MuftiNo ratings yet

- Statistical Analysis of Sound and Vibration Signals For Monitoring Rolling Element Bearing ConditionDocument16 pagesStatistical Analysis of Sound and Vibration Signals For Monitoring Rolling Element Bearing ConditionPradeep KunduNo ratings yet

- Mixture of Partial Least Squares Regression ModelsDocument13 pagesMixture of Partial Least Squares Regression ModelsalexeletricaNo ratings yet

- ChoiDocument12 pagesChoiXinCheng GuNo ratings yet

- Simulation OptimizationDocument6 pagesSimulation OptimizationAndres ZuñigaNo ratings yet

- A Sparse Matrix Approach To Reverse Mode AutomaticDocument10 pagesA Sparse Matrix Approach To Reverse Mode Automaticgorot1No ratings yet

- Approximation Algorithms and Decision MakingDocument21 pagesApproximation Algorithms and Decision MakingROHIT ROY PGP 2019-21 BatchNo ratings yet

- An ANFIS Based Fuzzy Synthesis Judgment For Transformer Fault DiagnosisDocument10 pagesAn ANFIS Based Fuzzy Synthesis Judgment For Transformer Fault DiagnosisDanh Bui CongNo ratings yet

- GA Local Search SA For Flow Shop Murata Et Al CIE1996Document11 pagesGA Local Search SA For Flow Shop Murata Et Al CIE1996Aswin RamachandranNo ratings yet

- Validación Formulario CMM y Software Evaluación ToleranciaDocument10 pagesValidación Formulario CMM y Software Evaluación ToleranciaAdolfo RodriguezNo ratings yet

- Denoising of Natural Images Based On Compressive Sensing: Chege SimonDocument16 pagesDenoising of Natural Images Based On Compressive Sensing: Chege SimonIAEME PublicationNo ratings yet

- Paper 12Document5 pagesPaper 12api-282905843No ratings yet

- Interatomic Potentials from First-Principles Calculations Using the Force-Matching MethodDocument7 pagesInteratomic Potentials from First-Principles Calculations Using the Force-Matching MethodMaria Jose Aliaga GosalvezNo ratings yet

- Quantum Data-Fitting: PACS Numbers: 03.67.-A, 03.67.ac, 42.50.DvDocument6 pagesQuantum Data-Fitting: PACS Numbers: 03.67.-A, 03.67.ac, 42.50.Dvohenri100No ratings yet

- SVM TSA of Power Systems Using Single Machine AttributesDocument5 pagesSVM TSA of Power Systems Using Single Machine Attributesrasim_m1146No ratings yet

- Aiml Case StudyDocument9 pagesAiml Case StudyAMAN PANDEYNo ratings yet

- Advanced Signal Processing Techniques For Wireless CommunicationsDocument43 pagesAdvanced Signal Processing Techniques For Wireless CommunicationsShobha ShakyaNo ratings yet

- Optimum Coordination of Overcurrent Relays Using SADE AlgorithmDocument10 pagesOptimum Coordination of Overcurrent Relays Using SADE AlgorithmJamile_P_NNo ratings yet

- Reliability Analysis of Large Structural SystemsDocument5 pagesReliability Analysis of Large Structural Systemsuamiranda3518No ratings yet

- Ancient language reconstruction using probabilistic modelsDocument30 pagesAncient language reconstruction using probabilistic modelsANo ratings yet

- Abstraction Selection in Model-Based Reinforcement LearningDocument10 pagesAbstraction Selection in Model-Based Reinforcement LearningBarbara Reis SmithNo ratings yet

- 99 01 009Document11 pages99 01 009Shubham SinghNo ratings yet

- Subsidiary For B.sc. Hons. - II Physics - Paper III Waves and OscillacationDocument2 pagesSubsidiary For B.sc. Hons. - II Physics - Paper III Waves and OscillacationSrijan SehgalNo ratings yet

- Ant Colony Optimization Based Feature Selection in Rough Set TheoryDocument4 pagesAnt Colony Optimization Based Feature Selection in Rough Set TheorySrijan SehgalNo ratings yet

- Number SystemsDocument24 pagesNumber SystemsShishupal SinghNo ratings yet

- Portfolio Optimization: Proceedings of The 2nd Fields-MITACS Industrial Problem-Solving Workshop, 2008Document13 pagesPortfolio Optimization: Proceedings of The 2nd Fields-MITACS Industrial Problem-Solving Workshop, 2008Srijan SehgalNo ratings yet

- Ant ColonyDocument7 pagesAnt ColonyJorge GomezNo ratings yet

- Comparison of Ant Colony Based Routing AlgorithmsDocument5 pagesComparison of Ant Colony Based Routing AlgorithmsSrijan SehgalNo ratings yet

- Comparative Analysis of Ant Colony and Particle Swarm Optimization TechniquesDocument6 pagesComparative Analysis of Ant Colony and Particle Swarm Optimization TechniquesSrijan SehgalNo ratings yet

- Computational Intelligence Applied On CryptologyDocument21 pagesComputational Intelligence Applied On CryptologySrijan SehgalNo ratings yet

- Geneticos Con HormigasDocument7 pagesGeneticos Con Hormigassistematico2011No ratings yet

- Career Path of an ActuaryDocument14 pagesCareer Path of an ActuarySrijan SehgalNo ratings yet

- Pheromone UpdateDocument16 pagesPheromone UpdateSrijan SehgalNo ratings yet

- 11 Boolean Algebra: ObjectivesDocument18 pages11 Boolean Algebra: ObjectivesS.L.L.CNo ratings yet

- Laws and Theorems of Boolean AlgebraDocument1 pageLaws and Theorems of Boolean AlgebraJeff Pratt100% (5)

- 555 TimerDocument10 pages555 Timerniroop12345No ratings yet

- Rural-Urban Education, Wage and Occupation Gaps in IndiaDocument24 pagesRural-Urban Education, Wage and Occupation Gaps in IndiaSrijan SehgalNo ratings yet

- Problems in ElectrodynamicsDocument18 pagesProblems in ElectrodynamicsSrijan SehgalNo ratings yet

- BSC (Hons) Physics Part III Electronic Devices VDocument4 pagesBSC (Hons) Physics Part III Electronic Devices VSrijan SehgalNo ratings yet

- B.sc. (Hons.) Physics Quantum Mechanics (PHHT-516) B.sc. (Hons) Physics SEM-V (6216)Document6 pagesB.sc. (Hons.) Physics Quantum Mechanics (PHHT-516) B.sc. (Hons) Physics SEM-V (6216)Srijan SehgalNo ratings yet

- Mathematical Statistics With Applications Solution Manual Chapter 1Document8 pagesMathematical Statistics With Applications Solution Manual Chapter 1yyyeochan58% (12)

- IDocument2 pagesIsometoiajeNo ratings yet

- Iq TestDocument9 pagesIq TestAbu-Abdullah SameerNo ratings yet

- Sarvali On DigbalaDocument14 pagesSarvali On DigbalapiyushNo ratings yet

- Do You Agree With Aguinaldo That The Assassination of Antonio Luna Is Beneficial For The Philippines' Struggle For Independence?Document1 pageDo You Agree With Aguinaldo That The Assassination of Antonio Luna Is Beneficial For The Philippines' Struggle For Independence?Mary Rose BaluranNo ratings yet

- Sentinel 2 Products Specification DocumentDocument510 pagesSentinel 2 Products Specification DocumentSherly BhengeNo ratings yet

- FranklinDocument4 pagesFranklinapi-291282463No ratings yet

- Inorganica Chimica Acta: Research PaperDocument14 pagesInorganica Chimica Acta: Research PaperRuan ReisNo ratings yet

- Malware Reverse Engineering Part 1 Static AnalysisDocument27 pagesMalware Reverse Engineering Part 1 Static AnalysisBik AshNo ratings yet

- Game Rules PDFDocument12 pagesGame Rules PDFEric WaddellNo ratings yet

- Polytechnic University Management Services ExamDocument16 pagesPolytechnic University Management Services ExamBeverlene BatiNo ratings yet

- Quantification of Dell S Competitive AdvantageDocument3 pagesQuantification of Dell S Competitive AdvantageSandeep Yadav50% (2)

- Sewage Pumping StationDocument35 pagesSewage Pumping StationOrchie DavidNo ratings yet

- Important Instructions To Examiners:: Calculate The Number of Address Lines Required To Access 16 KB ROMDocument17 pagesImportant Instructions To Examiners:: Calculate The Number of Address Lines Required To Access 16 KB ROMC052 Diksha PawarNo ratings yet

- Difference Between Mark Up and MarginDocument2 pagesDifference Between Mark Up and MarginIan VinoyaNo ratings yet

- Unit 3 Computer ScienceDocument3 pagesUnit 3 Computer ScienceradNo ratings yet

- تاااتتاااDocument14 pagesتاااتتاااMegdam Sameeh TarawnehNo ratings yet

- Free Radical TheoryDocument2 pagesFree Radical TheoryMIA ALVAREZNo ratings yet

- Bharhut Stupa Toraa Architectural SplenDocument65 pagesBharhut Stupa Toraa Architectural Splenအသွ်င္ ေကသရNo ratings yet

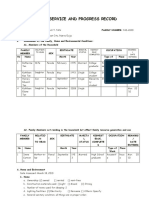

- Family Service and Progress Record: Daughter SeptemberDocument29 pagesFamily Service and Progress Record: Daughter SeptemberKathleen Kae Carmona TanNo ratings yet

- BIBLIO Eric SwyngedowDocument34 pagesBIBLIO Eric Swyngedowadriank1975291No ratings yet

- Briana SmithDocument3 pagesBriana SmithAbdul Rafay Ali KhanNo ratings yet

- System: Boehringer Mannheim/Hitachi AnalysisDocument20 pagesSystem: Boehringer Mannheim/Hitachi Analysismaran.suguNo ratings yet

- Yellowstone Food WebDocument4 pagesYellowstone Food WebAmsyidi AsmidaNo ratings yet

- Lifespan Development Canadian 6th Edition Boyd Test BankDocument57 pagesLifespan Development Canadian 6th Edition Boyd Test Bankshamekascoles2528zNo ratings yet

- Service and Maintenance Manual: Models 600A 600AJDocument342 pagesService and Maintenance Manual: Models 600A 600AJHari Hara SuthanNo ratings yet

- Electronics Ecommerce Website: 1) Background/ Problem StatementDocument7 pagesElectronics Ecommerce Website: 1) Background/ Problem StatementdesalegnNo ratings yet

- August 03 2017 Recalls Mls (Ascpi)Document6 pagesAugust 03 2017 Recalls Mls (Ascpi)Joanna Carel Lopez100% (3)

- Panel Data Econometrics: Manuel ArellanoDocument5 pagesPanel Data Econometrics: Manuel Arellanoeliasem2014No ratings yet

- Audit Acq Pay Cycle & InventoryDocument39 pagesAudit Acq Pay Cycle & InventoryVianney Claire RabeNo ratings yet

- Oxford Digital Marketing Programme ProspectusDocument12 pagesOxford Digital Marketing Programme ProspectusLeonard AbellaNo ratings yet

- ChatGPT Side Hustles 2024 - Unlock the Digital Goldmine and Get AI Working for You Fast with More Than 85 Side Hustle Ideas to Boost Passive Income, Create New Cash Flow, and Get Ahead of the CurveFrom EverandChatGPT Side Hustles 2024 - Unlock the Digital Goldmine and Get AI Working for You Fast with More Than 85 Side Hustle Ideas to Boost Passive Income, Create New Cash Flow, and Get Ahead of the CurveNo ratings yet

- Scary Smart: The Future of Artificial Intelligence and How You Can Save Our WorldFrom EverandScary Smart: The Future of Artificial Intelligence and How You Can Save Our WorldRating: 4.5 out of 5 stars4.5/5 (54)

- Artificial Intelligence: A Guide for Thinking HumansFrom EverandArtificial Intelligence: A Guide for Thinking HumansRating: 4.5 out of 5 stars4.5/5 (30)

- Generative AI: The Insights You Need from Harvard Business ReviewFrom EverandGenerative AI: The Insights You Need from Harvard Business ReviewRating: 4.5 out of 5 stars4.5/5 (2)

- The Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our WorldFrom EverandThe Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our WorldRating: 4.5 out of 5 stars4.5/5 (107)

- Artificial Intelligence: The Insights You Need from Harvard Business ReviewFrom EverandArtificial Intelligence: The Insights You Need from Harvard Business ReviewRating: 4.5 out of 5 stars4.5/5 (104)

- ChatGPT Money Machine 2024 - The Ultimate Chatbot Cheat Sheet to Go From Clueless Noob to Prompt Prodigy Fast! Complete AI Beginner’s Course to Catch the GPT Gold Rush Before It Leaves You BehindFrom EverandChatGPT Money Machine 2024 - The Ultimate Chatbot Cheat Sheet to Go From Clueless Noob to Prompt Prodigy Fast! Complete AI Beginner’s Course to Catch the GPT Gold Rush Before It Leaves You BehindNo ratings yet

- How to Make Money Online Using ChatGPT Prompts: Secrets Revealed for Unlocking Hidden Opportunities. Earn Full-Time Income Using ChatGPT with the Untold Potential of Conversational AI.From EverandHow to Make Money Online Using ChatGPT Prompts: Secrets Revealed for Unlocking Hidden Opportunities. Earn Full-Time Income Using ChatGPT with the Untold Potential of Conversational AI.No ratings yet

- ChatGPT Millionaire 2024 - Bot-Driven Side Hustles, Prompt Engineering Shortcut Secrets, and Automated Income Streams that Print Money While You Sleep. The Ultimate Beginner’s Guide for AI BusinessFrom EverandChatGPT Millionaire 2024 - Bot-Driven Side Hustles, Prompt Engineering Shortcut Secrets, and Automated Income Streams that Print Money While You Sleep. The Ultimate Beginner’s Guide for AI BusinessNo ratings yet

- AI Money Machine: Unlock the Secrets to Making Money Online with AIFrom EverandAI Money Machine: Unlock the Secrets to Making Money Online with AINo ratings yet

- Who's Afraid of AI?: Fear and Promise in the Age of Thinking MachinesFrom EverandWho's Afraid of AI?: Fear and Promise in the Age of Thinking MachinesRating: 4.5 out of 5 stars4.5/5 (12)

- Mastering Large Language Models: Advanced techniques, applications, cutting-edge methods, and top LLMs (English Edition)From EverandMastering Large Language Models: Advanced techniques, applications, cutting-edge methods, and top LLMs (English Edition)No ratings yet

- Artificial Intelligence: The Complete Beginner’s Guide to the Future of A.I.From EverandArtificial Intelligence: The Complete Beginner’s Guide to the Future of A.I.Rating: 4 out of 5 stars4/5 (15)

- 100+ Amazing AI Image Prompts: Expertly Crafted Midjourney AI Art Generation ExamplesFrom Everand100+ Amazing AI Image Prompts: Expertly Crafted Midjourney AI Art Generation ExamplesNo ratings yet

- 2084: Artificial Intelligence and the Future of HumanityFrom Everand2084: Artificial Intelligence and the Future of HumanityRating: 4 out of 5 stars4/5 (81)

- Make Money with ChatGPT: Your Guide to Making Passive Income Online with Ease using AI: AI Wealth MasteryFrom EverandMake Money with ChatGPT: Your Guide to Making Passive Income Online with Ease using AI: AI Wealth MasteryNo ratings yet

- Power and Prediction: The Disruptive Economics of Artificial IntelligenceFrom EverandPower and Prediction: The Disruptive Economics of Artificial IntelligenceRating: 4.5 out of 5 stars4.5/5 (38)

- The Digital Mindset: What It Really Takes to Thrive in the Age of Data, Algorithms, and AIFrom EverandThe Digital Mindset: What It Really Takes to Thrive in the Age of Data, Algorithms, and AIRating: 3.5 out of 5 stars3.5/5 (7)

- ChatGPT 4 $10,000 per Month #1 Beginners Guide to Make Money Online Generated by Artificial IntelligenceFrom EverandChatGPT 4 $10,000 per Month #1 Beginners Guide to Make Money Online Generated by Artificial IntelligenceNo ratings yet

- System Design Interview: 300 Questions And Answers: Prepare And PassFrom EverandSystem Design Interview: 300 Questions And Answers: Prepare And PassNo ratings yet

- Killer ChatGPT Prompts: Harness the Power of AI for Success and ProfitFrom EverandKiller ChatGPT Prompts: Harness the Power of AI for Success and ProfitRating: 2 out of 5 stars2/5 (1)