
International Journal of Communications (IJC) Volume 1 Issue 1, December 2012 www.seipub.org/ijc

FELFCNCA: Fast & Efficient Log File Compression Using Non Linear Cellular Automata Classifier

P. Kiran Sree*1, Inampudi Ramesh Babu2, SSSN Usha Devi N3

1Research Scholar, Dept of CSE, JNTU Hyderabad, India; 2Professor, Dept of CSE, Acharya Nagarjuna University, India; 3Dept of CSE, BVCEC, India.

*1profkiran@yahoo.com; 2drirameshbabu@gmail.com; 3usha.jntuk@gmail.com

Abstract

Log files are created for traffic analysis, maintenance, software debugging, and customer management at multiple places, such as system services, user monitoring applications, network servers, and database management systems, and they must be kept for long periods of time. These log files may grow to huge sizes in such complex systems and environments. For storage and convenience, log files must be compressed. Most of the existing algorithms do not take the temporal redundancy specific to log files into consideration. We propose a Non Linear Cellular Automata based classifier which introduces a multidimensional log file compression scheme described in eight variants, differing in complexity and attained compression ratios. The FELFCNCA scheme introduces a transformation for log files whose compressible output is far better than that of general purpose algorithms. The proposed method was found to be lossless and fully automatic. It does not impose any constraint on the size of the log file.

Keywords

Log Files; Non Linear Cellular Automata; Compression

Introduction

Current computer networks consist of a myriad of interconnected, heterogeneous devices in different physical locations (this is termed a distributed computing environment). Computer networks are used by corporations of many different sizes, educational institutions, small businesses, and research organizations. In many of these networks, monitoring is required to determine the system state, improve security, or gather information.

Log files are excellent sources for determining the health status of a system and are used to capture the events that have happened within an organization's systems and networks. Logs are a collection of log entries, and each entry contains information related to a specific event that has taken place within a system or network. Many logs within an organization contain records associated with computer security, which are generated by many sources, including operating systems on servers, workstations, networking equipment, and security software such as antivirus software, firewalls, intrusion detection and prevention systems, and many other applications. Routine log analysis is beneficial for identifying security incidents, policy violations, fraudulent activity, and operational problems.

A log file keeps track of everything that goes in and out of a particular server. The concept is much like the black box of an airplane, which records everything going on with the plane in the event of a problem. The information is frequently recorded chronologically and is located in the root directory, or occasionally in a secondary folder, depending on how the server is set up. With the use of log files, it is possible to get a good idea of where visitors are coming from, how often they return, and how they navigate through a site. Using cookies enables webmasters to log even more detailed information about how individual users are accessing a site. The storage requirement for operational data is increasing as computing technology advances.

Initially, logs were used for troubleshooting problems, but nowadays they are used for many functions within most organizations and associations, such as optimizing system and network performance, recording the actions of users, and providing data useful for investigating malicious activity. Logs have evolved to contain information related to many different types of events occurring within networks and systems. Within an organization, many logs contain records related to computer security; common examples of these computer security logs are audit logs that track user authentication attempts and security device logs that record possible attacks.

Different audio and speech compression standards are listed under audio codecs. Voice compression is used in Internet telephony, for example, while audio compression is used for CD ripping and is decoded by audio players.

Log File Compression

This section motivates the need for data reduction and explains how data compression can be used to reduce the quantity of data. It also discusses the approaches which can be used and the role of log files. In many environments, events are logged very often. As a result, a huge amount of data is produced this way every day, and it is often necessary to store it for a long period of time. Regardless of the type of recorded logs, for reasons of simplicity and convenience, they are usually stored in plain text log files. Both the content type and the storage format suggest that it is possible to significantly reduce the size of log files through lossless data compression, especially if a specialized algorithm is used. The smaller, compressed files have the advantages of being easier to handle and saving storage space.

Based on the literature review, it can be noted that many approaches can be considered to design and develop an intelligent system. For our system, we intend to implement the RBR and OO methodologies. RBR can be used to easily construct, debug, and maintain the system, and by using an Oracle database for knowledge representation, rules are more structured, whereas the OO methodology helps to structure the whole system in a conceptual view, which will ease the programming implementation. The main objective of this study is to build a system for diabetes diagnosis based on the RBR-OO methodology. To be precise, we aim to (i) design a general framework of the intelligent diagnostic system based on the RBR-OO methodology, (ii) implement the RBR algorithm to diagnose patients based on the signs and symptoms provided, (iii) provide reasoning on the diagnosis, and (iv) demonstrate the applicability of the RBR-OO methodology in the healthcare environment.

Types Of Compression

Lossless compression algorithms frequently exploit statistical redundancy to represent the sender's data more concisely without error. Lossless compression is possible because most real-world data has statistical redundancy. For instance, in English text, the letter "e" is much more common than the letter "z", and the probability that the letter "q" will be followed by the letter "z" is very small. Another kind of compression, called lossy data compression or perceptual coding, is possible when some loss of data is acceptable. In general, lossy data compression is guided by research on how people perceive the data in question. For example, the human eye is more sensitive to slight variations in luminance than it is to variations in color. JPEG image compression works in part by rounding off some of this less important information.
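The statistical redundancy that lossless compression relies on can be made concrete with a small calculation. The sketch below is an illustration only: the sample sentence and the function name `entropy_bits_per_symbol` are assumptions, and the Shannon entropy of single characters is used as a rough lower bound on the bits per character needed by a symbol-by-symbol code.

```python
import math
from collections import Counter

def entropy_bits_per_symbol(text: str) -> float:
    """Shannon entropy of the character distribution, in bits per character."""
    counts = Counter(text)
    total = len(text)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

# Illustrative English sample (an assumption of this sketch).
sample = "the quick brown fox jumps over the lazy dog and then sees the other dog"

observed = entropy_bits_per_symbol(sample)
# Cost per character if every distinct character were equally likely.
uniform = math.log2(len(set(sample)))

print(f"observed entropy: {observed:.3f} bits/char")
print(f"uniform baseline: {uniform:.3f} bits/char")
```

Because some characters occur far more often than others, the observed entropy is below the uniform baseline, and that gap is what a lossless coder can exploit.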

Non Linear Cellular Automata (CA)

Non Linear Cellular Automata use generalized structures to solve problems in an evolutionary way. CA often also demonstrate a significant ability toward self-organization, which comes mostly from the generalized structure on which they operate. By organization, one means that after some time in the evolutionary process, the system exhibits more or less stable generalized structures. This behaviour can be found no matter the initial conditions of the automaton. A CA consists of a number of cells organized in the form of a lattice.
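A minimal sketch of a one-dimensional, two-state CA lattice and one synchronous update may help here. The choice of elementary rule 30 is an assumption made for illustration (its update contains an OR term, so it is not a pure XOR, i.e. linear, rule); the paper does not state which rules FELFCNCA uses. The periodic boundary and the function name `step` are also assumptions.

```python
def step(cells, rule=30):
    """Apply one synchronous update of an elementary CA on a ring of cells.

    Each cell looks only at (left, self, right); the 3-bit neighborhood
    selects one bit of the 8-bit rule number as the next state.
    """
    n = len(cells)
    nxt = []
    for i in range(n):
        left, center, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (center << 1) | right
        nxt.append((rule >> index) & 1)
    return nxt

lattice = [0, 0, 1, 0, 0]
for _ in range(3):
    print("".join(".#"[c] for c in lattice))
    lattice = step(lattice)
```

Every cell is updated from purely local information, which is the property the later sections rely on.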

Genetic Algorithm & CA

The main motivation behind the evolving Non Linear Cellular Automata framework is to understand how genetic algorithms evolve Non Linear Cellular Automata that perform computational tasks requiring global information processing. Since the individual cells in a CA can communicate only locally, without the existence of a central control, the GA has to evolve CA that exhibit higher-level emergent behaviour in order to perform this global information processing. Thus this framework provides an approach to studying how evolution can create dynamical systems in which the interactions of simple components with local information storage and communication give rise to coordinated global information processing.

None of the cells can have information of the global state of the NLCA lattice. So, the question is whether there exists an NLCA update rule that can decide on the density of black cells (smaller or larger than 0.5) for any initial configuration (IC).

Lossy Compression

Lossy image compression is used in digital cameras to increase storage capacity with negligible degradation of picture quality. Similarly, DVDs use the lossy MPEG-2 video codec for compression. Compression of human speech is often performed with more specialized methods, so that speech compression or voice coding is sometimes distinguished as a separate discipline from audio compression.

Crossover and Mutation

The performance of an individual is measured by a fitness function. A fitness function rates the performance of an individual in its environment by comparing the results of the individual's chromosomes with the desired results of the problem, as defined by the author of the algorithm. The fitness is generally expressed within the algorithm as a floating-point (i.e., decimal) number with a predefined range of values, from best performing to worst performing. As in Darwinian evolution, low performing individuals are eliminated from the population and high performing individuals are cloned and mutated, replacing those that were eliminated.
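A minimal sketch of the select, clone, and mutate cycle described above, assuming bit-string chromosomes and a fitness equal to the fraction of bits that match a target pattern. The names `fitness`, `mutate`, `next_generation`, and `evolve`, the mutation rate, and the target are illustrative assumptions rather than the authors' implementation, and crossover is omitted for brevity.

```python
import random

def fitness(chromosome, target):
    """Floating-point score in [0.0, 1.0]: fraction of genes matching the target."""
    matches = sum(1 for a, b in zip(chromosome, target) if a == b)
    return matches / len(target)

def mutate(chromosome, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in chromosome]

def next_generation(population, target, rate, rng):
    """Eliminate the weaker half; replace it with mutated clones of the stronger half."""
    ranked = sorted(population, key=lambda c: fitness(c, target), reverse=True)
    survivors = ranked[: len(ranked) // 2]
    clones = [mutate(list(c), rate, rng) for c in survivors]
    return survivors + clones

def evolve(target, pop_size=20, generations=50, rate=0.05, seed=0):
    """Run the cycle from a random population and return the fittest individual."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in target] for _ in range(pop_size)]
    for _ in range(generations):
        population = next_generation(population, target, rate, rng)
    return max(population, key=lambda c: fitness(c, target))

best = evolve(target=[1] * 16)
print(best, fitness(best, [1] * 16))
```

Because the surviving half is carried over unchanged, the best fitness in the population never decreases from one generation to the next in this sketch.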

Log files are plain text files in which every line corresponds to a single logged event description. The lines are separated by end-of-line marks. Each event description consists of at least several tokens, separated by spaces. A token may be a date, hour, IP address, or any string of characters that is part of the event description. In typical log files, neighboring lines are very similar, not only in their structure but also in their content. The proposed transform takes advantage of this fact by replacing the tokens of a new line with references to the previous line. A sequence of more than one token may appear in two successive lines, and the tokens in neighbouring lines can have common prefixes but different suffixes (the opposite is possible, but far less frequent in typical log files).
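The idea of replacing tokens with references to the previous line can be sketched as follows. This is a simplified illustration under stated assumptions, not the exact encoding used by FELFCNCA: tokens are compared position by position with the previous line, and each token is stored as a pair (length of the shared prefix, remaining suffix). The function names and the sample log lines are hypothetical.

```python
def common_prefix_len(a, b):
    """Number of leading characters shared by strings a and b."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n

def encode(lines):
    """Replace each token by (shared prefix length, suffix) relative to the previous line."""
    prev, out = [], []
    for line in lines:
        tokens = line.split(" ")
        enc = []
        for i, tok in enumerate(tokens):
            ref = prev[i] if i < len(prev) else ""
            p = common_prefix_len(tok, ref)
            enc.append((p, tok[p:]))
        out.append(enc)
        prev = tokens
    return out

def decode(encoded):
    """Invert encode(): rebuild every token from the previous line plus the stored suffix."""
    prev, lines = [], []
    for enc in encoded:
        tokens = []
        for i, (p, suffix) in enumerate(enc):
            ref = prev[i] if i < len(prev) else ""
            tokens.append(ref[:p] + suffix)
        lines.append(" ".join(tokens))
        prev = tokens
    return lines

log = [
    "12:00:01 192.168.0.1 GET /index.html",
    "12:00:02 192.168.0.1 GET /about.html",
]
encoded = encode(log)
print(encoded[1])
```

For the second line the sketch stores `[(7, '2'), (11, ''), (3, ''), (1, 'about.html')]`, so only the changed suffixes remain as literal text, and `decode(encoded)` restores the original lines exactly. A general purpose compressor applied afterwards then sees far less repeated literal text.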

The main feature achieved when developing CA Agent systems, if they work, is flexibility, since a CA Agent system can be extended, modified, and reconstructed without the need for detailed rewriting of the application.

Non Linear Cellular Automata Classifier

NLCA Tree Building (Assuming K NLCA Basins)

Input: Intrusion parameters

Output: NLCA based inverted tree (LOG INDEX)

Step 0: Start.

Step 1: Generate a NLCA with k number of NLCA basins.

Step 2: Distribute the parameters into k NLCA basins.

Step 3: Evaluate the distribution in each closest basin.

Step 4: Calculate the Rq.

Step 5: Swap the more appropriate features to the bottom leaves of the inverted tree.

Step 6: Stop.

Emergent Computation In Non Linear Cellular Automata

A particular NLCA update rule and a corresponding initial configuration were constructed, or at least it was shown that such a construction is possible in principle. However, for most computational tasks of interest, it is very difficult to construct an NLCA update rule, or it is very time consuming to set up the correct initial configuration.

A genetic algorithm (GA) is a search algorithm based on the principles of natural evolution (see the introduction to evolutionary computation in this volume). It can be used to search through the space of possible NLCA rules to try to find an NLCA that can perform a given computational task. The specific task that Mitchell et al. originally considered is density classification. Consider one-dimensional, two-state NLCA. For any initial configuration (IC) of black and white cells, we can ask: are there more black cells or more white cells in the IC? Note that for most computing systems (including humans), this is an easy question to answer, as the number of black and white cells can easily be counted and compared. However, for an NLCA this is a difficult task, since each individual cell in the lattice can only check the states of its direct neighbors.
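As a hedged illustration of why density classification is hard for purely local rules, the sketch below runs a one-dimensional, two-state CA in which every cell adopts the majority state of itself and its two direct neighbors. This local majority rule is a naive baseline chosen for illustration; it is not the evolved rule studied by Mitchell et al., and the function names and the periodic boundary are assumptions.

```python
def true_majority(cells):
    """Global answer obtained by counting: 1 if black (1) cells dominate, else 0."""
    return 1 if 2 * sum(cells) > len(cells) else 0

def local_majority_step(cells):
    """One synchronous update: each cell takes the majority of (left, self, right).

    The lattice is treated as a ring (periodic boundary), an assumption of this sketch.
    """
    n = len(cells)
    return [1 if cells[i - 1] + cells[i] + cells[(i + 1) % n] >= 2 else 0
            for i in range(n)]

def classify(cells, max_steps=100):
    """Iterate up to max_steps or until a fixed point; return 1 or 0 on consensus, else None."""
    for _ in range(max_steps):
        nxt = local_majority_step(cells)
        if nxt == cells:
            break
        cells = nxt
    if all(c == 1 for c in cells):
        return 1
    if all(c == 0 for c in cells):
        return 0
    return None

ic = [1, 1, 1, 0, 0, 1, 0]        # 4 black cells out of 7
print(true_majority(ic))          # counting gives the global answer directly
print(local_majority_step(ic))    # one local update
print(classify(ic))               # the local rule freezes without reaching consensus
```

Counting reports a black majority for this IC, but the local rule settles into a mixed fixed point and never reaches an all-black lattice, which is why a search procedure such as a GA is used to look for better update rules.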

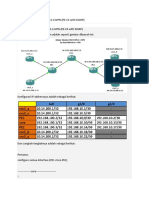

Results & Discussion

The results show the two major indispensable properties of log files. The first is the compressed file size and the other is the compaction ratio. The graphs show both of these properties. The first graph shows the difference between the original log files and the compressed log files, while the second graph displays the compaction ratio. The third graph compares the existing method with the proposed method and shows that the proposed method is better than the existing method.
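For readers who want to reproduce the general purpose baselines, the sketch below measures compression ratios with the gzip, bzip2, and LZMA codecs from the Python standard library. It is an illustration under assumptions: the ratio is taken here as original size divided by compressed size (the paper does not define its compaction ratio), and the log lines are synthetic, not the data behind the figures.

```python
import bz2
import gzip
import lzma

def compression_ratios(data: bytes) -> dict:
    """Return original_size / compressed_size for three general purpose codecs."""
    compressed_sizes = {
        "gzip": len(gzip.compress(data)),
        "bzip2": len(bz2.compress(data)),
        "lzma": len(lzma.compress(data)),
    }
    return {name: len(data) / size for name, size in compressed_sizes.items()}

# Synthetic, highly repetitive log lines (hypothetical format).
log_lines = [
    f"2012-12-01 12:{i // 60:02d}:{i % 60:02d} 192.168.0.{i % 5} GET /page{i % 7}.html 200"
    for i in range(500)
]
data = "\n".join(log_lines).encode("ascii")

for name, ratio in compression_ratios(data).items():
    print(f"{name}: {ratio:.2f}")
```

A log-specific transform would be applied to `data` before these codecs, and the resulting ratios compared against the untransformed baselines.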


Conclusion

System files contain a lot of contemporary information, replete with log files, and occupy huge amounts of space. General purpose algorithms do not take full advantage of the redundancy in log files. The existing specialized compression techniques for log files need a lot of human assistance and are not practical for general applications. The proposed classifier introduces a multidimensional log file compression scheme described in eight variants, differing in complexity and attained compression ratios. The FELFCNCA classifier is designed for different types of logs, and the experimental results show the compression improvement on different log files. The proposed method is fully automatic and lossless. The most important property of the proposed work is that it does not impose any constraints on the size of the log file.

FIG. 1 COMPARISON AMONG FELFCNCA & EXISTING APPROACH


FIG. 2 COMPACTION RATIOS


1. GZIP 2. GZIP+LP1 3. GZIP+LP2 4. GZIP+LP3 5. GZIP+LP3 6. LRZIP 7. BZIP2 8. LZMA 9. PPMVC 10. FCLFCNA


FIG. 3 COMPARISON OF COMPRESSION RATIOS


P. Kiran Sree received his B.Tech in Computer Science & Engineering from J.N.T.U and his M.E in Computer Science & Engineering from Anna University. He is pursuing a Ph.D in Computer Science from J.N.T.U, Hyderabad. His areas of interest include Cellular Automata, Parallel Algorithms, Artificial Intelligence, and Compiler Design. He has been a reviewer for several international journals and IEEE Society conferences on Artificial Intelligence & Image Processing. He is also listed in Marquis Who's Who in the World, 29th Edition (2012), USA. Sree is the recipient of the Bharat Excellence Award from Dr GV Krishna Murthy, Former Election Commissioner of India, a Board of Studies member of Vikrama Simhapuri University, Nellore, in the Computer Science & Engineering stream, and a track chair for some international conferences. He has published 30 technical papers in international journals and conferences.


Inampudi Ramesh Babu received his Ph.D in Computer Science from Acharya Nagarjuna University, his M.E in Computer Engineering from Andhra University, and his B.E in Electronics & Communication Engg from the University of Mysore. He is currently working as Head & Professor in the Department of Computer Science, Nagarjuna University. He has also been a senate member of the same university since 2006. His areas of interest are image processing and its applications, and he is currently supervising 10 Ph.D students who are working in different areas of image processing. He is a senior member of IEEE and has published 70 papers in international conferences and journals.
