
IEEE EMBEDDED SYSTEMS LETTERS, VOL. 1, NO. 2, AUGUST 2009

SCoPE: Towards a Systolic Array for SVM Object Detection

Christos Kyrkou, Student Member, IEEE, and Theocharis Theocharides, Member, IEEE

Abstract: This paper presents SCoPE (Systolic Chain of Processing Elements), a first step towards the realization of a generic systolic array for support vector machine (SVM) object classification in embedded image and video applications. SCoPE provides efficient memory management, reduced complexity, and efficient data transfer mechanisms. The proposed architecture is generic and scalable, since the size of the chain and the kernel module can be changed in a plug-and-play fashion without affecting the overall system architecture. These advantages provide versatility, scalability, and reduced complexity, making the architecture well suited to embedded applications. Furthermore, the SCoPE architecture is intended to be used as a building block towards larger systolic systems for multi-input or multi-class classification. Simulation results indicate real-time performance, achieving face detection at 33 frames per second on an FPGA prototype.

Index Terms: Field-programmable gate array (FPGA), object detection, support vector machine (SVM), systolic array.

I. INTRODUCTION

SUPPORT vector machines (SVMs) have been widely adopted since their introduction by Cortes and Vapnik [1]. They are considered one of the most powerful classification engines due to their high classification accuracy, which in many cases exceeds that of well-established classification algorithms such as neural networks. Consequently, there has been growing interest in utilizing SVMs in embedded object detection and other image classification applications. While software implementations of SVMs yield high accuracy rates, they cannot efficiently meet the real-time requirements of embedded applications, because they fail to take advantage of the inherent parallelism of the algorithms. Hence, SVM hardware architectures emerged as a potential solution for real-time performance. The majority of the architectures presented in the literature are directed towards specific applications and cannot be easily modified to adapt to other scenarios. Only a few works have looked into generic and versatile architectures to satisfy the variety of embedded object-detection applications. The literature suggests that existing general-purpose SVM architectures do not scale well in terms of required hardware resources, complexity, data transfer (wiring), and memory management, primarily because of two important constraints: the number of support vectors (SVs) and their dimensionality [2]. An SVM with a small number of SVs requires only a few computational modules; however, several applications require a large number of high-dimensional SVs. As such, a general-purpose SVM architecture with a large number of modules faces design challenges such as fan-out and routing, increased complexity, and other performance limitations. An efficient SVM architecture needs to be scalable, provide real-time performance, and be easy to design and implement. Issues that need to be considered include parallel memory access, distribution of the inputs to the computation modules under fan-out constraints, and efficient computation of the kernel operations, which require the most computational power.

Manuscript received September 02, 2009; revised September 18, 2009. First published October 16, 2009; current version published November 20, 2009. This work was supported in part by the Cyprus Research Promotion Foundation under contract ΤΠΕ/ΠΛΗΡΟ/0308(ΒΙΕ)/04. The authors are with the Department of Electrical and Computer Engineering, University of Cyprus, Nicosia, Cyprus (e-mail: kyrkou.christos@ucy.ac.cy and ttheocharides@ucy.ac.cy). Digital Object Identifier 10.1109/LES.2009.2034709

This work presents a distributed pipelined architecture that can be expanded in a systolic manner, providing efficient management of memory and data (transfer/wiring) resources. The Systolic Chain of Processing Elements (SCoPE) is a modular architecture consisting of almost identical processing elements (PEs). The architecture can be scaled according to the available hardware budget without affecting the operation or frequency of the system. It also addresses the wiring and fan-out complexity of routing the input vector to the classification modules. A prototype chain of the SCoPE architecture was implemented on a Virtex-5 FPGA and was evaluated using training data from a face detection application. The architecture is, however, suitable for several SVM image classification applications.

Section II briefly explains the basic principles of SVM classification and gives a brief overview of other hardware implementations in the literature. The SCoPE architecture is presented in Section III, and an evaluation of a SCoPE prototype is presented in Section IV. Finally, Section V provides some insight into ongoing work and future considerations.

II. SVM BACKGROUND AND RELATED WORK

A. Review of Support Vector Machines

An SVM is a binary classification algorithm in which each sample in the data set is viewed as a multidimensional vector [3]. The SVM takes a data set that contains samples from two classes (labeled +1 and -1) and constructs separating hyperplanes between them. The separating hyperplane that best separates the two classes is called the maximum-margin hyperplane and forms the decision boundary for classification. The margin (shown in Fig. 1(a)) is defined as the distance between two parallel hyperplanes that lie at the boundary of each class.

The data samples that lie at the boundary of each class and determine how the maximum-margin hyperplane is formed are called support vectors (SVs) [3]. The SVs are obtained during the training phase and are then used for classifying new (unknown) data. When two data classes are not linearly separable, a kernel function is used to project the data to a higher-dimensional space (feature space), where linear classification is possible. This is known as the kernel trick [3] and allows an SVM to solve nonlinear problems. An input is classified at runtime using the classification decision function

$$ f(\mathbf{x}) = \operatorname{sign}\left( \sum_{i} \alpha_i \, y_i \, K(\mathbf{s}_i, \mathbf{x}) + b \right) \tag{1} $$

in which $\alpha_i$ are the alpha coefficients (the weight of each SV), $y_i$ are the class labels of the SVs, $\mathbf{s}_i$ are the SVs, $\mathbf{x}$ is the input vector, $K(\cdot,\cdot)$ is the chosen kernel function, and $b$ is a bias value. The flow of this computation is illustrated in Fig. 1(b). The kernel computation (steps 2 and 3 in Fig. 1(b)) is split into two parts: the vector operation and the kernel-dependent additional operations on the produced scalar value. Both parts depend on the choice of kernel function (popular kernel functions are shown in (2)-(5)). For example, the vector operation for kernels (2), (3), and (4) involves a dot-product computation, whereas for (5) it involves the computation of the squared norm of the difference of two vectors.

Fig. 1. (a) Maximum-margin hyperplane and margin for an SVM trained with samples from two classes. (b) Data flow for the computation of (1).

Popular Kernel Functions:

$$ \text{Linear:} \quad K(\mathbf{s}, \mathbf{x}) = \mathbf{s} \cdot \mathbf{x} \tag{2} $$

$$ \text{Polynomial:} \quad K(\mathbf{s}, \mathbf{x}) = (\mathbf{s} \cdot \mathbf{x} + c)^{d} \tag{3} $$

$$ \text{Sigmoid:} \quad K(\mathbf{s}, \mathbf{x}) = \tanh\left( a \, (\mathbf{s} \cdot \mathbf{x}) + c \right) \tag{4} $$

$$ \text{Radial basis function:} \quad K(\mathbf{s}, \mathbf{x}) = \exp\left( -\gamma \, \lVert \mathbf{s} - \mathbf{x} \rVert^{2} \right) \tag{5} $$

Here $c$, $d$, $a$, and $\gamma$ are kernel parameters.

B. Related Work on Support Vector Machines

Hardware implementations of SVMs have gained noticeable interest in recent years, primarily because of the potential real-time performance benefits they offer. Most implementations either target specific applications or require a large amount of computational resources, and thus are not suitable for general-purpose embedded environments. An attempt to design a massively parallel architecture to accelerate learning algorithms was presented in [4], which utilized arrays of vector processing elements controlled through a host CPU in SIMD fashion. The centralized nature of the CPU, however, creates a bottleneck, emphasizing the need for distributed control. An FPGA implementation utilizing a MicroBlaze processor as a control unit and custom hardware coprocessors to perform the SVM classification was presented in [5]. The design was restricted to 8-dimensional vectors, limiting the application space. An integrated vision system for object detection and localization based on SVM was presented in [6]; however, the system offered limited scalability. An analog custom processor was presented in [7], and an implementation of a training algorithm for SVMs was presented in [8]. SVM implementations have also been explored using embedded processors in [9] and [10]. Considering the limitations of previous works, SCoPE was designed to provide scalability and reduced memory and data flow complexity, making it suitable for general-purpose embedded classification applications.
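As an illustration of the decision function (1) and of the two-part kernel computation described in Section II-A, the following minimal Python sketch separates each kernel into a vector operation and a scalar follow-up operation. It is a software model only, not the hardware design. The function names, the kernel parameter defaults (`c`, `d`, `a`, `gamma`), the kernel forms, and the convention that a zero sum maps to +1 are assumptions made for the example.

```python
import math

def dot(u, v):
    """Vector operation for the dot-product kernels."""
    return sum(a * b for a, b in zip(u, v))

def squared_distance(u, v):
    """Vector operation for the radial basis function kernel."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

# Each kernel is a pair: (vector operation, scalar follow-up operation).
# Parameter defaults are illustrative assumptions, not values from the paper.
KERNELS = {
    "linear": (dot, lambda s: s),
    "polynomial": (dot, lambda s, c=1.0, d=2: (s + c) ** d),
    "sigmoid": (dot, lambda s, a=1.0, c=0.0: math.tanh(a * s + c)),
    "rbf": (squared_distance, lambda s, gamma=0.5: math.exp(-gamma * s)),
}

def classify(x, svs, alphas, labels, bias, kernel="linear"):
    """Evaluate the SVM decision function for input vector x."""
    vector_op, scalar_op = KERNELS[kernel]
    acc = 0.0
    for sv, alpha, y in zip(svs, alphas, labels):
        scalar = vector_op(sv, x)            # part 1: vector operation
        acc += alpha * y * scalar_op(scalar)  # part 2: kernel, then weighted accumulate
    acc += bias                              # the bias is processed last
    return 1 if acc >= 0 else -1             # assumption: zero maps to +1
```

For example, with two toy SVs `[[1, 1], [-1, -1]]`, alpha coefficients `[0.5, 0.5]`, labels `[1, -1]`, and zero bias, the input `[2, 1]` falls on the positive side and `[-1, -2]` on the negative side for the linear, polynomial, and RBF kernels above.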

III. SCOPE: THE PROPOSED ARCHITECTURE

The proposed architecture consists of a chain of PEs that operates in a pipelined manner and can be expanded vertically with

minor modifications (introduction of vertical data flow) to operate in a systolic manner. This paper focuses on describing the

design and flow of operation of a single horizontal chain; the

full architecture is part of ongoing work.

A. The SCoPE Components

SCoPE consists of three main regions (Fig. 2): the front-end (input), the middle (computation), and the back-end (output) regions. The front-end includes the input vector memory, the address generator units, and the front-end PE, which is the input part of the chain. The middle region bears the bulk of the computation: each PE receives data from the previous PE, processes the data, and propagates it to the next PE in a pipelined manner. The back-end operates as the output of the system; it consists of the back-end PE, which transfers all the data outside of the chain, a kernel unit, a MAC unit, and the alpha coefficients memory. All three regions are supervised by a finite state machine control unit.

The front-end and back-end PEs differ from the middle PEs in that they have additional control signals and modules for interacting with the I/O interfaces at each end. Each PE consists of a data transfer unit, a processing unit, and a memory unit, along with glue logic assisting in synchronization and control (Fig. 3). The main processing unit is a multiplication-accumulation (MAC) unit that performs the kernel's vector operation between the input vector and the SV elements. The transfer unit propagates data from one PE to the next. The memory unit holds a group of SVs for which the PE performs the kernel vector operation with the input vector. Distributing the SV data among the PEs allows for a scalable and manageable system, and also contributes to smaller and faster memory units. Each PE has three operational states: PROCESSING, IDLE, and TRANSFERRING. In the PROCESSING state, the PE computes the vector operation and simultaneously transfers data/control values to the next PE. These values are encoded in a 25-bit word as shown in Fig. 4. In the IDLE state, the PE remains idle. Lastly, in the TRANSFERRING state, the 25-bit scalar value computed by each PE is transferred to the next PE. Data switching is done through a 2-to-1 multiplexer and data propagation through a register.
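To make the 25-bit data/control word of the PROCESSING state more concrete, the sketch below packs and unpacks the six fields listed in the caption of Fig. 4. The source gives only the total width and the field list; the individual field widths, their bit positions, and all names used here are assumptions chosen so that the widths sum to 25 bits.

```python
# Assumed layout, least significant field first. Only the 25-bit total and
# the list of fields come from the text; widths and order are hypothetical.
FIELDS = [
    ("sv_address", 12),    # address for the SV memories
    ("input_element", 8),  # one 8-bit input vector element
    ("new_address", 1),    # new address signal for the SV memory
    ("mac_enable", 1),     # enable signal for the PE's MAC unit
    ("mac_reset", 1),      # reset signal for the PE's MAC unit
    ("unused", 2),         # left unused for future implementations
]
WORD_BITS = sum(width for _, width in FIELDS)

def pack_word(**values):
    """Pack named field values into one integer word."""
    word, shift = 0, 0
    for name, width in FIELDS:
        value = values.get(name, 0)
        if not 0 <= value < (1 << width):
            raise ValueError(f"{name}={value} does not fit in {width} bits")
        word |= value << shift
        shift += width
    return word

def unpack_word(word):
    """Split an integer word back into its named fields."""
    fields, shift = {}, 0
    for name, width in FIELDS:
        fields[name] = (word >> shift) & ((1 << width) - 1)
        shift += width
    return fields
```

A word built with `pack_word(sv_address=17, input_element=200, mac_enable=1)` round-trips through `unpack_word`, with the omitted fields reading back as zero.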

The kernel and back-end MAC units make up the remaining components and require the most processing resources. The type of kernel does not impact the data flow, but it influences the maximum achievable system performance. The kernel can be implemented either as a look-up table (LUT) or with custom logic. The latter may reduce the maximum operating frequency due to increased computational complexity, while the former impacts the accuracy of the classification and increases memory demands, depending on the size and precision of the LUT. The back-end MAC unit's bit width (precision) is determined by the choice of kernel (i.e., the kernel's output bit width), the number of SVs (which determines the number of accumulations and consequently the precision of the accumulator), and the chosen precision for the alpha coefficients. One of the main advantages of the proposed architecture is that these two resource-hungry components are shared amongst the PEs, thus reducing the overall hardware overhead and complexity.

Fig. 2. Block diagram of the SCoPE architecture illustrating the three main regions and their basic components.

Fig. 3. Main architectural components of a processing element.

Fig. 4. In the PROCESSING state, data values and control signals are transferred in an encoded 25-bit word: (1) Address for SV memories. (2) Input vector element. (3) New address signal for SV memory. (4) Enable signal for the PE's MAC unit. (5) Reset signal for the PE's MAC unit. (6) Left unused for future implementations.

B. The SCoPE Flow of Operation

The classification procedure begins with the PEs in the IDLE state. The system receives the first input vector element and generates the address for the SV memory, placing the front-end PE in the PROCESSING state. The SV memory in the front-end PE outputs the corresponding SV element and the computation begins. The rest of the PEs in the chain follow this computation pattern, one after another. When the scalar value has been computed in all PEs, all PEs enter the TRANSFERRING state simultaneously, propagating the computed scalar values towards the back-end PE. The kernel and back-end MAC units then begin processing each scalar value that they receive from the back-end PE. The kernel outcome is computed, multiplied by its respective preloaded alpha coefficient, and accumulated. When the accumulation is completed, the control unit asserts an accumulation reset signal. This signal is propagated through the chain to reset the MAC unit values in each PE. Each PE then enters the PROCESSING state again to begin a new kernel vector operation. When all SV operations finish, the bias is processed. The total number of cycles required for the classification of an input vector is given by

(6)

The first input vector element takes n cycles (n being the number of PEs) to reach the back-end PE (i.e., to fill the pipeline), and the PEs then take as many cycles as there are elements in the vector to compute the scalar value. The scalar value of the front-end PE, which is the furthest from the back-end region, takes an additional n cycles to reach the kernel unit and be accumulated. The chain processes n SVs in parallel, so for a given total number of SVs in a training set, that total divided by n (rounded up) gives the number of repetitions needed in total, for all SVs.
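The cycle breakdown given for the flow of operation in Section III-B (pipeline fill, vector computation, and drain towards the kernel unit, repeated for each group of SVs) can be turned into a rough estimate. The sketch below is one possible reading, assuming the three phases run back to back without overlap and ignoring the latency of the kernel unit and of the bias step; it is not claimed to reproduce the paper's exact expression. The function and parameter names are hypothetical.

```python
import math

def classification_cycles(num_pes, vector_len, num_svs):
    """Rough cycle estimate for classifying one input vector.

    Assumes fill, compute, and drain phases do not overlap and that
    kernel-unit and bias latencies are negligible (both are assumptions).
    """
    fill = num_pes        # first input element reaches the back-end PE
    compute = vector_len  # one vector element consumed per cycle
    drain = num_pes       # front-end PE's scalar reaches the kernel unit
    repetitions = math.ceil(num_svs / num_pes)  # one SV per PE per pass
    return repetitions * (fill + compute + drain)
```

With a 100-PE chain, 400-element vectors, and a purely hypothetical total of 850 SVs, this model gives 9 passes of 600 cycles each, i.e., 5400 cycles per input vector.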

A prototype of the proposed architecture with a chain of 100

PEs was implemented on a Virtex 5 FPGA and evaluated using

face detection, a popular embedded application. The MAC units

were mapped both on the FPGAs embedded DSP units and on

the slice logic, and the internal SV memory in each PE was implemented using the dedicated FPGA block RAM. The SVM

was trained in MATLAB, using 6 800 samples (4 400 nonfaces,

2400 faces) with training information from [11]. The training

phase produced

SVs that were distributed amongst

the

PEs (some PEs had 9 SVs and some had 8 SVs

IV. EVALUATION AND RESULTS

Authorized licensd use limted to: IE Xplore. Downlade on May 13,20 at 1:460 UTC from IE Xplore. Restricon aply.

KYRKOU AND THEOCHARIDES: SCOPE: TOWARDS A SYSTOLIC ARRAY FOR SVM OBJECT DETECTION

49

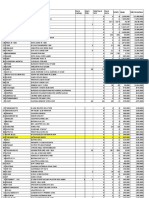

TABLE I

SYNTHESIS RESULTS FOR THE XILINX VIRTEX 5-LX110T FPGA

TABLE II

EXPERIMENTAL SETUP AND PERFORMANCE RESULTS

in their SV memory). Each vector consisted of

8-bit

elements, corresponding to an input 20 20 grayscale image.

The polynomial kernel was used, with parameters

and

, (i.e., a square operation). The alpha coefficients had

a fixed point 18-bit format. Table I shows the synthesis results

of the proposed architecture, which is limited by the multiplier

resources (available slice logic and DSP units) and operating

frequency of the FPGA. The design can be scaled to the available hardware budget and its modularity and ability to be superpipelined allow for further performance improvement.

To the best of our knowledge, there is limited work on general SVM hardware implementations, and the performance metrics used in existing works relate to the specific benchmark applications used in each implementation. Comparing our preliminary results to other works is therefore impractical, as several factors affect the resulting frame rate, such as the search window size, the window overlap, and the source image size. Table II shows the parameters chosen in the experimental framework and the computation of the frame rate achieved for a single chain. The targeted FPGA can, however, fit five parallel SCoPE modules with shared SV memory units, which raises the frame rate; the resulting rate is also shown in Table II. The experimental architecture achieves 88% detection accuracy, which can be further increased by improving the training set with more training samples. However, this may result in an increased number of SVs.
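The dependence of the frame rate on search window size, window overlap, and source image size can be illustrated with a small calculation. The sketch below counts the search windows in a single-scale sliding-window scan and derives a frame rate from a clock frequency and a per-window cycle count. Every number used with it is hypothetical; the actual experimental parameters are those of Table II. Linear speed-up with the number of parallel chains is an idealizing assumption, and the function names are invented for the example.

```python
def windows_per_frame(img_w, img_h, win=20, stride=1):
    """Count search windows in a single-scale sliding-window scan.

    A smaller stride means more overlap between neighbouring windows.
    """
    if img_w < win or img_h < win:
        return 0
    across = (img_w - win) // stride + 1
    down = (img_h - win) // stride + 1
    return across * down

def frames_per_second(clock_hz, cycles_per_window, n_windows, parallel_chains=1):
    """Idealized frame rate, assuming chains share the windows evenly."""
    return clock_hz * parallel_chains / (cycles_per_window * n_windows)
```

For instance, a hypothetical 100 MHz clock, 5000 cycles per window, and 2000 windows per frame would give 10 frames per second for one chain, and five times that for five ideal parallel chains.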

V. ONGOING AND FUTURE WORK AND CONCLUSIONS

The regular and modular nature of SCoPE provides scalability, reduced complexity, and efficient memory and data/control transfer management, especially when dealing with applications requiring a large number of high-dimensional SVs. Work in progress involves evaluating the architecture using other kernel functions as well as other object detection applications. The main emphasis, however, is on expanding the architecture into a systolic array.

The future systolic array implementation will utilize both a horizontal (currently employed in SCoPE) and a vertical pipeline, where input vector elements will move along the horizontal pipeline and SV elements along the vertical. One of the rows of the array will be identical to SCoPE and will contain the distributed SV memories. The other rows will not have a memory unit, but will receive the SV elements from that row in a vertical systolic manner, as illustrated in Fig. 5.

Fig. 5. Block diagram of a possible future systolic array implementation.

SCoPE can potentially support multiclass classification problems with minor modifications. Each row in the array will follow the SCoPE architecture, with its own set of SVs and kernel function (if necessary), so that each row can perform a different classification from the other rows, with no need for vertical data movement. However, further work is necessary to address the increasing memory demands.

SCoPE provides a modular and regular way of performing SVM classification and can be used in a variety of embedded applications. The expected systolic array implementation will take advantage of the inherent parallelism to increase SCoPE's performance capabilities while maintaining versatility and scalability.

REFERENCES

[1] C. Cortes and V. Vapnik, "Support-vector networks," Mach. Learning, vol. 20, no. 3, pp. 273-297, 1995.

[2] R. Reyna-Rojas, D. Houzet, D. Dragomirescu, F. Carlier, and S. Ouadjaout, "Object recognition system-on-chip using the support vector machines," EURASIP J. Appl. Signal Processing, vol. 2005, no. 7, pp. 993-1004, 2005.

[3] C. J. C. Burges, "A tutorial on support vector machines for pattern recognition," Data Mining Knowl. Discovery, vol. 2, no. 2, pp. 121-167, 1998.

[4] H. P. Graf et al., "A massively parallel digital learning processor," in Proc. NIPS 2008, pp. 529-536.

[5] I. Biasi, A. Boni, and A. Zorat, "A reconfigurable parallel architecture for SVM classification," in Proc. IEEE Int. Joint Conf. Neural Netw., 2005, vol. 5, pp. 2867-2872.

[6] R. A. Reyna, D. Esteve, D. Houzet, and M.-F. Albenge, "Implementation of the SVM neural network generalization function for image processing," in Proc. 5th IEEE Int. Workshop Comput. Architect. Mach. Percept., 2000, p. 147.

[7] R. Genov and G. Cauwenberghs, "Kerneltron: Support vector machine in silicon," IEEE Trans. Neural Netw., vol. 14, no. 5, pp. 1426-1434, 2003.

[8] D. Anguita, A. Boni, and S. Ridella, "A digital architecture for support vector machines: Theory, algorithm, and FPGA implementation," IEEE Trans. Neural Netw., vol. 14, no. 5, pp. 993-1009, Sept. 2003.

[9] R. Pedersen and M. Schoeber, "An embedded support vector machine," in Proc. Int. Workshop Intell. Solutions Embedded Syst., Jun. 2006, pp. 1-11.

[10] S. Dey, M. Kedia, N. Agarwal, and A. Basu, "Embedded support vector machine: Architectural enhancements and evaluation," in Proc. 20th Int. Conf. VLSI Design, 2007, pp. 685-690.

[11] E. Osuna, R. Freund, and F. Girosi, "Training support vector machines: An application to face detection," in Proc. IEEE Conf. Comput. Vision Pattern Recogn., 1997, pp. 130-136.
