A Comparative Study of Continuous Multimodal Global Optimization Using PSO, FA and Bat Algorithms

International Journal of Emerging Trends & Technology in Computer Science (IJETTCS)

Web Site: www.ijettcs.org Email: editor@ijettcs.org

Volume 5, Issue 4, July - August 2016

ISSN 2278-6856

Radha A. Pimpale (1), P.K. Butey (2)

(1) PhD Scholar, Rashtrasant Tukadoji Maharaj Nagpur University, Nagpur, Maharashtra, India

(2) Associate Professor and Head, Kamla Nehru Mahavidyalaya, Rashtrasant Tukadoji Maharaj Nagpur University, Nagpur, Maharashtra, India

Abstract

This paper examines three nature-inspired metaheuristic algorithms, the Firefly algorithm (FA), particle swarm optimization (PSO) and the Bat algorithm, for the optimization of standard benchmark functions. We implemented these algorithms in MATLAB and studied how they work on continuous, multimodal global optimization benchmark functions. All three are evolutionary, nature-inspired metaheuristic algorithms that search for optimal solutions of continuous, multimodal benchmark functions. The simulation results were analyzed and compared in terms of the best solution found and the elapsed time. On each continuous, multimodal benchmark function, the Bat algorithm seems to perform better and more efficiently.

Keywords: Firefly algorithm, Metaheuristic algorithm, PSO, fireflies, bat.

1. Introduction

The goal of global optimization is to find the best possible solution subject to the given constraints. The objective function is minimized or maximized, and the constraints are relationships that the variables must satisfy [10, 11, 13].

Optimization algorithms can be divided into two basic classes: deterministic and stochastic (or probabilistic). Deterministic algorithms are almost all local search algorithms; they are quite efficient at finding local optima. Stochastic (or probabilistic) algorithms are optimization methods that generate and use random variables and are able to find local as well as global solutions. This paper considers three metaheuristic algorithms, PSO, the Firefly algorithm and the Bat algorithm, each of which aims to find the optimal solution.

Optimization algorithms face an exploration and exploitation problem. In optimization, exploration means visiting new areas of the search space which have not been investigated before; it is the procedure by which we try to find novel and better solution states. Exploitation, by contrast, refines the search around solutions that are already known to be good. Parameters such as convergence, elapsed time and stability of the algorithms are considered.

Particle swarm optimization (PSO)

Particle swarm optimization (PSO) is a population-based stochastic optimization technique developed by Dr. Eberhart and Dr. Kennedy in 1995, inspired by the social behavior of bird flocking or fish schooling in search of food [1]. At each time step, PSO changes the velocity of (accelerates) each particle toward its pbest and lbest locations. The particles move through the search space in a cooperative search procedure. Acceleration is weighted by a random term, with separate random numbers being generated for acceleration toward the pbest and lbest locations. The group of possible solutions is a set of particles, called a swarm.

PSO is initialized with a group of random particles (solutions) and then searches for optima by updating generations. In every iteration, each particle is updated by following two "best" values. The first is the best solution (fitness) the particle has achieved so far (the fitness value is also stored); this value is called pbest. The other "best" value tracked by the particle swarm optimizer is the best value obtained so far by any particle in the population; this is a global best, called gbest. When a particle takes only part of the population as its topological neighbours, the best value is a local best, called lbest.

After finding the two best values, the particle updates its velocity and position using equations 1(a) and 1(b). PSO has the following algorithmic parameters: (a) Vmax, the maximum velocity, which restricts Vi(t) to the interval [-Vmax, Vmax]; (b) an inertia weight factor ω; (c) two uniformly distributed random numbers r1 and r2 that determine the influence of p(t) and g(t) on the velocity update formula; and (d) two constant multiplier terms C1 and C2, known as "self-confidence" and "swarm confidence", respectively.

$$v_{t+1} = \omega v_t + c_1 r_1 (p - x_t) + c_2 r_2 (g - x_t) \tag{1(a)}$$

$$x_{t+1} = x_t + v_{t+1} \tag{1(b)}$$

PSO Algorithm

For each particle
    Initialize particle
End
Do
    For each particle
        Calculate fitness value
        If the fitness value is better than the best fitness value (pbest) in history
            Set current value as the new pbest
    End
    Choose the particle with the best fitness value of all the particles as the gbest
    For each particle
        Calculate particle velocity according to equation 1(a)
        Update particle position according to equation 1(b)
    End
While maximum iterations or minimum error criteria is not attained

Particles' velocities on each dimension are clamped to a maximum velocity Vmax, which is a parameter specified by the user. If the sum of accelerations would cause the velocity on a dimension to exceed Vmax, the velocity on that dimension is limited to Vmax.
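The PSO loop above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the MATLAB implementation used in this study: the function name `pso`, the search bounds, the value of `v_max`, the clamping of positions to the bounds and the small problem size are all hypothetical choices. The coefficients `w`, `w_damp`, `c1` and `c2` take the values reported in the experimental section.

```python
import random


def sphere(x):
    """Sphere benchmark: global minimum 0 at the origin."""
    return sum(v * v for v in x)


def pso(f, dim=5, n_particles=30, iters=200, lb=-5.0, ub=5.0,
        w=1.0, w_damp=0.99, c1=1.5, c2=2.0, seed=0):
    """Minimal global-best PSO sketch (minimization)."""
    rng = random.Random(seed)
    v_max = 0.2 * (ub - lb)  # assumed clamp; the paper does not report Vmax
    xs = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    pbest = [x[:] for x in xs]
    pbest_val = [f(x) for x in xs]
    for _ in range(iters):
        # Choose the particle with the best personal best as gbest.
        g = min(range(n_particles), key=pbest_val.__getitem__)
        gbest = pbest[g]
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v = (w * vs[i][d]
                     + c1 * r1 * (pbest[i][d] - xs[i][d])
                     + c2 * r2 * (gbest[d] - xs[i][d]))
                vs[i][d] = max(-v_max, min(v_max, v))  # velocity clamp
                # Keeping positions inside [lb, ub] is an added assumption.
                xs[i][d] = max(lb, min(ub, xs[i][d] + vs[i][d]))
            val = f(xs[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = xs[i][:], val
        w *= w_damp  # inertia weight damping
    g = min(range(n_particles), key=pbest_val.__getitem__)
    return pbest[g], pbest_val[g]


if __name__ == "__main__":
    best, value = pso(sphere)
    print(best, value)
```

Because pbest values are only ever replaced by better ones, the returned value can never be worse than the best particle of the initial random swarm.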

Firefly Algorithm

The Firefly algorithm is a population-based algorithm developed by Xin-She Yang (Yang XS (2008) Nature-Inspired Metaheuristic Algorithms). It is based on the idealized behaviour of the flashing characteristics of fireflies. For simplicity, we can summarize these flashing characteristics as the following three rules [2]:

a) All fireflies are unisex, so that one firefly is attracted to other fireflies regardless of their sex.

b) Attractiveness is proportional to brightness; thus, for any two flashing fireflies, the less bright one will move towards the brighter one. Attractiveness and brightness both decrease as the distance between the fireflies increases. If no firefly is brighter than a particular firefly, it will move randomly.

c) The brightness of a firefly is affected or determined by the landscape of the objective function to be optimized.

For simplicity, we can assume that the attractiveness of a firefly is determined by its brightness or light intensity, which in turn is associated with the encoded objective function. In the simplest case for an optimization problem, the brightness I of a firefly at a particular position X can be chosen as $I(X) \propto f(X)$. However, the attractiveness $\beta$ is relative; it should vary with the distance $r_{ij}$ between firefly i and firefly j. As light intensity decreases with the distance from its source and light is also absorbed in the media, we should allow the attractiveness to vary with the degree of absorption [3].

Step 1: Attractiveness and light intensity

In the firefly algorithm, there are two important issues: the variation of the light intensity and the formulation of the attractiveness. The light intensity I(r) varies with distance r monotonically and exponentially, that is:

$$I(r) = I_0 e^{-\gamma r} \tag{2}$$

where $I_0$ is the original light intensity and $\gamma$ is the light absorption coefficient. As firefly attractiveness is proportional to the light intensity seen by adjacent fireflies, we can now define the attractiveness $\beta$ of a firefly by equation (3):

$$\beta(r) = \beta_0 e^{-\gamma r^2} \tag{3}$$

where r is the distance between two fireflies and $\beta_0$ is their attractiveness at r = 0, i.e., when two fireflies are found at the same point of the search space. The value of $\gamma$ determines the variation of attractiveness with increasing distance from the communicated firefly.

Step 2: Distance

The distance between any two fireflies i and j, at $x_i$ and $x_j$ respectively, is the Cartesian distance given by equation (4), where $x_{i,k}$ is the kth component of the spatial coordinate $x_i$ of the ith firefly and n is the number of dimensions.

$$r_{ij} = \lVert x_i - x_j \rVert = \sqrt{\sum_{k=1}^{n} (x_{i,k} - x_{j,k})^2} \tag{4}$$

Step 3: Movement

The movement of a firefly i that is attracted to another, more attractive (brighter) firefly j is determined by:

$$x_i = x_i + \beta_0 e^{-\gamma r_{ij}^2} (x_j - x_i) + \alpha \left(\mathrm{rand} - \tfrac{1}{2}\right) \tag{5}$$

where the second term is due to the attraction, while the third term is randomization, with $\alpha$ being the randomization parameter and rand a random number generator uniformly distributed in [0, 1].
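Steps 1 to 3 can be illustrated with a short Python sketch of a single firefly move. The function names are hypothetical, and the defaults reuse the values alpha = 0.5, beta = 0.1 and gamma = 1 listed in the experimental section, reading beta as the attractiveness at r = 0 (an assumption). The attractiveness is taken to decay as exp(-gamma r^2), the form commonly used for the Firefly algorithm.

```python
import math
import random


def distance(xi, xj):
    """Cartesian distance between two fireflies (Step 2)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(xi, xj)))


def attractiveness(r, beta0=0.1, gamma=1.0):
    """Attractiveness at distance r, decaying from beta0 at r = 0 (Step 1)."""
    return beta0 * math.exp(-gamma * r * r)


def move_towards(xi, xj, alpha=0.5, beta0=0.1, gamma=1.0, rng=random):
    """Move firefly i towards a brighter firefly j (Step 3)."""
    beta = attractiveness(distance(xi, xj), beta0, gamma)
    return [a + beta * (b - a) + alpha * (rng.random() - 0.5)
            for a, b in zip(xi, xj)]


if __name__ == "__main__":
    print(distance([0.0, 0.0], [3.0, 4.0]))
    print(move_towards([0.0, 0.0], [1.0, 1.0]))
```

Setting `alpha=0` removes the random term, which makes the attraction term easy to inspect in isolation.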

Why the firefly algorithm is special [4]

1. The first answer to this question is the movement strategy of the fireflies. Equation (5) is the movement equation and consists of two main parts: information-based movement and random movement. These two parts are responsible for providing proper exploitation and exploration over the search space when finding optimal solutions.

2. A given firefly's brightness and its distance from another, brighter firefly are taken into account when modifying its solution. The random part creates a random perturbation, which can lead the solution to a better or a worse position.

Firefly Algorithm [5]

initialize n fireflies to random positions
loop maxEpochs times
    for i := 0 to n-1
        for j := 0 to n-1
            if intensity(i) < intensity(j)
                compute attractiveness
                move firefly(i) toward firefly(j)
                update firefly(i) intensity
            end if
        end for
    end for
    sort fireflies
end loop
return best position found
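The pseudocode above could be turned into a runnable Python sketch along the following lines. It is an illustration only and not the MATLAB code behind the reported results: the function name `firefly`, the bounds, the small population and the clamping of positions are assumptions. For a minimization problem a lower cost is treated as a brighter firefly, and the "sort fireflies" step is replaced by simply tracking the best position seen so far.

```python
import math
import random


def sphere(x):
    """Sphere benchmark: global minimum 0 at the origin."""
    return sum(v * v for v in x)


def firefly(f, dim=2, n=15, epochs=50, lb=-2.0, ub=2.0,
            alpha=0.5, beta0=0.1, gamma=1.0, seed=0):
    """Minimal Firefly algorithm sketch (minimization)."""
    rng = random.Random(seed)
    xs = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
    cost = [f(x) for x in xs]  # lower cost means brighter
    b = min(range(n), key=cost.__getitem__)
    best, best_cost = xs[b][:], cost[b]
    for _ in range(epochs):
        for i in range(n):
            for j in range(n):
                if cost[j] < cost[i]:  # firefly j is brighter than firefly i
                    r2 = sum((a - c) ** 2 for a, c in zip(xs[i], xs[j]))
                    beta = beta0 * math.exp(-gamma * r2)  # attractiveness
                    # Attraction plus random step; clamping is an assumption.
                    xs[i] = [min(ub, max(lb, a + beta * (c - a)
                                         + alpha * (rng.random() - 0.5)))
                             for a, c in zip(xs[i], xs[j])]
                    cost[i] = f(xs[i])
                    if cost[i] < best_cost:
                        best, best_cost = xs[i][:], cost[i]
    return best, best_cost


if __name__ == "__main__":
    print(firefly(sphere))
```

The nested loop over all pairs of fireflies makes each epoch quadratic in the population size, which is one plausible reason for elapsed time growing quickly with population.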

Bat Algorithms

The bat algorithm is a metaheuristic algorithm for global

optimization. It was inspired by the echolocation behavior

of microbats, with varying pulse rates of emission and

loudness. [6] [7]

The following approximate or idealized rules were used.

1. All bats use echolocation to sense distance, and they also know the difference between food/prey and background barriers in some magical way;

2. Bats fly randomly with velocity $v_i$ at position $x_i$ with a frequency $f_{\min}$, varying wavelength $\lambda$ and loudness $A_0$ to search for prey. They can automatically adjust the wavelength (or frequency) of their emitted pulses and adjust the rate of pulse emission $r \in [0, 1]$, depending on the proximity of their target;

3. Although the loudness can vary in many ways, we assume that the loudness varies from a large (positive) $A_0$ to a minimum constant value $A_{\min}$.

In simulations, we have to define how the positions $x_i$ and velocities $v_i$ in a d-dimensional search space are updated. The new solutions $x_i^t$ and velocities $v_i^t$ at time step t are given by

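$$f_i = f_{\min} + (f_{\max} - f_{\min})\,\beta \tag{6}$$

$$v_i^{t+1} = v_i^t + (x_i^t - x_*)\, f_i \tag{7}$$

$$x_i^{t+1} = x_i^t + v_i^{t+1} \tag{8}$$

where $\beta \in [0, 1]$ is a random number drawn from a uniform distribution and $x_*$ is the current global best solution.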

Initially, each bat is randomly assigned a frequency drawn uniformly from $[f_{\min}, f_{\max}]$. For the local search part, once a solution is selected among the current best solutions, a new solution for each bat is generated locally using a random walk

$$x_{\text{new}} = x_{\text{old}} + \epsilon A^t \tag{9}$$

where $\epsilon$ is a random number vector drawn from [-1, 1], while $A^t = \langle A_i^t \rangle$ is the average loudness of all the bats at this time step.

Loudness and Pulse Emission

The loudness $A_i$ and the rate $r_i$ of pulse emission have to be updated as the iterations proceed. As the loudness usually decreases once a bat has found its prey, while the rate of pulse emission increases, the loudness can be chosen as any value of convenience. For example, we can use $A_0 = 100$ and $A_{\min} = 1$. For simplicity, we can also use $A_0 = 1$ and $A_{\min} = 0$, assuming $A_{\min} = 0$ means that a bat has just found the prey and temporarily stops emitting any sound. Now we have

$$A_i^{t+1} = \alpha A_i^t, \qquad r_i^t = r_i^0 \left[1 - \exp(-\gamma t)\right] \tag{10}$$

where $\alpha$ and $\gamma$ are constants. In fact, $\alpha$ is similar to the cooling factor of a cooling schedule in simulated annealing (Kirkpatrick et al., 1983). For any $0 < \alpha < 1$ and $\gamma > 0$,

$$A_i^t \to 0, \quad r_i^t \to r_i^0, \quad \text{as } t \to \infty$$
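The limiting behaviour of equation (10) is easy to check numerically. The short Python sketch below evaluates both schedules; the function names and the constants alpha = 0.9 and gamma = 0.9 used in the usage example are illustrative assumptions, since the paper does not report the values used. The loudness is written in closed form, which follows from applying the recurrence t times starting from the initial loudness.

```python
import math


def loudness(a0, alpha, t):
    """Loudness after t updates: repeated A <- alpha * A starting from a0."""
    return a0 * alpha ** t


def pulse_rate(r0, gamma, t):
    """Pulse emission rate at step t, rising from 0 towards r0."""
    return r0 * (1.0 - math.exp(-gamma * t))


if __name__ == "__main__":
    for t in (0, 1, 5, 20, 100):
        print(t, loudness(1.0, 0.9, t), pulse_rate(0.5, 0.9, t))
```

With these constants the loudness shrinks geometrically while the pulse rate saturates at its limit within a few iterations.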

Bat Algorithm [12]

Objective function f(x), x = (x1, ..., xd)^T
Initialize the bat population xi (i = 1, 2, ..., n) and vi
Define pulse frequency fi at xi
Initialize pulse rates ri and the loudness Ai
while (t < Max number of iterations)
    Generate new solutions by adjusting frequency, and updating velocities and locations/solutions [equations (6) to (8)]
    if (rand > ri)
        Select a solution among the best solutions
        Generate a local solution around the selected best solution
    end if
    Generate a new solution by flying randomly
    if (rand < Ai & f(xi) < f(x*))
        Accept the new solutions
        Increase ri and reduce Ai
    end if
    Rank the bats and find the current best x*
end while
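A compact Python sketch of the loop above is given here for illustration. It is not the MATLAB implementation used for the experiments, and several details are assumptions: the function name `bat`, the frequency range, the constants `alpha` and `gamma`, the clamping of positions to the bounds, and the acceptance test, which follows common implementations in comparing a candidate with the bat's own current cost. The bounds [-2, 2] and the values 0.5 for loudness and pulse rate follow the experimental section.

```python
import math
import random


def sphere(x):
    """Sphere benchmark: global minimum 0 at the origin."""
    return sum(v * v for v in x)


def bat(f, dim=2, n=20, iters=200, lb=-2.0, ub=2.0, f_min=0.0, f_max=2.0,
        a0=0.5, r0=0.5, alpha=0.9, gamma=0.9, seed=0):
    """Minimal Bat algorithm sketch (minimization)."""
    rng = random.Random(seed)

    def clip(v):
        return max(lb, min(ub, v))  # keeping positions in bounds is assumed

    xs = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
    vs = [[0.0] * dim for _ in range(n)]
    cost = [f(x) for x in xs]
    loud = [a0] * n
    rate = [0.0] * n  # pulse-rate schedule evaluated at t = 0
    b = min(range(n), key=cost.__getitem__)
    best, best_cost = xs[b][:], cost[b]
    for t in range(1, iters + 1):
        mean_loud = sum(loud) / n
        for i in range(n):
            freq = f_min + (f_max - f_min) * rng.random()       # frequency
            vs[i] = [v + (x - g) * freq
                     for v, x, g in zip(vs[i], xs[i], best)]    # velocity
            cand = [clip(x + v) for x, v in zip(xs[i], vs[i])]  # position
            if rng.random() > rate[i]:
                # Local random walk around the current best solution.
                cand = [clip(g + rng.uniform(-1.0, 1.0) * mean_loud)
                        for g in best]
            c = f(cand)
            if rng.random() < loud[i] and c < cost[i]:
                xs[i], cost[i] = cand, c
                loud[i] *= alpha                                # reduce A_i
                rate[i] = r0 * (1.0 - math.exp(-gamma * t))     # increase r_i
            if c < best_cost:
                best, best_cost = cand[:], c
    return best, best_cost


if __name__ == "__main__":
    print(bat(sphere))
```

Each bat evaluates the objective once per iteration, so the cost per iteration grows linearly with the population size.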

RESULTS AND DISCUSSION

To demonstrate how the Firefly, PSO and Bat algorithms work, we consider unconstrained global optimization benchmark functions. We implemented the algorithms in MATLAB, and the results are shown below. To show that both the global optima and local optima can be found simultaneously, simulations were carried out with equal population sizes of 100, 300 and 500 for all the algorithms. All the algorithms were run for 1000 iterations, and the results were then recorded. The stopping criterion is the maximum number of iterations. For fair analysis and comparison, all the algorithms are tested on standard unconstrained global optimization benchmark functions.
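The paper does not list the formulas of the six benchmark functions that appear in the tables below. As a reference point, their usual textbook definitions can be written in Python as follows. These are the standard forms and are only assumed to match the ones used in the experiments; all six are minimization problems with a global minimum value of 0.

```python
import math


def sphere(x):
    """Minimum 0 at x = (0, ..., 0)."""
    return sum(v * v for v in x)


def ackley(x):
    """Minimum 0 at x = (0, ..., 0)."""
    n = len(x)
    mean_sq = sum(v * v for v in x) / n
    mean_cos = sum(math.cos(2 * math.pi * v) for v in x) / n
    return (-20 * math.exp(-0.2 * math.sqrt(mean_sq))
            - math.exp(mean_cos) + 20 + math.e)


def rastrigin(x):
    """Minimum 0 at x = (0, ..., 0)."""
    return 10 * len(x) + sum(v * v - 10 * math.cos(2 * math.pi * v) for v in x)


def griewank(x):
    """Minimum 0 at x = (0, ..., 0)."""
    total = sum(v * v for v in x) / 4000
    prod = math.prod(math.cos(v / math.sqrt(i))
                     for i, v in enumerate(x, start=1))
    return total - prod + 1


def rosenbrock(x):
    """Minimum 0 at x = (1, ..., 1)."""
    return sum(100 * (x[i + 1] - x[i] ** 2) ** 2 + (1 - x[i]) ** 2
               for i in range(len(x) - 1))


def schwefel(x):
    """Minimum close to 0 near x = (420.9687, ..., 420.9687)."""
    return 418.9829 * len(x) - sum(v * math.sin(math.sqrt(abs(v))) for v in x)


if __name__ == "__main__":
    print(ackley([0.0] * 30))
```

In floating-point arithmetic the Ackley function evaluated at the origin returns a value of the order of 1e-16 rather than exactly zero, so results of that magnitude in practice indicate that the global minimum was reached.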

For particle swarm optimization, the parameters are: maximum number of iterations 1000; population sizes of 100, 300 and 500 particles; inertia weight w = 1; inertia weight damping ratio wdamp = 0.99; personal learning coefficient c1 = 1.5; and global learning coefficient c2 = 2.0. The dimension of the problems is set to 30. For every population size, the results are recorded and presented in two ways: a) best solution, i.e. global minimum, and b) elapsed time.

For the Firefly algorithm, the parameters are: maximum number of iterations 1000; population sizes of 100, 300 and 500 fireflies; alpha = 0.5, beta = 0.1 and gamma = 1. The dimension of the problems is set to 30. For every population size, the results are recorded and presented in two ways: a) best solution, i.e. global minimum, and b) elapsed time.

For the Bat algorithm, the parameters are: maximum number of iterations 1000; population sizes of 100, 300 and 500 bats; loudness (constant or decreasing) = 0.5; pulse rate (constant or decreasing) = 0.5; and bounds Lb = -2*ones(1,d), Ub = 2*ones(1,d). The dimension of the problems is set to 30. For every population size, the results are recorded and presented in two ways: a) best solution, i.e. global minimum, and b) elapsed time.

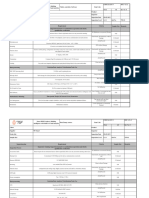

Table 1 Comparative performance of Algorithms (Population=100). Entries are best solution (elapsed time).

| Benchmark function | PSO | FA | Bat |
|---|---|---|---|
| Sphere function | 4.3267 (34.1273) | 1.1878 (63.5433) | 7.136 (24.94) |
| Ackley function | 8.881 (22.8763) | 8.8818 (18.1611) | 0.00216 (37.69) |
| Rastrigin function | 0 (31.4657) | 0 (36.9632) | 57.7098 (42.49) |
| Griewank function | 0.007396 (30.4459) | 0 (0.03925) | 5.942 (32.11) |
| Rosenbrock function | 0.2898 (47.1184) | 1.5731 (62.3811) | 29.40 (46.25) |
| Schwefel function | 798.5128 (32.1073) | 801.3398 (31.4254) | |

Table 2 Comparative performance of Algorithms (Population=300). Entries are best solution (elapsed time).

| Benchmark function | PSO | FA | Bat |
|---|---|---|---|
| Sphere function | 0 (110.3773) | 1.1671 (556.952) | 4.7428 (30.10) |
| Ackley function | 8.8818e-16 | 8.8818 (451.717) | 0.002579 (38.95) |
| Rastrigin function | 0 (99.24.2) | 0 (386.0997) | 43.7803 (43.35) |
| Griewank function | 0 (95.975335) | 0 (0.3090) | 5.942 (32.11) |
| Rosenbrock function | 4.0364 (122.3400) | 1.2441 (560.4124) | 4.4691 (46.74) |
| Schwefel function | 798.5128 (79.837988) | 808.3921 (279.2434) | 5.7732 (49.64) |

Table 3 Comparative performance of Algorithms (Population=500). Entries are best solution (elapsed time).

| Benchmark function | PSO | FA | Bat |
|---|---|---|---|
| Sphere function | 0 (136.2281) | 1.0810 (1560.2457) | 2.5845 (30.67) |
| Ackley function | 8.8818 (175.3049) | 8.8818 (451.7176) | 0.002277 (41.56) |
| Rastrigin function | 0 (151.844925) | 0 (1319.7696) | 45.7697 (44.04) |
| Griewank function | 0.007396 (30.445990) | 0 (0.8410) | 5.0217 (34.77) |
| Rosenbrock function | 0.0729 (222.7411) | 1.4270 (1533.43) | 0.6223 (47.38) |
| Schwefel function | 798.5128 (167.4429) | 808.3333 (1557.870) | 2.8866 (49.66) |

Performance of multimodal functions with the given algorithms

Ackley's function has one global optimum and many minor local optima. Among the six standard benchmark functions, it is the one on which the Bat algorithm does best, as its local optima are shallow. It has been observed that results on the Ackley function are better than on the other functions. For Griewank's function, a larger population gives a better best cost. Rastrigin's function is a complex multimodal problem with a large number of local optima; when attempting to solve Rastrigin's function, algorithms may easily fall into a local optimum. Results on the Sphere function differ from algorithm to algorithm; the Firefly algorithm moves towards the optimum on the Sphere benchmark function. The complexity of Schwefel's function is due to its deep local optima being far from the global optimum.

3.2196

(48.50)


Comparison Graph for Time required to run Different Algorithms

Comparing the convergence and execution speed of the PSO, Firefly and Bat algorithms on these six multimodal, n-dimensional global optimization benchmark functions, it has been observed that the Bat algorithm is better in both convergence and execution speed.

Fig 2 Comparison of Time required to run Different Algorithms

References

[1]. Kennedy, J. and Eberhart, R., Particle swarm optimization. In Proceedings of the IEEE International Conference on Neural Networks, Vol. 4, 1995, pp. 1942-1948.

[2]. Yang, X. S. (2008), Nature-Inspired Metaheuristic Algorithms. Luniver Press.

[3]. Yang, X. S. (2011), Bat algorithm for multi-objective optimisation, Int. J. Bio-Inspired Computation, Vol. 3, No. 5, pp. 267-274.

[4]. M. K. A. Ariyaratne, W. P. J. Pemarathne, A Review of Recent Advancements of Firefly Algorithm; A Modern Nature Inspired Algorithm, Proceedings of 8th International Research Conference, KDU, published November 2015.

[5]. Iztok Fister Jr., Matjaz Perc, Salahuddin M. Kamal, Iztok Fister, A review of chaos-based firefly algorithms: Perspectives and research challenges, Applied Mathematics and Computation 252 (2015) 155-165.

[6]. J. D. Altringham, Bats: Biology and Behaviour, Oxford University Press, 1996.

[7]. P. Richardson, Bats, Natural History Museum, London, 2008; Yang, X. S. (2010), "A New Metaheuristic Bat-Inspired Algorithm", in: Nature Inspired Cooperative Strategies for Optimization (NISCO 2010), Studies in Computational Intelligence 284: 65-74. arXiv:1004.417

[8]. X. S. Yang, A New Metaheuristic Bat-Inspired Algorithm, in: Nature Inspired Cooperative Strategies for Optimization (NISCO 2010) (Eds. Cruz, C.; Gonzalez, J. R.; Pelta, D. A.; Terrazas, G.), Studies in Computational Intelligence Vol. 284, Springer Berlin, pp. 65-74, 2010.

[9]. P. Agarwal and S. Mehta, Nature-Inspired Algorithms: State-of-Art, Problems and Prospects, International Journal of Computer Applications, 2014, Vol. 100, No. 14, pp. 14-21.

[10]. I. Fister, X. S. Yang, J. Brest, and D. Fister, A Brief Review of Nature-Inspired Algorithms for Optimization, Elektrotehniski Vestnik, Jul. 2013.

[11]. Weng Kee Wong, Nature-Inspired Metaheuristic Algorithms for Generating Optimal Experimental Designs, 2011.

[12]. Yazan A. Alsariera, Hammoudeh S. Alamri, Abdullah M. Nasser, Mazlina A. Majid, Kamal Z. Zamli, Comparative Performance Analysis of Bat Algorithm and Bacterial Foraging Optimization Algorithm using Standard Benchmark Functions, 2014 8th Malaysian Software Engineering Conference (MySEC).

[13]. S. K. Subhani Shareef, E. Rasul Mohideen, Layak Ali, Directed Firefly algorithm for multimodal problems, 2015 IEEE International Conference on Computational Intelligence and Computing Research.

AUTHORS

Prof. Radha A. Pimpale is presently working as Assistant Professor at Priyadarshini Institute of Engineering and Technology, Nagpur. She has 11 research papers published in reputed national and international journals. She is a research scholar at Rashtrasant Tukadoji Maharaj Nagpur University, Nagpur, pursuing a Ph.D. under the guidance of Dr. P.K. Butey. She has a total of 15 years of experience in teaching and research in the field of Computer Science.

Dr. Pradeep Butey is presently working as Associate Professor and Head of the Department of Computer Science, Kamla Nehru Mahavidyalaya, Nagpur. He received his doctorate in Computer Science from RTM Nagpur University, Nagpur, in March 2011. His areas of interest include data mining, fuzzy logic, optimization and RDBMS. He has 18 research papers published in reputed international journals. He is Chairman of the Board of Studies in Computer Science of the University. He has 12 years of experience in teaching and research in Computer Science. He has served as a member of selection committees on many occasions. He is a member of the Academic Council of RTM Nagpur University.


- Appsc Aee Mains 2019 Electrical Engineering Paper III 1fcbb2c9Document12 pagesAppsc Aee Mains 2019 Electrical Engineering Paper III 1fcbb2c9SURYA PRAKASHNo ratings yet

- Top Survival Tips - Kevin Reeve - OnPoint Tactical PDFDocument8 pagesTop Survival Tips - Kevin Reeve - OnPoint Tactical PDFBillLudley5100% (1)

- Deictics and Stylistic Function in J.P. Clark-Bekederemo's PoetryDocument11 pagesDeictics and Stylistic Function in J.P. Clark-Bekederemo's Poetryym_hNo ratings yet

- Electronic Waste Management in Sri Lanka Performance and Environmental Aiudit Report 1 EDocument41 pagesElectronic Waste Management in Sri Lanka Performance and Environmental Aiudit Report 1 ESupun KahawaththaNo ratings yet

- Risk Management: Questions and AnswersDocument5 pagesRisk Management: Questions and AnswersCentauri Business Group Inc.No ratings yet

- Week 1-2 Module 1 Chapter 1 Action RseearchDocument18 pagesWeek 1-2 Module 1 Chapter 1 Action RseearchJustine Kyle BasilanNo ratings yet

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- GSP AllDocument8 pagesGSP AllAleksandar DjordjevicNo ratings yet

- Old Man and The SeaDocument10 pagesOld Man and The SeaXain RanaNo ratings yet

- (LaSalle Initiative) 0Document4 pages(LaSalle Initiative) 0Ann DwyerNo ratings yet

- MGT403 Slide All ChaptersDocument511 pagesMGT403 Slide All Chaptersfarah aqeelNo ratings yet

- Week1 TutorialsDocument1 pageWeek1 TutorialsAhmet Bahadır ŞimşekNo ratings yet

- IRJ November 2021Document44 pagesIRJ November 2021sigma gaya100% (1)

- 42ld340h Commercial Mode Setup Guide PDFDocument59 pages42ld340h Commercial Mode Setup Guide PDFGanesh BabuNo ratings yet

- ZyLAB EDiscovery 3.11 What's New ManualDocument32 pagesZyLAB EDiscovery 3.11 What's New ManualyawahabNo ratings yet

- PTE Writing FormatDocument8 pagesPTE Writing FormatpelizNo ratings yet

- Diagnostic Test Everybody Up 5, 2020Document2 pagesDiagnostic Test Everybody Up 5, 2020George Paz0% (1)

- ACTIVITY Design - Nutrition MonthDocument7 pagesACTIVITY Design - Nutrition MonthMaria Danica89% (9)

- An Analysis of The Cloud Computing Security ProblemDocument6 pagesAn Analysis of The Cloud Computing Security Problemrmsaqib1No ratings yet

- 02 Laboratory Exercise 1Document2 pages02 Laboratory Exercise 1Mico Bryan BurgosNo ratings yet

- 2015 Grade 4 English HL Test MemoDocument5 pages2015 Grade 4 English HL Test MemorosinaNo ratings yet

- Mythology GreekDocument8 pagesMythology GreekJeff RamosNo ratings yet

- Computer Science HandbookDocument50 pagesComputer Science HandbookdivineamunegaNo ratings yet

- Enzymatic Hydrolysis, Analysis of Mucic Acid Crystals and Osazones, and Thin - Layer Chromatography of Carbohydrates From CassavaDocument8 pagesEnzymatic Hydrolysis, Analysis of Mucic Acid Crystals and Osazones, and Thin - Layer Chromatography of Carbohydrates From CassavaKimberly Mae MesinaNo ratings yet

- Quality Assurance Plan-75FDocument3 pagesQuality Assurance Plan-75Fmohamad chaudhariNo ratings yet

- Idoc - Pub - Pokemon Liquid Crystal PokedexDocument19 pagesIdoc - Pub - Pokemon Liquid Crystal PokedexPerfect SlaNaaCNo ratings yet

- Agreement - IDP - Transformation Institute - 2023Document16 pagesAgreement - IDP - Transformation Institute - 2023Elite ProgramNo ratings yet

- Green ProtectDocument182 pagesGreen ProtectLuka KosticNo ratings yet

- The Voice of God: Experience A Life Changing Relationship with the LordFrom EverandThe Voice of God: Experience A Life Changing Relationship with the LordNo ratings yet

- From Raindrops to an Ocean: An Indian-American Oncologist Discovers Faith's Power From A PatientFrom EverandFrom Raindrops to an Ocean: An Indian-American Oncologist Discovers Faith's Power From A PatientRating: 1 out of 5 stars1/5 (1)