Stat 151 Formulas

Uploaded by

Tanner Hughes

Stat 151 Formula Sheet

Numerical Summaries:

- Sample Mean: $\bar{y} = \dfrac{\sum y_i}{n}$

- Sample Variance: $s^2 = \dfrac{\sum (y_i - \bar{y})^2}{n - 1}$

- Sample Standard Deviation: $s = \sqrt{s^2}$

- Range: $\max - \min$

- Interquartile Range: $\mathrm{IQR} = Q_3 - Q_1$

- 5-number summary: min, $Q_1$, median, $Q_3$, max

- Standardized Value: $z\text{-score} = \dfrac{y - \bar{y}}{s}$

- Least-Squares regression: $\hat{y} = b_0 + b_1 x$, where $b_1 = r\,\dfrac{s_y}{s_x}$, $b_0 = \bar{y} - b_1 \bar{x}$

- Correlation: $r = \dfrac{1}{n - 1} \sum_{i=1}^{n} z_x z_y = b_1\,\dfrac{s_x}{s_y}$
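
As a rough illustration of the summaries above, the following Python sketch computes them for two short lists. The function names (`summaries`, `z_scores`, `least_squares`) and the data are made up for this example. The quartiles come from `statistics.quantiles` with its default method, which is an assumption and may not match the quartile rule used in a particular course.

```python
import statistics as st


def summaries(y):
    """Sample mean, variance, SD, range, IQR and 5-number summary of a list."""
    n = len(y)
    y_bar = sum(y) / n
    s2 = sum((yi - y_bar) ** 2 for yi in y) / (n - 1)  # divide by n - 1
    # Assumption: quartiles via the stdlib default ("exclusive") method.
    q1, median, q3 = st.quantiles(y, n=4)
    return {
        "mean": y_bar,
        "variance": s2,
        "sd": s2 ** 0.5,
        "range": max(y) - min(y),
        "iqr": q3 - q1,
        "five_number": (min(y), q1, median, q3, max(y)),
    }


def z_scores(y):
    """Standardized values (y_i - y_bar) / s."""
    y_bar, s = st.mean(y), st.stdev(y)
    return [(yi - y_bar) / s for yi in y]


def least_squares(x, y):
    """Return (r, b0, b1) with r = sum(z_x * z_y) / (n - 1), b1 = r * s_y / s_x."""
    n = len(x)
    r = sum(zx * zy for zx, zy in zip(z_scores(x), z_scores(y))) / (n - 1)
    b1 = r * st.stdev(y) / st.stdev(x)
    b0 = st.mean(y) - b1 * st.mean(x)
    return r, b0, b1


# Hypothetical data for illustration only.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
r, b0, b1 = least_squares(x, y)
print(summaries(y))
print(f"r = {r:.4f}, fitted line: y-hat = {b0:.2f} + {b1:.2f} x")
```

For these made-up values the fitted slope is 0.6 and the intercept is 2.2, which agrees with $b_1 = r\,s_y/s_x$ and $b_0 = \bar{y} - b_1\bar{x}$.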

Probability Formulas:

Complement: $P(A^C) = 1 - P(A)$

Conditional probability: $P(A \mid B) = \dfrac{P(A \cap B)}{P(B)}$

General addition law:

$P(A \cup B) = P(A) + P(B) - P(A \cap B)$

If A and B are disjoint, then

$P(A \cup B) = P(A) + P(B)$

General Multiplication law:

$P(A \cap B) = P(A)\,P(B \mid A) = P(B)\,P(A \mid B)$

If A and B are independent, then

$P(A \cap B) = P(A)\,P(B)$

Sampling Distribution of the sample mean:

For a random sample of size $n$ from a population with mean $\mu$ and standard deviation $\sigma$, the sampling distribution of the sample mean, $\bar{Y}$, has a mean of $\mu_{\bar{Y}} = \mu$ and a standard deviation of $SD(\bar{Y}) = \sigma_{\bar{Y}} = \dfrac{\sigma}{\sqrt{n}}$.

If the population distribution is normal, then $\bar{Y}$ has a normal distribution.

Central Limit Theorem: For large $n$ ($n > 30$), the sampling distribution of $\bar{Y}$ is approximately normal.

Sampling Distribution of the sample proportion:

For a random sample of size $n$ from a population with proportion $p$, the sampling distribution of the sample proportion has a mean of $\mu_{\hat{p}} = p$ and a standard deviation of $SD(\hat{p}) = \sigma_{\hat{p}} = \sqrt{\dfrac{p(1 - p)}{n}} = \sqrt{\dfrac{pq}{n}}$, where $q = 1 - p$.

For large $n$ ($np \ge 10$ and $n(1 - p) \ge 10$), the sampling distribution of $\hat{p}$ is approximately normal.

Inference for one population mean:

Sample size: For a $100(1 - \alpha)\%$ confidence with margin of error $E$, $n = \left(\dfrac{z^*_{\alpha/2}\,\sigma}{E}\right)^2$

Case 1: $\sigma$ is known

A $100(1 - \alpha)\%$ C.I. for $\mu$: $\bar{Y} \pm z^*_{\alpha/2}\,\dfrac{\sigma}{\sqrt{n}}$

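Test-Statistic for testing $H_0: \mu = \mu_0$:

$TS = \dfrac{\bar{Y} - \mu_0}{\sigma / \sqrt{n}} \sim$ Normal distribution if $H_0$ is true.

Case 2: $\sigma$ is unknown

A $100(1 - \alpha)\%$ C.I. for $\mu$: $\bar{Y} \pm t^*_{n-1,\,\alpha/2}\,\dfrac{s}{\sqrt{n}}$

Test-Statistic for testing $H_0: \mu = \mu_0$:

$TS = \dfrac{\bar{Y} - \mu_0}{S / \sqrt{n}} \sim t$ distribution with $df = n - 1$, if $H_0$ is true.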
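
The one-mean formulas can be sketched in Python as below. This is only an illustration under stated assumptions: the function names and all numbers are hypothetical, $z^*$ is taken from the standard library's `NormalDist`, and $t^*$ is passed in by hand because the standard library has no t quantile function (the value 2.064 is the usual table entry for $df = 24$ at 95% confidence).

```python
import math
from statistics import NormalDist


def z_star(alpha):
    """Critical value z*_{alpha/2} of the standard normal distribution."""
    return NormalDist().inv_cdf(1 - alpha / 2)


def sample_size_for_mean(sigma, margin, alpha=0.05):
    """n = (z* sigma / E)^2, rounded up to the next whole observation."""
    return math.ceil((z_star(alpha) * sigma / margin) ** 2)


def z_interval(y_bar, sigma, n, alpha=0.05):
    """Case 1 (sigma known): y_bar +/- z* sigma / sqrt(n)."""
    half = z_star(alpha) * sigma / math.sqrt(n)
    return y_bar - half, y_bar + half


def z_statistic(y_bar, mu0, sigma, n):
    """Case 1 test statistic for H0: mu = mu0."""
    return (y_bar - mu0) / (sigma / math.sqrt(n))


def t_interval(y_bar, s, n, t_star):
    """Case 2 (sigma unknown): y_bar +/- t* s / sqrt(n); t* comes from a table."""
    half = t_star * s / math.sqrt(n)
    return y_bar - half, y_bar + half


def t_statistic(y_bar, mu0, s, n):
    """Case 2 test statistic and its degrees of freedom (n - 1)."""
    return (y_bar - mu0) / (s / math.sqrt(n)), n - 1


# Hypothetical numbers for illustration only.
print(z_interval(y_bar=50, sigma=10, n=25))           # known sigma
print(sample_size_for_mean(sigma=10, margin=2))       # n for E = 2
print(t_interval(y_bar=52, s=5, n=25, t_star=2.064))  # t* for df = 24
print(t_statistic(y_bar=52, mu0=50, s=5, n=25))
```

Rounding the sample size up rather than to the nearest integer is a common convention that keeps the margin of error at or below $E$.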

Large Sample Inference for one proportion:

Sample size: For a $100(1 - \alpha)\%$ confidence with margin of error $E$, $n = \left(\dfrac{z^*_{\alpha/2}}{E}\right)^2 p(1 - p)$

An approximate $100(1 - \alpha)\%$ C.I. for $p$: $\hat{p} \pm z^*_{\alpha/2} \sqrt{\dfrac{\hat{p}(1 - \hat{p})}{n}}$

Test-Statistic for testing $H_0: p = p_0$:

$TS = \dfrac{\hat{p} - p_0}{\sqrt{\dfrac{p_0(1 - p_0)}{n}}} \sim$ Normal distribution if $H_0$ is true.
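
A minimal Python sketch of the one-proportion formulas follows. The function names and the numbers are hypothetical. Using $p = 0.5$ as the planning value in the sample-size formula is an assumption (it gives the largest $n$), not something the sheet specifies.

```python
import math
from statistics import NormalDist


def z_star(alpha):
    """Critical value z*_{alpha/2} of the standard normal distribution."""
    return NormalDist().inv_cdf(1 - alpha / 2)


def sample_size_for_proportion(margin, p=0.5, alpha=0.05):
    """n = (z* / E)^2 p (1 - p), rounded up. p = 0.5 is an assumed planning value."""
    return math.ceil((z_star(alpha) / margin) ** 2 * p * (1 - p))


def proportion_interval(p_hat, n, alpha=0.05):
    """Approximate C.I.: p_hat +/- z* sqrt(p_hat (1 - p_hat) / n)."""
    half = z_star(alpha) * math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - half, p_hat + half


def proportion_z_statistic(p_hat, p0, n):
    """Test statistic for H0: p = p0; the null value p0 goes in the standard error."""
    return (p_hat - p0) / math.sqrt(p0 * (1 - p0) / n)


# Hypothetical numbers for illustration only.
print(sample_size_for_proportion(margin=0.05))
print(proportion_interval(p_hat=0.6, n=100))
print(proportion_z_statistic(p_hat=0.6, p0=0.5, n=100))
```

Note the different standard errors: the interval uses $\hat{p}$, while the test statistic uses the hypothesized $p_0$.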

Inference for two population means:

Independent samples:

Case 1: Assuming $\sigma_1 = \sigma_2$

$S_p = \sqrt{\dfrac{(n_1 - 1) S_1^2 + (n_2 - 1) S_2^2}{n_1 + n_2 - 2}}$

A $100(1 - \alpha)\%$ C.I. for $\mu_1 - \mu_2$:

$\bar{Y}_1 - \bar{Y}_2 \pm t^*_{n_1 + n_2 - 2,\,\alpha/2}\, S_p \sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}$

Test-Statistic for testing $H_0: \mu_1 - \mu_2 = 0$:

$TS = \dfrac{\bar{Y}_1 - \bar{Y}_2 - 0}{S_p \sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}} \sim t$ distribution with $df = n_1 + n_2 - 2$ if $H_0$ is true.
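
The pooled (equal-variance) case can be sketched in Python from summary statistics. The function names and the numbers are hypothetical, and $t^*$ is supplied by hand from a t table (2.101 is the usual entry for $df = 18$ at 95% confidence).

```python
import math


def pooled_sd(s1, n1, s2, n2):
    """S_p = sqrt(((n1 - 1) S1^2 + (n2 - 1) S2^2) / (n1 + n2 - 2))."""
    return math.sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))


def pooled_t_statistic(y1_bar, s1, n1, y2_bar, s2, n2):
    """Test statistic for H0: mu1 - mu2 = 0 and its degrees of freedom."""
    se = pooled_sd(s1, n1, s2, n2) * math.sqrt(1 / n1 + 1 / n2)
    return (y1_bar - y2_bar) / se, n1 + n2 - 2


def pooled_interval(y1_bar, s1, n1, y2_bar, s2, n2, t_star):
    """C.I. for mu1 - mu2; t_star must be looked up for df = n1 + n2 - 2."""
    half = t_star * pooled_sd(s1, n1, s2, n2) * math.sqrt(1 / n1 + 1 / n2)
    diff = y1_bar - y2_bar
    return diff - half, diff + half


# Hypothetical summary statistics for illustration only.
ts, df = pooled_t_statistic(10, 2, 10, 8, 2, 10)
print(f"TS = {ts:.4f} with df = {df}")
print(pooled_interval(10, 2, 10, 8, 2, 10, t_star=2.101))
```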

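Case 2: Not Assuming $\sigma_1 = \sigma_2$

A $100(1 - \alpha)\%$ C.I. for $\mu_1 - \mu_2$:

$\bar{Y}_1 - \bar{Y}_2 \pm t^*_{\min(n_1 - 1,\,n_2 - 1),\,\alpha/2} \sqrt{\dfrac{S_1^2}{n_1} + \dfrac{S_2^2}{n_2}}$

Test-Statistic for testing $H_0: \mu_1 - \mu_2 = 0$:

$TS = \dfrac{\bar{Y}_1 - \bar{Y}_2 - 0}{\sqrt{\dfrac{S_1^2}{n_1} + \dfrac{S_2^2}{n_2}}} \sim t$ distribution with $df = \min(n_1 - 1, n_2 - 1)$ if $H_0$ is true.

Paired samples: $D = Y_1 - Y_2$

A $100(1 - \alpha)\%$ C.I. for $\mu_D = \mu_1 - \mu_2$:

$\bar{D} \pm t^*_{n-1,\,\alpha/2}\,\dfrac{S_D}{\sqrt{n}}$

Test-Statistic for testing $H_0: \mu_D = \mu_1 - \mu_2 = 0$:

$TS = \dfrac{\bar{D} - 0}{S_D / \sqrt{n}} \sim t$ distribution with $df = n - 1$ if $H_0$ is true.

Large sample inference for two proportions:

A $100(1 - \alpha)\%$ C.I. for $p_1 - p_2$:

$\hat{p}_1 - \hat{p}_2 \pm z^*_{\alpha/2} \sqrt{\dfrac{\hat{p}_1(1 - \hat{p}_1)}{n_1} + \dfrac{\hat{p}_2(1 - \hat{p}_2)}{n_2}}$

Test-Statistic for testing $H_0: p_1 - p_2 = 0$:

$TS = \dfrac{\hat{p}_1 - \hat{p}_2 - 0}{\sqrt{\hat{p}(1 - \hat{p})\left(\dfrac{1}{n_1} + \dfrac{1}{n_2}\right)}} \sim$ Normal distribution if $H_0$ is true, where $\hat{p} = \dfrac{y_1 + y_2}{n_1 + n_2} = \dfrac{n_1 \hat{p}_1 + n_2 \hat{p}_2}{n_1 + n_2}$.

One Factor ANOVA F-test:

Total number of observations: $N = n_1 + \cdots + n_k$

Grand mean: $\bar{\bar{Y}} = \dfrac{n_1 \bar{Y}_1 + n_2 \bar{Y}_2 + \cdots + n_k \bar{Y}_k}{N}$

Between-group variability: $MS(B) = \dfrac{n_1(\bar{Y}_1 - \bar{\bar{Y}})^2 + \cdots + n_k(\bar{Y}_k - \bar{\bar{Y}})^2}{k - 1}$

Within-group variability: $MS(W) = \dfrac{(n_1 - 1) S_1^2 + \cdots + (n_k - 1) S_k^2}{N - k}$

Total sum of squares: $SS(\text{Total}) = SS(\text{Between}) + SS(\text{Within})$

Test statistic for testing $H_0: \mu_1 = \mu_2 = \cdots = \mu_k$:

$TS = \dfrac{MS(B)}{MS(W)} \sim F$ distribution, if $H_0$ is true, with $df_1 = k - 1$ and $df_2 = N - k$.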

Simple Linear Regression:

Model: $\mu\{Y \mid X\} = \beta_0 + \beta_1 X$, $\beta_0$ = Intercept, $\beta_1$ = Slope

Estimated model: $\hat{\mu}\{Y \mid X\} = b_0 + b_1 X$, where $b_1 = r\,\dfrac{s_y}{s_x}$ and $b_0 = \bar{y} - b_1 \bar{x}$

$\sigma$ - the standard deviation of the model

$\hat{\sigma} = s_e = \sqrt{MS_{Error}} = \sqrt{\dfrac{SS(\text{Error})}{n - 2}}$ - the standard error of the model

$SS(\text{Total}) = SS(\text{Regression}) + SS(\text{Error})$

$SS(\text{Regression}) = SS_{Regression} = b_1^2 (n - 1) s_x^2$

$SS(\text{Total}) = SS_{Total} = (n - 1) s_y^2$

Inference for slope:

$SE(b_1) = \dfrac{s_e}{\sqrt{(n - 1) s_x^2}} = \dfrac{s_e}{\sqrt{n - 1}\; s_x}$

A $100(1 - \alpha)\%$ C.I. for $\beta_1$: $b_1 \pm t^*_{n-2,\,\alpha/2}\, SE(b_1)$

Inference for single observation:

Estimated value for single future observation, $Y$, when $X = x$: $\hat{y} = b_0 + b_1 x$

$SE(\hat{y}) = s_e \sqrt{1 + \dfrac{1}{n} + \dfrac{(x - \bar{x})^2}{(n - 1) s_x^2}}$

A $100(1 - \alpha)\%$ C.I. for $Y$: $\hat{y} \pm t^*_{n-2,\,\alpha/2}\, SE(\hat{y})$

ANOVA F-test:

Test-Statistic for testing $H_0: \beta_1 = 0$:

$TS = \dfrac{MS(\text{Regression})}{MS(\text{Error})} = \dfrac{SS(\text{Regression}) / 1}{SS(\text{Error}) / (n - 2)} \sim F$ distribution, if $H_0$ is true, with $df_1 = 1$ and $df_2 = n - 2$.

Coefficient of Determination:

$R^2 = r^2 = \dfrac{SS(\text{Total}) - SS(\text{Error})}{SS(\text{Total})} = \dfrac{SS(\text{Regression})}{SS(\text{Total})}$
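
The regression quantities above fit together as in the following Python sketch, which works entirely from $r$, $s_x$ and $s_y$ as the sheet does. The function names (`simple_regression`, `se_prediction`) and the data are hypothetical. Critical values $t^*$ are left out because they would come from a table.

```python
import math
from statistics import mean, stdev


def simple_regression(x, y):
    """Fit y-hat = b0 + b1 x and return the sums of squares and standard errors."""
    n = len(x)
    x_bar, y_bar = mean(x), mean(y)
    s_x, s_y = stdev(x), stdev(y)
    # r = (1 / (n - 1)) * sum of z_x * z_y, written without forming z-scores
    r = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y)) / ((n - 1) * s_x * s_y)
    b1 = r * s_y / s_x
    b0 = y_bar - b1 * x_bar
    ss_total = (n - 1) * s_y ** 2
    ss_regression = b1 ** 2 * (n - 1) * s_x ** 2
    ss_error = ss_total - ss_regression
    s_e = math.sqrt(ss_error / (n - 2))          # standard error of the model
    se_b1 = s_e / math.sqrt((n - 1) * s_x ** 2)  # standard error of the slope
    return {
        "n": n, "x_bar": x_bar, "s_x": s_x,
        "b0": b0, "b1": b1, "s_e": s_e, "SE(b1)": se_b1,
        "F": (ss_regression / 1) / (ss_error / (n - 2)),
        "R2": ss_regression / ss_total,
    }


def se_prediction(fit, x_new):
    """SE(y-hat) for a single future observation at X = x_new."""
    n, s_x = fit["n"], fit["s_x"]
    extra = (x_new - fit["x_bar"]) ** 2 / ((n - 1) * s_x ** 2)
    return fit["s_e"] * math.sqrt(1 + 1 / n + extra)


# Hypothetical data for illustration only.
fit = simple_regression([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
print(fit)
print(se_prediction(fit, x_new=3))
```

For these made-up points $SS(\text{Total}) = 6$ and $SS(\text{Regression}) = 3.6$, so $R^2 = 0.6$ and $F = 3.6 / 0.8 = 4.5$.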

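Test-Statistic for testing $H_0: \beta_1 = 0$ (t-test for the slope):

$TS = \dfrac{b_1 - 0}{SE(b_1)} \sim t$ distribution with $df = n - 2$ if $H_0$ is true.

Inference for population mean:

Estimated value for mean when $X = x$: $\hat{\mu}\{Y \mid X = x\} = \hat{\mu} = b_0 + b_1 x$

$SE(\hat{\mu}) = s_e \sqrt{\dfrac{1}{n} + \dfrac{(x - \bar{x})^2}{(n - 1) s_x^2}}$

A $100(1 - \alpha)\%$ C.I. for $\mu$: $\hat{\mu} \pm t^*_{n-2,\,\alpha/2}\, SE(\hat{\mu})$

Chi-Square Test: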

$TS = \sum_{i=1}^{k} \dfrac{(\text{Obs} - \text{Exp})^2}{\text{Exp}} \sim \chi^2$ distribution with $df = k - 1$ if $H_0$ is true.
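
A short Python sketch of the chi-square statistic is given below. The function name and the counts are hypothetical, and the expected counts are assumed to be supplied by the caller (for example from hypothesized category proportions).

```python
def chi_square_statistic(observed, expected):
    """Return (TS, df) with TS = sum((Obs - Exp)^2 / Exp) and df = k - 1."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected need the same number of categories")
    ts = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    return ts, len(observed) - 1


# Hypothetical counts: 60 observations over k = 3 equally likely categories.
ts, df = chi_square_statistic([18, 22, 20], [20, 20, 20])
print(f"TS = {ts:.2f} with df = {df}")
```

Here $TS = (4 + 4 + 0)/20 = 0.4$ on 2 degrees of freedom.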

Expected Values:

- $\mu = E[X] = \sum x\, p(x)$

- $\sigma^2 = Var[X] = \sum (x - \mu)^2 p(x)$

- $E[aX \pm bY \pm c] = aE[X] \pm bE[Y] \pm c$

- If $X$ and $Y$ are independent, then $Var[aX \pm bY \pm c] = a^2 Var[X] + b^2 Var[Y]$
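
The expected-value rules can be checked numerically with a small Python sketch. The probability tables and function names are hypothetical. A negative coefficient `b` stands for the "minus" case, and the variance rule is only valid under the stated assumption that $X$ and $Y$ are independent.

```python
def expected_value(pmf):
    """mu = sum of x * p(x) for a discrete distribution given as {x: p(x)}."""
    return sum(x * p for x, p in pmf.items())


def variance(pmf):
    """sigma^2 = sum of (x - mu)^2 * p(x)."""
    mu = expected_value(pmf)
    return sum((x - mu) ** 2 * p for x, p in pmf.items())


def linear_combination(a, pmf_x, b, pmf_y, c=0.0):
    """Mean and variance of aX + bY + c, assuming X and Y are independent."""
    mean = a * expected_value(pmf_x) + b * expected_value(pmf_y) + c
    var = a ** 2 * variance(pmf_x) + b ** 2 * variance(pmf_y)  # c adds no variance
    return mean, var


# Hypothetical distributions for illustration only.
pmf_x = {0: 0.2, 1: 0.5, 2: 0.3}
pmf_y = {1: 0.5, 3: 0.5}
print(expected_value(pmf_x), variance(pmf_x))
print(linear_combination(2, pmf_x, -3, pmf_y, c=1))  # 2X - 3Y + 1
```

Note that the variances add even when the random variables are subtracted, because the coefficients enter squared.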

Standardized values:

$z\text{ values} = \dfrac{\text{Observation} - \text{Mean}}{\text{Standard Deviation}}$

You might also like

- Proceedings of the Metallurgical Society of the Canadian Institute of Mining and Metallurgy: Proceedings of the International Symposium on Fracture Mechanics, Winnipeg, Canada, August 23-26, 1987From EverandProceedings of the Metallurgical Society of the Canadian Institute of Mining and Metallurgy: Proceedings of the International Symposium on Fracture Mechanics, Winnipeg, Canada, August 23-26, 1987W. R. TysonNo ratings yet

- Shaktiman Tech. Tools (India) - Patiala Data SheetDocument1 pageShaktiman Tech. Tools (India) - Patiala Data Sheetਗਗਨ ਜੋਤNo ratings yet

- Calculation of Load Capacity of Spur and Helical Gears - Calculation of Tooth Bending StrengthDocument2 pagesCalculation of Load Capacity of Spur and Helical Gears - Calculation of Tooth Bending StrengthКирилл0% (1)

- STI 463 ModelDocument1 pageSTI 463 Modelਗਗਨ ਜੋਤNo ratings yet

- Module Gear DataDocument2 pagesModule Gear DataMuhammad HaiderNo ratings yet

- 2938Document8 pages2938Risira Erantha KannangaraNo ratings yet

- Part List Rga-2315h1jt-11 2-La4-E1 (GB Cv05)Document4 pagesPart List Rga-2315h1jt-11 2-La4-E1 (GB Cv05)restu yanuar salamNo ratings yet

- Synchronous Belt Drives - Automotive PulleysDocument14 pagesSynchronous Belt Drives - Automotive Pulleystv-locNo ratings yet

- Round Washers SN 808: July 2000Document1 pageRound Washers SN 808: July 2000Maurício Duarte de AndradeNo ratings yet

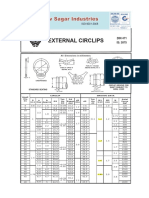

- External CirclipDocument2 pagesExternal CirclipDivyang MistryNo ratings yet

- 1 2312 PDFDocument4 pages1 2312 PDFFrancisco CarrascoNo ratings yet

- Iso 2901 2016Document9 pagesIso 2901 2016Brandon Vicuña GalánNo ratings yet

- 6885 1Document1 page6885 1ajeshNo ratings yet

- HVATACDocument2 pagesHVATACHrvatski strelicarski savezNo ratings yet

- 20 INV SPL. 2.50 MOD 20 PA 22F01113 HB IND. 55x50x MM (PART - ModelDocument1 page20 INV SPL. 2.50 MOD 20 PA 22F01113 HB IND. 55x50x MM (PART - Modelhb IndustriesNo ratings yet

- Total DesignDocument26 pagesTotal DesignsathishjeyNo ratings yet

- Home Search Collections Journals About Contact Us My IopscienceDocument8 pagesHome Search Collections Journals About Contact Us My IopscienceCan CemreNo ratings yet

- Din 333Document1 pageDin 333premeoNo ratings yet

- GS52Document3 pagesGS52S.Hasan MirasadiNo ratings yet

- DIN HANdsfsDBOOK 1 PDFDocument6 pagesDIN HANdsfsDBOOK 1 PDFsohaiblatif3No ratings yet

- Table For Gear DrawingDocument1 pageTable For Gear DrawingprasannaNo ratings yet

- Trocoide Thurman PDFDocument4 pagesTrocoide Thurman PDFmgualdiNo ratings yet

- Parallel Key Calculation According To DIN 6892Document21 pagesParallel Key Calculation According To DIN 6892zahirshah1436923No ratings yet

- Hand Book G 06.05Document68 pagesHand Book G 06.05Jon Morales100% (1)

- Pins and KeysDocument12 pagesPins and KeysSam GillilandNo ratings yet

- Parallel Keys Is.2048.1983Document9 pagesParallel Keys Is.2048.1983sbalajimNo ratings yet

- WEG Roller Table 50040456 Brochure EnglishDocument12 pagesWEG Roller Table 50040456 Brochure EnglishjmartinezmoNo ratings yet

- HeliCoil Insert Specs ImperialDocument1 pageHeliCoil Insert Specs ImperialAce Industrial SuppliesNo ratings yet

- Calculation of Gear Dimensions - KHK Gears PDFDocument25 pagesCalculation of Gear Dimensions - KHK Gears PDFlawlawNo ratings yet

- Thread Standard BSPDocument7 pagesThread Standard BSPĐạt TrầnNo ratings yet

- ISO#TR 14638 1995 (E) - Image 600 PDF DocumentDocument5 pagesISO#TR 14638 1995 (E) - Image 600 PDF DocumentCamila QuidorneNo ratings yet

- BS Au 225-1988 (2000) Iso 7803-1987Document6 pagesBS Au 225-1988 (2000) Iso 7803-1987Jeff Anderson CollinsNo ratings yet

- RCPretorius AWS Weld Strength CalculationsDocument1 pageRCPretorius AWS Weld Strength CalculationsrcpretoriusNo ratings yet

- ISO 724 1993 ISO Diş Açma StandardıDocument10 pagesISO 724 1993 ISO Diş Açma StandardıGANESH GNo ratings yet

- Iso 6336 5 1996 PDFDocument13 pagesIso 6336 5 1996 PDFThanh LongNo ratings yet

- ASM Material Data Sheet PDFDocument2 pagesASM Material Data Sheet PDFtiele_barcelosNo ratings yet

- Characteristics of Trochoids and Their Application To Determining Gear Teeth Fillet ShapesDocument14 pagesCharacteristics of Trochoids and Their Application To Determining Gear Teeth Fillet ShapesJohn FelemegkasNo ratings yet

- Bearing SU004Document8 pagesBearing SU004Rashid AliNo ratings yet

- 1 50ug Pipe and TubesDocument92 pages1 50ug Pipe and TubesTC İsmail TalayNo ratings yet

- Norma de Tallado y Acanalado de EngranajesDocument7 pagesNorma de Tallado y Acanalado de EngranajesJohn Walter RodriguezNo ratings yet

- Technical Data TB2448 Ver March 9, 2012Document13 pagesTechnical Data TB2448 Ver March 9, 2012anhthoNo ratings yet

- Din 6798 ADocument3 pagesDin 6798 ADuong BachNo ratings yet

- Schallater Gaas80 Gaa100 DownloadDocument4 pagesSchallater Gaas80 Gaa100 DownloadRakesh SrivastavaNo ratings yet

- DIN 13-1 (1999) - General Purpose ISO Metric Screw ThreadsDocument4 pagesDIN 13-1 (1999) - General Purpose ISO Metric Screw Threadsbriano100% (1)

- Shaft KeywayDocument8 pagesShaft KeywayturboconchNo ratings yet

- SENTRON LV36 Complete English 2014Document284 pagesSENTRON LV36 Complete English 2014charlonNo ratings yet

- Gears FundamentalDocument24 pagesGears FundamentalVIMAL ANo ratings yet

- A Crowning Achievement For Automotive ApplicationsDocument10 pagesA Crowning Achievement For Automotive ApplicationsCan CemreNo ratings yet

- Free Download Here: Iso 2768 MK PDFDocument2 pagesFree Download Here: Iso 2768 MK PDFbksinghsNo ratings yet

- Worm GearingDocument22 pagesWorm Gearingkismugan0% (1)

- Porca Din 439 BDocument7 pagesPorca Din 439 BFrank NunesNo ratings yet

- Bend Calculation DIN 6935Document2 pagesBend Calculation DIN 6935nadimuddinNo ratings yet

- Contact Stress Analysis of Spur Gear Teeth Pair PDFDocument6 pagesContact Stress Analysis of Spur Gear Teeth Pair PDFCan CemreNo ratings yet

- Parallel Pin Din 6325-2.5X12 PDFDocument1 pageParallel Pin Din 6325-2.5X12 PDFFer VFNo ratings yet

- Formula Sheet (1) Descriptive Statistics: Quartiles (n+1) /4 (n+1) /2 (The Median) 3 (n+1) /4Document13 pagesFormula Sheet (1) Descriptive Statistics: Quartiles (n+1) /4 (n+1) /2 (The Median) 3 (n+1) /4Tom AfaNo ratings yet

- List MF9: MATHEMATICS (8709, 9709) Higher Mathematics (8719) STATISTICS (0390)Document8 pagesList MF9: MATHEMATICS (8709, 9709) Higher Mathematics (8719) STATISTICS (0390)Muhammad FarhanNo ratings yet

- Formulas 12 EngDocument2 pagesFormulas 12 EngJoseNo ratings yet

- FormulaeDocument4 pagesFormulaeode89aNo ratings yet

- Formula Sheet and Statistical TablesDocument11 pagesFormula Sheet and Statistical TablesdonatchangeNo ratings yet

- Basic Statistical ConceptsDocument14 pagesBasic Statistical ConceptsVedha ThangavelNo ratings yet

- Tracer Study of PNU GraduatesDocument19 pagesTracer Study of PNU GraduatesMaorin SantosNo ratings yet

- MATLab Manual PDFDocument40 pagesMATLab Manual PDFAkhil C.O.No ratings yet

- CharacterSheet - Slayer LVL 7 - AlexDocument2 pagesCharacterSheet - Slayer LVL 7 - AlexMariano de la PeñaNo ratings yet

- Rubric For Talk ShowDocument1 pageRubric For Talk ShowRN Anunciacion100% (2)

- Endocrine System Lesson Plan-1Document2 pagesEndocrine System Lesson Plan-1Je Lan NieNo ratings yet

- Adt 11-1Document27 pagesAdt 11-1Paul Bustamante BermalNo ratings yet

- The Historical & Evolutionary Theory of The Origin of StateDocument5 pagesThe Historical & Evolutionary Theory of The Origin of StateMateen Ali100% (1)

- Codigos International 2001Document6 pagesCodigos International 2001Scan DieselNo ratings yet

- The Use of Mathematica in Control Engineering: Neil Munro Control Systems Centre Umist Manchester, EnglandDocument68 pagesThe Use of Mathematica in Control Engineering: Neil Munro Control Systems Centre Umist Manchester, EnglandBalaji SevuruNo ratings yet

- Expert Guide To Cognos Audit DataDocument30 pagesExpert Guide To Cognos Audit Dataleonardo russoNo ratings yet

- Gestalt Peeling The Onion Csi Presentatin March 12 2011Document6 pagesGestalt Peeling The Onion Csi Presentatin March 12 2011Adriana Bogdanovska ToskicNo ratings yet

- Annotated BibliographyDocument17 pagesAnnotated Bibliographyapi-2531405230% (1)

- Stem Collaborative Lesson PlanDocument5 pagesStem Collaborative Lesson Planapi-606125340No ratings yet

- Prompt Cards For DiscussionsDocument2 pagesPrompt Cards For DiscussionsDaianaNo ratings yet

- Diana's DisappointmentDocument3 pagesDiana's DisappointmentDubleau W100% (4)

- Primavera P6Document2 pagesPrimavera P6tutan12000No ratings yet

- Art Intg Proj CL 6-10Document5 pagesArt Intg Proj CL 6-10Sarthak JoshiNo ratings yet

- Anthropology and Architecture: A Misplaced Conversation: Architectural Theory ReviewDocument4 pagesAnthropology and Architecture: A Misplaced Conversation: Architectural Theory ReviewSonora GuantánamoNo ratings yet

- Civil Engineering, Construction, Petrochemical, Mining, and QuarryingDocument7 pagesCivil Engineering, Construction, Petrochemical, Mining, and QuarryinghardeepcharmingNo ratings yet

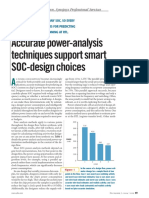

- Accurate Power-Analysis Techniques Support Smart SOC-design ChoicesDocument4 pagesAccurate Power-Analysis Techniques Support Smart SOC-design ChoicesSaurabh KumarNo ratings yet

- Aashto T 99 (Method)Document4 pagesAashto T 99 (Method)이동욱100% (1)

- Ed 501 Reading Lesson PlanDocument5 pagesEd 501 Reading Lesson Planapi-362126777No ratings yet

- Autism Awareness Carousel Project ProposalDocument5 pagesAutism Awareness Carousel Project ProposalMargaret Franklin100% (1)

- How To Live To Be 200Document3 pagesHow To Live To Be 200Anonymous 117cflkW9W100% (2)

- Movie Theater Building DesignDocument16 pagesMovie Theater Building DesignPrathamesh NaikNo ratings yet

- Oracle AlertsDocument3 pagesOracle AlertstsurendarNo ratings yet

- Population, Sample and Sampling TechniquesDocument8 pagesPopulation, Sample and Sampling TechniquesMuhammad TalhaNo ratings yet

- Opnet BasicsDocument9 pagesOpnet BasicsDuong Duc HungNo ratings yet

- Dr. Nirav Vyas Numerical Method 4 PDFDocument156 pagesDr. Nirav Vyas Numerical Method 4 PDFAshoka Vanjare100% (1)

- A&O SCI 104 Sylabus PDFDocument2 pagesA&O SCI 104 Sylabus PDFnadimNo ratings yet