The Problem of Overfitting - Coursera

Uploaded by

Brian Ramiro Oporto Quispe

The Problem of Overfitting | Coursera https://www.coursera.org/learn/machine-learning/supplement/VTe37/th...

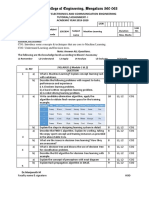

The Problem of Overfitting

Consider the problem of predicting $y$ from $x \in \mathbb{R}$. The leftmost figure below shows the result of fitting $y = \theta_0 + \theta_1 x$ to a dataset. We see that the data doesn't really lie on a straight line, and so the fit is not very good.

Instead, if we had added an extra feature $x^2$ and fit $y = \theta_0 + \theta_1 x + \theta_2 x^2$, then we would obtain a slightly better fit to the data (see middle figure). Naively, it might seem that the more features we add, the better. However, there is also a danger in adding too many features: the rightmost figure is the result of fitting a 5th-order polynomial $y = \sum_{j=0}^{5} \theta_j x^j$. We see that even though the fitted curve passes through the data perfectly, we would not expect this to be a very good predictor of, say, housing prices ($y$) for different living areas ($x$). Without formally defining what these terms mean, we'll say the figure on the left shows an instance of underfitting, in which the data clearly shows structure not captured by the model, and the figure on the right is an example of overfitting.
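The three fits described above can be sketched with NumPy. This is a minimal illustration, not the course's own code: the six data points are made up, and `training_error` is a hypothetical helper name. With six points, a 5th-order polynomial has six parameters and can pass through every point, so its training error drops to essentially zero.

```python
import numpy as np

# Hypothetical toy data (made up for illustration): six (x, y) points.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
y = np.array([1.2, 2.6, 3.1, 3.9, 4.1, 4.6])

def training_error(degree):
    """Least-squares polynomial fit of the given degree; returns the mean squared error on the training points."""
    coeffs = np.polyfit(x, y, degree)          # theta_j, highest power first
    residuals = np.polyval(coeffs, x) - y
    return float(np.mean(residuals ** 2))

# Degree 1 is the straight line, degree 2 adds the x^2 feature,
# degree 5 is the 5th-order polynomial from the text.
errors = {d: training_error(d) for d in (1, 2, 5)}
for d, e in errors.items():
    print(f"degree {d}: training MSE = {e:.6f}")
```

The training error can only shrink as features are added, which is why it is not, by itself, evidence of a good predictor.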

Underfitting, or high bias, is when the form of our hypothesis function $h$ maps poorly to the trend of the data. It is usually caused by a function that is too simple or uses too few features. At the other extreme, overfitting, or high variance, is caused by a hypothesis function that fits the available data but does not generalize well to predict new data. It is usually caused by a complicated function that creates a lot of unnecessary curves and angles unrelated to the data.
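One way to see the difference between the two failure modes is to compare the error on the training points with the error on inputs the model has not seen. The sketch below assumes a hypothetical quadratic trend (`true_curve`) and a hand-picked alternating disturbance of ±0.5 on the training targets; neither comes from the course material, and both are chosen only so the example is deterministic.

```python
import numpy as np

def true_curve(x):
    # Hypothetical underlying trend, assumed for this sketch: a quadratic.
    return 1.0 + 2.0 * x - 0.3 * x ** 2

# Six training points with a fixed alternating +/-0.5 disturbance
# (deterministic, so no random seed is needed).
x_train = np.arange(6, dtype=float)
y_train = true_curve(x_train) + 0.5 * np.array([1, -1, 1, -1, 1, -1])

# New inputs halfway between the training points, scored against the undisturbed trend.
x_new = x_train[:-1] + 0.5
y_new = true_curve(x_new)

def mse(coeffs, x, y):
    """Mean squared error of a fitted polynomial on the points (x, y)."""
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

results = {}
for degree in (1, 2, 5):
    coeffs = np.polyfit(x_train, y_train, degree)
    results[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_new, y_new))
    print(f"degree {degree}: train MSE = {results[degree][0]:.4f}, "
          f"new-data MSE = {results[degree][1]:.4f}")
```

Under these assumptions the degree-1 fit has the largest training error (underfitting), while the degree-5 fit has a training error near zero but the largest error on the new inputs (overfitting).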

This terminology is applied to both linear and logistic regression. There are two main options to address the issue of overfitting:

1) Reduce the number of features:

   - Manually select which features to keep.
   - Use a model selection algorithm (studied later in the course).
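As a rough illustration of choosing how many features to keep, the sketch below scores each candidate feature count on a separate validation set and keeps the count with the lowest error. Hold-out validation is an assumption here, used as a stand-in; the text does not say which model selection algorithm the course covers. The data and the helper names `design_matrix` and `validation_error` are hypothetical.

```python
import numpy as np

def design_matrix(x, k):
    # Columns 1, x, x^2, ..., x^k: the intercept plus the first k polynomial features.
    return np.vander(x, k + 1, increasing=True)

def validation_error(k, x_tr, y_tr, x_val, y_val):
    """Fit theta by least squares on the training set, then score on the validation set."""
    theta, *_ = np.linalg.lstsq(design_matrix(x_tr, k), y_tr, rcond=None)
    return float(np.mean((design_matrix(x_val, k) @ theta - y_val) ** 2))

# Hypothetical data: a quadratic trend with a fixed alternating disturbance on the
# training targets, and undisturbed validation targets between the training inputs.
x_tr = np.arange(6, dtype=float)
y_tr = 1.0 + 2.0 * x_tr - 0.3 * x_tr ** 2 + 0.5 * np.array([1, -1, 1, -1, 1, -1])
x_val = x_tr[:-1] + 0.5
y_val = 1.0 + 2.0 * x_val - 0.3 * x_val ** 2

# Try keeping the first k features for k = 1..5 and pick the best on validation data.
val_errors = {k: validation_error(k, x_tr, y_tr, x_val, y_val) for k in range(1, 6)}
best_k = min(val_errors, key=val_errors.get)
print("number of features kept:", best_k)
```

The key design choice is that the comparison uses data not seen during fitting; on the training set alone, the largest feature count would always look best.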
