
Perceptrons /cs502a/8 62

NEURAL NETWORKS

Unit - 8

PERCEPTRONS

Objective: After studying Unit 8, the reader should be able to explain what a perceptron is, describe the perceptron architecture, and apply its training algorithms with suitable examples.

Contents

8.0.0 Introduction

8.1.0 Perceptron Model.

8.2.0 Single layer discrete perceptron networks.

8.2.1 Summary of the discrete perceptron training algorithm.

8.3.0 Single layer continuous perceptron networks.

8.3.1 Summary of the continuous perceptron training algorithm.

8.4.0 Perceptron convergence theorem

8.5.0 Problems and limitations of the perceptron training algorithms.

8.5.1 Limitations of perceptrons.

8.6.0 Practice questions.

8.0.0 Introduction

The perceptron is one of the earliest models of the artificial neuron. It was proposed by Rosenblatt in 1958. It is a single-layer neural network whose weights and biases can be trained to produce the correct target vector when presented with the corresponding input vector. The perceptron is a program that learns concepts: by repeatedly "studying" the examples presented to it, it learns to respond with True (1) or False (0) to the inputs we present. The training technique used is called the perceptron learning rule. The perceptron generated great interest because of its ability to generalize from its training vectors and to work with randomly distributed connections. Perceptrons are especially suited to simple problems in pattern classification. This unit also presents the perceptron convergence theorem.

8.1.0 Perceptron Model

In the 1960s, perceptrons created a great deal of interest and optimism. Rosenblatt (1962) proved a remarkable theorem about perceptron learning. Widrow (Widrow 1961, 1963, Widrow and Angell 1962, Widrow and Hoff 1960) made a number of convincing demonstrations of perceptron-like systems. Perceptron learning is of the supervised type. A perceptron is trained by presenting a set of patterns to its input, one at a time, and adjusting the weights until the desired output occurs for each of them.

School of Continuing and Distance Education – JNT University


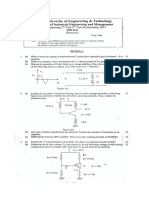

The schematic diagram of the perceptron is shown in Fig. 8.1. Its synaptic weights are denoted by w1, w2, . . ., wn. The inputs applied to the perceptron are denoted by x1, x2, . . ., xn. The externally applied bias is denoted by b.

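Fig. 8.1 Schematic diagram of perceptron: the inputs x1, x2, . . ., xn are weighted by w1, w2, . . ., wn and summed with the bias b to give net, which passes through the hard limiter f(.) to produce the output o.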

The net input to the activation of the neuron is written as

$$\mathrm{net} = \sum_{i=1}^{n} w_i x_i + b \tag{8.1}$$

The output of the perceptron is written as

$$o = f(\mathrm{net}) \tag{8.2}$$

where f(.) is the activation function of the perceptron. Depending upon the type of activation function, the perceptron may be classified into two types:

i) Discrete perceptron, in which the activation function is the hard limiter or sgn(.) function.

ii) Continuous perceptron, in which the activation function is a sigmoid function, which is differentiable.

The input-output relation may be rearranged by treating the bias as a weight, w0 = b, attached to a fixed input x0 = 1.0. Then

$$\mathrm{net} = \sum_{i=0}^{n} w_i x_i = WX \tag{8.3}$$

where $W = [w_0, w_1, w_2, \ldots, w_n]$ and $X = [x_0, x_1, x_2, \ldots, x_n]^T$.

The learning rule for the perceptron has been discussed in unit 7. The learning of these two models is discussed specifically in the following sections.
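The augmented net input (8.3) and the two activation types can be sketched in a few lines of Python. This is only an illustration: the function names and the weight values are hypothetical, the bipolar form of the sigmoid is the one used later in this unit, and mapping net = 0 to -1 is an assumed convention for the hard limiter.

```python
import math

def net_input(weights, inputs):
    """Net input of (8.3): weights[0] is the bias weight w0, and x0 = 1.0 is prepended."""
    augmented = [1.0] + list(inputs)
    return sum(w * x for w, x in zip(weights, augmented))

def hard_limiter(net):
    """Discrete activation sgn(.); net = 0 is mapped to -1 (assumed convention)."""
    return 1 if net > 0 else -1

def bipolar_sigmoid(net):
    """Continuous, differentiable activation with outputs in (-1, 1)."""
    return 2.0 / (1.0 + math.exp(-net)) - 1.0

weights = [-0.5, 1.0, 1.0]      # [w0 = b, w1, w2], illustrative values only
x = [1.0, 0.0]                  # two inputs x1, x2
net = net_input(weights, x)     # -0.5*1.0 + 1.0*1.0 + 1.0*0.0
discrete_out = hard_limiter(net)
continuous_out = bipolar_sigmoid(net)
```

The same net value feeds both activations; only the final nonlinearity distinguishes the discrete perceptron from the continuous one.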

8.2.0 Single Layer Discrete Perceptron Networks

For the discrete perceptron, the activation function is the hard limiter or sgn(.) function. The most popular application of the discrete perceptron is pattern classification. To develop insight into the behavior of a pattern classifier, it is necessary to plot a map of the decision regions in the n-dimensional space spanned by the n input variables. The two decision regions are separated by a hyperplane defined by

$$\sum_{i=0}^{n} w_i x_i = 0 \tag{8.4}$$

This is illustrated in Fig. 8.2 for two input variables x1 and x2, for which the decision boundary takes the form of a straight line.
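As a worked instance of the two-input case, the hyperplane condition with x0 = 1 can be solved for x2, assuming w2 is nonzero. The numerical weights in the second line are illustrative values chosen here, not taken from the text.

```latex
w_0 + w_1 x_1 + w_2 x_2 = 0
\quad\Longrightarrow\quad
x_2 = -\frac{w_1}{w_2}\,x_1 - \frac{w_0}{w_2}, \qquad w_2 \neq 0 .

\text{For example, } w_0 = -0.5,\ w_1 = w_2 = 1 \ \text{ gives the line } \ x_2 = -x_1 + 0.5 .
```

Points with $w_0 + w_1 x_1 + w_2 x_2 > 0$ lie on one side of this line and all remaining points on the other, which is what makes the weights a two-class classifier.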

Fig. 8.2 Illustration of the hyperplane (in this example, a straight line) as the decision boundary for a two-dimensional, two-class pattern classification problem. Axes: x1 and x2; the line separates Class C1 from Class C2.

For the perceptron to function properly, the two classes C1 and C2 must be linearly separable. This, in turn, means that the patterns to be classified must be sufficiently separated from each other to ensure that the decision surface consists of a hyperplane. This is illustrated in Fig. 8.3.

Fig. 8.3 (a) A pair of linearly separable patterns, Class C1 and Class C2, with the decision boundary between them. (b) A pair of nonlinearly separable patterns.

In Fig. 8.3(a), the two classes C1 and C2 are sufficiently separated from each other to draw a hyperplane (in this case a straight line) as the decision boundary. If, however, the two classes C1 and C2 are allowed to move too close to each other, as in Fig. 8.3(b), they become nonlinearly separable, a situation that is beyond the computing capability of the perceptron.

N. Yadaiah, Dept. of EEE, JNTU College of Engineering, Hyderabad

Suppose then that the input variables of the perceptron originate from two linearly separable classes. Let $\mathcal{X}_1$ be the subset of training vectors X1(1), X1(2), . . . that belong to class C1, and let $\mathcal{X}_2$ be the subset of training vectors X2(1), X2(2), . . . that belong to class C2. The union of $\mathcal{X}_1$ and $\mathcal{X}_2$ is the complete training set $\mathcal{X}$. Given the sets of vectors $\mathcal{X}_1$ and $\mathcal{X}_2$ to train the classifier, the training process involves adjusting the weight vector W in such a way that the two classes C1 and C2 are separated. That is, there exists a weight vector W such that we may write

$$\begin{aligned} WX &> 0 \quad \text{for every input vector } X \text{ belonging to class } C_1 \\ WX &\le 0 \quad \text{for every input vector } X \text{ belonging to class } C_2 \end{aligned} \tag{8.5}$$

In the second condition, the choice that the input vector X belongs to class C2 when WX = 0 is arbitrary.

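The algorithm for updating the weights may be formulated as follows:

1. If the kth member of the training set, $X_k$, is correctly classified by the weight vector $W^k$ computed at the kth iteration of the algorithm, no correction is made to the weight vector of the perceptron, in accordance with the rule

$$W^{k+1} = W^{k} \quad \text{if } W^{k}X_k > 0 \text{ and } X_k \text{ belongs to class } C_1 \tag{8.6}$$

$$W^{k+1} = W^{k} \quad \text{if } W^{k}X_k \le 0 \text{ and } X_k \text{ belongs to class } C_2 \tag{8.7}$$

2. Otherwise, the weight vector of the perceptron is updated in accordance with the rule

$$W^{(k+1)T} = W^{kT} - \eta X_k \quad \text{if } W^{k}X_k > 0 \text{ and } X_k \text{ belongs to class } C_2 \tag{8.8a}$$

$$W^{(k+1)T} = W^{kT} + \eta X_k \quad \text{if } W^{k}X_k \le 0 \text{ and } X_k \text{ belongs to class } C_1 \tag{8.8b}$$

where the learning-rate parameter η controls the adjustment applied to the weight vector. With the desired output $d_k = +1$ for class C1 and $d_k = -1$ for class C2, and the actual output $o_k = \mathrm{sgn}(W^k X_k)$ (taking $o_k = -1$ when $W^k X_k = 0$), equations (8.8a) and (8.8b) may be written as the single general expression

$$W^{(k+1)T} = W^{kT} + \frac{\eta}{2}\,(d_k - o_k)\,X_k \tag{8.9}$$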
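A single application of the update rule (8.9) can be sketched as follows. The function name, the learning rate of 0.5 and the sample vector are assumptions made for illustration; desired outputs are taken as +1 for class C1 and -1 for class C2, and sgn maps a zero net input to -1, in line with the choice of class C2 when WX = 0.

```python
def sgn(net):
    """Hard limiter; net = 0 maps to -1, matching the choice of class C2 when WX = 0."""
    return 1 if net > 0 else -1

def perceptron_update(weights, x_aug, d, eta):
    """One step of rule (8.9): W <- W + (eta / 2) * (d - o) * X.

    weights and x_aug are augmented (index 0 holds the bias weight and x0 = 1.0);
    d is +1 for class C1 and -1 for class C2.
    """
    net = sum(w * x for w, x in zip(weights, x_aug))
    o = sgn(net)
    return [w + 0.5 * eta * (d - o) * x for w, x in zip(weights, x_aug)]

w = [0.0, 0.0, 0.0]
x = [1.0, 2.0, -1.0]          # augmented pattern from class C1 (d = +1)

# First presentation: net = 0 gives o = -1, so the pattern is misclassified
# and the weights move by eta * X.
w_after_miss = perceptron_update(w, x, d=1, eta=0.5)

# Second presentation: the pattern is now on the correct side, so d - o = 0
# and the weights are left unchanged.
w_after_hit = perceptron_update(w_after_miss, x, d=1, eta=0.5)
```

Because d - o is either 0 or ±2, the factor η/2 reproduces the ±η corrections of (8.8a) and (8.8b) without any explicit branching on the class.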

8.2.1 Summary of the discrete perceptron training algorithm

Given are P training pairs of patterns

{X1, d1, X2, d2, . . ., XP, dP}, where Xi is (n×1), di is (1×1), i = 1, 2, . . ., P. Define w0 = b as the bias and x0 = 1.0; the augmented input vector Xi is then ((n+1)×1).

In the following, k denotes the training step and p denotes the step counter within the training cycle.

Step 1: $\eta > 0$ is chosen and $E_{max}$ is defined.

Step 2: Initialize the weights W = [wij] at small random values; the augmented size is (n+1)×1. Initialize the counters and the error function as:

$k \leftarrow 1,\; p \leftarrow 1,\; E \leftarrow 0$.

Step 3: The training cycle begins. Apply the input and compute the output:

$X \leftarrow X_p,\; d \leftarrow d_p,\; o \leftarrow \mathrm{sgn}(WX)$

Step 4: Update the weights: $W^T \leftarrow W^T + \frac{1}{2}\eta\,(d - o)\,X$

Step 5: Compute the cycle error: $E \leftarrow \frac{1}{2}(d - o)^2 + E$

Step 6: If p < P, then $p \leftarrow p+1$, $k \leftarrow k+1$ and go to step 3; otherwise go to step 7.

Step 7: The training cycle is completed. For $E < E_{max}$, terminate the training session and output the weights and k. If $E > E_{max}$, then $E \leftarrow 0$, $p \leftarrow 1$ and enter a new training cycle by going to step 3.
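The seven steps above can be sketched as one short Python function. Several details are assumptions of this sketch rather than part of the algorithm as stated: the weights start at zero instead of small random values so that the run is reproducible, `max_cycles` is an added safety cap, k simply counts pattern presentations, and the AND data with bipolar targets is an illustrative training set.

```python
def sgn(net):
    """Hard limiter; net = 0 maps to -1."""
    return 1 if net > 0 else -1

def train_discrete_perceptron(patterns, targets, eta=1.0, e_max=0.5, max_cycles=1000):
    """Discrete perceptron training; returns (weights, k, E) at termination.

    patterns: list of n-dimensional inputs (not yet augmented).
    targets:  desired outputs, +1 or -1.
    max_cycles is a safety cap added here; it is not part of the original steps.
    """
    n = len(patterns[0])
    weights = [0.0] * (n + 1)                 # Step 2 (zeros instead of random values)
    k = 0                                     # number of pattern presentations so far
    error = 0.0
    for _ in range(max_cycles):
        error = 0.0                           # E <- 0 at the start of each cycle
        for x, d in zip(patterns, targets):   # Steps 3 to 6: one pass over all P pairs
            x_aug = [1.0] + list(x)           # x0 = 1.0 carries the bias weight w0
            o = sgn(sum(w * xi for w, xi in zip(weights, x_aug)))
            weights = [w + 0.5 * eta * (d - o) * xi for w, xi in zip(weights, x_aug)]
            error += 0.5 * (d - o) ** 2       # Step 5: accumulate the cycle error
            k += 1
        if error < e_max:                     # Step 7: stop when the cycle error is small
            break
    return weights, k, error

# Logical AND with bipolar targets, a linearly separable problem.
and_inputs = [[0, 0], [0, 1], [1, 0], [1, 1]]
and_targets = [-1, -1, -1, 1]
weights, steps, cycle_error = train_discrete_perceptron(and_inputs, and_targets)
predictions = [sgn(weights[0] + weights[1] * a + weights[2] * b) for a, b in and_inputs]
```

Each misclassification contributes 2 to the cycle error, so any `e_max` below 2 makes the loop stop only after a cycle with no errors at all.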

In general, a continuous perceptron element with sigmoidal activation function will be used

to facilitate the training of multi layer feed forward networks used for classification and

recognition.

8.3.0 Single-Layer Continuous Perceptron networks

In this section, the concept of an error function in multidimensional weight space is introduced. Also, the hard limiter (sgn(.)) is replaced by a continuous activation function, which gives the continuous perceptron. Introducing this continuous activation function has two advantages: (i) finer control over the training procedure and (ii) the differentiability of the activation function, which is used for computation of the error gradient.

The gradient, or steepest descent, method is used to update the weights. Starting from any arbitrary weight vector W, the gradient $\nabla E(W)$ of the current error function is computed. The next value of W is obtained by moving in the direction of the negative gradient along the multidimensional error surface. Therefore the modification of the weight vector may be written as

$$W^{(k+1)T} = W^{kT} - \eta\,\nabla E(W^{k}) \tag{8.10}$$

where η is the learning constant, a positive constant, and the superscript k denotes the step number. Let us define the error function between the desired output $d_k$ and the actual output $o_k$ as

$$E_k = \frac{1}{2}\,(d_k - o_k)^2 \tag{8.11a}$$

or

$$E_k = \frac{1}{2}\left[d_k - f(W^{k}X)\right]^2 \tag{8.11b}$$

where the coefficient ½ in front of the error expression is only for convenience in simplifying the expression of the gradient, and it does not affect the location of the minimum of the error function. The error minimization algorithm (8.10) requires computation of the gradient of the error function (8.11), which may be written as

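$$\nabla E(W^{k}) = \frac{1}{2}\,\nabla\left[d_k - f(\mathrm{net}_k)\right]^2 \tag{8.12}$$

The (n+1)-dimensional gradient vector is defined as

$$\nabla E(W^{k}) = \begin{bmatrix} \partial E/\partial w_0 \\ \partial E/\partial w_1 \\ \vdots \\ \partial E/\partial w_n \end{bmatrix} \tag{8.13}$$

Using (8.12), we obtain the gradient vector as

$$\nabla E(W^{k}) = -(d_k - o_k)\,f'(\mathrm{net}_k) \begin{bmatrix} \partial(\mathrm{net}_k)/\partial w_0 \\ \partial(\mathrm{net}_k)/\partial w_1 \\ \vdots \\ \partial(\mathrm{net}_k)/\partial w_n \end{bmatrix} \tag{8.14}$$

Since $\mathrm{net}_k = W^{k}X$, we have

$$\frac{\partial(\mathrm{net}_k)}{\partial w_i} = x_i, \quad \text{for } i = 0, 1, \ldots, n \tag{8.15}$$

($x_0 = 1$ for the bias element). Substituting (8.15) into (8.14), the gradient can be written as

$$\nabla E(W^{k}) = -(d_k - o_k)\,f'(\mathrm{net}_k)\,X \tag{8.16a}$$

or

$$\frac{\partial E}{\partial w_i} = -(d_k - o_k)\,f'(\mathrm{net}_k)\,x_i \quad \text{for } i = 0, 1, \ldots, n \tag{8.16b}$$

so that the weight adjustment becomes

$$\Delta w_i^{k} = -\eta\,\frac{\partial E}{\partial w_i} = \eta\,(d_k - o_k)\,f'(\mathrm{net}_k)\,x_i \tag{8.17}$$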
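One way to gain confidence in the gradient expression (8.16b) is to compare it with a finite-difference estimate of the error (8.11b). The sketch below does this for one weight vector and one pattern. The bipolar sigmoid of (8.18) is assumed as the activation, f' is itself estimated numerically so that no closed form is presupposed, and the weights, pattern and step size h are illustrative values.

```python
import math

def f(net):
    """Bipolar sigmoid, assumed here as the continuous activation."""
    return 2.0 / (1.0 + math.exp(-net)) - 1.0

def f_prime(net, h=1e-6):
    """Central-difference estimate of f'(net); no closed form is assumed."""
    return (f(net + h) - f(net - h)) / (2.0 * h)

def error(weights, x_aug, d):
    """E = 1/2 * (d - f(WX))^2, as in (8.11b)."""
    net = sum(w * x for w, x in zip(weights, x_aug))
    return 0.5 * (d - f(net)) ** 2

def analytic_gradient(weights, x_aug, d):
    """Gradient from (8.16b): dE/dw_i = -(d - o) * f'(net) * x_i."""
    net = sum(w * x for w, x in zip(weights, x_aug))
    o = f(net)
    return [-(d - o) * f_prime(net) * x for x in x_aug]

def numeric_gradient(weights, x_aug, d, h=1e-6):
    """Perturb each weight in turn and difference the error directly."""
    grad = []
    for i in range(len(weights)):
        up = list(weights)
        down = list(weights)
        up[i] += h
        down[i] -= h
        grad.append((error(up, x_aug, d) - error(down, x_aug, d)) / (2.0 * h))
    return grad

w = [0.1, -0.4, 0.3]
x = [1.0, 0.5, -1.5]          # x0 = 1.0 is the bias input
g_formula = analytic_gradient(w, x, d=1.0)
g_numeric = numeric_gradient(w, x, d=1.0)
max_gap = max(abs(a - b) for a, b in zip(g_formula, g_numeric))
```

A small `max_gap` indicates that the chain-rule expression and the direct numerical derivative agree to within the accuracy of the finite-difference step.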

Equation (8.17) is the training rule for the continuous perceptron. What remains is to calculate f'(net) in terms of the continuous perceptron output. Consider the bipolar activation function f(net) of the form

$$f(\mathrm{net}) = \frac{2}{1 + \exp(-\mathrm{net})} - 1 \tag{8.18}$$

Differentiating equation (8.18) with respect to net gives

$$f'(\mathrm{net}) = \frac{2\exp(-\mathrm{net})}{\left[1 + \exp(-\mathrm{net})\right]^2} \tag{8.19}$$

The following identity can be used in finding the derivative of the function:

$$\frac{2\exp(-\mathrm{net})}{\left[1 + \exp(-\mathrm{net})\right]^2} = \frac{1}{2}\,(1 - o^2) \tag{8.20}$$


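The relation (8.20) may be verified as follows. Since $o = f(\mathrm{net}) = \dfrac{1 - \exp(-\mathrm{net})}{1 + \exp(-\mathrm{net})}$,

$$\frac{1}{2}\,(1 - o^2) = \frac{1}{2}\left[1 - \left(\frac{1 - \exp(-\mathrm{net})}{1 + \exp(-\mathrm{net})}\right)^2\right] \tag{8.21}$$

The right side of (8.21) can be rearranged as

$$\frac{1}{2}\left[1 - \left(\frac{1 - \exp(-\mathrm{net})}{1 + \exp(-\mathrm{net})}\right)^2\right] = \frac{2\exp(-\mathrm{net})}{\left[1 + \exp(-\mathrm{net})\right]^2} \tag{8.22}$$

This is the same as (8.20), and now the derivative may be written as

$$f'(\mathrm{net}_k) = \frac{1}{2}\,(1 - o_k^2) \tag{8.23}$$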
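The identity behind (8.23) is easy to confirm numerically. The short check below evaluates the direct derivative (8.19) and the output-based form ½(1 − o²) at a few sample values of net; the function names and sample points are chosen here for illustration.

```python
import math

def f(net):
    """Bipolar sigmoid (8.18)."""
    return 2.0 / (1.0 + math.exp(-net)) - 1.0

def f_prime_direct(net):
    """Derivative computed directly from (8.19)."""
    e = math.exp(-net)
    return 2.0 * e / (1.0 + e) ** 2

def f_prime_from_output(o):
    """Derivative expressed through the output, as in (8.23)."""
    return 0.5 * (1.0 - o * o)

samples = [-3.0, -1.0, 0.0, 0.5, 2.0]
gaps = [abs(f_prime_direct(n) - f_prime_from_output(f(n))) for n in samples]
largest_gap = max(gaps)
```

The practical benefit of the output-based form is that training needs only the output o, which has already been computed in the forward pass, and no further call to exp.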

The gradient (8.16a) can be written as

$$\nabla E(W^{k}) = -\frac{1}{2}\,(d_k - o_k)\,(1 - o_k^2)\,X \tag{8.24}$$

and the complete delta training rule for the bipolar continuous activation function results from (8.24) as

$$W^{(k+1)T} = W^{kT} + \frac{1}{2}\,\eta\,(d_k - o_k)\,(1 - o_k^2)\,X_k \tag{8.25}$$

where k denotes the number of the training step.

The weight adjustment rule (8.25) corrects the weights in the same direction as the discrete perceptron learning rule of equation (8.8). The main difference between the two is the presence of the moderating factor $(1 - o_k^2)$. This scaling factor is always positive and never exceeds 1, because the continuous output satisfies $|o_k| < 1$. Another main difference between discrete and continuous perceptron training is that the discrete perceptron training algorithm always leads to a solution for linearly separable problems. In contrast, negative gradient-based training does not guarantee solutions for linearly separable patterns.

8.3.1 Summary of the Single Continuous Perceptron Training Algorithm

Given are P training pairs

{X1, d1, X2, d2, . . ., XP, dP}, where Xi is ((n+1)×1), di is (1×1), for i = 1, 2, . . ., P, and

$$X_i = \begin{bmatrix} x_{i0} \\ x_{i1} \\ \vdots \\ x_{in} \end{bmatrix}, \quad \text{where } x_{i0} = 1.0 \text{ (bias element)}$$

Let k be the training step and p the step counter within the training cycle.

Step 1: $\eta > 0$ and $E_{max} > 0$ are chosen.

Step 2: The weights W = [wij], of size (n+1)×1, are initialized at small random values. The counters and the error function are initialized:

$k \leftarrow 1,\; p \leftarrow 1,\; E \leftarrow 0$.

Step 3: The training cycle begins. The input is presented and the output is computed:

$X \leftarrow X_p,\; d \leftarrow d_p,\; o \leftarrow f(WX)$

Step 4: The weights are updated: $W^T \leftarrow W^T + \frac{1}{2}\eta\,(d - o)\,(1 - o^2)\,X$

Step 5: The cycle error is computed: $E \leftarrow \frac{1}{2}(d - o)^2 + E$

Step 6: If p < P, then $p \leftarrow p+1$, $k \leftarrow k+1$ and go to step 3; otherwise go to step 7.

Step 7: The training cycle is completed. For $E < E_{max}$, terminate the training session and output the weights, k and E. If $E \ge E_{max}$, then $E \leftarrow 0$, $p \leftarrow 1$ and enter a new training cycle by going to step 3.
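A minimal Python sketch of these steps is given below. It rests on several assumptions that are not in the algorithm as stated: zero initial weights replace small random values for reproducibility, `max_cycles` is an added safety cap, the values of η and Emax are arbitrary, and the four-point training set is a hypothetical, well-separated example.

```python
import math

def f(net):
    """Bipolar sigmoid activation (8.18)."""
    return 2.0 / (1.0 + math.exp(-net)) - 1.0

def train_continuous_perceptron(patterns, targets, eta=0.5, e_max=0.05, max_cycles=5000):
    """Delta-rule training for a single continuous perceptron.

    Returns (weights, cycles, E). max_cycles is a safety cap added for this sketch.
    """
    n = len(patterns[0])
    weights = [0.0] * (n + 1)                  # Step 2 (zeros instead of random values)
    cycles = 0
    error = float("inf")
    while cycles < max_cycles and error >= e_max:
        error = 0.0                            # E <- 0 at the start of each cycle
        for x, d in zip(patterns, targets):    # Steps 3 to 6
            x_aug = [1.0] + list(x)            # x0 = 1.0 is the bias element
            o = f(sum(w * xi for w, xi in zip(weights, x_aug)))
            step = 0.5 * eta * (d - o) * (1.0 - o * o)    # Step 4, rule (8.25)
            weights = [w + step * xi for w, xi in zip(weights, x_aug)]
            error += 0.5 * (d - o) ** 2        # Step 5
        cycles += 1
    return weights, cycles, error

# Hypothetical, well-separated two-class data with bipolar targets.
inputs = [[2.0, 1.0], [1.0, 2.0], [-1.0, -2.0], [-2.0, -1.0]]
targets = [1, 1, -1, -1]
weights, cycles, cycle_error = train_continuous_perceptron(inputs, targets)
outputs = [f(weights[0] + weights[1] * a + weights[2] * b) for a, b in inputs]
```

Because the sigmoid output never reaches ±1 exactly, the cycle error cannot become zero, which is why the stopping test uses a positive Emax rather than E = 0.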

8.4.0 Perceptron Convergence Theorem

This theorem states that the perceptron learning law converges to a final set of weight values in a finite number of steps if the classes are linearly separable. The proof of this theorem is as follows:

Let X and W be the augmented input and weight vectors, respectively. Assume that there exists a solution W* for the classification problem. We have to show that W* can be approached in a finite number of steps, starting from some initial weight values. We know that the solution W* satisfies the following inequality, as per equation (8.5):


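$$W^{*T}X \ge \alpha > 0, \quad \text{for each } X \in C_1 \tag{8.26}$$

where $\alpha = \min_{X \in C_1} \left(W^{*T}X\right)$.

The weight vector is updated if $W^{kT}X \le 0$ for $X \in C_1$. That is,

$$W^{k+1} = W^{k} + c\,X(k), \quad \text{for } X(k) = X \in C_1 \tag{8.27}$$

where c is the positive learning constant and X(k) is used to denote the input vector at step k. If we start with $W^{0} = 0$, where 0 is an all-zero column vector, then

$$W^{k} = c \sum_{i=0}^{k-1} X(i) \tag{8.28}$$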

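Multiplying both sides of equation (8.28) by $W^{*T}$, we get

$$W^{*T}W^{k} = c \sum_{i=0}^{k-1} W^{*T}X(i) \ge c\,k\,\alpha \tag{8.29}$$

since $W^{*T}X(k) \ge \alpha$ according to equation (8.26). Using the Cauchy-Schwarz inequality

$$\left\|W^{*T}\right\|^2 \left\|W^{k}\right\|^2 \ge \left[W^{*T}W^{k}\right]^2 \tag{8.30}$$

we get from equation (8.29)

$$\left\|W^{k}\right\|^2 \ge \frac{c^2 k^2 \alpha^2}{\left\|W^{*T}\right\|^2} \tag{8.31}$$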

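We also have from equation (8.27)

$$\begin{aligned} \left\|W^{k+1}\right\|^2 &= \left(W^{k} + cX(k)\right)^T \left(W^{k} + cX(k)\right) \\ &= \left\|W^{k}\right\|^2 + c^2\left\|X(k)\right\|^2 + 2c\,W^{kT}X(k) \\ &\le \left\|W^{k}\right\|^2 + c^2\left\|X(k)\right\|^2 \end{aligned} \tag{8.32}$$

since for learning $W^{kT}X(k) \le 0$ when $X(k) \in C_1$. Therefore, starting from $W^{0} = 0$, we get from equation (8.32)

$$\left\|W^{k}\right\|^2 \le c^2 \sum_{i=0}^{k-1} \left\|X(i)\right\|^2 \le c^2 k\,\beta \tag{8.33}$$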

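where $\beta = \max_{X(i) \in C_1} \left\|X(i)\right\|^2$. Combining equations (8.31) and (8.33), we obtain the optimum value of k by solving

$$\frac{k^2 \alpha^2}{\left\|W^{*T}\right\|^2} = \beta k \tag{8.34}$$

or

$$k = \frac{\beta}{\alpha^2}\left\|W^{*T}\right\|^2 = \frac{\beta}{\alpha^2}\left\|W^{*}\right\|^2 \tag{8.35}$$

Since β is positive, equation (8.35) shows that the optimum weight value can be approached in a finite number of steps using the perceptron learning law: the number of weight updates k cannot exceed this value, because a larger k would violate the bounds (8.31) and (8.33) taken together.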
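The bound (8.35) can be checked empirically on a small example. The sketch below counts the weight updates made when starting from W = 0 and compares the count with β‖W*‖²/α². Several choices are assumptions of this sketch: the data are the AND patterns in augmented form, the class C2 vectors are negated so that every vector is treated as belonging to C1 (a common device that matches the proof's restriction to C1), and `w_star` is a hand-picked solution rather than anything the algorithm computes.

```python
def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(p * q for p, q in zip(a, b))

def count_updates(vectors, c=1.0, max_passes=1000):
    """Cycle through the vectors from W = 0, applying W <- W + c X whenever W.X <= 0."""
    w = [0.0] * len(vectors[0])
    updates = 0
    for _ in range(max_passes):
        changed = False
        for x in vectors:
            if dot(w, x) <= 0:
                w = [wi + c * xi for wi, xi in zip(w, x)]
                updates += 1
                changed = True
        if not changed:                     # a full pass without updates: converged
            return w, updates
    raise RuntimeError("no convergence within max_passes")

# AND problem in augmented form (x0 = 1.0). The three class-C2 patterns are
# negated so that a solution must satisfy W.X > 0 for every vector listed.
vectors = [
    [-1.0, 0.0, 0.0],     # negated (0, 0)
    [-1.0, 0.0, -1.0],    # negated (0, 1)
    [-1.0, -1.0, 0.0],    # negated (1, 0)
    [1.0, 1.0, 1.0],      # (1, 1), class C1
]
w_star = [-1.5, 1.0, 1.0]                         # hand-picked separating weights
alpha = min(dot(w_star, x) for x in vectors)      # smallest value of W*.X
beta = max(dot(x, x) for x in vectors)            # largest squared norm of X
bound = beta * dot(w_star, w_star) / alpha ** 2   # right side of (8.35)
w_final, updates = count_updates(vectors)
```

The bound depends on the particular W* chosen: a solution with a larger margin α relative to its norm gives a tighter estimate, and in practice the actual number of updates is often well below it.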

8.5.0 Problems and Limitations of the perceptron training algorithms

It may be difficult to determine whether the caveat regarding linear separability is satisfied for the particular training set at hand. Furthermore, in many real-world situations the inputs are time-varying and may be separable at one time and not at another. Also, there is no statement in the proof of the perceptron learning algorithm that indicates how many steps will be required to train the network. It is small consolation to know that training will take only a finite number of steps if the time it takes is measured in geological units.

Furthermore, there is no proof that the perceptron training algorithm is faster than simply trying all possible adjustments of the weights; in some cases this brute-force approach may be superior.

8.5.1 Limitations of perceptrons

There are, however, limitations to the capabilities of perceptrons. They will learn the solution if there is a solution to be found, but two restrictions apply. First, the output of a perceptron can take on only one of two values (True or False). Second, perceptrons can classify only linearly separable sets of vectors. If a straight line or plane can be drawn to separate the input vectors into their correct categories, the input vectors are linearly separable and the perceptron will find the solution. If the vectors are not linearly separable, learning will never reach a point where all vectors are classified properly. The most famous example of the perceptron's inability to solve problems with linearly non-separable vectors is the Boolean exclusive-OR problem.

Consider the case of the exclusive-OR (XOR) problem. The XOR logic function has two inputs, x and y, and one output, z, as shown below.

x   y   z
0   0   0
0   1   1
1   0   1
1   1   0

Fig. 8.4 (a) Exclusive-OR gate with inputs x and y and output z (b) Truth table


It produces an output only if one or the other of the inputs is on, but not if both are off or both are on, as shown in the table above. We can consider this as a problem that we want the perceptron to learn to solve: output a 1 if x is on and y is off, or if y is on and x is off; otherwise output a 0. It appears to be a simple enough problem.

We can draw it in pattern space as shown in Fig. 8.5. The x-axis represents the value of x and the y-axis represents the value of y. The shaded circles represent the inputs that produce an output of 1, whilst the unshaded circles show the inputs that produce an output of 0. Considering the shaded and unshaded circles as separate classes, we find that we cannot draw a straight line to separate the two classes. Such patterns are known as linearly inseparable, since no straight line can divide them successfully. Since no single straight line divides them, the perceptron cannot find any such line either, and so cannot solve such a problem. In fact, a single-layer perceptron cannot solve any problem that is linearly inseparable.
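The contrast between a separable and an inseparable problem can be observed by running the discrete perceptron rule on AND and on XOR. The sketch below is illustrative only: the function name, the zero initial weights, the bipolar targets and the cap of 200 cycles are assumptions made here, and reaching the cap is an observation about this run, not a proof of inseparability.

```python
def sgn(net):
    """Hard limiter with bipolar outputs; net = 0 maps to -1."""
    return 1 if net > 0 else -1

def cycles_until_error_free(patterns, targets, eta=1.0, max_cycles=200):
    """Return the first cycle with no misclassification, or None if the cap is reached."""
    weights = [0.0, 0.0, 0.0]                   # [w0 (bias), w1, w2]
    for cycle in range(1, max_cycles + 1):
        mistakes = 0
        for (x, y), d in zip(patterns, targets):
            x_aug = [1.0, x, y]
            o = sgn(sum(w * xi for w, xi in zip(weights, x_aug)))
            if o != d:
                mistakes += 1
                weights = [w + 0.5 * eta * (d - o) * xi for w, xi in zip(weights, x_aug)]
        if mistakes == 0:
            return cycle
    return None

points = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_targets = [-1, -1, -1, 1]    # AND: linearly separable
xor_targets = [-1, 1, 1, -1]     # XOR: not linearly separable

and_result = cycles_until_error_free(points, and_targets)
xor_result = cycles_until_error_free(points, xor_targets)
```

For AND the function returns a cycle number, whereas for XOR it returns None however large the cap is made, because every pass over the four points must contain at least one misclassification.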

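Fig. 8.5 The XOR problem in pattern space: the four input points (0,0), (0,1), (1,0) and (1,1) plotted against the x and y axes. The points (0,1) and (1,0) produce an output of 1 (shaded circles); the points (0,0) and (1,1) produce an output of 0 (unshaded circles).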

8.6.0 Practice questions

8.1 What is the perceptron?

8.2 What are the types of perceptrons?

8.3 Define the perceptron training rule.

8.4 What is the perceptron convergence theorem?

8.5 What are the limitations of the perceptron?


***
