Professional Documents

Culture Documents

Scientific Conceptualization of Information

Uploaded by

ogangurelCopyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Scientific Conceptualization of Information

Uploaded by

ogangurelCopyright:

Available Formats

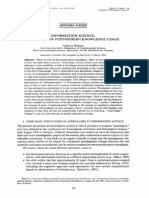

The Scientific

Conceptualization of

Information: A Survey

WILLIAM ASPRAY

The article surveys the development of a scientific conceptualization of

information during and in the decade following World War II. It examines the

roots of information science in nineteenth- and early twentieth-century

mathematical logic, physics, psychology, and electrical engineering, and then

focuses on how Warren McCulloch, Walter Pitts, Claude Shannon, Alan Turing,

John von Neumann, and Norbert Wiener combined these diverse studies into a

coherent discipline.

Categories and Subject Descriptors: E.4 [Coding and Information Theory];

F. 1 [Computation by Abstract Devices]: Models of Computation, Modes of

Computation; F.4.1 [Mathematical Logic and Formal Languages]:

Mathematical Logic-computability theory, recursive function theory; H. 1.1

[Models and Principles]: Systems and Information Theory-information

theory; K.2 [History of Computing]-people

General Terms: Theory

Additional Key Words and Phrases: W. McCulloch, W. Piths, C. Shannon,

A. Turing, J. von Neumann, N. Wiener

Modern scholarship has tended to equate the history which could be quantified’ and examined using math-

of information processing with the history of comput- ematical tools. Around this conceljt grew a number of

ing machinery. Because of the phenomenal growth of research areas involving study of both machines and

a new generation of more powerful machines every living organisms. They included the mathematical

few years, other important events in information- theory of communication, mathematical modeling of

processing history have been overshadowed. One such the brain, artificial intelligence, cybernetics, automata

event is the scientific conceptualization of information theory, and homeostasis.

that occurred during and in the decade following Of course, the word information. was in common

World War II. In that period a small group of math- usage for many years before its scientific conceptual-

ematically oriented scientists developed a theory of ization. It was recorded in print in 1390 to mean

information and information processing. For the first “communication of the knowledge or ‘news’ of some

time, information became a precisely defined concept fact or occurrence” (Oxford English Dictionary). In-

amenable to scientific study. Information was given formation also found a place in the traditional scien-

the status of a physical parameter, such as entropy, tific discourse of physics, mathematical logic, electri-

cal engineering, psychology, and biology--in some in-

stances as early as the nineteenth century. These

0 1985 by the American Federation of Information Processing disciplines provided the avenues to the study of infor-

Societies, Inc. Permission to copy without fee all or part of this

material is granted provided that the copies are not made or distrib-

mation for the scientists reformulating the concept

uted for direct commercial advantage, the AFIPS copyright notice during and after World War II.

and the title of the publication and its date appear, and notice is The pursuit of five research areas in particular led

given that the copying is by permission of the American Federation to the scientific conceptualization of information.

of Information Processing Societies, Inc. To copy otherwise, or to

republish, requires specific permission.

Author’s Address: Charles Babbage Institute, 104 Walter Library, i Quantification is not universally possible in information science.

University of Minnesota, Minneapolis, MN 55455. For example, it is possible to quantify coding counts, but not most

0 1985 AFIPS 0164-1239/85/020117-l 40$01 .OO/OO semantic concerns.

Annals of the History of Computing, Volume 7, Number 2, April 1985 * 117

W. Aspray l Conceptualization of Information

1. James Clerk Maxwell, Ludwig Boltzmann, and whose similar laws of functioning could be better

Leo Szilard’s work in thermodynamics and statistical understood with the help of the abstract results de-

mechanics, especially on the concept of entropy and duced from the mathematical models of automata

its mathematical formulation. theory.

2. The emergence of control and communication as The major figures in this movement were Claude E.

a new branch of electrical engineering, supplementary Shannon, Norbert Wiener, Warren S. McCulloch,

to power engineering, as a result of the development Walter Pitts, Alan M. Turing, and John von Neu-

of telegraphy, radio, and television. mann. They came to the subject from various estab-

3. The study of the physiology of the nervous sys- lished scientific disciplines: mathematics, electrical

tern, beginning in the nineteenth century with the engineering, psychology, biology, and physics. Despite

work of H. von Helmholtz and Claude Bernard, and their diverse backgrounds and research specialties,.

continuing into the twentieth century, especially with they functioned as a cohesive scientific community.

the work of Walter Cannon on homeostasis and the They shared a common educational background in

internal regulation of living organisms. mathematical logic. Most had wartime experience that

4. The development of functionalist and behavior- sharpened their awareness of the general importance

ist theories of the mind in psychology, leading to a of information as a scientific concept. Each appreci-

view of the brain as a processor of information and to ated the importance of information to his own re-

a demand for experimental verification of theories of search. Each was familiar with the others’ work and

mind through observation of external behavior. recognized its importance to his own work and to the

5. The development of recursive function theory in science of information generally. Most were personally

mathematical logic as a formal, mathematical char- acquainted,’ and often collaborated with or built di-

acterization of the human computational process. rectly upon the work of the others. They reviewed one

What was new after the war was a concerted effort another’s work in the scientific literature3 and often

to unify these diverse roots through a common math- attended the same conferences or meetings-some of

ematical characterization of the concepts of informa- which were designed specifically to study this new dis-

tion and information processing. The seminal idea cipline. Typical was a 1944 conference in Princeton or-

was that an interdisciplinary approach is appropriate ganized by Wiener and von Neumann for mathemati-

to solve problems in both biological and physical set- cians, engineers, and physiologists to discuss problems

tings in cases where the key to the problems is the of mutual interest in cybernetics and computing.4 Out-

manipulation, storage, or transmission of information siders recognized them as forming a cohesive commu-

and where the overall structure can be studied using nity of scholars devoted to a single area of research.

mathematical tools. For these scientists, both the hu- The introduction to Wiener’s Cybernetics (1948)

man brain and the electronic computer were consid- describes the sense of community and common pur-

ered twes of complicated information processors pose among these diversely trained scientists. Perhaps

2 It is not clear whether Pitts knew Turing personally. Wiener,

William Aspray (B.A., M.A. McCulloch, and Pitts were associates at MIT. Von Neumann was

Wesleyan, 1973; M.A. involved in several conferences with McCulloch and Wiener. Turing

worked with von Neumann in Princeton in 1937 and 1938, and with

1976, Ph.D. 1980 Shannon during the war at the Bell Telephone Laboratories in New

i

Wisconsin) is associate York City. Wiener and McCulloch both visited Turing in England.

director of the Charles Shannon had ample opportunity to become personally acquainted

with Wiener, von Neumann, McCulloch, and Pitts, and he was

Babbage institute for the certainly familiar with their work.

History of Information 3 See, for example, Shannon’s reviews of four papers authored or

Processing at the University coauthored by Pitts, including the famous McCulloch and Pitts

of Minnesota in paper, in Mathematical Reviews 5 (1944), p, 45, and 6 (1945), p. 12.

Minneapolis. Prior to joining 4 Turing, as a confirmed loner and the only non-American among

the major pioneers, is the least likely to have had contact with the

CBI Aspray taught group. Nevertheless, he discussed artificial-intelligence issues with

mathematical science at Shannon (in fact, both had developed chess programs); Wiener

Williams College and sought him out in England and regarded him as one of the cyber-

neticists; McCulloch also went out of his way to visit in Manchester,

history of science at Harvard University. His historical although there is reason to believe that Turing did not think highly

research interests include modern mathematics and of McCulloch (Hodges 1983); von Neumann offered Turing a job as

his assistant at the Institute for Advanced Study to continue his

logic, theoretical computer science, social diffusion of

theoretical work (a position Turing refused). Turing was also in

computing, computer education, and the economics of contact with others in England working in this area, most notably

the computer industry. Ross Ashby and Grey Walter.

118 l Annals of the History of Computing, Volume 7, Number 2, April 1985

W. Aspray * Conceptualization of Information

even more telling were both Wiener’s attempts to Lee on wave filters, and his collaboration with Julian

develop an interdisciplinary science, known as “cyber- Bigelow on fire control for antiaircraft artillery. Each

netics,” around the concept of feedback information, of these projects was related to the war effort. Both

and von Neumann’s attempts to unify the work of Turing and von Neumann applied wartime computing

Shannon, Turing, and McCulloch and Pitts into a experience to their postwar work on artificial intelli-

general theory of automata. gence and automata theory. Shannon’s theory of com-

Mathematical logic does not have many real-world munication resulted partly from the tremendous ad-

applications. It did here because it studies the laws of vances in communications engineering spawning from

thought in abstraction, and more particularly because the development of radar and electronics and partly

from the 1930s logicians were concerned with finding from the need for secure communications during the

a mathematical characterization of the process of com- war.

putation. The focus in this paper is on the sources and con-

In fact, training in mathematical logic was the most tributions of the six most important early figures in

salient tie among these early pioneers. Shannon com- this movement: Shannon, Wiener, McCulloch, Pitts,

pleted a master’s thesis (Shannon 1940) in electrical Turing, and von Neumann. It is impossible in a work

engineering at the Massachusetts Institute of Tech- of this length to present an exhaustive treatment of

nology on the application of mathematical logic to the the contributions of any of these pioneers, or even to

study of switching systems. Wiener studied mathe- mention the less centrally related work of their col-

matical logic with Bertrand Russell and averred its leagues. Instead, the intent is to sketch the general

influence on his later work in cybernetics (Wiener outlines of this new conceptualization, with hope that

1948). Turing received his doctoral degree from others will contribute the fine brushstrokes necessary

Princeton University for work on ordinal logics (Tur- to complete the picture.

ing 1938). Pitts studied mathematical logic under Ru-

Claude Shannon and the Mathematical Theory of

dolf Carnap, while his associate, McCulloch, was a

Communication

physiological psychologist interested in questions con-

cerning the learning of logic and mathematics. Early While working at Bell Laboratories in the 1940s on

in his career, von Neumann contributed significantly communication problems relating to radio and tele-

to the two branches of logic known as “proof theory” graphic transmission, Shannon developed a general

and “set theory.” theory of communication that would treat of the trans-

The similarity in their work does not end with the mission of any sort of information from one point to

common use of mathematical logic to solve problems another in space or time.6 His aim was to give specific

in a variety of fields. They also shared the conviction technical definitions of concepts general enough to

that the newly discovered concept of information obtain in any situation where information is manipu-

could tie together, in a fundamental way, problems lated or transmitted-concepts such as information,

from different branches of science. While the content noise, transmitter, signal, receiver, and message.

of their work shows this common goal,5 so does the At the heart of the theory was a new conceptuali-

social organization of their research. Despite widely zation of information. To make communication theory

diverse backgrounds and research interests, these sci- a scientific discipline, Shannon needed to provide a

entists were in close contact through collaboration, precise definition of information that transformed it

scholarly review of one another’s work, and frequent into a physical parameter capable of quantification.

interdisciplinary conferences. He accomplished this transformation by distinguish-

The growth of this interdisciplinary science in the ing information from meaning. He reserved “meaning”

late 1940s and early 1950s was at least partially the for the content actually included in a particular mes-

product of the massive cooperative and interdiscipli- sage. He used “information” to refer to the number of

nary scientific ventures of World War II that carried different possible messagesthat could be carried along

many scientists to subjects beyond the scholarly a channel, depending on the message’slength and on

bounds of their specialties. At no previous time had the number of choices of symbols for transmission at

there been such a mobilization of the scientific com-

munity. Wiener was led to develop cybernetics at least

6 Note that Shannon had already begun to work on his theory of

partly on account of his participation in Vannevar E. communication prior to his arrival at Bell Labs in 1941 (Shannon

Bush’s computing project at MIT, his work with Y. W. 1949a), and he continued to develop the theory while there. Witness

his later paper (Shannon 1949b), originally written as a Bell Con-

fidential Report based on work in the labs during the war (Shannon

’ An article by E. Colin Cherry (1952) shows that the unity of this 1945). For more information, see the biography of Turing by Hodges

work was already recognized by outsiders. (1983).

Annals of the History of Computing, Volume 7, Number 2, April 1985 l 11 g

W. Aspray l Conceptualization of Information

Selective Chronology of Events in the Scientific Conceptualization of Information

1390 First recorded printed use of word information 1945 Shannon, “A Mathematical Theory of Cryptography”

184Os-1860s Helmholtz, investigations of the physiology (Bell Laboratories report, published in 1949 under

and psychology of sight and sound different title)

1843-l 877 Bernard, work on homeostasis 1945 McCulloch, “A Heterarchy of Values Determined by

1868 Maxwell, “On Governors” the Topology of Nerve Nets”

1881 Ribot, Diseases of Memory 1946 Macy Foundation meetings on feedback organized by

1890 James, Principles of Psychology McCulloch

1891 Waldeyer, “neurone” theory 1946 Turing, NPL report on ACE computer

1894 Boltzmann, work on statistical physics 1946-l 948 Burks, Goldstine, von Neumann, IAS computer

1906 Sherrington, The Integrative Action of the Nervous reports

System 1947 Ashby, “The Nervous System as Physical Machine”

1915 Holt, The Freudian Wish 1947 McCulloch and Pitts, work on a prosthetic device to

1919 Watson, Psychology from the Standpoint of a Behav- enable the blind to read by ear

iorist 1947 McCulloch and Pitts, “How We Know Universals“

1919-l 923 McCulloch, work on logic of transitive verbs 1948 Wiener, Cybernetics

1923 Lashley, “The Behaviorist lntepretation of Conscious- 1948 Turing, “Intelligent Machinery“ (NPL Report)

ness” 1948 Turing, first work programming the Manchester com-

1924 Nyquist, ‘Certain Factors Affecting Telegraph Speed” puter to carry out “purely mental activities”

1925 Szilard, work on entropy and statistical mechanics 1948 Von Neumann, “General and Logical Theory of Auto-

1928 Hartley, “Transmission of Information” mata” (Hixon Symposium, Pasadena)

1932 Von Neumann, Foundation of Quantum Mechanics 1948 Shannon, “The Mathematical Theory of Communica-

Mid-l 930s Development of recursive function theory (work tion”

of Godel, Church, Kleene, Post, Turing) 1949 Von Neumann, “Theory and Organization of Compli-

Mid-1930s Feedback concept studied by electrical engi- cated Automata” (University of Illinois Lectures)

neers (work of Black, Nyquist, Bode) 1949 Shannon and Weaver, The Mathematical Theory of

Mid-l 930s Development of electroencephalography Communication

1937 Turing, “On Computable Numbers” 1950 Shannon, “Programming a Computer to Play Chess”

1937 Turing and von Neumann, first discussions about com- 1950 McCulloch, “Machines That Think and Want”

puting and artificial intelligence at Princeton University 1950 McCulloch, “Brain and Behavior”

1938 Shannon, “Symbolic Analysis of Relay and Switching 1950 Turing, ‘Computing Machinery and Intelligence”

Circuits” (published 1938, M.A. Thesis 1940) 1950 Ashby, “The Cerebral Mechanism of Intelligent Action”

Late-l 930s Harvard Medical School seminar led by Cannon 1952 Turing, “The Chemical Basis of Morphogenesis”

1940 Wiener, work at MIT on computers 1952 McCulloch, accepts position at MIT to be with Pitts

1940 Shannon, “Communication in the Presence of Noise” and Wiener

(submitted; published 1948) 1952 Ashby, Design for a Brain

1942 Macy Foundation meeting on central inhibition in the 1952 Von Neumann, “Probabilistic Logics and the Synthesis

nervous system of Reliable Organisms from Unreliable Components”

1942 Bigelow, Rosenblueth, Wiener, “Behavior, Purpose, (California Institute of Technology Lectures)

Teleology” 1952-I 953 Von Neumann, “The Theory of Automata: Con-

1943 Turing, work with Shannon and Nyquist at Bell Labo- struction, Reproduction, Homogeneity”

ratories in New York City 1953 Walter, The Living Brain

1943 McCulloch and Pitts, “Logical Calculus of the Ideas 1953 Turing, “Digital Computers Applied to Games: Chess”

Immanent in Nervous Activity” 1953 Turing, ‘Some Calculations on the Riemann Zeta

1943 Pitts accepts position at MIT to work with Wiener Function”

1944 Princeton conference organized by Wiener and 1956 Von Neumann, The Computer and the Brain (prepared

von Neumann on topics related to computers and for the Silliman Lectures, Yale; published 1958)

control 1956 Ashby, Introduction to Cybernetics

1945 Von Neumann, draft report on EDVAC 1956 Ashby, “Design for an Intelligence Amplifier”

each point in the message. Information in Shannon’s Shannon admitted the importance of previous work

sense was a measure of orderliness (as opposed to in communications engineering to his interest in a

randomness) in that it indicated the number of pos- general theory of information, in particular the work

sible messagesfrom which a particular messageto be of Harry Nyquist and R. V. Hartley.

sent was chosen. The larger the number of possibili- The recent developmentof various methodsof

ties, the larger the amount of information transmitted, modulation such as PGM and PPM which exchange

because the actual message is distinguished from a bandwidth for signal-to-noiseratio has intensified the

greater number of possible alternatives. interest in a generaltheory of communication. A basis

120 * Annals of the History of Computing, Volume 7, Number 2, April 1985

W. Aspray - Conceptualization of Information

for such a theory is contained in the important papersof the term intelligence, which masked the difference

Nyquist and Hartley on this subject. In this paper we between information and meaning-his work was im-

will extend the theory to include a number of new portant for presenting the first statement of a loga-

factors. (Shannon 1948,pp. 31-32) rithmic law for communication and the first exami-

Nyquist was conducting research at Bell Laborato- nation of the theoretical bounds for ideal codes for the

ries on the problem of improving transmission speeds transmission of information. Shannon later gave a

over telegraph wires when he wrote a paper on the more general logarithmic rule as the fundamental law

transmission of “intelligence” (Nyquist 1924).7 This of communication theory, which stated that the quan-

paper concerned two factors affecting the maximum tity of information is directly proportional to the

speed at which intelligence can be transmitted by logarithm of the number of possible messages. Ny-

telegraph: signal shaping and choice of codes. As quist’s law became a specific case of Shannon’s law,

Nyquist stated, because the number of current values is directly re-

The first is concernedwith the best shapeto be lated to the number of symbols that can be transmit-

impressedon the transmitting medium so asto permit ted. Nyquist was aware of this relation, as his defini-

of greater speedwithout undue interference either in the tion of speed of transmission indicates.

circuit under consideration or in those adjacent, while

the latter dealswith the choice of codeswhich will By the speedof transmissionof intelligence is meant the

permit of transmitting a maximum amount of number of characters, representingdifferent letters,

intelligence with a given number of signal elements. figures, etc., which can be transmitted in a given length

(Nyquist 1924,p. 324) of time. (Nyquist 1924,p. 333)

While most of Nyquist’s article considered the prac- By “letters, figures, etc.” he meant a measure propor-

tical engineering problems associated with transmit- tional to what Shannon later would call “bits of infor-

ting information over telegraph wires, one theoretical mation.” Nyquist’s table, listing the relative amount

section was of importance to Shannon’s work, entitled of intelligence transmitted, illustrates the gain in in-

“Theoretical Possibilities Using Codes with Different formation consequent to a greater number of possible

Numbers of Current Values.” In this section Nyquist choices. That he listed the relative amount indicates

presented the first logarithmic rule governing the his awareness that there is an important relation

transmission of information. between the number of figures and the amount of

Nyquist proved that the speed at which intelligence intelligence (information) being transmitted. This re-

can be transmitted over a telegraph circuit obeys the lation is at the heart of Shannon’s theory of commu-

equation nication. Nyquist did not generalize his concept of

“intelligence” beyond telegraphic transmissions, how-

w = k log m

ever.

where W is the speed of transmission of intelligence, Shannon’s other predecessor in information theory,

m is the number of current values that can be trans- Hartley, was also a research engineer at Bell Labora-

mitted, and k is a constant. He also prepared the tories. Hartley’s intention was to establish a quanti-

following table by which he illustrated the advantage tative measure to compare capacities of various sys-

of using a greater number of current values for trans- terns to transmit information. His hope was to provide

mitting messages (Nyquist 1924, p. 334). a theory general enough to include telegraphy, teleph-

ony, television, and picture transmission-communi-

Relative Amount of

Intelligence that Can Be cations over both wire and radio paths. His in-

Number of Transmitted with the Given vestigation (Hartley 1928) began with an attempt to

Current Values Number of Signal Elements establish theoretical limits of information transmis-

2 100 sion under idealized situations. This important step

3 158 led him away from the empirical studies of engineering

4 200 adopted by most earlier researchers and toward a

5 230 mathematical theory of communication.

8 300 Before turning to concrete engineering problems,

16 400 Hartley addressed “more abstract considerations.” He

Although Nyquist’s work was primarily empirical began by making the first attempt to distinguish a

and concerned with engineering issues-and he used notion of information amenable to use in a scientific

context. He realized that any scientifically usable def-

’ Nyquist worked closely with Shannon during the war. He also had inition of “information” should be based on what he

discussions about communications theory a nd engineering with

rn..~...~~.

--~,:I- m :..- ..._- _ -.z_:i^-

~urmg wnue ~urmg wab a v~snor a~ LIK MA ^L iL^ ,-I-- in 1943. See Hodges called “physical” instead of “psychological” consider-

(1983) for details. ations. He meant that information is an idea involving

Annals of the History of Computing, Volume 7, Number 2, April 1985 l 121

W. Aspray l Conceptualization of Information

a quantity of physical data and should not be confused of great concern to electrical engineers at the time.

with the meaning of a message. He found that the total amount of information that

could be transmitted over a steady-state system of

The capacity of a systemto transmit a particular

sequenceof symbolsdependsupon the possibility of alternating currents limited to a given frequency-

distinguishing at the receiving end between the results range is proportional to the product of the frequency-

of the various selectionsmadeat the sendingend. The range on which it transmits and the time during which

operation of recognizing from the received record the it is available for transmission.’

sequenceof symbolsselectedat the sendingend may be Hartley had arrived at many of the most important

carried out by those of us who are not familiar with the ideas of the mathematical theory of communication:

Morse code. We could do this equally well for a sequence the difference between information and meaning, in-

representinga consciouslychosenmessageand for one formation as a physical quantity, the logarithmic rule

sent out by the automatic selectingdevice already for transmission of information, and the concept of

referred to. A trained operator, however, would say that noise as an impediment in the transmission of infor-

the sequencesent out by the automatic device wasnot

mation.

intelligible. The reasonfor this is that only a limited

number of the possiblesequenceshave beenassigned Hartley’s aim had been to construct a theory capable

meanings commonto him and the sendingoperator. of evaluating the information transmitted by any of

Thus the number of symbolsavailable to the sending the standard communication technologies. Starting

operator at certain of his selectionsis here limited by with these ideas, Shannon developed a general theory

psychologicalrather than physical considerations.Other of communication, not restricted to the study of tech-

operatorsusing other codesmight make other selections. nologies designed specifically for communication.

Hence in estimating the capacity of the physical system to Later, Shannon’s collaborator, Warren Weaver, de-

transmit information we should ignore the question of scribed the theory clearly.

interpretation, make each selection perfectly arbitrary,

and base our results on the possibility of the receiver’s The word communication will be usedhere in a very

distinguishing the result of selecting any one symbol from broad senseto include all of the proceduresby which

that of selecting any other. By this meansthe one mind may affect another. This, of course,involves

psychologicalfactors and their variations are eliminated not only written and oral speech,but also music,the

and it becomespossibleto set up a definite quantitative pictorial arts, the theatre, the ballet, and in fact all

measureof information basedon physical considerations human behavior. In someconnectionsit may be

alone. (Hartley 1928,pp. 537-538; emphasisadded) desirableto use a still broader definition of

communication, namely, one which would include the

Thus Hartley distinguished between psychological and proceduresby meansof which one mechanism(say

physical considerations-that is, between meaning automatic equipment to track an airplane and to

and information. The latter he defined as the number computeits probable future positions) affects another

of possible messages,independent of whether they are mechanism(say a guidedmissilechasingthis airplane).

meaningful. He used this definition of information to (Shannon and Weaver 1949,Introductory Note)

give a logarithmic law for the transmission of infor- What began as a study of transmission over telegraph

mation in discrete messages:

lines was developed by Shannon into a general theory

H = K log S” of communication applicable to telegraph, telephone,

where H is the amount of information, K is a constant, radio, television, and computing machines-in fact, to

n. is the number of symbols in the message, s is the any system, physical or biological, in which informa-

size of the set of symbols, and therefore sn is the tion is being transferred or manipulated through time

number of possible symbolic sequencesof the specified or space.

length n. This law included the case of telegraphy and In Shannon’s theory a communication system con-

subsumed Nyquist’s earlier law. Once the law for the sists of five components related to one another, as

discrete transmission of information had been estab- illustrated in Figure 1 (Shannon and Weaver 1949, p.

lished, Hartley showed how it could be modified to 34). These components are:

treat continuous transmission of information, as in 1. An information sourcewhich producesa message

the case of telephone voice transmission. or sequenceof messages to be communicatedto the

Hartley turned next to questions of interference and receiving terminal. . . .

described how the distortions of a system limit the 2. A transmitter which operateson the messagein

rate of selection at which differences between trans- someway to produce a signal suitable for transmission

mitted symbols may be distinguished with certainty. over the channel.. . .

His special concern was with the interference caused

by the storage and subsequent release of energy 8Cherry (1952)reviewsthe researchemanatingfrom or relatedto

through induction and capacitance, a source of noise Hartley’swork.

122 * Annals of the Hlstory of Computing, Volume 7, Number 2, April 1985

W. Aspray l Conceptualization of Information

then it is arbitrarily saidthat the information,

Information

source Transmitter

associatedwith this situation, is unity. Note that it is

misleading(although often convenient) to say that one

or the other messageconveys unit information. The

Message Message concept of information appliesnot to the individual

Signal IL

messages (as the concept of meaning would), but rather

to the situation as a whole, the unit information

indicating that in this situation one has an amount of

freedomof choice, in selecting a message,which is

convenient to regard asa standard or unit amount.

Noise

SOWX (Shannon and Weaver 1949,pp. 8-9)

Shannon recognized that information could be

Figure1. Schematic diagram of a generalcommunication

system. (Redrawn from Shannon and Weaver (1949, p. measured by any increasing monotonic function, pro-

341.) vided the number of possible messages is finite. He

chose from among these the logarithmic function for

the same reason as Hartley: that it accords well with

3. The channel is merely the mediumusedto our intuition of what the appropriate measure should

transmit the signal from transmitter to receiver. . . . be. We intuitively feel that two punched cards should

4. The receiver ordinarily performs the inverse convey twice the information of one punched card.

operation of that done by the transmitter, reconstructing Assuming one card can carry n symbols, two cards

the messagefrom the signal. will carry n* combinations. The logarithmic function

5. The destination is the person (or thing) for whom then measures the two cards as conveying log n2 = 2

the messageis intended.

log n bits of information, twice that conveyed by one

The importance of this characterization is its appl- card (log n). Shannon chose base 2 for his logarithmic

icability to a wide variety of communication problems, measure since log, assigns one unit of information to

provided that the five components are appropriately a switch with two positions. Then N two-position

interpreted. For example, it applies equally well to switches could store log, 2N (=N) binary digits of

conversations between humans, interactions between information.’ If there were N equiprobable choices,

machines, and even to communication between parts the amount of information would be given by log, N.

of an organism. Properly interpreted, the communi- Shannon generalized this equation to the nonequi-

cation both between the stomach and the brain and probable situation, where the amount of information

between the target and the guided missile could be H would be given by

seen as examples of a communication system. Using

Shannon’s theory, previously unrecognized connec- H = --(PI log2 PI + . . . + pn log, P,)

tions between the biological and the physical worlds

if the choices have probabilities pl, . . . , p,,.

could be unmasked.

While Hartley recognized that a distinction must Shannon recognized that this formulation of infor-

be drawn between information and meaning, Shannon mation is closely related to the concept of entropy,

sharpened the distinction by giving the first definition since the information parameter measures the order-

of information sufficiently precise for scientific dis- liness of the communication channeLlo

course. Weaver described the importance of this defi- Quantities of the form H = -Pi log Pi (the constant K

nition. merely amountsto a choice of a unit of measure)play a

central role . . . as measuresof information, choice and

The word information, in this theory, is usedin a special uncertainty. The form of H will be recognizedasthat of

sensethat must not be confusedwith its ordinary usage. entropy asdefined in certain formulations of statistical

In particular, information must not be confusedwith mechanicswhere Pi is the probability of a systembeing

meaning. in cell i of its phasespace.H is then, for example, the H

In fact, two messages, one of which is heavily loaded in Boltzmann’s famousH theorem. (Shannon and

with meaning and the other of which is pure nonsense, Weaver 1949)

can be exactly equivalent, from the present viewpoint, as

regardsinformation. . . .

To be sure, this word information in communication ’ “Binary digits” was shortened to “bits” by John Tukey, a Princeton

University professor who also worked at Bell Laboratories (see the

theory relates not so much to what you do say, as to

Annals, Vol. 6, No. 2, April 1984, pp. 152-155). The introduction of

what you could say. That is, information is a measureof a new term such as bit is a good indication of the introduction of a

one’sfreedom of choice when one selectsa message.If new concept.

one is confronted with a very elementary situation lo Shannon refers t.he reader to Tolman’s book (1938) on statistical

where he has to chooseone of two alternative messages, mechanics.

Annals of the History of Computing, Volume 7, Number 2, April 1985 l 123

W. Aspray 1 Conceptualization of Information

Entropy has a long history in physics, and during involving other physical and complicated biological

the twentieth century had already become closely as- phenomena. Wiener recognized that several diverse

sociated with the amount of information in a physical problems he had confronted during the war had

system. Weaver carefully credited these roots of Shan- yielded to quite similar approaches involving feedback

non’s work.” control and communication mechanisms. This reali-

Dr. Shannon’swork roots back, as von Neumann has zation was the beginning of his new interdisciplinary

pointed out, to Boltzmann’s work on statistical physics science, cybernetics, which considered problems of

(1894), that entropy is related to “missinginformation,” control and communication wherever they occurred.13

inasmuchas it is related to the number of alternatives Many of his subsequent scientific projects were de-

which remain possibleto a physical system after all the signed to illustrate the power of cybernetics in under-

macroscopicallyobservableinformation concerning it standing biological functioning.

hasbeen recorded.L. Szilard (Zsch. f. Phys., Vol. 53, Wiener recognized the importance of his war-re-

1925)extended this idea to a generaldiscussionof lated work to his later development of cybernetics.

information in physics, and von Neumann (Math. Elaborating on the conviction he shared with physiol-

Foundation of Quantum Mechanics, Berlin, 1932,Chap. ogist Arturo Rosenblueth “that the most fruitful areas

V) treated information in quantum mechanicsand

for the growth of the sciences were those which had

particle physics. (Shannon and Weaver 1949,p. 3, fn)

been neglected as a no-man’s land between the various

This close relation of information to entropy is not established fields” (Wiener 1948, p. S), he wrote:

surprising, for information is related to the amount of We had agreedon these matters long before we had

freedom of choice one has in constructing messages. chosenthe field of our joint investigations and our

This tie between thermodynamics, statistical mechan- respectiveparts in them. The deciding factor in this new

its, and communication theory suggests that commu- step was the war. I had known for a long time that if a

nication theory involves a basic and important prop- national emergencyshould come,my function in it

erty of the physical universe and is not simply a would be determined largely by two things: my close

scientific by-product of modern communication tech- contact with the program of computing machines

nology. developedby Dr. Vannevar Bush, and my own joint

Shannon used his theory to prove several theoretical work with Dr. Yuk Wing Lee on the designof electrical

results about communication systems and to demon- networks. In fact, both proved important. (Wiener 1948,

strate applications to the communications industry. p. 9)

He established theoretical limits applicable to practi- In 1940 Wiener began work, in contact with Bush

cal communication systems.12His theory furnished a at MIT, on the development of computing machinery

new definition of information that could be applied in for the solution of partial differential equations. One

a wide variety of physical settings. It provided a math- outcome of this project was a proposal by Wiener,

ematical approach to the study of theoretical problems purportedly made to Bush, of features to be incorpo-

of information transmission and processing. Shannon rated into future computing machines. Included were

and Weaver continued the theoretical study of this many of the features critical in the following decade

subject. Meanwhile, the theory provided the basis for to the development of the modern computer: numeri-

interdisciplinary information studies carried out by cal instead of analog central adding and multiplying

many others on electronic computing machines and equipment, electronic tubes instead of gears or me-

on physical and biological feedback systems. chanical relays for switching, binary instead of deci-

mal representation, completely built-in logical facili-

Norbert Wiener and Cybernetics ties with no human intervention necessary after the

introduction of data, and an incorporated memory

While Shannon concentrated mainly on applications with capability for rapid storage, recall, and erasure.

of information theory to communications engineering, For Wiener the importance of, and presumably the

Wiener stressed its application to control problems source of, these suggestions was that “they were all

ideas which are of interest in connection with the

I1 In the Szilard article cited by Weaver, “Uber die Entropievermin- study of the nervous system” (Wiener 1948, p. 11).

derung in einem Thermodynamischen System bei Eingriffen intel- Wiener may have been the first to compare explicitly

ligenter Wesen,” p. 840, Szilard discusses Maxwell’s Demon. He

points out that the entropy lost by the gas through the separation features of the electronic computer and the human

of the high- and low-energy particles corresponds to the information

used by the Demon to decide whether or not to let a particle through I3 Wiener’s interest in these subjects had first been piqued before

the door. the war in an interdisciplinary seminar he attended at the Harvard

i* According to Hodges (1983), Bell Labs was beginning to use Medical School (discussed later in this section). See Wiener’s intro-

Shannon’s ideas in its research by 1943. Also see Cherry (1952). duction to Cybernetics (1948) for details.

124 l Annals of the History of Computing, Volume 7, Number 2, April 1985

W. Aspray l Conceptualization of Information

brain.14 His comments certainly illustrated the simi- These feedback problems often reduced to partial

larity of structure in diverse settings, which he em- differential equations representing the stability of the

phasized later in his cybernetics program. system. Wiener’s third war-related project, the work

Another war-related program, undoubtedly the with Lee on wave filters, reinforced the close tie to

most important to Wiener’s formulation of cybernet- information theory; the purpose of that research was

its, involved the development of fire-control apparatus to remove extraneous background noise from electrical

for antiaircraft artillery. This problem was made ur- networks.

gent at the beginning of the war by the threat of Wiener contributed significantly to the mathemat-

German air attack on the weakly defended English ical theory underlying these diverse engineering prob-

coast. The appreciable increase in velocity of the new lems. Like Shannon, Wiener was moving from the art

German aircraft made earlier methods for directing of engineering to the precision of science. Using

antiaircraft fire obsolete. Wiener’s research suggested the statistical methods of time-series analysis, he was

that a new device for antiaircraft equipment might able to show that the problem of prediction could be

effectively incorporate a feedback system to direct solved by the established mathematical technique of

future firings. Thus Wiener and Julian Bigelow minimization.

worked toward a theory of prediction (for the flight of Minimization problemsof this type belong to a

aircraft) and on its effective application to the anti- recognizedbranch of mathematics,the calculusof

aircraft problem at hand. variations, and this branch has a recognizedtechnique.

With the aid of this technique, we were able to obtain an

It will be seenthat for the secondtime I had become explicit best solution of the problem of predicting the

engagedin the study of a mechanico-electricalsystem future of a time series,given its statistical nature; and

which was designedto usurp a specifically human even further, to achieve a physical realization of this

function-in the first case,the execution of a solution by a constructible apparatus.

complicatedpattern of computation; and in the second, Once we had done this, at least one problem of

the forecasting of the future. (Wiener 1948,p. 13) engineeringdesigntook on a completely new aspect.In

Bigelow and Wiener recognized the importance of general,engineeringdesignhasbeen held to an art

this concept of feedback in a number of different rather than a science.By reducing a problem of this sort

electromechanical and biological systems. For exam- to a minimization principle, we had establishedthe

subject on a far more scientific basis.It occurred to us

ple, the movement of the tiller to regulate the direction

that this was not an isolated case,but that there wasa

of a ship was shown to invblve a feedback process whole region of engineeringwork in which similar

similar to that used in hand-eye coordinations neces- designproblemscould be solvedby the methodsof the

sary to pick up a pencil. (The Wiener-Bigelow work calculusof variations. (Wiener 1948,p. 17)

was apparently never implemented in a fire-control

mechanism.) The recurrence of similar problems of control and

Wiener quickly saw that the mathematics of feed- communication in widely diverse fields of engineering

back control was closely associated with aspects of sta- and the availability of a mathematical theory with

tistics, statistical mechanics, and information theory. which to organize these problems led Wiener to the

On the communication engineeringplane, it had already creation of his new interdisciplinary science of cyber-

becomeclear to Mr. Bigelow and myself that the netics. “We have decided to call the entire field of

problemsof control engineeringand of communication control and communication theory, whether in the

engineeringwere inseparable,and that they centered not machine or in the animal, by the name of Cybernetics”

around the technique of electrical engineeringbut (Wiener 1948, p. 19).

around the much more fundamental notion of the Long before Wiener’s formulation of the science of

message,whether this should be transmitted by cybernetics in 1947, results had been obtained that

electrical, mechanical,or nervous means.The messageis Wiener included as cybernetic, The word cybernetics

a discreteor continuous sequenceof measurableevents

derived from the Greek kybernetes (“steersman”). Ky-

distributed in time-precisely what is called a time-

seriesby the statisticians. (Wiener 1948,p. 16) bernetes in Latin was gubernator, from which our word

governor derived. The connotations, both of a steers-

man of public policy and of a self-regulating mecha-

I4 Both Turing and von Neumann made direct comparisons between nism on a steam engine, are faithful to the word’s

the computer and the brain publicly in the 1950s. McCulloch and

Pitts might also be regarded as having made this comparison in ancient roots. The governor on a steam engine is a

their famous joint paper (McCulloch and Pitts 1943). It is hard to feedback mechanism that increases or decreases the

date when, if ever, Wiener first made the comparison. He suggests speed of the engine depending on its current speed.

in the introduction to Cybernetics a date as early as 1940. The

author and others have searched unsuccessfully for written docu- Maxwell published a paper (1868) giving a mathemat-

mentation. ical characterization of governors. Similar feedback

Annals of the History of Computing, Volume 7, Number 2, April 1985 l 125

W. Aspray - Conceptualization of Information

mechanisms were discussed by the physiologist Ber- Wiener’s cybernetics work paid at least as much

nard in his discussion of homeostasis, the means by attention to biological as to electromechanical appli-

which an organism regulates its internal equilibrium. cations. This interest was rooted in his participation

(For a history of feedback control, see Mayr (1970). in a series of informal monthly discussions in the

See Cannon (1932) for a discussion of Bernard’s 1930s on scientific method led by Walter Cannon at

work.) In the 1930s and 1940s Nyquist, H. S. Black, the Harvard Medical School. A few members of the

and H. W. Bode renewed the study of feedback in MIT faculty, including Wiener, attended these meet-

both practical and theoretical studies of amplifiers ings. Here Wiener met Rosenblueth, with whom he

and other electrical devices. was to collaborate on biocybernetics throughout the

Although Wiener only arrived at the name cyber- remainder of his career.

netics in 1947, as early as 1942 he had participated in Bigelow and Wiener, perhaps as a result of their

interdisciplinary meetings to discuss problems central work on antiaircraft artillery, pointed to feedback as

to the subject. One early meeting held in New York in an important factor in voluntary activity. To illus-

1942 under the auspices of the Josiah Macy Founda- trate, Wiener described the process of picking up a

tion was devoted to problems of “central inhibition in pencil. He pointed out that we do not will certain

the nervous system.” Bigelow, Rosenblueth, and Wie- muscles to take certain actions-instead, we will to

ner read a joint paper, “Behavior, Purpose, Teleology” pick the pencil up.

(Rosenblueth et al. 1943), which used cybernetic prin- Once we have determined on this, our motion proceeds

ciples to examine the functioning of the mind. Von in such a way that we may say roughly that the amount

Neumann and Wiener called another interdisciplinary by which the pencil is not yet picked up is decreasedat

meeting at Princeton early in 1944. Engineers, phys- eachstage.This part of the action is not in full

iologists, and mathematicians were invited to discuss consciousness.

cybernetic principles and computing design. As Wie- To perform an action in such a manner, there must be

a report to the nervous system,consciousor

ner assessed the situation:

unconscious,of the amount by which we have failed to

At the end of the meeting, it had becomeclear to all that pick the pencil up at each instant. (Wiener 1948,p. 14)

there was a substantial commonbasisof ideasbetween

the workers of the different fields, that people in each They advanced their claims about the biological im-

group could already usenotions which had beenbetter portance of feedback mechanisms so far as to use

developedby the others, and that someattempt should them to explain pathological conditions retarding vol-

be madeto achieve a commonvocabulary. (Wiener 1948, untary actions such as ataxia (where the feedback

P. 23) system is deficient) and purpose tremor (where the

In fact, from discussions with electrical engineers feedback system is overactive). Such initial successes

up and down the East Coast, Wiener reported, “Every- assured Wiener that this approach could provide val-

where we met with a sympathetic hearing, and the uable new insights into neurophysiology.

vocabulary of the engineers soon became contami- We thus found a most significant confirmation of our

nated with the terms of the neurophysiologist and the hypothesisconcerning the nature of at least some

psychologist” (Wiener 1948, p. 23). In 1946 McCulloch voluntary activity. It will be noted that our point of view

arranged for a series of meetings to be held in New considerablytranscendedthat current among

York on the subject of feedback-again under the neurophysiologists.The central nervous systemno

auspices of the Josiah Macy Foundation. Among those longer appearsas a self-contained organ, receiving

attending a number of these meetings were the math- inputs from the sensesand discharginginto the muscles.

On the contrary, someof its most characteristic

ematicians Wiener, von Neumann, and Pitts, the activities are explicable only as circular processes,

physiologists McCulloch, Lorente de No, and Rosen- emergingfrom the nervous systeminto the muscles,and

blueth, and the engineer Herman H. Goldstine (who re-entering the nervous systemthrough the sense

was associated with the ENIAC, EDVAC, and IAS com- organs,whether they be proprioceptors or organsof the

puter projects). Thus, there was widespread interac- specialsenses.This seemedto us to mark a new step in

tion in the United States among the participants in the study of that part of neurophysiology which

the new information sciences. Typical of international concernsnot solely the elementary processesof nerves

interchange was a visit by Wiener to England and and synapsesbut the performance of the nervous system

France, where he had a chance to exchange informa- as an integrated whole. (Wiener 1948,p. 15)

tion on cybernetics and artificial intelligence with The revelation that cybernetics provided a new ap-

Turing, then at the National Physical Laboratory at proach to neurophysiology prompted the joint paper

Teddington, and mathematical results on the relation by Rosenblueth, Wiener, and Bigelow. As the title

of statistics and communication engineering with indicates, they gave an outline of behavior, purpose,

French mathematicians at a meeting in Nancy. and teleology from a cybernetic approach. They argued

126 l Annals of the History of Computing, Volume 7, Number 2, April 1985

W. Aspray l Conceptualization of Information

that “teleological behavior thus becomes synonymous

Behavior

with behavior controlled by negative feedback, and

gains therefore in precision by a sufficiently restricted Nonactivk Jctive

connotation” (Rosenblueth et al. 1943, pp. 22-23). (passive)

(passwe)

They also argued that the same broad classifications Nonpurposeful / Pl$osef ul

of behavior (see Figure 2) hold for machines as hold (random)

for living organisms. The differences, they main- Nonfeedback ’ hback

tained, are in the way these functional similarities are (nonteleological) (teleological)

carried out: colloids versus metals, large versus small / 1.

differences in energy potentials, temporal versus spa- Nonpredictive Predictwe

(nonextrapolative) (extrapolative)

tial multiplication of effects, etc.

For the most part, however, Wiener’s work in bio- Figure 2. Behavior classifications. Note that “predictive”

cybernetics was less philosophical and more physio- means order of prediction (depending on the number of

logical than the joint paper with Rosenblueth and parameters). (Redrawn from Rosenblueth et al. (1943, p.

Bigelow would indicate. More typical was a joint proj- 21).)

ect between Rosenblueth and Wiener on the muscle

actions of a cat (Wiener 1948, pp. 28-30). In this rial functioning, that this functioning is amenable to

project they used the (cybernetic) methods of McCall scientific study, and consequently that the brain is

(1945) on servomechanisms to analyze the system in amenable to mathematical analysis. Beginning with

the same way one would study an electrical or me- the work of H. von Helmholtz, T. A. Ribot, and Wil-

chanical system, while using data provided by their liam James at the end of the nineteenth century, phys-

physiological experimentation on cats. For the re- iological psychology became identified with the study

mainder of his career Wiener split his time between of the physiological underpinnings of behavior and ex-

Cambridge and Mexico City, where Rosenblueth perience (Murphy 1949). The subject drew heavily on

taught at the national medical school. Wiener could physiological research concerning the central nervous

thus continue his collaboration with Rosenblueth on system. Of special importance was the work of W.

a series of physiological projects utilizing the cyber- Waldeyer and C. S. Sherrington (Sherrington 1906).

netic approach to understand physiological processes Waldeyer’s “neurone theory,” which argued for the in-

of biological organisms. dependence of the nerve cells and the importance of

the synapses, was quickly accepted by psychologists

Warren McCulloch, Walter Pitts, and the and became the basis of the next generation of phys-

Development of Mathematical Models of the iological study of the brain. Sherrington’s work on re-

Nervous System flex arc was instrumental in convincing psychologists

that they should consider a neurophysiological ap-

Another biological application of the new information proach. McCulloch pointed explicitly to Sherrington’s

science was to the study of nerve systems, and in work as a precursor of his own research. Both men

particular to the study of the human brain. Mathe- adopted a highly idealized approach. Sherrington re-

matical models resulted, based partly on physiology alized that his model of simple reflex was not physio-

and partly on philosophy. The most famous applica- logically precise, but only a “convenient abstraction.”

tion was made in a joint paper by McCulloch and Pitts McCulloch and Pitts made a similar claim for their

(1943) in which they presented a mathematical model model of neuron nets, but their model was even less re-

of the neural networks of the brain based on Carnap’s alistic than the Sherrington model.

logical calculus and on Turing’s work on theoretical Research in physiological psychology had been car-

machines. ried out since the beginning of the century. Its impor-

The application of the information sciences to psy- tance increased rapidly in the 1930s because of two

chology was linked to several active movements within developments: the implementation of electroence-

psychology. The rise of physiological psychology, the phalography enabled researchers to make precise

development of functionalism, the growth of behav- measurements of the electrical activity of the brain;

iorism, and the infusion of materialism into the bio- and the growth of mathematical biology, especially

logical and psychological sciences all contributed to under Nicholas Rashevsky’s Chicago school, contrib-

the study by mathematical models of the functioning uted a precise, mathematical theory of the functioning

of the brain. of the brain that could be tested experimentallv.15 The ”

Physiological psychology was important to infor-

mation science because it contributed the idea that I5 The Rashevsky school published mainly in its own journal, Bul-

one can understand the brain by examining its mate- letin of Mathematical Biophysics.

Annals of the History of Computing, Volume 7, Number 2, April 1985 l 127

W. Aspray l Conceptualization of Information

concentration on the material properties of the brain, chanical thinking machines.17 Watson’s behaviorism

the emphasis on its functioning instead of on its states did not convince the majority of American psycholo-

of consciousness, and the mathematical approach of gists, but a group of dedicated behaviorists did conduct

Rashevsky all set the stage for a mathematical theory experiments using the condition-response method.

of the functioning brain as an information processor. Most influential on McCulloch and Pitts was the work

Most physiological psychologists and many other in the 1930s of the behaviorist K. S. Lashley, whose

psychologists accepted the functionalist position. In viewpoint is indicated by the following quotation.

Principles of Psychology (1890) William James argued To me the essenceof behaviorism is the belief that the

persuasively that mind should be conceived of dynam- study of man will reveal nothing except what is

ically, not structurally, and by the end of the nine- adequatelydescribablein the conceptsof mechanicsand

teenth century there was a consensus that psychology chemistry, and this study far outweighsthe questionof

should concentrate on mental activity instead of on the method by which the study is conducted. (Lashley

1923, p. 244)

states of experience. E. B. Holt took a radical-and

almost cybernetic-position16 (Holt 1915) toward psy- McCulloch was trained within this psychological

chology when he argued that consciousness is merely tradition of experimental epistemology. As an under-

a servomotor adjustment to the object under consid- graduate at Haverford and Yale, he majored in philos-

eration. As one historian of psychology has assessed ophy and psychology. He then went to Columbia,

the importance of functionalism, where he received a master’s degree in psychology for

work in experimental aesthetics. Afterward, he en-

Functionalism did not long maintain itself asa school;

tered the College of Physicians and Surgeons of Co-

but much of the emphasislived on in behaviorism . . .

lumbia University, where he studied the physiology of

and in the increasingtendency to ask lessabout

consciousness, more about activity. (Murphy 1949,p. the nervous system.

223) In 1928I was in neurology at Bellevue Hospital and in

1930at Rockland State Hospital for the Insane, but my

The importance of functionalism to information purpose(to manufacture a logic of transitive verbs)

science is clear. It concentrated on the functional never changed.It wasthen that I encounteredEilhard

operation of the brain, and represented the brain as a von Doramus,the great philosophic student of

processor (of information)-as a doer as well as a psychiatry, from whom I learned to understand the

reflector. logical difficulties of true casesof schizophrenia and the

Behaviorist psychology, by concentrating on behav- developmentof psychopathia-not merely clinically, as

ior and not consciousness, helped to break down the he had learnedthem of Berger, Birnbaum, Bumke,

distinction between the mental behavior of humans Hoche, Westphal, Kahn, and others-but as he

and the information processing of lower animals and understoodthem from his friendship with Bertrand

machines. This step assisted the acceptance of a uni- Russell,Heidegger,Whitehead, and Northrop-under

the last of whom he wrote his great unpublishedthesis,

tied theory of information processors, whether in hu- “The Logical Structure of the Mind: An Inquiry into the

mans or machines. Foundations of Psychology and Psychiatry.” It is to him

American behaviorism was a revolt against the old- and to our mutual friend, CharlesHolden Prescott, that

style, introspective psychology of Wilhelm Wundt and I am chiefly indebted for my understandingof paranoia

E. B. Titchener. As part of the general shift in scien- uera and of the possibility of making the scientific

tific attitude, there was a movement in psychology method applicableto systemsof many degreesof

toward materialism in the last half of the nineteenth freedom. (McCulloch 1965,pp. 2-3)

century. Because behavior is observable, it can be McCulloch left Rockland to return to Yale, where

subjected to scientific study. J. B. Watson, the leader he studied experimental epistemology with Dusser de

of American behaviorism, concentrated on “scientific” Barenne. Upon de Barenne’s death, he moved to the

concepts such as effector, receptor, and learning as University of Illinois Medical School in Chicago as a

opposed to the old concepts of sensation, feeling, and professor of psychiatry; he continued his work on

image (Watson 1919). Watson conceived of mental experimental epistemology and began his collabora-

functions as a type of internal behavior that could be tion with Pitts. He completed his career at the MIT

sensed by scientific probing. Turing adopted a similar Research Laboratory of Electronics, where he collab-

attitude in his unified treatment of human and me- orated with Pitts, Wiener, and others in the study of

electrical circuit theory of the brain.

i6 Of course, this is an anachronistic characterization because the

servomotor was not yet invented and the principles of cybernetics I7 This attitude is most evident in Turing’s 1950 paper, “Computing

had not yet been enunciated. Nevertheless, there is a striking Machinery and Intelligence,” but his 1937 paper, “On Computable

similarity to those later ideas. Numbers,” also suggests the view.

128 l Annals of the History of Computing, Volume 7, Number 2, April 1985

W. Aspray - Conceptualization of Information

Pitts’s background was more mathematical than problemsin hand. . . . It was [Charles] Peirce who broke

McCulloch’s. Pitts studied mathematical logic under the ice with his logic of relatives, from which springsthe

Carnap at the University of Chicago. Carnap sent pitiful beginningsof our logic of relations of two and

Pitts to see the people associated with Rashevsky’s more than two arguments.So completely had the

school of biophysics. There he met Alston House- traditional Aristotelean logic been lost that Peirce

holder, who sent him to see McCulloch. They quickly remarksthat when he wrote the Century Dictionary he

was soconfusedconcerning abduction or apagoge,and

began their joint study of the mathematical structure

induction that he wrote nonsense.. . . Frege, Peano,

of systems built out of nerve nets. Through a mutual Whitehead, Russell,Wittgenstein, followed by a host of

friend, J. Lettvin of Boston City Hospital, Pitts was lesserlights, but sparkedby many a strange character

introduced to Wiener and Rosenblueth in 1943. Later like Schroeder,Sheffer, Gadel, and company, gaveus a

the same year, Pitts accepted a permanent position at working logic of propositions. By the time I had sunk

MIT in order to work with Wiener and learn from my teeth into thesequestions,the Polish schoolwas well

him the cybernetic approach. on its way to glory. In 1923I gave up the attempt to

At that time Mr. Pitts was already thoroughly write a logic of transitive verbs and beganto seewhat I

acquaintedwith mathematical logic and neurophysiology could do with the logic of propositions. (McCulloch

but had not had the chanceto make very many 1965,pp. 7-8)

engineeringcontacts. In particular, he was not What McCulloch had in mind was a psychological,

acquaintedwith Dr. Shannon’swork, and he had not not philosophical, theory for the logic of relations.

had much experienceof the possibilitiesof electronics. Whereas a philbsopher would have attempted to con-

He wasvery much interested when I showedhim struct a formal system that mirrored typical usage of

examplesof modernvacuum tubes and explained to him

the logic of relations, McCull&h intended to develop

these were ideal meansfor realizing in the metal the

a theory that explained the psychological underpin-

equivalents of his neuronic circuits and systems.From

that time, it becameclear to us that the ultra-rapid nings, not just the formal structure.

computing machine, dependingasit doeson consecutive My object, asa psychologist,wasto invent a kind of

switching devices,must representalmost an ideal model least psychic event, or “psychon,” that would have the

of the problemsarising in the nervous system. (Wiener following properties: First it wasto be so simple an

1948,p. 22) event that it either happenedor elseit did not happen.

Second,it wasto happenonly if its bound causehad

Pitts’s work at the Research Laboratory of Elec- happened-shadesof Duns Scotus!-that is, it wasto

tronics involved studying the relation between elec- imply its temporal antecedent. Third, it wasto propose

tronic computers and the human nervous system. this to subsequentpsychons.Fourth, thesewere to be

During this time he continued to work with Mc- compoundedto produce the equivalents of more

Culloch; eventually, in 1952, McCulloch joined him at complicatedpropositionsconcerning their antecedents.

MIT. The most influential article they produced there In 1929it dawnedon me that theseevents might be

was “What the Frog’s Eye Tells the Frog’s Brain” regardedasthe all-or-none impulsesof neurons,

(Lettvin et al. 1959). combinedby convergenceupon the next neuron to yield

Early in his career, between 1919 and 1923, Mc- complexesof propositional events. During the nineteen-

Culloch worked on a problem of philosophical logic, thirties, first under influences from F. H. Pike, C. H.

Prescott, and Eilhard von Doramus,and later, Northrop,

that of creating a formal system to explain the usage

Dusserde Barenne, and a host of my friends in

of transitive verbs. While engaged in this work he neurophysiology, I beganto try to formulate a proper

became interested in a related problem involving the calculusfor these events by subscripting symbolsfor

logic of relations. As McCulloch recalled,‘& propositions (connectedby implications) with the time

The forms of the syllogismand the logic of classeswere of occurrenceof the impulsein each neuron. (McCulloch

taught, and we shall use someof their devices,but there 1965,p. 9).

wasa generalrecognition of their inadequacyto the

Technical difficulties stood in the way of Mc-

Culloch’s psychology of propositions. Then McCulloch

‘8McCulloch is not entirely accurate in his history. Augustus de

Morgan had taken the first steps toward a logic of relations in the met Pitts, who was able to provide the requisite math-

mid-nineteenth century. Godel never contributed directly to the ematical theory to resolve these problems. The result

development of a logic of propositions. His most closely related was their famous joint paper, “A Logical Calculus of

work was the completeness theorem for the predicate calculus,

completing the tie between the semantics and syntax for the pred- the Ideas Immanent in Nervous Activity” (1943). The

icate calculus to parallel the tie for the propositional calculus. paper was published in Rashevsky’s journal, the Bul-

George Boole made the first steps toward a logic of propositions in letin. of Mathematical Biophysics, where it received

the mid-1800s, and Charles Peircecleared up someof the inadequa-

cies at the end of the century (Kneale and Kneale 1962; Kline little notice from biologists and psychologists until

1972). popularized by von Neumann.

Annals of the History of Computing, Volume 7, Number 2, April 1985 * 129

W. Aspray - Conceptualization of Information

Using as axioms the rules McCulloch prescribed for reverberate, we had set up a theory of memory-to

his psychons and as logical framework an amalgam of which every other form of memory is but a surrogate

Carnap’s logical calculus and Russell and Whitehead’s requiring reactivation of a trace. (McCulloch 1965,p. 10)

Principia Mathematics, McCulloch and Pitts pre- In a series of papers (McCulloch 1945; 1947; 1950;

sented a logical model of neuron nets showing their 1952), McCulloch and Pitts carried out the mathe-

functional similarity to Turing’s computing ma- matical details of this theory of the mind, providing,

chines.lg for example, a model of how humans believe universal

What Pitts and I had shown wasthat neuronsthat (“for all”) statements.

could be excited or inhibited, given a proper net, could The precision of their mathematical theory offered

extract any configuration of signalsin its input. Because opportunity for additional speculation about the func-

the form of the entire argument was strictly logical, and tioning of the mind. This precision was accomplished

becauseGodel had arithmetized logic, we had proved, in at the expense of a detailed theory of the biological

substance,the equivalenceof all generalTuring structure and functioning of the individual nerve cells.

machines-man-made or begotten. (McCulloch 1965,pp. Similar to Sherrington’s model of the simple reflex,

9-10) which he had called a “convenient abstraction,”

As von Neumann emphasized in his General and McCulloch and Pitts’s neurons were idealized neu-

Logical Theory of Automata (1951), the essence of rons. One knew what the input and output would be;

McCulloch and Pitts’s contribution was to show how but the neurons themselves were “black boxes,” closed

any functioning of the brain that could be described to inspection of their internal structure and operation.

clearly and unambiguously in a finite number of words Practicing physiologists objected21 (von Neumann

could be expressed as one of their formal neuron nets. 1951) that not only was this model of neurons incom-

The close relationship between Turing machines and plete, it was inconsistent with experimental knowl-

neuron nets was one of the goals of the authors; by edge. They argued further that the simplicity of the

1945 they understood that neuron nets, when supplied idealized neuron was so misleading as to vitiate any

with an appropriate analog of Turing’s infinite tape, positive results the work might achieve. Von Neu-

were equivalent to Turing machines.20 With the Tur- mann popularized McCulloch and Pitts’s work among

ing machines providing an abstract characterization biologists, arguing that the simple, idealized nature of

of thinking in the machine world and McCulloch and the model was necessary to understand the function-

Pitts’s neuron nets providing one in the biological ing of these neurons, and contending that once this

world, the equivalence result suggested a unified the- was understood, biologists could account more easily

ory of thought that broke down barriers between the for secondary effects related to physiological details

physical and biological worlds. of the neurons.

Their paper not only pointed out the similarity in McCulloch and Pitts were able to use their mathe-

abstract function between the human brain and com- matical theory to analyze a number of aspects of the

puting devices; it also provided a way of conceiving of functioning of the human nervous system. A 1950

the brain as a machine in a more precise way than article by McCulloch, entitled “Machines that Think

had been available before. It provided a means for and Want” (McCulloch 1950b), provided a prospective

further study of the brain, starting from a precise of the possible applications of their theory, emphasiz-

mathematical formulation. ing especially the application of cybernetic techniques

But we had done more than this, thanks to Pitts’ to understanding the functioning of the central nerv-

modulo mathematics. In looking into circuits composed ous system. Typical of McCulloch and Pitts’s appli-

of closedpaths of neuronswherein signalscould cation of information theory to physiology was a joint

project in 1947 on prosthetic devices designed to en-

I9It is more properto say that they had shown an exact correspond-

ence between the class of Turing machines and the class of neural able the blind to read by ear (Wiener 1948, pp. 31-32;

nets, such that each Turing machine corresponded to a functionally de Latil 1956, pp. 12-13). The problem resolved into

equivalent neural net, and vice versa. Later, McCulloch and Pitts one of pattern recognition involving the translation of

explicitly stated that Turing’s work on computable numbers was

their inspiration for the neural net paper. See McCulloch’s comment

letters of various sizes into particular sounds. Using

in the discussion following von Neumann (1951). McCulloch also cybernetic techniques, McCulloch and Pitts produced

alludes to this (1965, p. 9). a theory correlating the anatomy and the physiology

*’ Arthur Burks has pointed out that because the threshold functions

are all positive, the addition of a clocked source pulse will make the

of the visual cortex that also drew a similarity between

system universal (private communication). Von Neumann (1945) human vision and television.

was the first to see that the switches and delays of a stored-program