Computer Science

Uploaded by Raghu Nandan

Computer science (or computing science) is the study of the theoretical foundations of information and computation and of their implementation and application in computer systems.[1][2][3] Computer science has many subfields; some emphasize the computation of specific results (such as computer graphics), while others relate to properties of computational problems (such as computational complexity theory). Still others focus on the challenges in implementing computations. For example, programming language theory studies approaches to describing computations, while computer programming applies specific programming languages to solve specific computational problems. A further subfield, human-computer interaction, focuses on the challenges in making computers and computations useful, usable and universally accessible to people.

History

The early foundations of what would become computer science predate the invention of the modern digital computer. Machines for calculating fixed numerical tasks, such as the abacus, have existed since antiquity. Wilhelm Schickard built the first mechanical calculator in 1623.[4] Charles Babbage designed a difference engine in Victorian times (between 1837 and 1901),[5] helped by Ada Lovelace.[6] Around 1900, a forerunner of the IBM corporation sold punch-card machines.[7] However, all of these machines were constrained to perform a single task, or at best some subset of all possible tasks.

During the 1940s, as newer and more powerful computing machines were developed, the term computer came to refer to the machines rather than their human predecessors. As it became clear that computers could be used for more than just mathematical calculations, the field of computer science broadened to study computation in general. Computer science began to be established as a distinct academic discipline in the 1960s, with the creation of the first computer science departments and degree programs.[8] Since practical computers became available, many applications of computing have become distinct areas of study in their own right.

Although many initially believed that computers themselves could not be a scientific field of study, by the late 1950s the idea had gradually gained acceptance in the wider academic community.[9] The now well-known IBM brand formed part of the computer science revolution during this time. IBM (short for International Business Machines) released the IBM 704 and later the IBM 709, computers that were widely used during this exploratory period. "Still, working with the IBM [computer] was frustrating...if you had misplaced as much as one letter in one instruction, the program would crash, and you would have to start the whole process over again".[9] During the late 1950s, the computer science discipline was still very much in its developmental stages, and such issues were commonplace.

The usability and effectiveness of computing technology have improved significantly over time. Modern society has seen a significant shift from computers being used solely by experts or professionals to a far wider user base. By the 1990s, computers had become the norm in everyday life. During this time data entry was a primary use of computers, as many businesses sought to streamline their practices with them. This also had the additional benefit of removing the need for large amounts of documentation and file records, which consumed much-needed physical space within offices.

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (894)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- LUMP SUM PRICE BREAKDOWN FOR SITE CONSTRUCTIONDocument117 pagesLUMP SUM PRICE BREAKDOWN FOR SITE CONSTRUCTIONgunturlp100% (2)

- Donald Trump Biography - Businessman and President-electDocument1 pageDonald Trump Biography - Businessman and President-electRaghu NandanNo ratings yet

- 1996 Sergey Brin Larry PageDocument1 page1996 Sergey Brin Larry PageRaghu NandanNo ratings yet

- College Student Data ShortDocument71 pagesCollege Student Data ShortRaghu NandanNo ratings yet

- The Divine CosmosDocument281 pagesThe Divine CosmosRaghu Nandan100% (4)

- Passport Application FormDocument6 pagesPassport Application FormAshwini100% (2)

- Technical SeminarDocument15 pagesTechnical SeminarRaghu NandanNo ratings yet

- Chap 1 Intro To Systems Analysis and Design 2Document50 pagesChap 1 Intro To Systems Analysis and Design 2Hai MenNo ratings yet

- 3905 - Engl - 2012 - 11 KTADocument61 pages3905 - Engl - 2012 - 11 KTAkartoon_38No ratings yet

- C3 - Process Selection, Design, and AnalysisDocument54 pagesC3 - Process Selection, Design, and AnalysisMinh Thuận VõNo ratings yet

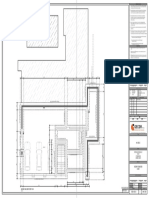

- 00 Basement Foundation LayoutDocument1 page00 Basement Foundation LayoutrendaninNo ratings yet

- CDOT Bridge Design Manual - 20180101Document431 pagesCDOT Bridge Design Manual - 20180101albertoxina100% (1)

- Offshore Process Design StandardsDocument10 pagesOffshore Process Design Standardssri9987No ratings yet

- Online ShoppingDocument54 pagesOnline ShoppingVaryam Pandey50% (6)

- Silangan Fit OutDocument5 pagesSilangan Fit OutNova CastyNo ratings yet

- Emergency Generator Sizing and Motor Starting Analysis: Mukesh Kumar Kirar, Ganga AgnihotriDocument6 pagesEmergency Generator Sizing and Motor Starting Analysis: Mukesh Kumar Kirar, Ganga Agnihotribalwant_negi7520No ratings yet

- P 192.481 Visually Inspect For Atmospheric Corrosion RevisionsDocument2 pagesP 192.481 Visually Inspect For Atmospheric Corrosion RevisionsahmadlieNo ratings yet

- Concrete Problems in AI SafetyDocument29 pagesConcrete Problems in AI SafetyCris D PutnamNo ratings yet

- Column Design - Staad ReactionsDocument12 pagesColumn Design - Staad ReactionsNitesh SinghNo ratings yet

- Data Structures Question BankDocument32 pagesData Structures Question BankMuhammad Anas RashidNo ratings yet

- Fundamentals of Earthquake Engineering: Those Familiar With Newmark - S and RosenDocument1 pageFundamentals of Earthquake Engineering: Those Familiar With Newmark - S and RosenBladimir Jesús Ccama Cutipa0% (1)

- List of UniversitasDocument40 pagesList of UniversitasMuhamad Erlangga SaputraNo ratings yet

- Hvac Design Manual: First EditionDocument124 pagesHvac Design Manual: First EditionNathan Tom100% (7)

- Diagnostic code info for Caterpillar enginesDocument3 pagesDiagnostic code info for Caterpillar enginesevanNo ratings yet

- VIT Campus, Jaipur: Leading Engineering College of Rajasthan/ Best Infrastructure & PlacementDocument85 pagesVIT Campus, Jaipur: Leading Engineering College of Rajasthan/ Best Infrastructure & PlacementOnkar BagariaNo ratings yet

- NOVA Servo CatalogueDocument7 pagesNOVA Servo CatalogueDip Narayan BiswasNo ratings yet

- Railway Over Bridge Training ReportDocument50 pagesRailway Over Bridge Training ReportMitulChopra100% (1)

- BbsDocument72 pagesBbsAkd DeshmukhNo ratings yet

- Procon Boiler Leak Detection Brochure 8 Page Iss. 4 April 17Document4 pagesProcon Boiler Leak Detection Brochure 8 Page Iss. 4 April 17Watchara ThepjanNo ratings yet

- Maintenance Instrument Technician CV - Google SearchDocument26 pagesMaintenance Instrument Technician CV - Google SearchSunket PatelNo ratings yet

- Httpbarbianatutorial.blogspot.comDocument180 pagesHttpbarbianatutorial.blogspot.comsksarkar.barbianaNo ratings yet

- New EM Graduate ProgramDocument18 pagesNew EM Graduate ProgramabofahadNo ratings yet

- OEE Primer Understanding OverDocument483 pagesOEE Primer Understanding OverJorge Cuadros Blas100% (1)

- Lean Models Classification Towards A Holistic ViewDocument9 pagesLean Models Classification Towards A Holistic ViewbayramNo ratings yet