Memo Regarding ACS-With Response

Uploaded by

aabeveridge

IMPACT OF MISCOMPUTED STANDARD ERRORS AND FLAWED DATA RELEASE

POLICIES ON THE AMERICAN COMMUNITY SURVEY

TO: ROBERT GROVES, DIRECTOR

FROM: ANDREW A. BEVERIDGE, PROFESSOR OF SOCIOLOGY, QUEENS COLLEGE AND

GRADUATE CENTER CUNY AND CONSULTANT TO THE NEW YORK TIMES FOR CENSUS

AND OTHER DEMOGRAPHIC ANALYSES

*

SUBJECT: IMPACT OF MISCOMPUTED STANDARD ERRORS AND FLAWED DATA RELEASE

POLICIES ON THE AMERICAN COMMUNITY SURVEY

DATE: 1/24/2011

CC: RODERICK LITTLE, ASSOCIATE DIRECTOR; CATHY MCCULLY, CHIEF, U.S. CENSUS

BUREAU REDISTRICTING DATA OFFICE

This memo is to alert the Bureau to two problems with the American Community Survey as released that

degrade the utility of the data through confusion and filtering (not releasing all data available for a specific

geography). The resultant errors could reflect poorly on the Bureau's ability to conduct the American

Community Survey and could potentially undercut support for that effort. The problems are as follows:

1. The Standard Errors, and the Margins of Error (MOE) based upon them, reported for data

in the American Community Survey are miscomputed. For situations where the usual

approximation used to compute standard errors is inappropriate (generally where a

given cell in a table makes up only a small part or a very large portion of the total) it is used

anyway. As a result, the MOEs in the standard reports imply negative numbers of people for many

cells.

2. The rules for not releasing data about specific fields or variables in the one-year and three-

year files (so-called filtering) in a given table seriously undercut the value of the one-year

and three-year ACS for many communities and areas throughout the United States by

depriving them of valuable data. (The filtering is also based in part on the miscomputed

MOEs.)

This memo discusses the issues underlying each problem. Attached to this memo are a number of items to

support and illustrate my points.

A. MISCOMPUTED STANDARD ERRORS

Background

The standard errors and MOEs reported with the American Community Survey were miscomputed

in certain situations and lead to erroneous results. When very small or very large proportions of a given

table are in a specific category then the standard errors are computed improperly. Indeed, there are a massive

number of instances where the Margins of Error (MOEs) include zero or negative counts of persons with

given characteristics. This happens even though individuals were found in the sample with specific

*

I write as a friend of the Census Bureau. Since 1993 I have served as consultant to the New York Times with regard to

the Census and other demographic matters. I use Census and ACS data in my research and have testified using Census

data numerous times in court.

Miscomputation of Standard Error and Flawed Release Rules in the ACS--2

characteristics (e.g. Non-Hispanic Asian). Obviously, if one finds any individuals of a given category in a

given area, the MOE should never be negative or include zero for that category. If it did, then the method by

which it was computed should be considered incorrect. For instance, in the case of non-Hispanic Asians in

counties, in some 742 counties where the point estimate of the number of non-Hispanic Asians is greater

than zero, the published MOE implies that the actual number in those counties could be negative, and in 40

additional cases the MOE includes zero. Similarly, for the 419 counties where the point estimate is zero, the

published MOE implies that there could be a negative number of Non-Hispanic Asians in the county. (See

Appendix 1 for these and other results. Here I have only shared results from Table B03002. Many other

tables exhibit similar issues.) This problem permeates the data released from the 2005-2009 ACS, and has

contributed to filtering issues in the three-year and one-year files, which will be discussed later in this memo.

Issues with the ACS Approach to Computing Standard Error

This problem appears to be directly related to the method by which Standard Errors and MOEs are

computed for the American Community Survey, especially for situations where a given data field constitutes a

very large or very small proportion of a specific table. Chapter 12 of the ACS Design and Methodology describes in

some detail the methods used to compute standard error in the ACS (see Appendix 2). It appears that the

problems with using the normal approximation or Wald approximation in situations with large or small

proportions are ignored. This is especially surprising given the fact that the problems of using a normal

approximation to the binomial distribution under these conditions are well known. I have attached an article

that surveys some of these issues. (See Appendix 3, Lawrence D. Brown, T. Tony Cai and Anirban

DasGupta, "Interval Estimation for a Binomial Proportion," Statistical Science 16:2 (2001): pp. 101-133.) The

article also includes responses from several researchers who have tackled this problem. Indeed, even

Wikipedia has references to this problem in its entry regarding the Binomial Proportion. Much of the

literature makes the point that even in conditions where the issue is not a large or small proportion, the

normal approximation may result in inaccuracies. Some standard statistical packages (e.g., SAS, SUDAAN)

have implemented several of the suggested remedies. Indeed, some software programs even warn users when

the Wald or normal approximation should not be used.

It is true that on page 12-6 of ACS Design and Methodology the following statement seems to indicate that the

Census Bureau recognizes the problem (similar statements are in other documents regarding the ACS):

Users are cautioned to consider logical boundaries when creating confidence bounds from

the margins of error. For example, a small population estimate may have a calculated lower

bound less than zero. A negative number of people does not make sense, so the lower

bound should be set to zero instead. Likewise, bounds for percents should not go below

zero percent or above 100 percent.

In fact, this means that it was obvious to the bureau that the method for computing standard errors and

MOEs resulted in errors in certain situations, but nothing, so far, has been done to remedy the problem.

Furthermore, in American FactFinder, MOEs that include negative numbers abound, even where respondents

were found in the area. (See Appendix 4 for an example.) It is equally problematic to have the MOE

include zero when the sample found individuals or households with a given characteristic. I should note that

exact confidence intervals for binomial proportions and appropriate approximations under these conditions

are asymmetric, and never include zero or become negative. Therefore, the ACS's articulated (but not

implemented) MOE remedy of setting the lower bound to zero would not be a satisfactory solution. Rather,

the method of computation must be changed to reflect the accepted statistical literature and statistical practice

by major statistical packages and to guard against producing data that are illogical and thus likely to be

misunderstood and criticized.
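The contrast between the Wald interval and an asymmetric alternative can be sketched in a few lines. This is only an illustration under simplifying assumptions: it treats the data as a simple random sample (the ACS uses a complex design with replicate-based variances), it uses the Wilson score interval as one of the alternatives surveyed by Brown, Cai and DasGupta, and the sample size and count are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def wald_interval(p_hat, n, conf=0.90):
    """Normal-approximation (Wald) interval for a proportion."""
    z = NormalDist().inv_cdf(1 - (1 - conf) / 2)
    half = z * sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - half, p_hat + half


def wilson_interval(p_hat, n, conf=0.90):
    """Wilson score interval; asymmetric around p_hat for extreme proportions."""
    z = NormalDist().inv_cdf(1 - (1 - conf) / 2)
    denom = 1 + z * z / n
    centre = (p_hat + z * z / (2 * n)) / denom
    half = z * sqrt(p_hat * (1 - p_hat) / n + z * z / (4 * n * n)) / denom
    return centre - half, centre + half


# Hypothetical example: 2 people with a characteristic among 400 sampled.
n, x = 400, 2
p_hat = x / n
wald_lo, wald_hi = wald_interval(p_hat, n)
wilson_lo, wilson_hi = wilson_interval(p_hat, n)

print(f"Wald:   ({wald_lo:.4f}, {wald_hi:.4f})")      # lower bound is below zero
print(f"Wilson: ({wilson_lo:.4f}, {wilson_hi:.4f})")  # lower bound stays above zero
```

With these hypothetical inputs the Wald lower bound falls below zero even though two people were observed, while the Wilson lower bound stays positive. Clipping the Wald bound at zero would remove the negative value but would still leave zero inside the interval.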


Consequences of the Miscomputation

There are serious consequences stemming from this miscomputation:

1. The usefulness, accuracy and reliability of ACS data may be thrown into question. With the

advent of the ACS 2005-2009 data, many of the former uses of the Census long-form will now

depend upon the ACS five year file, while others could be based upon the one-year and three-year

files. One of these uses is in the contentious process of redistricting Congress, state legislatures and

local legislative bodies. In any area where the Voting Rights Act provisions are used to assess

potential districting plans, Citizens of Voting Age Population (CVAP) numbers need to be computed

for various cognizable groups, including Hispanics, Asian and Pacific Islanders, Non-Hispanic White

and Non-Hispanic Black, etc. Since citizenship data is not collected in the decennial census, such

data, which used to come from the long form, must now be produced from the 2005-2009 ACS. In

2000 the Census Bureau created a special tabulation (STP76) based upon the long form to report

CVAP by group for the voting age population to help in the computation of the so-called effective

majority. I understand that the Department of Justice has requested a similar file based upon the

2005 to 2009 ACS. Unless the standard error for the ACS is correctly computed, I envision that

during the very contentious and litigious process of redistricting, someone could allege that the ACS

is completely without value for estimating CVAP due to its flawed standard errors and MOEs, and

therefore is not useful for redistricting. I am sure that somewhere a redistricting expert would testify

to that position if the current standard error and MOE computation procedure were maintained.

This could lead to a serious public relations and political problem, not only for the ACS, but for

census data generally.

When a redistricting expert suggested this exact scenario to me, I became convinced that I

should formally bring these issues to the Bureau's attention. Given the fraught nature of the

politics over the ACS and census data generally, I think it is especially important that the

massive number of MOEs that include negative or zero people be addressed

immediately by choosing an appropriate method to compute MOEs and implementing it in

the circumstances discussed above.

I should note that in addition to redistricting, the ACS data will be used for the following:

1. Equal employment monitoring using an Equal Employment Opportunity

Commission File, which includes education and occupation data, so it must be

based upon the ACS.

2. Housing availability and affordability studies using a file created for the Housing and

Urban Development (HUD) called the Comprehensive Housing Affordability

Strategy (CHAS) file. This file also requires ACS data since it includes several

housing characteristics and cost items.

3. Language assistance assessments for voting by the Department of Justice, which is

another data file that can only be created from the ACS because it is based upon the

report of the language spoken at home.

Because the ACS provides richer data on a wider variety of topics than the decennial census, there

are a multitude of other uses for the ACS in planning transportation, school enrollment, districting

and more where the MOEs would be a significant issue. In short, the miscomputed MOEs are likely

to cause local and state government officials, private researchers, and courts of law to use the ACS

less effectively and less extensively, or to stop using it altogether.


B. FLAWED RELEASE POLICIES FOR DATA TABLES IN THE ONE-YEAR AND THREE-

YEAR ACS FILES

Background

Having yearly and tri-yearly data available makes the ACS potentially much more valuable than the infrequent

Census long form. However, much of that added value has been undercut by the miscomputation of MOEs

coupled with disclosure rules for data in the one-year and three-year ACS files. This has meant that

important data for many communities and areas have been filtered, that is, not released. The general rule,

of course, is that data are released for areas with a population of 65,000 or greater for the one-year file and for

areas with a population of 20,000 or greater for the three-year files. However, the implementation of the so-

called filtering rule, used to prevent unreliable statistical data from being released, has had the practical

effect of blocking the release of much important data.

As Chapter 13 of the ACS Design and Methodology states:

The main data release rule for the ACS tables works as follows. Every detailed table consists

of a series of estimates. Each estimate is subject to sampling variability that can be

summarized by its standard error. If more than half of the estimates in the table are not

statistically different from 0 (at a 90 percent confidence level), then the table fails. Dividing

the standard error by the estimate yields the coefficient of variation (CV) for each estimate.

(If the estimate is 0, a CV of 100 percent is assigned.) To implement this requirement for

each table at a given geographic area, CVs are calculated for each table's estimates, and the

median CV value is determined. If the median CV value for the table is less than or equal to

61 percent, the table passes for that geographic area and is published; if it is greater than 61

percent, the table fails and is not published. (Chapter 13 of the ACS Design and Methodology,

p. 13-7, see Appendix 5.)
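The rule quoted above can be sketched as a short function. The 61 percent threshold lines up with the 90 percent test in the quotation: an estimate is not statistically different from zero when the estimate divided by its standard error is below 1.645, that is, when the CV exceeds 1/1.645, or about 0.61. The function name and the two sets of cell values below are hypothetical and chosen only to show how the rule behaves; they are not ACS figures.

```python
from statistics import median


def table_passes(estimates, std_errors, max_median_cv=0.61):
    """Apply the median-CV release rule as described in the quoted passage.

    CV = standard error / estimate; a zero estimate is assigned a CV of 100 percent.
    The table passes when the median CV is at most 61 percent.
    """
    cvs = [1.0 if est == 0 else se / est for est, se in zip(estimates, std_errors)]
    med = median(cvs)
    return med <= max_median_cv, med


# Hypothetical area dominated by one group: most cells are zero or tiny.
homogeneous_est = [100000, 98500, 97000, 900, 0, 0, 0, 0, 600]
homogeneous_se = [1500, 1600, 1700, 400, 75, 75, 75, 75, 420]

# Hypothetical diverse area: every cell has a sizeable estimate.
diverse_est = [100000, 60000, 30000, 15000, 5000, 8000, 2000, 40000, 1200]
diverse_se = [1500, 1400, 1200, 900, 700, 800, 600, 1300, 500]

homogeneous_ok, homogeneous_cv = table_passes(homogeneous_est, homogeneous_se)
diverse_ok, diverse_cv = table_passes(diverse_est, diverse_se)

print(homogeneous_ok, round(homogeneous_cv, 3))
print(diverse_ok, round(diverse_cv, 3))
```

In the first hypothetical area the three large cells are estimated precisely, yet the table as a whole fails because the median is taken over all cells, including the empty ones.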

Negative Consequences of the Filtering Rules

These rules on their face are flawed, since they make the release of data about non-Hispanic blacks or non-

Hispanic whites contingent on the presence of members of other groups living in the same area, such as

Hispanic Native Americans or Alaskan Natives, Hispanic Asians, or Hispanic Hawaiian Natives or other

Pacific Islanders. Therefore, for areas populated by just a few groups, the Bureau will not release the data

about any group for that area. Thus, many cities, counties and other areas will not have important data about

the population composition reported because they do not have enough different population groups living

within the specified area. Furthermore, using the CV based upon the miscomputed standard error (most

especially for the case of where few or zero individuals in the area are in a given category) means that the

likelihood of reporting a high CV is increased and even more areas will not have some data released. (As

noted in the section discussing the miscomputed standard errors, the computation of the standard error and

thus the computation of CV is flawed.)

To demonstrate the impact of this rule, I assessed the filtering for table B03002 (Hispanic status by

race) in the 2009 one-year release, using the Public Use Micro-data Areas (PUMAs). PUMAs are areas that

were designed to be used with the Public Use Micro-Data files and are required to include at least 100,000

people. Due to the Bureau's release rules, the only areas released for the one-year and three-year files that

provide complete coverage of the United States were the nation, states, and PUMAs. Using

PUMAs, it is easy to understand the impact of the filtering rules since every PUMA received data in 2009.

For the Race and Hispanic status table (B03002), the table elements include total population; total non-

Hispanic population; non-Hispanic population for the following groups: white; black; native American or

Alaskan Native; Asian; native Hawaiian or other Pacific Islander; some other race; two or more races; two

races including some other race; two races excluding some other race, and three or more races; and total

Hispanic population and then population for each of the same groups listed above but for those that are

Hispanic, such as Hispanic Asian, Hispanic native Hawaiian and other Pacific Islander, as well as Hispanic


White and Hispanic black. The table contains some 21 items, of which 3 are subtotals of other

items. (See Appendix 2 for an example.)

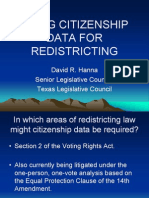

Plainly, many of the cells in this table are likely to be zero or near zero for many geographies within the

United States. For that reason, it is not surprising that 1,274 of 2,101 PUMAs (well more than half) were

filtered for the 2009 one-year file. Those not filtered are likely to be urban areas with a substantial degree

of population diversity, while those filtered are often the opposite. The map presented on the final page of

this memo shows graphically which PUMAs had table B03002 filtered. This particular example shows where

the proportion of the population that was non-Hispanic white was not revealed in this table.

Looking at the map, the problem becomes clear. Vast portions of the United States are filtered, as

represented by the green cross-hatch pattern. Parts of North Dakota and Maine for example do not have a

report on the number of non-Hispanic whites. In a similar vein, there is at least one PUMA in New York

City that is so concentrated in terms of non-Hispanic African American population that B03002 is filtered.

Conclusion and Recommendations

Based upon this analysis, I would make three recommendations to the Bureau:

1) That a User Note immediately be drafted indicating that there is a problem with the MOEs (and the

standard errors which are used to compute them) in the ACS for certain situations, and that the

Bureau will recompute them for the ACS 2005-2009 file on an expedited basis. We are basically one

month from the start of redistricting, with Virginia and New Jersey required to redraw their lines by

early summer. Both states have substantial numbers of foreign-born individuals, many of whom are

Hispanic, so the Citizens of Voting Age by group calculations are very important and dependent on

clear ACS data.

2) That the MOEs for the 2005-2009 ACS (as well as all of the one- and three-year files already issued)

be recomputed using a method that takes into account the issues surrounding the binomial

proportion. That the ACS 2005-2009 data be re-released with these new MOE files. This should

be done almost immediately for the more recent files and as soon as possible for the others.

3) That the Bureau adopt a new release policy based not upon whole tables (the specifications of which

are in any event arbitrary), but rather based upon specific variables or table cells. In this way, the

release of important data would not be subject to miscomputed standard errors, or to the size or

estimated variability of other variables or cells. It also may make sense to dispense with filtering

altogether. This policy should then be applied to previously released data, and the data should be re-

released.

The problems identified in this memo are causing serious issues regarding the ACS's use in a wide variety of

settings, and could seriously threaten the viability of this irreplaceable and vital data source if they became

widely known. As a Census and ACS user, I truly hope that steps can be taken to remedy these problems

swiftly.


MAP OF THE LOWER 48 STATES SHOWING NON-RELEASE (FILTERING) OF DATA FOR RACE AND HISPANIC

STATUS BY PUMAS. GREEN CROSS-HATCHES INDICATE NON-RELEASE

Andrew A. Beveridge <andy@socialexplorer.com>

Andrew A. Beveridge <andrew.beveridge@qc.cuny.edu> Tue, Feb 8, 2011 at 8:44 AM

To: Roderick.Little@census.gov, David Rindskopf <drindskopf@gc.cuny.edu>

Cc: robert.m.groves@census.gov, Catherine.Clark.McCully@census.gov, "sharon.m.stern" <Sharon.M.Stern@census.gov>

Bcc: j.trent.alexander@census.gov, Matthew Ericson <matte@nytimes.com>

Dear Rod:

It was good to talk to you yesterday along with David Rindskopf

regarding the issues raised in my memo regarding MOE and filtering in

the ACS Data.

We understand the fact that recomputing and re-releasing the

confidence intervals would be a very time consuming process.

Nonetheless, I do believe that some sort of statement regarding the

MOE's is necessary, particularly around negative and zero values for

groups that were found in the sample, as well as the intervals for

situations where no subjects were found.

I attach to this memo an example table from the CVAP data released on

Friday. This is for a block group in Brooklyn, and as you can see the

point estimates for seven of the 11 groups are zero, and the MOE's are

123 in every case. These groups include several that have very low

frequencies in Brooklyn (e.g., American Indian or Alaskan Native). In

the litigation context, it would be very easy to make such numbers

look absurd. I still remain concerned that this could easily harm the

Bureau's credibility, which would be very damaging given the context

of support for Bureau activities.

I also agree that doing some research into what would be an

appropriate way to view and compute Confidence Intervals for the ACS,

possibly including reference to the Decennial Census results and also

contemplating the importance of various spatial distributions would

ultimately be very helpful. At the same time, it is nonetheless the

fact that at least two of the major statistical package vendors have

implemented procedures, which take into account the issue of high or

low proportions in estimating confidence intervals from survey data.

I attach the write-up on confidence intervals for SAS's SURVEYFREQ,

one of a set of procedures that SAS has developed to handle the

analysis of data drawn from complex samples. They have the following

option for the computation of confidence limits: "PROC SURVEYFREQ

also provides the PSMALL option, which uses the alternative confidence

limit type for extreme (small or large) proportions and uses the Wald

confidence limits for all other proportions (not extreme)." (See

Attachment)

At the same time, later yesterday afternoon I was speaking with a very

well known lawyer who works on redistricting issues. He had looked at

the release of the CVAP data at the block-group level and basically

concluded that it was effectively worthless. Part of this, I think,

is due to the fact that the data such as that in the attachment, do

not look reliable on their face.

If nothing is done, it will be very difficult to defend these data in

court or use them to help in drawing districts. I remain convinced

that there also could be serious collateral damage to the Census

Bureau in general. I for one hope that this does not occur. I look

forward to your response to the issues I raised.

Very truly yours,

Andy

--

Andrew A. Beveridge

Prof of Sociology Queens College and Grad Ctr CUNY

Chair Queens College Sociology Dept

Office: 718-997-2848

Email: andrew.beveridge@qc.cuny.edu

252A Powdermaker Hall

65-30 Kissena Blvd

Flushing, NY 11367-1597

www.socialexplorer.com

Attachment_2_8_2011.doc

196K

Attachment to EMAIL to Rod Little 2/8/2011 -1- 1

Block Group 2, Census Tract 505, Kings County, New York

| LNTITLE | LNNUMBER | CVAP_EST | CVAP_MOE |
|---|---|---|---|
| Total | 1 | 625 | 241 |
| Not Hispanic or Latino | 2 | 195 | 124 |
| American Indian or Alaska Native Alone | 3 | 0 | 123 |
| Asian Alone | 4 | 35 | 55 |
| Black or African American Alone | 5 | 45 | 51 |
| Native Hawaiian or Other Pacific Islander Alone | 6 | 0 | 123 |
| White Alone | 7 | 120 | 80 |
| American Indian or Alaska Native and White | 8 | 0 | 123 |
| Asian and White | 9 | 0 | 123 |
| Black or African American and White | 10 | 0 | 123 |
| American Indian or Alaska Native and Black or African American | 11 | 0 | 123 |
| Remainder of Two or More Race Responses | 12 | 0 | 123 |
| Hispanic or Latino | 13 | 430 | 197 |
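To make the point about this table concrete, the following sketch computes the bounds implied by reading each row as estimate plus or minus MOE. The estimates and MOEs are copied from the table above; the variable names are illustrative only.

```python
# (line title, CVAP estimate, CVAP margin of error) from the block-group table
rows = [
    ("Total", 625, 241),
    ("Not Hispanic or Latino", 195, 124),
    ("American Indian or Alaska Native Alone", 0, 123),
    ("Asian Alone", 35, 55),
    ("Black or African American Alone", 45, 51),
    ("Native Hawaiian or Other Pacific Islander Alone", 0, 123),
    ("White Alone", 120, 80),
    ("American Indian or Alaska Native and White", 0, 123),
    ("Asian and White", 0, 123),
    ("Black or African American and White", 0, 123),
    ("American Indian or Alaska Native and Black or African American", 0, 123),
    ("Remainder of Two or More Race Responses", 0, 123),
    ("Hispanic or Latino", 430, 197),
]

# Rows whose implied lower bound (estimate - MOE) is a negative count of people.
negative_rows = [(title, est - moe) for title, est, moe in rows if est - moe < 0]

# Of those, rows where people were actually estimated to be present.
negative_with_people = [
    (title, est - moe) for title, est, moe in rows if est > 0 and est - moe < 0
]

for title, lower in negative_rows:
    print(f"{title}: implied lower bound {lower}")
```

Nine of the thirteen rows have an implied lower bound below zero, including two groups (Asian Alone and Black or African American Alone) with positive point estimates.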

The SURVEYFREQ Procedure

Confidence Limits for Proportions

http://support.sas.com/documentation/cdl/en/statug/63347/HTML/default/viewer.htm#statug_surveyfreq_a0000000221.htm

If you specify the CL option in the TABLES statement, PROC SURVEYFREQ computes

confidence limits for the proportions in the frequency and crosstabulation tables.

By default, PROC SURVEYFREQ computes Wald ("linear") confidence limits if you do not

specify an alternative confidence limit type with the TYPE= option. In addition to Wald

confidence limits, the following types of design-based confidence limits are available for

proportions: modified Clopper-Pearson (exact), modified Wilson (score), and logit confidence

limits.

PROC SURVEYFREQ also provides the PSMALL option, which uses the alternative confidence

limit type for extreme (small or large) proportions and uses the Wald confidence limits for all

other proportions (not extreme). For the default PSMALL= value of 0.25, the procedure

computes Wald confidence limits for proportions between 0.25 and 0.75 and computes the

alternative confidence limit type for proportions that are outside of this range. See Curtin et al.

(2006).
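The PSMALL selection logic described above amounts to a simple threshold check, sketched here in Python rather than SAS. The function name and return labels are hypothetical, and treating proportions exactly equal to 0.25 or 0.75 as "not extreme" is an assumption, since the passage does not say how the endpoints are handled.

```python
def limit_type_for(p_hat, psmall=0.25, alternative="WILSON"):
    """Pick the confidence-limit type for one proportion under a PSMALL-style rule.

    Proportions below psmall or above 1 - psmall are treated as extreme and get the
    alternative type; everything else gets Wald limits.
    Assumption: the endpoints psmall and 1 - psmall count as not extreme.
    """
    if p_hat < psmall or p_hat > 1 - psmall:
        return alternative
    return "WALD"


for p in (0.01, 0.25, 0.50, 0.75, 0.99):
    print(p, limit_type_for(p))
```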


See Korn and Graubard (1999), Korn and Graubard (1998), Curtin et al. (2006), and Sukasih and

Jang (2005) for details about confidence limits for proportions based on complex survey data,

including comparisons of their performance. See also Brown, Cai, and DasGupta (2001), Agresti

and Coull (1998) and the other references cited in the following sections for information about

binomial confidence limits.

For each table request, PROC SURVEYFREQ produces a nondisplayed ODS table, "Table

Summary," which contains the number of observations, strata, and clusters that are included in

the analysis of the requested table. When you request confidence limits, the "Table Summary"

data set also contains the degrees of freedom df and the value of $t_{df,\,\alpha/2}$ that is used to compute the

confidence limits. See Example 84.3 for more information about this output data set.

Wald Confidence Limits

PROC SURVEYFREQ computes standard Wald ("linear") confidence limits for proportions by

default. These confidence limits use the variance estimates that are based on the sample design.

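For the proportion in table cell $(r, c)$, the Wald confidence limits are computed as

$$\hat{P}_{rc} \pm t_{df,\,\alpha/2} \times \mathrm{StdErr}(\hat{P}_{rc})$$

where $\hat{P}_{rc}$ is the estimate of the proportion in table cell $(r, c)$, $\mathrm{StdErr}(\hat{P}_{rc})$ is the standard error of the

estimate, and $t_{df,\,\alpha/2}$ is the $100(1 - \alpha/2)$th percentile of the t distribution with df degrees of

freedom calculated as described in the section Degrees of Freedom. The confidence level is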

determined by the value of the ALPHA= option, which by default equals 0.05 and produces 95%

confidence limits.

The confidence limits for row proportions and column proportions are computed similarly to the

confidence limits for table cell proportions.

Modified Confidence Limits

PROC SURVEYFREQ uses the modification described in Korn and Graubard (1998) to compute

design-based Clopper-Pearson (exact) and Wilson (score) confidence limits. This modification

substitutes the degrees-of-freedom adjusted effective sample size for the original sample size in

the confidence limit computations.

The effective sample size $n_e$ is computed as

$$n_e = n / \mathit{deff}$$

where $n$ is the original sample size (unweighted frequency) that corresponds to the total domain

of the proportion estimate, and $\mathit{deff}$ is the design effect.


If the proportion is computed for a table cell of a two-way table, then the domain is the two-way

table, and the sample size is the frequency of the two-way table. If the proportion is a row

proportion, which is based on a two-way table row, then the domain is the row, and the sample

size is the frequency of the row.

The design effect for an estimate is the ratio of the actual variance (estimated based on the

sample design) to the variance of a simple random sample with the same number of observations.

See the section Design Effect for details about how PROC SURVEYFREQ computes the design

effect.
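As a rough illustration of the design effect and effective sample size defined above, the sketch below uses the textbook simple-random-sample variance p(1 - p)/n as the denominator. That formula is an assumption made here for illustration; the section Design Effect referenced above may use a different form (for example, with a finite population correction). The input values are hypothetical.

```python
def design_effect(p_hat, n, design_std_err):
    """Ratio of the design-based variance to an assumed SRS variance p(1 - p) / n."""
    srs_variance = p_hat * (1 - p_hat) / n
    return design_std_err ** 2 / srs_variance


def effective_sample_size(n, deff):
    """Sample size divided by the design effect."""
    return n / deff


# Hypothetical inputs: 10 percent of 1,000 respondents, design-based SE of 0.012.
deff = design_effect(p_hat=0.10, n=1000, design_std_err=0.012)
n_e = effective_sample_size(1000, deff)

print(round(deff, 3), round(n_e, 1))
```

With these hypothetical numbers the design effect is about 1.6, so the 1,000 respondents carry roughly the information of 625 respondents drawn by simple random sampling.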

If you do not specify the ADJUST=NO option, the procedure applies a degrees-of-freedom

adjustment to the effective sample size to compute the modified sample size. If you specify

ADJUST=NO, the procedure does not apply the adjustment and uses the effective sample size

in the confidence limit computations.

The modified sample size $n_e^*$ is computed by applying a degrees-of-freedom adjustment to the

effective sample size $n_e$ as

$$n_e^* = n_e \left( \frac{t_{(n-1),\,\alpha/2}}{t_{df,\,\alpha/2}} \right)^2$$

where df is the degrees of freedom and $t_{df,\,\alpha/2}$ is the $100(1 - \alpha/2)$th percentile of the t distribution

with df degrees of freedom. The section Degrees of Freedom describes the computation of the

degrees of freedom df, which is based on the variance estimation method and the sample design.

The confidence level is determined by the value of the ALPHA= option, which by default

equals 0.05 and produces 95% confidence limits.

The design effect is usually greater than 1 for complex survey designs, and in that case the

effective sample size is less than the actual sample size. If the adjusted effective sample size $n_e^*$ is

greater than the actual sample size $n$, then the procedure truncates the value of $n_e^*$ to $n$, as

recommended by Korn and Graubard (1998). If you specify the TRUNCATE=NO option, the

procedure does not truncate the value of $n_e^*$.

Modified Clopper-Pearson Confidence Limits

Clopper-Pearson (exact) confidence limits for the binomial proportion are constructed by

inverting the equal-tailed test based on the binomial distribution. This method is attributed to

Clopper and Pearson (1934). See Leemis and Trivedi (1996) for a derivation of the distribution

expression for the confidence limits.

PROC SURVEYFREQ computes modified Clopper-Pearson confidence limits according to the

approach of Korn and Graubard (1998). The degrees-of-freedom adjusted effective sample size $n_e^*$

is substituted for the sample size in the Clopper-Pearson computation, and the adjusted

effective sample size times the proportion estimate is substituted for the number of positive


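responses. (Or if you specify the ADJUST=NO option, the procedure uses the unadjusted

effective sample size $n_e$ instead of $n_e^*$.)

The modified Clopper-Pearson confidence limits for a proportion ($P_L$ and $P_U$) are computed as

$$P_L = \left( 1 + \frac{n_e^* - \hat{p}\, n_e^* + 1}{\hat{p}\, n_e^* \; F\!\left(\alpha/2,\; 2\hat{p}\, n_e^*,\; 2(n_e^* - \hat{p}\, n_e^* + 1)\right)} \right)^{-1}$$

$$P_U = \left( 1 + \frac{n_e^* - \hat{p}\, n_e^*}{(\hat{p}\, n_e^* + 1) \; F\!\left(1 - \alpha/2,\; 2(\hat{p}\, n_e^* + 1),\; 2(n_e^* - \hat{p}\, n_e^*)\right)} \right)^{-1}$$

where $F(a, b, c)$ is the $a$th percentile of the F distribution with $b$ and $c$ degrees of freedom, $n_e^*$ is the

adjusted effective sample size, and $\hat{p}$ is the proportion estimate.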
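For readers without SAS, the idea behind the exact interval can be illustrated with the standard library alone. The sketch below is the ordinary (unmodified) Clopper-Pearson interval for an integer count from a simple random sample, found by bisection on the binomial tail probabilities. It is not the design-based modification described above, which substitutes the adjusted effective sample size and therefore needs F (or beta) quantiles for non-integer counts. The function names and example counts are hypothetical.

```python
from math import comb


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _solve_increasing(g, target, iters=60):
    """Bisection on [0, 1] for g(p) = target, where g is increasing in p."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if g(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(x, n, alpha=0.10):
    """Equal-tailed exact interval for x successes in n trials."""
    # Lower limit solves P(X >= x | p) = alpha / 2; it is 0 when x = 0.
    lower = 0.0 if x == 0 else _solve_increasing(
        lambda p: 1 - binom_cdf(x - 1, n, p), alpha / 2)
    # Upper limit solves P(X <= x | p) = alpha / 2; it is 1 when x = n.
    upper = 1.0 if x == n else _solve_increasing(
        lambda p: 1 - binom_cdf(x, n, p), 1 - alpha / 2)
    return lower, upper


zero_lo, zero_hi = clopper_pearson(0, 20)    # nobody found in a sample of 20
small_lo, small_hi = clopper_pearson(2, 400)  # 2 found in a sample of 400

print(zero_lo, round(zero_hi, 4))
print(round(small_lo, 5), round(small_hi, 5))
```

When no one is found in the sample, the interval runs from zero up to a positive upper limit rather than being centered on zero; when anyone is found, the lower limit is strictly positive.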

Modified Wilson Confidence Limits

Wilson confidence limits for the binomial proportion are also known as score confidence limits

and are attributed to Wilson (1927). The confidence limits are based on inverting the normal test

that uses the null proportion in the variance (the score test). See Newcombe (1998) and Korn and

Graubard (1999) for details.

PROC SURVEYFREQ computes modified Wilson confidence limits by substituting the degrees-

of-freedom adjusted effective sample size $n_e^*$ for the original sample size in the standard Wilson

computation. (Or if you specify the ADJUST=NO option, the procedure substitutes the

unadjusted effective sample size $n_e$.)

The modified Wilson confidence limits for a proportion are computed as

$$\left( \hat{p} + \frac{\kappa^2}{2 n_e^*} \pm \kappa \sqrt{ \frac{\hat{p}(1 - \hat{p}) + \kappa^2 / (4 n_e^*)}{n_e^*} } \right) \Bigg/ \left( 1 + \frac{\kappa^2}{n_e^*} \right)$$

where $n_e^*$ is the adjusted effective sample size and $\hat{p}$ is the estimate of the proportion. With the

degrees-of-freedom adjusted effective sample size $n_e^*$, the computation uses $\kappa = z_{\alpha/2}$. With the

unadjusted effective sample size, which you request with the ADJUST=NO option, the

computation uses $\kappa = t_{df,\,\alpha/2}$. See Curtin et al. (2006) for details.

Logit Confidence Limits

If you specify the TYPE=LOGIT option, PROC SURVEYFREQ computes logit confidence

limits for proportions. See Agresti (2002) and Korn and Graubard (1998) for more information.

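Logit confidence limits for proportions are based on the logit transformation $Y = \log\left(\hat{p} / (1 - \hat{p})\right)$.

The logit confidence limits $P_L$ and $P_U$ are computed as

$$P_L = \frac{\exp(Y_L)}{1 + \exp(Y_L)}, \qquad P_U = \frac{\exp(Y_U)}{1 + \exp(Y_U)}$$

where

$$(Y_L,\, Y_U) = \log\left( \frac{\hat{p}}{1 - \hat{p}} \right) \pm t_{df,\,\alpha/2} \times \frac{\mathrm{StdErr}(\hat{p})}{\hat{p}(1 - \hat{p})}$$

where $\hat{p}$ is the estimate of the proportion, $\mathrm{StdErr}(\hat{p})$ is the standard error of the estimate, and

$t_{df,\,\alpha/2}$ is the $100(1 - \alpha/2)$th percentile of the t distribution with df degrees of freedom. The degrees of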

freedom are calculated as described in the section Degrees of Freedom. The confidence level is

determined by the value of the ALPHA= option, which by default equals 0.05 and produces 95%

confidence limits.
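A minimal sketch of the logit limits follows, again in Python rather than SAS. It assumes a normal quantile in place of the t percentile (reasonable only when the degrees of freedom are large) and requires the estimated proportion to lie strictly between 0 and 1. The function name and the example estimate and standard error are hypothetical.

```python
from math import exp, log
from statistics import NormalDist


def logit_interval(p_hat, std_err, alpha=0.05):
    """Confidence limits built on the logit scale and transformed back.

    Assumption: a normal quantile stands in for the t percentile (large df).
    Requires 0 < p_hat < 1.
    """
    q = NormalDist().inv_cdf(1 - alpha / 2)
    y = log(p_hat / (1 - p_hat))
    half = q * std_err / (p_hat * (1 - p_hat))

    def inverse_logit(v):
        return 1 / (1 + exp(-v))

    return inverse_logit(y - half), inverse_logit(y + half)


# Hypothetical estimate: 2 percent with a standard error of 1.5 percentage points.
p_hat, std_err = 0.02, 0.015
q95 = NormalDist().inv_cdf(0.975)
wald_lower = p_hat - q95 * std_err
logit_lower, logit_upper = logit_interval(p_hat, std_err)

print(round(wald_lower, 4))
print(round(logit_lower, 4), round(logit_upper, 4))
```

Because the limits are formed on the logit scale and mapped back through the inverse logit, they always fall strictly between 0 and 1, whereas the Wald lower limit for the same hypothetical inputs is negative.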

Andrew A. Beveridge <andy@socialexplorer.com>

roderick.little@census.gov <roderick.little@census.gov> Tue, Feb 8, 2011 at 9:22 AM

To: "Andrew A. Beveridge" <andrew.beveridge@qc.cuny.edu>

Cc: andy@socialexplorer.com, Catherine.Clark.McCully@census.gov, David Rindskopf <drindskopf@gc.cuny.edu>, robert.m.groves@census.gov,

"sharon.m.stern" <Sharon.M.Stern@census.gov>

Andy and David, thanks for this. The SAS appendix looks interesting and seems to be a good start on the problem. I think I have a better idea of the main

concerns from our conversation, and will convey this to the ACS team as we consider next steps. Best, Rod

From: "Andrew A. Beveridge" <andrew.beveridge@qc.cuny.edu>

To: Roderick.Little@census.gov, David Rindskopf <drindskopf@gc.cuny.edu>

Cc: robert.m.groves@census.gov, Catherine.Clark.McCully@census.gov, "sharon.m.stern" <Sharon.M.Stern@census.gov>

Date: 02/08/2011 08:45 AM

Subject: Confidence Limits for Small and Large Proportion in ACS Data

Sent by: andy@socialexplorer.com


[attachment "Attachment_2_8_2011.doc" deleted by Roderick Little/DIR/HQ/BOC]

13.8 IMPORTANT NOTES ON MULTIYEAR ESTIMATES

While the types of data products for the multiyear estimates are almost entirely identical to those

used for the 1-year estimates, there are several distinctive features of the multiyear estimates that

data users must bear in mind.

First, the geographic boundaries that are used for multiyear estimates are always the boundary as

of January 1 of the final year of the period. Therefore, if a geographic area has gained or lost territory

during the multiyear period, this practice can have a bearing on the user's interpretation of

the estimates for that geographic area.

Secondly, for multiyear period estimates based on monetary characteristics (for example, median

earnings), inflation factors are applied to the data to create estimates that reflect the dollar values

in the final year of the multiyear period.

Finally, although the Census Bureau tries to minimize the changes to the ACS questionnaire, these

changes will occur from time to time. Changes to a question can result in the inability to build certain

estimates for a multiyear period containing the year in which the question was changed. In

addition, if a new question is introduced during the multiyear period, it may be impossible to

make estimates of characteristics related to the new question for the multiyear period.

13.9 CUSTOM DATA PRODUCTS

The Census Bureau offers a wide variety of general-purpose data products from the ACS designed

to meet the needs of the majority of data users. They contain predefined sets of data for standard

census geographic areas. For users whose data needs are not met by the general-purpose products,

the Census Bureau offers customized special tabulations on a cost-reimbursable basis

through the ACS custom tabulation program. Custom tabulations are created by tabulating data

from ACS edited and weighted data files. These projects vary in size, complexity, and cost,

depending on the needs of the sponsoring client.

Each custom tabulation request is reviewed in advance by the DRB to ensure that confidentiality is

protected. The requestor may be required to modify the original request to meet disclosure avoidance

requirements. For more detailed information on the ACS Custom Tabulations program, go to

<http://www.census.gov/acs/www/Products/spec_tabs/index.htm>.

138 Preparation and Review of Data Products ACS Design and Methodology

U.S. Census Bureau

Appendix 1. Tabulation of MOEs, Including Those That Include Zero or Negative Cases for Table B03002 (Race by Hispanic Status)

Neg MOE vs EST: Total population

B03002_1_neg                Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Not Reported                3,098     96.18                  3,098                96.18
MOE Less than Estimate            123      3.82                  3,221               100.00

Neg MOE vs EST: Not Hispanic or Latino

B03002_2_neg                Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Not Reported                2,503     77.71                  2,503                77.71
MOE Greater than Estimate           2      0.06                  2,505                77.77
MOE Less than Estimate            715     22.20                  3,220                99.97
Est Zero, MOE Positive              1      0.03                  3,221               100.00

Neg MOE vs EST: Not Hispanic or Latino: White alone

B03002_3_neg                Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Greater than Estimate           4      0.12                      4                 0.12
MOE Less than Estimate          3,215     99.81                  3,219                99.94
Est Zero, MOE Positive              2      0.06                  3,221               100.00

Neg MOE vs EST: Not Hispanic or Latino: Black or African American alone

B03002_4_neg                Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                24      0.75                     24                 0.75
MOE Greater than Estimate         429     13.32                    453                14.06
MOE Less than Estimate          2,473     76.78                  2,926                90.84
Est Zero, MOE Positive            295      9.16                  3,221               100.00

Neg MOE vs EST: Not Hispanic or Latino: American Indian and Alaska Native alone

B03002_5_neg                Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                35      1.09                     35                 1.09
MOE Greater than Estimate         665     20.65                    700                21.73
MOE Less than Estimate          2,133     66.22                  2,833                87.95
Est Zero, MOE Positive            388     12.05                  3,221               100.00

Neg MOE vs EST: Not Hispanic or Latino: Asian alone

B03002_6_neg                Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                40      1.24                     40                 1.24
MOE Greater than Estimate         742     23.04                    782                24.28
MOE Less than Estimate          2,020     62.71                  2,802                86.99
Est Zero, MOE Positive            419     13.01                  3,221               100.00

Neg MOE vs EST: Not Hispanic or Latino: Native Hawaiian and Other Pacific Islander alone

B03002_7_neg                Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                21      0.65                     21                 0.65
MOE Greater than Estimate         893     27.72                    914                28.38
MOE Less than Estimate            419     13.01                  1,333                41.38
Est Zero, MOE Positive          1,888     58.62                  3,221               100.00

Neg MOE vs EST: Not Hispanic or Latino: Some other race alone

B03002_8_neg                Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                28      0.87                     28                 0.87
MOE Greater than Estimate       1,082     33.59                  1,110                34.46
MOE Less than Estimate            817     25.36                  1,927                59.83
Est Zero, MOE Positive          1,294     40.17                  3,221               100.00

Neg MOE vs EST: Not Hispanic or Latino: Two or more races

B03002_9_neg                Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                34      1.06                     34                 1.06
MOE Greater than Estimate         339     10.52                    373                11.58
MOE Less than Estimate          2,706     84.01                  3,079                95.59
Est Zero, MOE Positive            142      4.41                  3,221               100.00

Neg MOE vs EST: Not Hispanic or Latino: Two or more races: Two races including Some other race

B03002_10_neg               Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                25      0.78                     25                 0.78
MOE Greater than Estimate       1,019     31.64                  1,044                32.41
MOE Less than Estimate            505     15.68                  1,549                48.09
Est Zero, MOE Positive          1,672     51.91                  3,221               100.00

Neg MOE vs EST: Not Hispanic or Latino: Two or more races: Two races excluding Some other race, and three or more races

B03002_11_neg               Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                32      0.99                     32                 0.99
MOE Greater than Estimate         354     10.99                    386                11.98
MOE Less than Estimate          2,684     83.33                  3,070                95.31
Est Zero, MOE Positive            151      4.69                  3,221               100.00

Neg MOE vs EST: Hispanic or Latino

B03002_12_neg               Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Not Reported                2,503     77.71                  2,503                77.71
MOE Equals Estimate                12      0.37                  2,515                78.08
MOE Greater than Estimate         187      5.81                  2,702                83.89
MOE Less than Estimate            476     14.78                  3,178                98.67
Est Zero, MOE Positive             43      1.33                  3,221               100.00

Neg MOE vs EST: Hispanic or Latino: White alone

B03002_13_neg               Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                21      0.65                     21                 0.65
MOE Greater than Estimate         342     10.62                    363                11.27
MOE Less than Estimate          2,757     85.59                  3,120                96.86
Est Zero, MOE Positive            101      3.14                  3,221               100.00

Neg MOE vs EST: Hispanic or Latino: Black or African American alone

B03002_14_neg               Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                23      0.71                     23                 0.71
MOE Greater than Estimate         883     27.41                    906                28.13
MOE Less than Estimate            788     24.46                  1,694                52.59
Est Zero, MOE Positive          1,527     47.41                  3,221               100.00

Neg MOE vs EST: Hispanic or Latino: American Indian and Alaska Native alone

B03002_15_neg               Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                34      1.06                     34                 1.06
MOE Greater than Estimate       1,062     32.97                  1,096                34.03
MOE Less than Estimate            646     20.06                  1,742                54.08
Est Zero, MOE Positive          1,479     45.92                  3,221               100.00

Neg MOE vs EST: Hispanic or Latino: Asian alone

B03002_16_neg               Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                11      0.34                     11                 0.34
MOE Greater than Estimate         557     17.29                    568                17.63
MOE Less than Estimate            274      8.51                    842                26.14
Est Zero, MOE Positive          2,379     73.86                  3,221               100.00

Neg MOE vs EST: Hispanic or Latino: Native Hawaiian and Other Pacific Islander alone

B03002_17_neg               Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                 6      0.19                      6                 0.19
MOE Greater than Estimate         363     11.27                    369                11.46
MOE Less than Estimate             62      1.92                    431                13.38
Est Zero, MOE Positive          2,790     86.62                  3,221               100.00

Neg MOE vs EST: Hispanic or Latino: Some other race alone

B03002_18_neg               Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                24      0.75                     24                 0.75
MOE Greater than Estimate         581     18.04                    605                18.78
MOE Less than Estimate          2,320     72.03                  2,925                90.81
Est Zero, MOE Positive            296      9.19                  3,221               100.00

Neg MOE vs EST: Hispanic or Latino: Two or more races

B03002_19_neg               Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                40      1.24                     40                 1.24
MOE Greater than Estimate         886     27.51                    926                28.75
MOE Less than Estimate          1,573     48.84                  2,499                77.58
Est Zero, MOE Positive            722     22.42                  3,221               100.00

Neg MOE vs EST: Hispanic or Latino: Two or more races: Two races including Some other race

B03002_20_neg               Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                36      1.12                     36                 1.12
MOE Greater than Estimate         975     30.27                  1,011                31.39
MOE Less than Estimate          1,263     39.21                  2,274                70.60
Est Zero, MOE Positive            947     29.40                  3,221               100.00

Neg MOE vs EST: Hispanic or Latino: Two or more races: Two races excluding Some other race, and three or more races

B03002_21_neg               Frequency   Percent   Cumulative Frequency   Cumulative Percent
MOE Equals Estimate                32      0.99                     32                 0.99
MOE Greater than Estimate       1,023     31.76                  1,055                32.75
MOE Less than Estimate            906     28.13                  1,961                60.88
Est Zero, MOE Positive          1,260     39.12                  3,221               100.00

Appendix 2.

ACS Design and Methodology (Ch. 12 Revised 12/2010) Variance Estimation 12-1

U.S. Census Bureau

Chapter 12.

Variance Estimation

12.1 OVERVIEW

Sampling error is the uncertainty associated with an estimate that is based on data gathered from a sample of the population rather than the full population. Note that sample-based estimates will vary depending on the particular sample selected from the population. Measures of the magnitude of sampling error, such as the variance and the standard error (the square root of the variance), reflect the variation in the estimates over all possible samples that could have been selected from the population using the same sampling methodology.

The American Community Survey (ACS) is committed to providing its users with measures of sampling error along with each published estimate. To accomplish this, all published ACS estimates are accompanied either by 90 percent margins of error or confidence intervals, both based on ACS direct variance estimates. Due to the complexity of the sampling design and the weighting adjustments performed on the ACS sample, unbiased design-based variance estimators do not exist. As a consequence, the direct variance estimates are computed using a replication method that repeats the estimation procedures independently several times. The variance of the full sample is then estimated by using the variability across the resulting replicate estimates. Although the variance estimates calculated using this procedure are not completely unbiased, the current method produces variances that are accurate enough for analysis of the ACS data.

For Public Use Microdata Sample (PUMS) data users, replicate weights are provided to approximate standard errors for the PUMS-tabulated estimates. Design factors are also provided with the PUMS data, so PUMS data users can compute standard errors of their statistics using either the replication method or the design factor method.

12.2 VARIANCE ESTIMATION FOR ACS HOUSING UNIT AND PERSON ESTIMATES

Unbiased estimates of variances for ACS estimates do not exist because of the systematic sample design, as well as the ratio adjustments used in estimation. As an alternative, ACS implements a replication method for variance estimation. An advantage of this method is that the variance estimates can be computed without consideration of the form of the statistics or the complexity of the sampling or weighting procedures, such as those being used by the ACS.

The ACS employs the Successive Differences Replication (SDR) method (Wolter, 1984; Fay & Train, 1995; Judkins, 1990) to produce variance estimates. It has been the method used to calculate ACS estimates of variances since the start of the survey. The SDR was designed to be used with systematic samples for which the sort order of the sample is informative, as in the case of the ACS's geographic sort. Applications of this method were developed to produce estimates of variances for the Current Population Survey (U.S. Census Bureau, 2006) and Census 2000 Long Form estimates (Gbur & Fairchild, 2002).

In the SDR method, the first step in creating a variance estimate is constructing the replicate factors. Replicate base weights are then calculated by multiplying the base weight for each housing unit (HU) by the factors. The weighting process then is rerun, using each set of replicate base weights in turn, to create final replicate weights. Replicate estimates are created by using the same estimation method as the original estimate, but applying each set of replicate weights instead of the original weights. Finally, the replicate and original estimates are used to compute the variance estimate based on the variability between the replicate estimates and the full sample estimate.

The following steps produce the ACS direct variance estimates:

1. Compute replicate factors.

2. Compute replicate weights.

3. Compute variance estimates.

Replicate Factors

Computation of replicate factors begins with the selection of a Hadamard matrix of order R (a multiple of 4), where R is the number of replicates. A Hadamard matrix H is a k-by-k matrix with all entries either 1 or -1, such that H'H = kI (that is, the columns are orthogonal). For ACS, the number of replicates is 80 (R = 80). Each of the 80 columns represents one replicate.

Next, a pair of rows in the Hadamard matrix is assigned to each record (HU or group quarters (GQ) person). An algorithm is used to assign two rows of an 80 x 80 Hadamard matrix to each HU. The ACS uses a repeating sequence of 780 pairs of rows in the Hadamard matrix to assign rows to each record, in sort order (Navarro, 2001a). The assignment of Hadamard matrix rows repeats every 780 records until all records receive a pair of rows from the Hadamard matrix. The first row of the matrix, in which every cell is always equal to one, is not used.

The replicate factor for each record then is determined from these two rows of the 80 x 80 Hadamard matrix. For record i (i = 1, ..., n, where n is the sample size) and replicate r (r = 1, ..., 80), the replicate factor is computed as:

$$f_{i,r} = 1 + 2^{-3/2}\, a_{R1_i,r} - 2^{-3/2}\, a_{R2_i,r}$$

where R1i and R2i are respectively the first and second row of the Hadamard matrix assigned to the i-th HU, and $a_{R1_i,r}$ and $a_{R2_i,r}$ are respectively the matrix elements (either 1 or -1) from the Hadamard matrix in rows R1i and R2i and column r. Note that the formula for $f_{i,r}$ yields replicate factors that can take one of three approximate values: 1.7, 1.0, or 0.3. That is:

If $a_{R1_i,r} = +1$ and $a_{R2_i,r} = +1$, the replicate factor is 1.
If $a_{R1_i,r} = -1$ and $a_{R2_i,r} = -1$, the replicate factor is 1.
If $a_{R1_i,r} = +1$ and $a_{R2_i,r} = -1$, the replicate factor is approximately 1.7.
If $a_{R1_i,r} = -1$ and $a_{R2_i,r} = +1$, the replicate factor is approximately 0.3.

The expectation is that 50 percent of replicate factors will be 1, and the other 50 percent will be evenly split between 1.7 and 0.3 (Gunlicks, 1996).

The following example demonstrates the computation of replicate factors for a sample of size five, using a Hadamard matrix of order four:

Table 12.1 presents an example of a two-row assignment developed from this matrix, and the values of replicate factors for each sample unit.

Table 12.1 Example of Two-Row Assignment, Hadamard Matrix Elements, and Replicate Factors

Case   Row        Hadamard matrix element (a_R1i,r  a_R2i,r)          Approximate replicate factor
#(i)   R1i  R2i   Replicate 1  Replicate 2  Replicate 3  Replicate 4  f_i,1  f_i,2  f_i,3  f_i,4
1      2    3     -1  +1       +1  +1       -1  -1       +1  -1       0.3    1      1      1.7
2      3    4     +1  -1       +1  +1       -1  +1       -1  -1       1.7    1      0.3    1
3      4    2     -1  -1       +1  +1       +1  +1       -1  +1       1      1      1      0.3
4      2    3     -1  +1       +1  +1       -1  -1       +1  -1       0.3    1      1      1.7
5      3    4     +1  -1       +1  +1       -1  +1       -1  -1       1.7    1      0.3    1

Note that row 1 is not used. For the third case (i = 3), rows four and two of the Hadamard matrix are used to calculate the replicate factors. For the second replicate (r = 2), the replicate factor is computed using the values in the second column of rows four (+1) and two (+1) as follows:

$$f_{3,2} = 1 + 2^{-3/2}(+1) - 2^{-3/2}(+1) = 1$$
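The replicate factor arithmetic can be checked with a short Python sketch. The row values below are read off the Hadamard matrix element columns of Table 12.1 for cases 1 and 2 (rows two, three, and four); the names `ROW_2`, `ROW_3`, `ROW_4`, and `replicate_factors` are illustrative and are not Census Bureau code.

```python
# Rows of the order-four Hadamard matrix as they appear in Table 12.1
# (cases 1 and 2); row 1, which is all +1, is not used.
ROW_2 = (-1, +1, -1, +1)
ROW_3 = (+1, +1, -1, -1)
ROW_4 = (-1, +1, +1, -1)


def replicate_factors(first_row, second_row):
    """f_{i,r} = 1 + 2^(-3/2) * a_{R1i,r} - 2^(-3/2) * a_{R2i,r} for each replicate r."""
    c = 2 ** -1.5
    return [1 + c * a1 - c * a2 for a1, a2 in zip(first_row, second_row)]


# Case 1 is assigned rows 2 and 3; case 2 is assigned rows 3 and 4.
case_1 = [round(f, 1) for f in replicate_factors(ROW_2, ROW_3)]
case_2 = [round(f, 1) for f in replicate_factors(ROW_3, ROW_4)]
```

Rounded to one decimal place, each factor is 0.3, 1.0, or 1.7, the three approximate values named in the text.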

Replicate Weights

Replicate weights are produced in a way similar to that used to produce full sample final weights. All of the weighting adjustment processes performed on the full sample final survey weights (such as applying noninterview adjustments and population controls) also are carried out for each replicate weight. However, collapsing patterns are retained from the full sample weighting and are not determined again for each set of replicate weights.

Before applying the weighting steps explained in Chapter 11, the replicate base weight (RBW) for replicate r is computed by multiplying the full sample base weight (BW; see Chapter 11 for the computation of this weight) by the replicate factor $f_{i,r}$; that is, $RBW_{i,r} = BW_i \times f_{i,r}$, where $RBW_{i,r}$ is the replicate base weight for the i-th HU and the r-th replicate (r = 1, ..., 80).

One can elaborate on the previous example of the replicate construction using five cases and four replicates: Suppose the full sample BW values are given under the second column of the following table (Table 12.2). Then, the replicate base weight values are given in columns 7-10.

Table 12.2 Example of Computation of Replicate Base Weight Factor (RBW)

Case #  BW_i   Approximate Replicate Factor   Replicate Base Weight
               f_i,1  f_i,2  f_i,3  f_i,4     RBW_i,1  RBW_i,2  RBW_i,3  RBW_i,4
1       100    0.3    1      1      1.7       29       100      100      171
2       120    1.7    1      0.3    1         205      120      35       120
3       80     1      1      1      0.3       80       80       80       23
4       120    0.3    1      1      1.7       35       120      120      205
5       110    1.7    1      0.3    1         188      110      32       110
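The replicate base weights for case 1 can be reproduced with the following Python sketch. It assumes the unrounded factor values (about 0.2929 and 1.7071) are used before rounding the weights, which is consistent with the 29 and 171 shown for case 1; the function names are illustrative.

```python
C = 2 ** -1.5  # the constant 2^(-3/2) from the replicate factor formula


def replicate_factor(a_r1, a_r2):
    """Unrounded replicate factor from the two assigned Hadamard elements."""
    return 1 + C * a_r1 - C * a_r2


def replicate_base_weights(base_weight, element_pairs):
    """RBW_{i,r} = BW_i * f_{i,r} for each replicate r."""
    return [base_weight * replicate_factor(a1, a2) for a1, a2 in element_pairs]


# Case 1: BW = 100, Hadamard elements (a_R1, a_R2) for replicates 1 to 4.
case_1_elements = [(-1, +1), (+1, +1), (-1, -1), (+1, -1)]
case_1_rbw = [round(w) for w in replicate_base_weights(100, case_1_elements)]
```

Using the rounded factor 0.3 instead would give 30 rather than 29 for the first replicate, so the published weights appear to come from the unrounded factors.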

The rest of the weighting process (Chapter 11) then is applied to each replicate weight $RBW_{i,r}$ (starting from the adjustment for CAPI subsampling and proceeding to the population control adjustment, or raking). Basically, the weighting adjustment process is repeated independently 80 times and the $RBW_{i,r}$ is used in place of $BW_i$ (as in Chapter 11). By the end of this process, 80 final replicate weights for each HU and person record are produced.

Variance Estimates

Given the replicate weights, the computation of variance for any ACS estimate is straightforward. Suppose that $\hat{\theta}$ is an ACS estimate of any type of statistic, such as mean, total, or proportion. Let $\hat{\theta}_0$ denote the estimate computed based on the full sample weight, and $\hat{\theta}_1, \hat{\theta}_2, \ldots, \hat{\theta}_{80}$ denote the estimates computed based on the replicate weights. The variance of $\hat{\theta}_0$, $v(\hat{\theta}_0)$, is estimated as 4/80 times the sum of squared differences between each replicate estimate $\hat{\theta}_r$ (r = 1, ..., 80) and the full sample estimate $\hat{\theta}_0$. The formula is as follows [1]:

$$v(\hat{\theta}_0) = \frac{4}{80} \sum_{r=1}^{80} \left(\hat{\theta}_r - \hat{\theta}_0\right)^2$$

This equation holds for count estimates as well as any other types of estimates, including percents, ratios, and medians.
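A minimal Python sketch of the replicate variance computation follows. The function names `sdr_variance` and `sdr_standard_error` are illustrative; the multiplier 4/R is taken from the SDR description in this chapter, with R equal to the number of replicate estimates supplied.

```python
from math import sqrt


def sdr_variance(full_estimate, replicate_estimates):
    """Successive differences replication variance: (4/R) * sum of squared deviations."""
    r = len(replicate_estimates)
    return 4.0 / r * sum((rep - full_estimate) ** 2 for rep in replicate_estimates)


def sdr_standard_error(full_estimate, replicate_estimates):
    """Standard error is the square root of the replicate variance."""
    return sqrt(sdr_variance(full_estimate, replicate_estimates))


# Made-up illustration: 80 replicate estimates that each differ from the
# full sample estimate of 1,000 by exactly 5.
replicates = [1000 + (5 if r % 2 else -5) for r in range(80)]
variance = sdr_variance(1000, replicates)
```

The same function applies to any statistic, since only the full sample estimate and the replicate estimates enter the formula.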

There are certain cases, however, where this formula does not apply. The first and most important cases are estimates that are "controlled" to population totals and have their standard errors set to zero. These are estimates that are forced to equal intercensal estimates during the weighting process's raking step (for example, total population and collapsed age, sex, and Hispanic origin estimates for weighting areas). Although race is included in the raking procedure, race group estimates are not controlled; the categories used in the weighting process (see Chapter 11) do not match the published tabulation groups because of multiple race responses and the "Some Other Race" category. Information on the final collapsing of the person post-stratification cells is passed from the weighting to the variance estimation process in order to identify estimates that are controlled. This identification is done independently for all weighting areas and then is applied to the geographic areas used for tabulation. Standard errors for those estimates are set to zero, and published margins of error are set to "*****" (with an appropriate accompanying footnote).

Another special case deals with zero-estimated counts of people, households, or HUs. A direct application of the replicate variance formula leads to a zero standard error for a zero-estimated count. However, there may be people, households, or HUs with that characteristic in that area that were not selected to be in the ACS sample, but a different sample might have selected them, so a zero standard error is not appropriate. For these cases, the following model-based estimation of standard error was implemented.

For ACS data in a census year, the ACS zero-estimated counts (for characteristics included in the 100 percent census ("short form") count) can be checked against the corresponding census estimates. At least 90 percent of the census counts for the ACS zero-estimated counts should be within a 90 percent confidence interval based on our modeled standard error [2]. Let the variance of the estimate be modeled as some multiple (K) of the average final weight (for a state or the nation). That is:

$$v(0) = K \times (\text{average weight})$$

Then, set the 90 percent upper bound for the zero estimate equal to the Census count:

$$0 + 1.645 \times \sqrt{K \times (\text{average weight})} = \text{Census count}$$

Solving for K yields:

$$K = \frac{\left(\text{Census count} / 1.645\right)^2}{\text{average weight}}$$

K was computed for all ACS zero-estimated counts from 2000 which matched to Census 2000 100 percent counts, and then the 90th percentile of those Ks was determined. Based on the Census 2000 data, we use a value for K of 400 (Navarro, 2001b). As this modeling method requires census counts, the 400 value can next be updated using the 2010 Census and 2010 ACS data.

For publication, the standard error (SE) of the zero count estimate is computed as:

$$SE(0) = \sqrt{400 \times (\text{average weight})}$$

[1] A general replication-based variance formula can be expressed as

$$v(\hat{\theta}_0) = \sum_{r=1}^{R} c_r \left(\hat{\theta}_r - \hat{\theta}_0\right)^2$$

where $c_r$ is the multiplier related to the r-th replicate determined by the replication method. For the SDR method, the value of $c_r$ is 4 / R, where R is the number of replicates (Fay & Train, 1995).

[2] This modeling was done only once, in 2001, prior to the publication of the 2000 ACS data.

The average weights (the maximum of the average housing unit and average person final weights) are calculated at the state and national level for each ACS single-year or multiyear data release. Estimates for geographic areas within a state use that state's average weight, and estimates for geographic areas that cross state boundaries use the national average weight.

Finally, a similar method is used to produce an approximate standard error for both ACS zero and 100 percent estimates. We do not produce approximate standard errors for other zero estimates, such as ratios or medians.
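The model-based standard error for zero-estimated counts can be sketched in Python as follows. The function names are illustrative and the average weight of 100 is a made-up value; the relationships assumed are those stated in the text (variance equal to K times the average weight, a 90 percent upper bound of 1.645 standard errors above zero, and K = 400).

```python
from math import sqrt

Z90 = 1.645  # multiplication factor for the 90 percent confidence level


def solve_k(census_count, average_weight):
    """K such that 0 + 1.645 * sqrt(K * average_weight) equals the census count."""
    return (census_count / Z90) ** 2 / average_weight


def zero_count_se(average_weight, k=400):
    """Modeled standard error of a zero-estimated count."""
    return sqrt(k * average_weight)


# Hypothetical state with an average final weight of 100.
se_zero = zero_count_se(100)   # sqrt(400 * 100)
moe_zero = Z90 * se_zero       # implied 90 percent margin of error
```

Under this model every zero-estimated count within a state receives the same margin of error, regardless of the size of the geographic area being tabulated.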

Variance Estimation for Multiyear ACS Estimates - Finite Population Correction Factor

Through the 2008 and 2006-2008 data products, the same variance estimation methodology described above was implemented for both 1-year and 3-year estimates. No changes to the methodology were necessary due to using multiple years of sample data. However, beginning with the 2007-2009 and 2005-2009 data products, the ACS incorporated a finite population correction (FPC) factor into the 3-year and 5-year variance estimation procedures.

The Census 2000 long form, as noted above, used the same SDR variance estimation methodology as the ACS currently does. The long form methodology also included an FPC factor in its calculation. One-year ACS samples are not large enough for an FPC to have much impact on variances. However, with 5-year ACS estimates, up to 50 percent of housing units in certain blocks may have been in sample over the 5-year period. Applying an FPC factor to multi-year ACS replicate estimates will enable a more accurate estimate of the variance, particularly for small areas. It was decided to apply the FPC adjustment to 3-year and 5-year ACS products, but not to 1-year products.

The ACS FPC factor is applied in the creation of the replicate factors:

$$f^{*}_{i,r} = 1 + \left(2^{-3/2}\, a_{R1_i,r} - 2^{-3/2}\, a_{R2_i,r}\right) \sqrt{1 - \frac{n}{N}}$$

where $\sqrt{1 - n/N}$ is the FPC factor. Generically, n is the unweighted sample size, and N is the unweighted universe size. The ACS uses two separate FPC factors: one for HUs responding by mail or telephone, and a second for HUs responding via personal visit follow-up.

The FPC is typically applied as a multiplicative factor "outside" the variance formula. However, under certain simplifying assumptions, the variance using the replicate factors after applying the FPC factor is equal to the original variance multiplied by the FPC factor. This method allows a direct application of the FPC to each housing unit's or person's set of replicate weights, and a seamless incorporation into the ACS's current variance production methodology, rather than having to keep track of multiplicative factors when tabulating across areas of different sampling rates.

The adjusted replicate factors are used to create replicate base weights, and ultimately final replicate weights. It is expected that the improvement in the variance estimate will carry through the weighting, and will be seen when the final weights are used.

The ACS FPC factor could be applied at any geographic level. Since the ACS sampling rates are determined at the small area level (mainly census tracts and governmental units), a low level of geography was desirable. At higher levels, the high sampling rates in specific blocks would likely be masked by the lower rates in surrounding blocks. For that reason, the factors are applied at the census tract level.

Group quarters persons do not have an FPC factor applied to their replicate factors.
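The effect of the FPC on a replicate factor can be illustrated with the Python sketch below. It assumes the adjustment takes the form of scaling the deviation from 1 by the square root of (1 - n/N); that form, the function name `fpc_replicate_factor`, and the sample and universe sizes are assumptions made for illustration rather than a statement of the production method.

```python
from math import sqrt

C = 2 ** -1.5  # the constant 2^(-3/2) from the replicate factor formula


def fpc_replicate_factor(a_r1, a_r2, n, N):
    """Replicate factor with the deviation from 1 shrunk by sqrt(1 - n/N) (assumed form)."""
    return 1 + (C * a_r1 - C * a_r2) * sqrt(1 - n / N)


# Hypothetical tract where half of the universe was in sample (n/N = 0.5):
# the unadjusted factor of about 1.7 shrinks to 1.5.
adjusted = fpc_replicate_factor(+1, -1, n=50, N=100)
unadjusted = fpc_replicate_factor(+1, -1, n=0, N=100)
```

As the sampling fraction approaches one, the adjusted factors approach 1 and the replicate estimates converge on the full sample estimate, so the estimated variance shrinks toward zero.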

12.3 MARGIN OF ERROR AND CONFIDENCE INTERVAL

Once t he st andard errors have been comput ed, margins of error and/ or conf i dence bounds are

produced f or each est i mat e. These are the measures of overal l sampl ing error present ed al ong

wi t h each publ i shed ACS est i mat e. Al l publ i shed ACS margi ns of error and t he l ower and upper

12- 6 Vari ance Est i mat i on (Ch. 12 Revi sed 12/ 2010) ACS Desi gn and Met hodol ogy

U.S. Census Bureau

bounds of confi dence i nt erval s present ed in t he ACS dat a product s are based on a 90 percent

conf i dence l evel, whi ch i s the Census Bureau s st andard (U.S. Census Bureau, 2010b). A margi n of

error cont ains t wo component s: t he st andard error of the est i mat e, and a mul t i pli cat i on f act or

based on a chosen conf i dence l evel. For the 90 percent conf i dence l evel, t he val ue of t he

mul t i pl i cat i on f act or used by t he ACS i s 1.645. The margi n of error of an est i mat e can be

comput ed as:

where SE( ) i s t he st andard error of t he est i mat e . Gi ven t hi s margin of error, t he 90 percent

conf i dence i nt erval can be comput ed as:

That i s, t he l ower bound of t he confi dence int erval i s [ margi n of error ( ) ], and t he upper

bound of the conf i dence i nterval i s [ + margin of error ( ) ]. Roughl y speaki ng, t hi s int erval i s a

range t hat wi l l cont ai n the f ull popul at i on val ue of t he est i mat ed charact eri st i c wi t h a known

probabi l i t y.

Users are caut i oned t o consi der l ogi cal boundari es when creat ing conf i dence bounds f rom t he

margi ns of error. For exampl e, a smal l popul at i on est imat e may have a cal cul at ed l ower bound

l ess t han zero. A negat i ve number of peopl e does not make sense, so t he l ower bound shoul d be

set t o zero inst ead. Li kewi se, bounds f or percent s shoul d not go bel ow zero percent or above 100

percent . For other charact eri st i cs, l i ke i ncome, negat i ve val ues may be l egi t i mat e.

Gi ven the confi dence bounds, a margi n of error can be comput ed as t he di f ference bet ween an

est i mat e and i t s upper or l ower confi dence bounds:

Usi ng t he margin of error (as publ i shed or cal cul at ed f rom t he bounds), t he st andard error i s

obt ai ned as f ol l ows:

For ranking tables and comparison profiles, the ACS provides an indicator as to whether two estimates, $Est_1$ and $Est_2$, are statistically significantly different at the 90 percent confidence level. That determination is made by initially calculating:

$$Z = \frac{Est_1 - Est_2}{\sqrt{SE(Est_1)^2 + SE(Est_2)^2}}$$

If $Z < -1.645$ or $Z > 1.645$, the difference between the estimates is significant at the 90 percent level. Determinations of statistical significance are made using unrounded values of the standard errors, so users may not be able to achieve the same result using the standard errors derived from the rounded estimates and margins of error as published. Only pairwise tests are used to determine significance in the ranking tables; no multiple comparison methods are used.
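The pairwise comparison can be sketched as follows. The function names and the example estimates are hypothetical, and the sketch treats the two estimates as independent, which is what the sum of squared standard errors in the denominator implies.

```python
import math

Z90 = 1.645  # critical value for the 90 percent confidence level


def z_statistic(est1, se1, est2, se2):
    """Difference between two estimates divided by the SE of the difference."""
    return (est1 - est2) / math.sqrt(se1 ** 2 + se2 ** 2)


def significantly_different(est1, se1, est2, se2, critical=Z90):
    """True when Z falls outside the interval [-critical, +critical]."""
    z = z_statistic(est1, se1, est2, se2)
    return z < -critical or z > critical


# Hypothetical estimates; the SE of the difference is sqrt(60^2 + 80^2) = 100.
z_far = z_statistic(1200.0, 60.0, 1000.0, 80.0)
z_near = z_statistic(1100.0, 60.0, 1000.0, 80.0)
```

Because published standard errors are derived from rounded margins of error, a result computed this way may differ from the indicator shown in the ranking tables when Z is close to the critical value.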

12.4 VARIANCE ESTIMATION FOR THE PUMS

The Census Bureau cannot possibly predict all combinations of estimates and geography that may be of interest to data users. Data users can download PUMS files and tabulate the data to create estimates of their own choosing. Because the ACS PUMS contains only a subset of the full ACS sample, estimates from the ACS PUMS file will often be different from the published ACS estimates that are based on the full ACS sample.

Users of the ACS PUMS files can compute the estimated variances of their statistics using one of two options: (1) the replication method using replicate weights released with the PUMS data, and (2) the design factor method.

ACS Design and Methodology (Ch. 12 Revised 12/2010) Variance Estimation 12-7

U.S. Census Bureau

PUMS Replicate Variances

For the replicate method, direct variance estimates based on the SDR formula as described in Section 12.2 above can be implemented. Users can simply tabulate 80 replicate estimates in addition to their desired estimate by using the provided 80 replicate weights, and then apply the variance formula:

$$v(\hat{X}) = \frac{4}{80} \sum_{r=1}^{80} (\hat{X}_r - \hat{X})^2$$

where $\hat{X}$ is the desired estimate and $\hat{X}_r$ is the estimate tabulated with the $r$-th replicate weight.
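A minimal sketch of the replicate calculation, assuming the SDR form with a multiplier of 4/80 applied to the sum of squared deviations of the 80 replicate estimates from the full-sample estimate. The three records and their replicate weights below are synthetic and exist only to make the sketch runnable; real PUMS files supply the full weight and the 80 replicate weights on each record.

```python
import math


def tabulate(flags, weights):
    """Weighted count: sum of the weights of records where the flag is true."""
    return sum(w for flag, w in zip(flags, weights) if flag)


def sdr_variance(full_estimate, replicate_estimates):
    """Variance as 4/80 times the sum of squared replicate deviations."""
    if len(replicate_estimates) != 80:
        raise ValueError("expected 80 replicate estimates")
    squared = sum((rep - full_estimate) ** 2 for rep in replicate_estimates)
    return 4.0 * squared / 80.0


# Synthetic data: three records, two of which have the characteristic.
in_universe = [True, False, True]
full_weights = [100.0, 50.0, 200.0]
# Synthetic replicate weights: full weights scaled up or down by 10 percent.
replicate_weights = [
    [w * (1.1 if r % 2 == 0 else 0.9) for w in full_weights]
    for r in range(80)
]

full_estimate = tabulate(in_universe, full_weights)
replicate_estimates = [tabulate(in_universe, rw) for rw in replicate_weights]
variance = sdr_variance(full_estimate, replicate_estimates)
standard_error = math.sqrt(variance)
```

The same pattern applies to any statistic: compute it once with the full weight and once with each replicate weight, then pass the results to `sdr_variance`.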

PUMS Design Factor Variances

Similar to methods used to calculate standard errors for PUMS data from Census 2000, the ACS PUMS provides tables of design factors for various topics such as age for persons or tenure for HUs. For example, the 2009 ACS PUMS design factors are published at national and state levels (U.S. Census Bureau, 2010a), and were calculated using 2009 ACS data. PUMS design factors are updated periodically, but not necessarily on an annual basis. The design factor approach was developed based on a model that uses a standard error from a simple random sample as the base, and then inflates it to account for an increase in the variance caused by the complex sample design. Standard errors for almost all counts and proportions of persons, households, and HUs are approximated using design factors. For 1-year ACS PUMS files beginning with 2005, use:

$$SE(\hat{X}) = DF \times \sqrt{99 \times \hat{X} \times \left(1 - \frac{\hat{X}}{N}\right)}$$

for a total, and

$$SE(\hat{p}) = DF \times \sqrt{\frac{99}{B} \times \hat{p} \times (100 - \hat{p})}$$

for a percent, where:

$\hat{X}$ = the estimate of total or a count.

$\hat{p}$ = the estimate of a percent.

DF = the appropriate design factor based on the topic of the estimate.

N = the total for the geographic area of interest (if the estimate is of HUs, the number of HUs is used; if the estimate is of families or households, the number of households is used; otherwise the number of persons is used as N).

B = the denominator (base) of the percent.

The value 99 in the formula is the value of the 1-year PUMS FPC factor, which is computed as $(100 - f) / f$, where $f$ (given as a percent) is the sampling rate for the PUMS data. Since the PUMS is approximately a 1 percent sample of HUs, $(100 - f) / f = (100 - 1) / 1 = 99$.

For 3-year PUMS files beginning with 2005–2007, the 3 years' worth of data represent approximately a 3 percent sample of HUs. Hence, the 3-year PUMS FPC factor is $(100 - f) / f = (100 - 3) / 3 = 97 / 3$. To calculate standard errors from 3-year PUMS data, substitute 97 / 3 for 99 in the above formulas.

Similarly, 5-year PUMS files, beginning with 2005–2009, represent approximately a 5 percent sample of HUs. So, the 5-year PUMS FPC is 95 / 5 = 19, which can be substituted for 99 in the above formulas.
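The design factor calculation for 1-, 3-, and 5-year files can be sketched as below. This is an illustration only: it assumes the standard error of a total takes the form DF × sqrt(FPC × X × (1 − X/N)) and that of a percent DF × sqrt((FPC / B) × p × (100 − p)), with the FPC factors 99, 97/3, and 19 given in the text. The function names and all example values, including the design factors, are hypothetical and are not published figures.

```python
import math


def fpc_factor(sampling_rate_percent):
    """FPC factor (100 - f) / f for a sampling rate f given as a percent."""
    return (100.0 - sampling_rate_percent) / sampling_rate_percent


# Approximate PUMS sampling rates: 1, 3, and 5 percent of HUs.
FPC_BY_PERIOD = {1: fpc_factor(1.0), 3: fpc_factor(3.0), 5: fpc_factor(5.0)}


def se_total(x, n, df, years=1):
    """Approximate SE of an estimated total x within a geography of size n."""
    return df * math.sqrt(FPC_BY_PERIOD[years] * x * (1.0 - x / n))


def se_percent(p, base, df, years=1):
    """Approximate SE of an estimated percent p whose denominator is base."""
    return df * math.sqrt((FPC_BY_PERIOD[years] / base) * p * (100.0 - p))


# Hypothetical inputs: 10,000 persons out of 100,000, with DF = 1.5.
se_one_year = se_total(10_000.0, 100_000.0, df=1.5, years=1)
se_five_year = se_total(10_000.0, 100_000.0, df=1.5, years=5)
# Hypothetical percent: 50 percent of a base of 9,900, with DF = 1.2.
se_pct = se_percent(50.0, 9_900.0, df=1.2, years=1)
```

Holding the estimate and design factor fixed, the 5-year standard error is smaller than the 1-year value by a factor of sqrt(19 / 99), which reflects the larger sample only and not any change in the estimate itself.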

The design factor (DF) is defined as the ratio of the standard error of an estimated parameter (computed under the replication method described in Section 12.2) to the standard error based on a simple random sample of the same size. The DF reflects the effect of the actual sample design and estimation procedures used for the ACS. The DF for each topic was computed by modeling the relationship between the standard error under the replication method (RSE) and the standard error based on a simple random sample (SRSSE); that is, RSE = DF × SRSSE, where the SRSSE is computed as follows:

$$SRSSE(\hat{X}) = \sqrt{39 \times \hat{X} \times \left(1 - \frac{\hat{X}}{N}\right)}$$

The value 39 in the formula above is the FPC factor based on an approximate sampling fraction of 2.5 percent in the ACS; that is, (100 − 2.5) / 2.5 = 97.5 / 2.5 = 39.

The value of DF is obtained by fitting the no-intercept regression model RSE = DF × SRSSE using standard errors (RSE, SRSSE) for various published table estimates at the national and state levels.
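A no-intercept fit of RSE on SRSSE can be sketched as follows. The sketch assumes an ordinary (unweighted) least-squares fit through the origin, for which the slope is Σ(SRSSE × RSE) / Σ(SRSSE²); the text does not say whether the Census Bureau's fit is weighted. It also assumes the simple-random-sample standard error has the form sqrt(39 × X × (1 − X/N)). The function names and input values are hypothetical.

```python
import math


def srsse(x, n):
    """Simple-random-sample SE of a total, using the FPC factor 39."""
    return math.sqrt(39.0 * x * (1.0 - x / n))


def fit_design_factor(rse_values, srsse_values):
    """Least-squares slope of RSE on SRSSE with the intercept fixed at zero."""
    numerator = sum(r * s for r, s in zip(rse_values, srsse_values))
    denominator = sum(s * s for s in srsse_values)
    return numerator / denominator


# Hypothetical table estimates for one topic in a geography of 100,000 persons.
estimates = [5_000.0, 20_000.0, 40_000.0]
srs_errors = [srsse(x, 100_000.0) for x in estimates]
# Hypothetical replicate-based SEs, constructed here as exactly 1.3 * SRSSE.
replicate_errors = [1.3 * s for s in srs_errors]

design_factor = fit_design_factor(replicate_errors, srs_errors)
```

With real data the ratios RSE / SRSSE vary from one table cell to another, and the fitted slope summarizes them in a single DF for the topic.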

The values of DFs by topic can be obtained from the "PUMS Accuracy of the Data" statement that is published with each PUMS file. For example, 2009 1-year PUMS DFs can be found in U.S. Census Bureau (2010a). The documentation also provides examples on how to use the design factors to compute standard errors for the estimates of totals, means, medians, proportions or percentages, ratios, sums, and differences.

The topics for the 2009 PUMS design factors are, for the most part, the same ones that were available for the Census 2000 PUMS. We recommend that users of the design factor approach, when the estimate is a combination of two or more characteristics, use the largest DF for this combination of characteristics. The only exceptions to this are items crossed with race or Hispanic origin; for these items, the largest DF is used, after removing the race or Hispanic origin DFs from consideration.
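The selection rule can be sketched as a small lookup. The topic labels and design factor values below are hypothetical placeholders, not published figures, and the handling of an estimate that involves only race or Hispanic origin (falling back to that topic's own DF) is an assumption, since the text addresses only crossed items.

```python
# Topic labels treated as race or Hispanic origin (hypothetical labels).
RACE_OR_HISPANIC_TOPICS = {"Race", "Hispanic Origin"}


def select_design_factor(topics, design_factors):
    """Pick the largest DF among the topics, setting race/Hispanic DFs aside."""
    other = [t for t in topics if t not in RACE_OR_HISPANIC_TOPICS]
    # Assumption: if only race/Hispanic topics are involved, use their own DFs.
    pool = other if other else list(topics)
    return max(design_factors[t] for t in pool)


# Hypothetical design factors for illustration only.
hypothetical_dfs = {
    "Age": 1.1,
    "Tenure": 1.3,
    "Race": 1.8,
    "Hispanic Origin": 1.7,
}

df_age_by_tenure = select_design_factor(["Age", "Tenure"], hypothetical_dfs)
df_age_by_race = select_design_factor(["Age", "Race"], hypothetical_dfs)
df_race_only = select_design_factor(["Race"], hypothetical_dfs)
```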

12.5 REFERENCES

Fay, R., & Train, G. (1995). Aspects of Survey and Model Based Postcensal Estimation of Income and Poverty Characteristics for States and Counties. Joint Statistical Meetings: Proceedings of the Section on Government Statistics (pp. 154-159). Alexandria, VA: American Statistical Association. http://www.census.gov/did/www/saipe/publications/files/FayTrain95.pdf

Gbur, P., & Fairchild, L. (2002). Overview of the U.S. Census 2000 Long Form Direct Variance Estimation. Joint Statistical Meetings: Proceedings of the Section on Survey Research Methods (pp. 1139-1144). Alexandria, VA: American Statistical Association.

Gunlicks, C. (1996). 1990 Replicate Variance System (VAR90-20). Washington, DC: U.S. Census Bureau.

Judkins, D. R. (1990). Fay's Method for Variance Estimation. Journal of Official Statistics, 6(3), 223-239.

Navarro, A. (2001a). 2000 American Community Survey Comparison County Replicate Factors. American Community Survey Variance Memorandum Series #ACS-V-01. Washington, DC: U.S. Census Bureau.

Navarro, A. (2001b). Estimating Standard Errors of Zero Estimates. Washington, DC: U.S. Census Bureau.

U.S. Census Bureau. (2006). Current Population Survey: Technical Paper 66 - Design and Methodology. Retrieved from U.S. Census Bureau: http://www.census.gov/prod/2006pubs/tp-66.pdf

U.S. Census Bureau. (2010a). PUMS Accuracy of the Data (2009). Retrieved from U.S. Census Bureau: http://www.census.gov/acs/www/Downloads/data_documentation/pums/Accuracy/2009AccuracyPUMS.pdf

U.S. Census Bureau. (2010b). Statistical Quality Standard E2: Reporting Results. Washington, DC: U.S. Census Bureau. http://www.census.gov/quality/standards/standarde2.html

Wolter, K. M. (1984). An Investigation of Some Estimators of Variance for Systematic Sampling. Journal of the American Statistical Association, 79, 781-790.

Appendix 3.

Statistical Science

2001, Vol. 16, No. 2, 101–133

Interval Estimation for a Binomial Proportion

Lawrence D. Brown, T. Tony Cai and Anirban DasGupta

Abstract. We revisit the problem of interval estimation of a binomial proportion. The erratic behavior of the coverage probability of the standard Wald confidence interval has previously been remarked on in the literature (Blyth and Still, Agresti and Coull, Santner and others). We begin by showing that the chaotic coverage properties of the Wald interval are far more persistent than is appreciated. Furthermore, common textbook prescriptions regarding its safety are misleading and defective in several respects and cannot be trusted.

This leads us to consideration of alternative intervals. A number of natural alternatives are presented, each with its motivation and context. Each interval is examined for its coverage probability and its length. Based on this analysis, we recommend the Wilson interval or the equal-tailed Jeffreys prior interval for small n and the interval suggested in Agresti and Coull for larger n. We also provide an additional frequentist justification for use of the Jeffreys interval.

Key words and phrases: Bayes, binomial distribution, confidence intervals, coverage probability, Edgeworth expansion, expected length, Jeffreys prior, normal approximation, posterior.

1. INTRODUCTION

This article revisits one of the most basic and