1st International Conference From Scientific Computing to Computational Engineering 1st IC-SCCE Athens, 8-10 September, 2004 IC-SCCE

IMPLEMENTING MATRIX MULTIPLICATION ON AN MPI CLUSTER OF WORKSTATIONS

Theodoros Typou, Vasilis Stefanidis, Panagiotis Michailidis and Konstantinos Margaritis
Parallel and Distributed Processing Laboratory (PDP Lab), University of Macedonia, P.O. BOX 1591, 54006 Thessaloniki, Greece
e-mail: {typou, bstefan, panosm, kmarg}@uom.gr, web page: http://zeus.it.uom.gr/pdp/

Keywords: Matrix multiplication, Cluster of workstations, Message Passing Interface, Performance prediction model.

Abstract. Matrix multiplication is one of the most important computational kernels in scientific computing. Consider the matrix product C = AB, where A, B and C are matrices of size n × n. We propose four parallel matrix multiplication implementations on a cluster of workstations. These implementations are based on the master-worker model and use a dynamic block distribution scheme. Experiments are carried out using the Message Passing Interface (MPI) library on a cluster of workstations. Moreover, we propose an analytical prediction model that can be used to predict the performance metrics of the implementations on a cluster of workstations. The model has been checked against measurements and shown to predict the parallel performance accurately.

1 INTRODUCTION

Matrix multiplication is a fundamental operation in many numerical linear algebra applications. Its efficient implementation on parallel computers is an issue of prime importance when providing such systems with scientific software libraries [1, 5, 6, 7]. Consequently, considerable effort has been devoted in the past to the development of efficient parallel matrix multiplication algorithms, and this will remain a task in the future as well. Many parallel algorithms for matrix multiplication have been designed, implemented and tested on different parallel computers or clusters of workstations [8, 9, 14]. These algorithms may be roughly classified as broadcast-and-shift algorithms, initially presented in [8], and align-and-shift algorithms, initially presented in [4]. Recently, some papers [2, 12] have successfully adapted the broadcast-based algorithms to clusters of workstations. Finally, general efficient algorithms for the master-worker paradigm on heterogeneous clusters have been widely developed in [3].

In this paper we study implementations of matrix multiplication on a cluster of workstations and develop a performance prediction model for these implementations. This work differs from previous ones for two reasons. First, we develop four parallel implementations of matrix multiplication using the dynamic master-worker model; these implementations were executed using the Message Passing Interface (MPI) [10, 11] library on a cluster of homogeneous workstations. Second, we develop a heterogeneous performance model for the four implementations that is general enough to cover performance evaluation of both homogeneous and heterogeneous computations in a dedicated cluster of workstations. We therefore treat homogeneous computing as a special case of heterogeneous computing.

The rest of the paper is organized as follows. In Section 2 we briefly present the heterogeneous computing model and the metrics. In Section 3 we present a performance prediction model for estimating the performance of the four matrix multiplication implementations on a cluster of workstations. In Section 4 we discuss the validation of the performance prediction model against the experimental results. Finally, in Section 5 we give some remarks and conclusions.

2 HETEROGENEOUS COMPUTING MODEL

A heterogeneous network (HN) can be abstracted as a connected graph HN(M, C), where $M = \{M_1, M_2, \dots, M_p\}$ is a set of heterogeneous workstations (p is the number of workstations). The computation capacity of each workstation is determined by the power of its CPU, its I/O and its memory access speed. C is a standard interconnection network for workstations, such as Fast Ethernet or an ATM network, where the communication links between any pair of workstations have the same bandwidth. Based on the above definition, if a cluster consists of a set of identical workstations, the cluster is homogeneous.


2.1 Metrics

Metrics help to compare and characterize parallel computer systems. The metrics cited in this section were defined and published in a previous paper [15]. They can be roughly divided into characterization metrics and performance metrics.

2.2 Characterization metrics

To compute the power weight among workstations, an intuitive metric is defined as follows:

$$W_i(A) = \frac{\min_{1 \le j \le p} T(A, M_j)}{T(A, M_i)} \tag{1}$$

where $A$ is an application and $T(A, M_i)$ is the execution time for computing $A$ on workstation $M_i$. Formula 1 indicates that the power weight of a workstation refers to its computing speed relative to the fastest workstation in the network. The value of the power weight is less than or equal to 1. If the cluster of workstations is homogeneous, all power weights are equal to 1.

To calculate the execution time of a computational segment, the speed of the fastest workstation executing basic operations of an application, denoted by $S_f$, is measured by the following equation:

$$S_f = \frac{\Theta(c)}{t_c} \tag{2}$$

where $c$ is a computational segment, $\Theta(c)$ is a complexity function which gives the number of basic operations in a computational segment, and $t_c$ is the execution time of $c$ on the fastest workstation in the network. Using the speed of the fastest workstation, $S_f$, we can calculate the speeds of the other workstations in the system, denoted by $S_i$ ($i = 1, \dots, p$), using the computing power weight as follows:

$$S_i = S_f \cdot W_i, \quad i = 1, \dots, p \tag{3}$$

where $W_i$ is the computing power weight of $M_i$. So, by equation 3, the execution time of a segment $c$ across the heterogeneous network HN, denoted by $T_{cpu}(c, HN)$, can be represented as

$$T_{cpu}(c, HN) = \frac{\Theta(c)}{\sum_{i=1}^{p} S_i} \tag{4}$$

where $\sum_{i=1}^{p} S_i$ is the computational capacity used, which is obtained by summing the individual speeds of the workstations. Here, $T_{cpu}$ is considered the required CPU time for the segment. Furthermore, substituting $S_i = 1$ in the above equation for a dedicated cluster of homogeneous workstations, the execution time of a segment $c$ returns to the conventional form:

$$T_{cpu}(c, HN) = \frac{\Theta(c)}{p} \tag{5}$$
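The characterization metrics above can be illustrated with a small sketch. It assumes the reading of formulas 1 to 4 in which the power weight is the fastest execution time divided by the workstation's own time, and the CPU time of a segment is its operation count divided by the summed speeds. The function names and all timing values are hypothetical and serve only as an illustration.

```python
def power_weights(times):
    """Formula 1 (as read here): W_i = min_j T(A, M_j) / T(A, M_i)."""
    fastest = min(times)
    return [fastest / t for t in times]


def fastest_speed(num_ops, t_c):
    """Formula 2: S_f = (number of basic operations in c) / t_c."""
    return num_ops / t_c


def workstation_speeds(s_f, weights):
    """Formula 3: S_i = S_f * W_i."""
    return [s_f * w for w in weights]


def t_cpu(num_ops, speeds):
    """Formula 4: CPU time of a segment = operations / summed speeds."""
    return num_ops / sum(speeds)


# Hypothetical execution times (seconds) of one application on three machines.
times = [10.0, 20.0, 40.0]
weights = power_weights(times)

# Hypothetical benchmark: 1,000,000 basic operations take 10 s on the fastest machine.
s_f = fastest_speed(1_000_000, 10.0)
speeds = workstation_speeds(s_f, weights)

print(weights)                    # [1.0, 0.5, 0.25]
print(t_cpu(3_500_000, speeds))   # 20.0
```

On a homogeneous cluster every weight is 1, so all speeds equal `s_f` and the capacity is simply `p * s_f`.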

2.3 Performance metrics

Speedup is used to quantify the performance gain from a parallel computation of an application $A$ over its computation on a single machine of a heterogeneous network system. The speedup of a heterogeneous computation is given by:

$$SP(A) = \frac{\min_{1 \le j \le p} T(A, M_j)}{T(A, HN)} \tag{6}$$

where $T(A, HN)$ is the total parallel execution time for application $A$ on HN, and $T(A, M_j)$ is the execution time for $A$ on workstation $M_j$, $j = 1, \dots, p$.

Efficiency, or utilization, is a measure of the percentage of time for which a machine is usefully employed in parallel computing. The utilization of parallel computing of application $A$ on a dedicated heterogeneous network is therefore defined as follows:

$$E = \frac{SP(A)}{\sum_{j=1}^{p} W_j} \tag{7}$$

The previous formula indicates that if the speedup is larger than the system computing power, $\sum_{j=1}^{p} W_j$, the computation presents a superlinear speedup in a dedicated heterogeneous network. Further, substituting $W_j = 1$ in the above equation for a dedicated cluster of homogeneous workstations, the utilization returns to the traditional form:

$$E = \frac{SP(A)}{p} \tag{8}$$
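A short sketch of the two performance metrics may help. It assumes that speedup is measured against the fastest single workstation and that efficiency divides the speedup by the summed power weights, which reduces to division by p when all weights are 1. The function names and the timing values are hypothetical.

```python
def speedup(single_times, parallel_time):
    """Speedup: best single-workstation time over the parallel time on HN."""
    return min(single_times) / parallel_time


def efficiency(sp, weights):
    """Utilization: speedup divided by the system computing power (sum of W_j)."""
    return sp / sum(weights)


# Hypothetical heterogeneous case: two workstations with weights 1.0 and 0.5.
sp_het = speedup([100.0, 200.0], 80.0)
print(sp_het)                            # 1.25
print(efficiency(sp_het, [1.0, 0.5]))

# Hypothetical homogeneous case: four identical workstations, all weights 1.
sp_hom = speedup([100.0] * 4, 40.0)
print(sp_hom)                            # 2.5
print(efficiency(sp_hom, [1.0] * 4))     # 0.625
```

An efficiency above 1 in this sketch corresponds to the superlinear case described in the text.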

3 PERFORMANCE MODEL OF THE MATRIX IMPLEMENTATIONS

In this section, we develop an analytical performance model to describe the computational behaviour of the four parallel matrix multiplication implementations on both kinds of cluster (homogeneous and heterogeneous). We consider the matrix product C = AB, where the three matrices A, B and C are dense and of size n × n. The number of workstations in the cluster is denoted by p, and we assume that p is a power of 2. The performance modeling of the four implementations is presented in the following subsections.

3.1 Dynamic allocation of columns of the matrix B

For the analysis, we assume that the entire matrix B is stored on the local disk of the master workstation. The basic idea of this implementation is as follows. The master workstation broadcasts the matrix A by rows to all workers. It partitions the matrix B into blocks of columns, and each block is distributed dynamically to a worker. Each worker executes a sequential matrix-vector multiplication algorithm between the matrix A and the corresponding block of b columns. Finally, each worker sends back a block of b columns of the matrix C. The execution time of this dynamic implementation, called MM1, can be broken up into five terms:

$T_a$: The communication time for broadcasting the matrix A by rows to all workers involved in the matrix multiplication. We used the function MPI_Bcast to broadcast information to all workers. The size of each row of the matrix A is n*sizeof(int) bytes. Therefore, the total time $T_a$ for n rows is given by:

$$T_a = \frac{n \cdot n \cdot \mathrm{sizeof(int)}}{S_{comm}} \tag{9}$$

where $S_{comm}$ is the communication speed.

$T_b$: The total I/O time to read the columns of the matrix B, in blocks of b*n*sizeof(int) bytes, from the local disk of the master workstation, where b is the number of columns per block. The master therefore reads n²*sizeof(int) bytes of the matrix in total. The time $T_b$ is then given by:

$$T_b = \frac{n^2 \cdot \mathrm{sizeof(int)}}{S_{I/O}} \tag{10}$$

where $S_{I/O}$ is the I/O capacity of the master workstation.
$T_c$: The total communication time to send all blocks of the matrix B to the workers. The size of each block is b*n*sizeof(int) bytes. Therefore, the master sends n²*sizeof(int) bytes in total. The time $T_c$ is then given by:

$$T_c = \frac{n^2 \cdot \mathrm{sizeof(int)}}{S_{comm}} \tag{11}$$

where $S_{comm}$ is the communication speed.

$T_d$: The average computation time across the cluster. Each worker performs a matrix-vector multiplication between the matrix A and a block of the matrix B of size b*n*sizeof(int) bytes. This requires b*n² steps. The time $T_d$ is then given by:

(12)

Equation 12 is expressed in terms of the computation capacity of the cluster (homogeneous or heterogeneous) when p workstations are used. Its second term, a max term, accounts for the worst load imbalance at the end of the execution, when there are not enough blocks of the matrix left to keep all the workstations busy.

$T_e$: The communication time to receive n/b results from all workers. Each worker sends back b*n*sizeof(int) bytes, so the master receives n²*sizeof(int) bytes in total. The time $T_e$ is therefore given by:

$$T_e = \frac{n^2 \cdot \mathrm{sizeof(int)}}{S_{comm}} \tag{13}$$

where $S_{comm}$ is the communication speed.

Further, we note that in this dynamic implementation communication and computation proceed in parallel in practice. For this reason we take the maximum of the communication time, i.e. $T_c + T_e$, and the computation time, i.e. $T_d$. Therefore, the total execution time of our dynamic implementation, $T_p$, using p workstations, is given by:

$$T_p = T_a + T_b + \max\{T_c + T_e,\; T_d\} \tag{14}$$

3.2 Dynamic allocation of matrix pointers

For the analysis, we assume that the matrix B is stored on the local disk of the master workstation. Further, in this implementation we assume that the master workstation holds a pointer to the current column of the matrix B. The basic idea of the second implementation is as follows. The master workstation broadcasts the matrix A by rows to all workers. It also sends pointers into the matrix B dynamically to the workers. Each worker reads a block of b columns of the matrix B from the local disk of the master, starting from the pointer that it receives. Each worker then executes a sequential matrix-vector multiplication algorithm between the matrix A and the corresponding block of b columns. Finally, each worker sends back a block of b columns of the matrix C. The execution time of this dynamic implementation with matrix pointers, called MM2, can be broken up into five terms:

$T_a$: The communication time for broadcasting the matrix A by rows to all workers involved. This time is the same as $T_a$ of the previous dynamic implementation.

$T_b$: This term corresponds to $T_c$ of the previous implementation, but it is the total communication time to send all matrix pointers, instead of blocks of columns of the matrix B, to the workers. The time $T_b$ is therefore given by:

(15)

where $S_{comm}$ is the communication speed.

$T_c$: The total I/O time to read the matrix B, in blocks of b*n*sizeof(int) bytes, from the local disk of the master workstation.
We note that each worker reads a block of the matrix B from the local disk of the master, starting from the pointer that it receives. This time is the same as $T_b$ of the previous implementation.

$T_d$: The average computation time across the cluster. This time is the same as $T_d$ of the previous implementation.

$T_e$: The communication time to receive the results of the matrix-vector multiplications from all workers. This time is the same as $T_e$ of the previous implementation.

Since communication and computation proceed in parallel in this implementation, we take the maximum of the communication time, i.e. $T_b + T_e$, and the computation time, i.e. $T_c + T_d$. Therefore, the total execution time of our dynamic implementation, $T_p$, using p workstations, is given by:

$$T_p = T_a + \max\{T_b + T_e,\; T_c + T_d\} \tag{16}$$

3.3 Dynamic allocation of matrix pointers

For the analysis, we assume that the entire matrix B is stored on the local disks of the worker workstations. The basic idea of the third implementation is as follows. The master workstation broadcasts the matrix A by rows to all workers. It sends matrix pointers into the matrix B dynamically to the workers. Each worker reads a block of b columns of the matrix B from its own local disk, starting from the pointer that it receives. Each worker then executes a sequential matrix-vector multiplication algorithm between the matrix A and the corresponding block of b columns. Finally, each worker sends back a block of b columns of the matrix C. The execution time of this dynamic implementation with matrix pointers, called MM3, can be broken up into five terms:

$T_a$: The communication time for broadcasting the matrix A by rows to all workers involved. This time is the same as $T_a$ of the previous dynamic implementation.

$T_b$: The communication time to send all matrix pointers to all workers.
This time is the same as $T_b$ of the previous implementation.

$T_c$: The average I/O time to read the matrix B, in blocks of b*n*sizeof(int) bytes, from the local disks of the worker workstations. We note that each worker reads b*n*sizeof(int) bytes of the matrix B from its local disk, starting from the pointer that it receives. The time $T_c$ is then given by:

(17)

Equation 17 is expressed in terms of the capacity of the cluster (homogeneous or heterogeneous) when p workstations are used. Its second term, a max term, accounts for the worst load imbalance at the end of the execution, when there are not enough blocks of the matrix left to keep all the workstations busy.

$T_d$: The average computation time across the cluster. This time is the same as $T_d$ of the previous implementation.

$T_e$: The communication time to receive the results of the matrix-vector multiplications from all workers. This time is the same as $T_e$ of the previous implementation.

Since communication and computation proceed in parallel in this implementation, we take the maximum of the communication time, i.e. $T_b + T_e$, and the computation time, i.e. $T_c + T_d$. Therefore, the total execution time of our dynamic implementation, $T_p$, using p workstations, is given by:

$$T_p = T_a + \max\{T_b + T_e,\; T_c + T_d\} \tag{18}$$

3.4 Dynamic allocation of matrix pointers

For the analysis, we assume that the matrices A and B are stored on the local disks of the worker workstations. The basic idea of the fourth implementation is as follows. The master sends matrix pointers into the matrix B dynamically to the workers. Each worker reads the entire matrix A from its local disk. Each worker also reads a block of the matrix B from its local disk, starting from the pointer that it receives. Each worker then executes a sequential matrix-vector multiplication algorithm between the matrix A and the corresponding block of b columns. Finally, each worker sends back a block of b columns of the matrix C. The execution time of this dynamic implementation with matrix pointers, called MM4, can be broken up into five terms:

$T_a$: The I/O time to read the matrix A from the local disks of the worker workstations. The time $T_a$ is given by:

(19)

$T_b$: The communication time to send all matrix pointers to all workers.
This time is the same as $T_b$ of the previous implementation.

$T_c$: The average I/O time to read the matrix B, in blocks of b*n*sizeof(int) bytes, from the local disks of the worker workstations. This time is the same as $T_c$ of the previous implementation.

$T_d$: The average computation time across the cluster. This time is the same as $T_d$ of the previous implementation.

$T_e$: The communication time to receive the results of the matrix-vector multiplications from all workers. This time is the same as $T_e$ of the previous implementation.

Since communication and computation proceed in parallel in this implementation, we take the maximum of the communication time, i.e. $T_b + T_e$, and the computation time, i.e. $T_a + T_c + T_d$. Therefore, the total execution time of our dynamic implementation, $T_p$, using p workstations, is given by:

$$T_p = \max\{T_b + T_e,\; T_a + T_c + T_d\} \tag{20}$$

4 VALIDATION OF THE PERFORMANCE MODEL
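As a compact recap of the Section 3 model before it is compared with measurements, the sketch below combines the five time terms into a total execution time for each implementation. It rests on an assumption drawn from the wording around equations 14, 16, 18 and 20: the overlapped communication and computation groups contribute their maximum, and any term outside those groups is added serially. The `Terms` container, the helper `transfer_time`, the 4-byte integer size and all numeric values are hypothetical.

```python
from dataclasses import dataclass

SIZEOF_INT = 4  # assumption: 4-byte integers


@dataclass
class Terms:
    """The five time terms (seconds); their meaning differs per implementation."""
    ta: float
    tb: float
    tc: float
    td: float
    te: float


def transfer_time(n, speed, elem_size=SIZEOF_INT):
    """Time to read or send n*n integers at `speed` bytes per second."""
    return n * n * elem_size / speed


def total_mm1(t):
    # MM1: communication Tc + Te overlaps with computation Td.
    return t.ta + t.tb + max(t.tc + t.te, t.td)


def total_mm2(t):
    # MM2: communication Tb + Te overlaps with I/O plus computation Tc + Td.
    return t.ta + max(t.tb + t.te, t.tc + t.td)


# MM3 groups its terms in the same way as MM2.
total_mm3 = total_mm2


def total_mm4(t):
    # MM4: reading A locally (Ta) joins the computation group.
    return max(t.tb + t.te, t.ta + t.tc + t.td)


# Hypothetical term values, only to exercise the formulas.
t = Terms(ta=2.0, tb=1.0, tc=3.0, td=5.0, te=1.5)
print(total_mm1(t))  # 8.0
print(total_mm2(t))  # 10.0
print(total_mm4(t))  # 10.0
print(transfer_time(1024, 4 * 1024 * 1024))  # 1.0
```

Keeping the term values separate from the way they are combined makes it easy to plug in measured speeds later without touching the per-implementation formulas.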

In this section, we compare the analytical results with the experimental results for the four parallel implementations on a cluster of homogeneous workstations connected with 100 Mb/s Fast Ethernet network. The homogeneous cluster consists of 32 Pentium workstations based on 200 MHz with 64 MB RAM. During all experiments, the cluster was dedicated. The MPI implementation used on the network is MPICH version 1.2. The performance estimated results for the four implementations were obtained by the equations 14, 16, 18 and 20, respectively. In order to get these estimated results, we must to determine the values of the power weights and the values of the speeds SI/O and Scomp of the workstation. The average computing power weights of the workstation (Pentium 200 MHz) is 1 for the four implementations. These weights based on formula 1 were measured when the matrix size does not exceed the memory bound of any machine in the system in order to keep the power weights constant. Further, the average speeds SI/O and Scomp of the workstation for all block sizes (b=1, 2, 4 and 8), executing the matrix multiplication are critical parameters for predicting the CPU demand times of computational segments on other workstations. The speeds were measured for different matrix sizes and averaged by formula 2 as follows, SI/O = 147,12526 ints/sec and Scomp = 9116288,837 ints/sec for the four implementations. Finally, the communication speed was measured for different matrix sizes and block sizes as

Theodoros Typou, Vasilis Stefanidis, Panagiotis Michailidis and Konstantinos Margaritis.

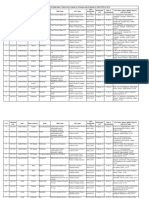

follows, Scomm = 47972,78 ints/sec. Figure 1 presents for some values n, b and p the speedups obtained by experiments and those by the equation 16 for the MM2 implementation on a cluster of homogeneous workstations. Similarly, Figures 2, and 3 demonstrate the speedups obtained by experiments and those by the equations 18 and 20 for the MM3 and MM4 implementations, respectively. It must note that the MM1 implementation is not executed on cluster of workstations because it gives poor results and the performance behavior is similar to the performance of the MV1 implementation according to the paper [13]. Finally, Figures 1 and 2 are not displayed the speedup curves for the block sizes 1 and 2 because they occur too low performance. We observe that the estimated results for the three implementations confirm well the experimental measurements. Therefore, our performance model of the equations 16, 18, and 20 validate well the computational behaviour of the experimental results. Finally, we observe that the maximal differences between theoretical and experimental values are less than 10% and most of them are less than 5%. Consequently, the model for the MM2, MM3 and MM4 implementations is accuracy since the predictions need not be quantitatively exact, prediction errors of 10-20% are acceptable. 5 CONCLUSIONS

In this paper we implemented and analyzed parallel matrix multiplication implementations on a cluster of workstations, and we modeled these implementations using the performance model. It has been shown that the performance prediction model agrees well with the experimental measurements. Finally, the four proposed matrix multiplication implementations could be executed on a cluster of heterogeneous workstations in the future. It can be argued that the performance results of the four implementations on a cluster of heterogeneous workstations would be similar to those of the matrix-vector implementations presented in our paper [13].

REFERENCES

[1] Anderson, E., Bai, Z., Bischof, C., Demmel, J., Dongarra, J., Du Croz, J., Greenbaum, A., Hammarling, S., McKenney, A., Ostrouchov, S., Sorensen, D. (1992), LAPACK Users' Guide, SIAM, Philadelphia.
[2] Beaumont, O., Boudet, V., Rastello, F., Robert, Y. (2000), Matrix-Matrix Multiplication on Heterogeneous Platforms, Technical Report RR-2000-02, LIP, ENS Lyon.
[3] Beaumont, O., Legrand, A., Robert, Y. (2001), The master-slave paradigm with heterogeneous processors, Technical Report RR-2001-13, LIP, ENS Lyon.
[4] Cannon, L.E. (1969), A Cellular Computer to Implement the Kalman Filter Algorithm, Ph.D. Thesis, Montana State University, Bozeman, Montana.
[5] Choi, J., Dongarra, J., Pozo, R., Walker, D.W. (1992), ScaLAPACK: A scalable linear algebra library for distributed memory concurrent computers, Proceedings of the Fourth Symposium on the Frontiers of Massively Parallel Computation, McLean, Virginia, October 19-21, pp. 120-127.
[6] Choi, J., Dongarra, J., Walker, D.W. (1992), The design of scalable software libraries for distributed memory concurrent computers, in J. Dongarra, B. Tourancheau (Eds.), Environments and Tools for Parallel Scientific Computing, Elsevier, Amsterdam.
[7] Dongarra, J., Moler, C.B., Bunch, J.R., Stewart, G.W. (1979), LINPACK User's Guide, SIAM, Philadelphia.
[8] Fox, G., Johnson, M., Lyzenga, G., Otto, S., Salmon, J., Walker, D. (1988), Solving Problems on Concurrent Processors, Vol. I, Prentice-Hall, Englewood Cliffs, NJ.
[9] Kumar, V., Grama, A., Gupta, A., Karypis, G. (1994), Introduction to Parallel Computing, The Benjamin/Cummings Publishing Company.
[10] Pacheco, P. (1997), Parallel Programming with MPI, Morgan Kaufmann, San Francisco, CA.
[11] Snir, M., Otto, S., Huss-Lederman, S., Walker, D.W., Dongarra, J. (1996), MPI: The Complete Reference, The MIT Press, Cambridge, Massachusetts.
[12] Tinetti, F., Quijano, A., Giusti, A., Luque, E. (2001), Heterogeneous networks of workstations and the parallel matrix multiplication, Proceedings of the Euro PVM/MPI 2001, Springer-Verlag, Berlin, pp. 296-303.
[13] Typou, T., Stefanidis, V., Michailidis, P., Margaritis, K. (2004), Matrix Vector multiplication on a cluster of workstations, to appear in Proceedings of the First International Conference From Scientific Computing to Computational Engineering, Athens, Greece, September 8-10.
[14] Wilkinson, B., Allen, M. (1999), Parallel Programming: Techniques and Applications Using Networked Workstations and Parallel Computers, Prentice-Hall, Inc.
[15] Yan, Y., Zhang, X., Song, Y. (1996), An effective and practical performance prediction model for parallel computing on non-dedicated heterogeneous NOW, Journal of Parallel and Distributed Computing, Vol. 38, pp. 63-80.


Figure 1: The experimental (left) and theoretical (right) speedup as a function of the number of workstations on a homogeneous cluster for the MM2 implementation. [Plots not reproduced. Panels: Dimension 256, 512, 1024 and 2048; x-axis: Processors (2, 4, 6, 8, 12, 16, 24, 32); y-axis: Speedup; curves: block 1, block 2, block 4, block 8.]


Figure 2: The experimental (left) and theoretical (right) speedup as a function of the number of workstations on a homogeneous cluster for the MM3 implementation. [Plots not reproduced. Panels: Dimension 256, 512, 1024 and 2048; x-axis: Processors (2, 4, 6, 8, 12, 16, 24, 32); y-axis: Speedup; curves: block 1, block 2, block 4, block 8.]


Figure 3: The experimental (left) and theoretical (right) speedup as a function of the number of workstations on a homogeneous cluster for the MM4 implementation. [Plots not reproduced. Panels: Dimension 256, 512, 1024 and 2048; x-axis: Processors (2, 4, 6, 8, 12, 16, 24, 32); y-axis: Speedup; curves: block 1, block 2, block 4, block 8.]


- Final Project InstructionsDocument2 pagesFinal Project InstructionsjuliusNo ratings yet

- Radial Basis Function Neural NetworksDocument17 pagesRadial Basis Function Neural Networkso iNo ratings yet

- Scheduling Multithread Computations by Stealing WorkDocument28 pagesScheduling Multithread Computations by Stealing Workwmeister31No ratings yet

- A Novel Genetic Algorithm For Static Task Scheduling in Distributed SystemsDocument6 pagesA Novel Genetic Algorithm For Static Task Scheduling in Distributed SystemsVijaya LakshmiNo ratings yet

- A High-Performance Area-Efficient Multifunction InterpolatorDocument8 pagesA High-Performance Area-Efficient Multifunction InterpolatorGigino GigettoNo ratings yet

- Machine Learning AssignmentDocument8 pagesMachine Learning AssignmentJoshuaDownesNo ratings yet

- Parallel K-Means Algorithm For SharedDocument9 pagesParallel K-Means Algorithm For SharedMario CarballoNo ratings yet

- ++probleme TotDocument22 pages++probleme TotRochelle ByersNo ratings yet

- Fpga Implementation of A Multilayer Perceptron Neural Network Using VHDLDocument4 pagesFpga Implementation of A Multilayer Perceptron Neural Network Using VHDLminoramixNo ratings yet

- Performance Analysis of Partition Algorithms For Parallel Solution of Nonlinear Systems of EquationsDocument4 pagesPerformance Analysis of Partition Algorithms For Parallel Solution of Nonlinear Systems of EquationsBlueOneGaussNo ratings yet

- Research of Cloud Computing Task Scheduling Algorithm Based On Improved Genetic AlgorithmDocument4 pagesResearch of Cloud Computing Task Scheduling Algorithm Based On Improved Genetic Algorithmaksagar22No ratings yet

- Performance Evaluation of Artificial Neural Networks For Spatial Data AnalysisDocument15 pagesPerformance Evaluation of Artificial Neural Networks For Spatial Data AnalysistalktokammeshNo ratings yet

- Matrix-Matrix MultiplicationDocument8 pagesMatrix-Matrix MultiplicationJulian ValenciaNo ratings yet

- Multithreaded Algorithms For The Fast Fourier TransformDocument10 pagesMultithreaded Algorithms For The Fast Fourier Transformiv727No ratings yet

- PCD Lab Manual VtuDocument92 pagesPCD Lab Manual VtusankrajegowdaNo ratings yet

- Implementation of Dynamic Level Scheduling Algorithm Using Genetic OperatorsDocument5 pagesImplementation of Dynamic Level Scheduling Algorithm Using Genetic OperatorsInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Aravind PDFDocument29 pagesAravind PDFAnonymous bzQwzmVvNo ratings yet

- Modeling of Mixed-Model Assembly System Based On Agent Oriented Petri NetDocument7 pagesModeling of Mixed-Model Assembly System Based On Agent Oriented Petri NetAvinash KumarNo ratings yet

- Electromagnetic Transients Simulation As An Objective Function Evaluator For Optimization of Power System PerformanceDocument6 pagesElectromagnetic Transients Simulation As An Objective Function Evaluator For Optimization of Power System PerformanceAhmed HussainNo ratings yet

- CS 807 Task: C: A GPU-based Parallel Ant Colony Algorithm For Scientific Workflow SchedulingDocument10 pagesCS 807 Task: C: A GPU-based Parallel Ant Colony Algorithm For Scientific Workflow SchedulingSavan SinghNo ratings yet

- DIP Lab Manual No 02Document24 pagesDIP Lab Manual No 02myfirstNo ratings yet

- A Comparison of Parallel Sorting Algorithms On Different ArchitecturesDocument18 pagesA Comparison of Parallel Sorting Algorithms On Different ArchitecturesParikshit AhirNo ratings yet
