Professional Documents

Culture Documents

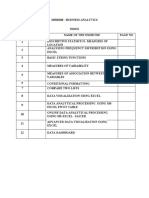

Data Mining

Uploaded by

zhouyun521Original Description:

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Data Mining

Uploaded by

zhouyun521Copyright:

Available Formats

De Villiers Walton 1 SAP Data Mining

SAP Data Mining

How to get more out of your existing data

Maxim Sychevskiy, Daneel de Villiers

Background

In the last 20 years, the cost of computer hardware has decreased dramatically whilst its capability to process large volumes of data has increased exponentially. This has resulted in a rapid increase in the volume of master and transactional data collected and stored in digital format; some claim that the quantity of data held worldwide doubles approximately once a year.

This growth does not necessarily provide companies with the information required

to react dynamically in an increasingly competitive business environment.

Companies are required to rapidly organise and sift through their

terabytes of data to obtain insights into their customers and markets,

and to guide their future marketing, investment, and management

strategies. Various toolsets are deployed in this quest.

Data Warehouses are software tools designed for the collection, manipulation, display and utilisation of data to support executives and managers when analysing large volumes of data, so that they can respond faster to market changes and improve the quality of their decisions. However, storing and reporting historical data from a data warehouse has limited value and is often dependent on the knowledge and experience applied by the data consumer.

Companies therefore need more flexible and informative methods to manipulate and

display their data in order to improve their working knowledge of their customers

and markets and (most importantly) to predict the future.

This has led to Data Mining, a powerful new technology that enables companies to focus on the most important aspects of the intelligence hidden within the data in their domain. Various time-tested tools, techniques and algorithms (proven in real-world applications) are deployed to generate predictive result sets that drive business strategy and decisions. This, in turn, improves business performance by allowing customers to focus on how to apply their limited resources in order to derive maximum business benefit.

SAP, through its Data Mining tool (delivered as part of the SAP Business Intelligence platform), provides a range of sophisticated data analysis tools and methods to discover patterns and relationships in large datasets. These tools include statistical modelling, mathematical algorithms and machine learning methods (algorithms which improve their performance automatically through experience).

As a result, the SAP BI solution consists of much more than the simple collection, management, and display

of historical data. It is also a powerful analytical and predictive modelling tool.

This whitepaper explores the following aspects related to Data Mining:

The Evolution of Data Analysis

How does Data Mining work?

Components of SAP Data Mining

Integration with SAP Data Warehousing solutions

Consideration of Data Mining solutions and how to avoid oversights during implementation

[Figure: The evolution of data analysis. Axis: Cost of Data Processing Technology (Cheap, Expensive). Stages: Data Collection (1960s), Data Access (1980s), Data Warehousing / Decision Support (1990s), Data Mining. Example questions: "What was my total revenue in the last 2 years?"; "I would like to look at the sales for product X for country Y and compare YTD this year to YTD last year."; "I want to predict my revenues for the next year and understand where the sales is coming from (both customers and products)."]

All content 2007 De Villiers Walton 2 SAP Data Mining

[Figure: Data Mining methods. Unsupervised Learning (Informative): Clustering, ABC Classification, Association Analysis, Weighted Score Tables. Supervised Learning (Predictive): Decision Trees, Regression Analysis (Linear Regression, Non-linear Regression).]

The Evolution of Data Analysis

Traditionally, structured query and statistical analysis software has been used to describe and extract information from within a Data Warehouse. The analyst forms a theory about a relationship and verifies or discounts it with a series of queries against the data. This method of data analysis is called the verification-based approach. For example, an analyst might think that people with low income and high debt are a high credit risk. By querying the payment history data in the Data Warehouse, he or she can confirm or reject this theory.

The usefulness of this approach can be limited by the creativity or experience of the analyst in developing

the various theories, as well as the capability of the software being used to analyse the findings. In contrast

with the traditional verification-based data analysis approach, Data Mining utilises a discovery approach in

which algorithms are used to examine several multidimensional data relationships simultaneously and

identify those that are unique or frequently represented.

Using this type of analytical toolset, an analyst might discover a pattern that he or she did not think to consider and (using the example above) find that people who (1) are parents, (2) are homeowners, (3) are over 30 years of age, (4) are on low incomes and (5) have high debt are actually low credit risks.

How Does Data Mining Work?

How is Data Mining able to tell you important things that you didn't know or predict the future (within a range

of probabilities)?

Data Mining is performed through an Analysis Process. An Analysis Process builds a model (a set of rules and mathematical relationships) from historical data describing situations where the answer is known, and then applies the model to other situations where the answer is unknown. Various pre-defined algorithms (see next section) and data manipulation tools (aggregations, filters, joins, sorts, etc.) are used to create the Analysis Process.

Information about a variety of situations where the result is known is used to train the Data Mining model (a process in which the Data Mining software examines the data to find the characteristics that are appropriate to be fed back into the model). Once the model is trained, it can be used in similar situations where the answer is unknown.

There is nothing new about many of these modelling techniques, but it is only recently that the processing

power of modern servers and PCs, together with the capacity to store huge amounts of historical data

cheaply, have been available to a wide range of companies.

Data Mining models available in SAP BW allow you to create models according to your requirements and then use these models to draw information from your SAP BW data to support decision-making.

Components of SAP Data Mining Solutions

There are two main types of Data Mining models available in SAP BW: Informative Models and Predictive

Models.

Informative Models

Informative models do not predict a target value, but focus on the

intrinsic structure, relations, and connectivity of the data. They are

also referred to as Unsupervised Learning models.

SAP Data Mining supports the following unsupervised functions:

Clustering

Clustering identifies clusters of

data objects embedded in the

transactional data. A cluster is a

collection of data objects that are similar to one another.

A good clustering method produces high-quality clusters that ensure

the inter-cluster similarity is low and the intra-cluster similarity is high.

In other words, members of a cluster are more like each other than

they are like members of another cluster.

[Figure: Customer clusters plotted by Profit (vertical axis, up to High) against Growth Potential (horizontal axis, Low to High).]

In cases where there are no obvious natural groupings, custom Data Mining algorithms can be used to find

natural groupings.

In the diagram, we have four groups of customers based on two separate criteria: profit and growth potential. The group in the top-left quadrant is the so-called cash-cows, who have no growth potential but are making a significant contribution to the profits of the company. Below the cash-cows are the dogs: those customers that are not going to generate much in terms of either profit or growth. The two clusters to the right are of much more interest, and most of the marketing effort will go into retaining and developing these clients.
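
The grouping described above can be sketched with a minimal k-means routine. This is an illustration only, not the algorithm SAP BW uses: the customer coordinates (growth potential, profit, both on an invented 0 to 10 scale), the starting centroids and the function name `k_means` are all assumptions made for the example.

```python
from math import dist

def k_means(points, centroids, max_iterations=100):
    """Assign each point to its nearest centroid, move each centroid to the
    mean of its members, and repeat until the assignments stop changing."""
    centroids = list(centroids)
    assignment = None
    for _ in range(max_iterations):
        new_assignment = [
            min(range(len(centroids)), key=lambda i: dist(p, centroids[i]))
            for p in points
        ]
        if new_assignment == assignment:
            break  # converged: no customer changed cluster
        assignment = new_assignment
        for i in range(len(centroids)):
            members = [p for p, a in zip(points, assignment) if a == i]
            if members:  # leave an empty cluster's centroid where it is
                centroids[i] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return assignment, centroids

# Hypothetical customers as (growth potential, profit).
customers = [(1, 9), (2, 8), (1, 2), (2, 1), (8, 9), (9, 8), (8, 2), (9, 1)]
# One assumed starting centroid per quadrant of the diagram.
start = [(0, 10), (0, 0), (10, 10), (10, 0)]

assignment, centroids = k_means(customers, start)
print(assignment)  # [0, 0, 1, 1, 2, 2, 3, 3]
```

Cluster 0 corresponds to the cash-cows (low growth potential, high profit) and cluster 1 to the dogs; members of each cluster sit closer to their own centroid than to any other, which is the "high intra-cluster similarity" property described earlier.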

ABC Classification

Prioritisation is often based on the Pareto principle, which states that (for example) 80% of profits are generally generated by 20% of your customers (this principle also applies, in different contexts, to other master data objects such as employees and suppliers). As a result, 20% of your customers should receive 80% of your attention, thereby changing your business priorities.

The ABC-classification model is a frequently used way of classifying a range of items, such as finished products or customers, into three categories:

A = Outstanding importance;

B = Average importance; and

C = Relatively unimportant.

Each category can, and sometimes should, be handled in a different way, with

more attention being devoted to category A, and less to B and C.

Based on Pareto's law, the ABC-classification drives us to manage A items more carefully. In an inventory context, this means that these items should be ordered more often, counted more often, located closer to the door, and forecasted more carefully. Conversely, C items are not very important from an investment point of view, and therefore should be ordered rarely and not counted often. Some companies use other methods for defining the ABC classification, such as the stock-out cost or the production criticality of the item.
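
As a rough sketch of how such a classification might be computed, the example below ranks customers by revenue and assigns classes by cumulative share. The cut-offs (80% for A, 95% for B), the revenue figures and the name `abc_classify` are assumptions chosen for illustration; the whitepaper does not prescribe specific thresholds.

```python
def abc_classify(values, a_cutoff=0.80, b_cutoff=0.95):
    """Rank items by value (largest first) and assign A, B or C according to
    the cumulative share of the total reached once the item is included."""
    total = sum(values.values())
    ranked = sorted(values.items(), key=lambda item: item[1], reverse=True)
    classes = {}
    cumulative = 0
    for name, value in ranked:
        cumulative += value
        share = cumulative / total
        if share <= a_cutoff:
            classes[name] = "A"
        elif share <= b_cutoff:
            classes[name] = "B"
        else:
            classes[name] = "C"
    return classes

# Hypothetical annual revenue per customer.
revenue = {"C1": 500, "C2": 250, "C3": 120, "C4": 60, "C5": 40, "C6": 30}
classes = abc_classify(revenue)
print(classes)
# {'C1': 'A', 'C2': 'A', 'C3': 'B', 'C4': 'B', 'C5': 'C', 'C6': 'C'}
```

Here two of the six customers account for 75% of revenue and fall into class A. The same function could be fed stock-out cost or any other measure instead of revenue.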

Association Analysis

Association Analysis is a modelling technique based upon the theory that if you buy a certain group of items,

you are more (or less) likely to buy another group of items. For example, if you are in an English pub and you

buy a pint of beer, but you do not buy a bar meal, then you are more likely to buy crisps at the same time

than somebody who bought wine (who is more likely to buy olives or pistachios) etc.

In retailing, most purchases are made on impulse. Association Analysis gives clues as to what a customer

might have bought if the idea had occurred to them and drives companies to stimulate these thoughts (trays

with sweets at till-points that drive many parents to distraction).

The probability that a customer will buy beer without a bar meal (i.e. that the precursor is true) is referred to as the SUPPORT for the rule. The conditional probability that a customer will purchase crisps, given that the precursor is true, is referred to as the CONFIDENCE.
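
The two measures can be worked through on a handful of invented pub orders. The sketch below follows the definitions given above (support as the share of transactions in which the precursor holds); note that many texts instead define support as the share of transactions containing both the precursor and the consequent, so that figure is returned as well. The basket data and the function names are hypothetical.

```python
def rule_metrics(baskets, precursor, consequent):
    """Return (support, confidence, joint_support) for the rule
    'precursor holds -> consequent item is bought'."""
    matching = [basket for basket in baskets if precursor(basket)]
    with_consequent = [basket for basket in matching if consequent in basket]
    support = len(matching) / len(baskets)  # definition used in this paper
    confidence = len(with_consequent) / len(matching) if matching else 0.0
    joint_support = len(with_consequent) / len(baskets)  # common alternative
    return support, confidence, joint_support

def beer_without_meal(basket):
    return "beer" in basket and "bar meal" not in basket

# Hypothetical transactions, one set of items per customer order.
baskets = [
    {"beer", "crisps"},
    {"beer", "crisps"},
    {"beer"},
    {"beer", "bar meal"},
    {"wine", "olives"},
    {"wine", "pistachios"},
    {"beer", "crisps", "bar meal"},
    {"wine", "crisps"},
]

support, confidence, joint_support = rule_metrics(baskets, beer_without_meal, "crisps")
print(support)        # 0.375  (3 of 8 orders are beer without a bar meal)
print(joint_support)  # 0.25   (2 of 8 orders are beer, no meal, and crisps)
```

The confidence here is two in three: of the three orders where the precursor holds, two include crisps.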

[Figure: Association Analysis process. (1) Select transactional data for Association Analysis; (2) Perform the Association Analysis; (3) Analyse and evaluate results; (4) Select rules with high Confidence and Support; (5) Use these rules as cross-selling opportunities.]

Association Analysis can be used in deciding the location and promotion of goods inside a store. But in a

different scenario, we can compare results between different stores, between customers in different

demographic groups, between different days of the week, different seasons of the year, etc.

If we observe that a rule holds in one store, but not in any other (or does not hold in one store, but holds in

all others), then we know that there is something interesting about that particular store. Perhaps the

customers are different, or perhaps it has organized its displays in a different and more lucrative way.

Investigating such differences may yield useful insights which will improve company sales.

Although this type of Market Basket Analysis conjures up pictures of shopping carts and shoppers, it is

important to realise that there are many other areas in which it can be applied. These include:

Analysis of credit card purchases;

Analysis of telephone calling patterns;

Identification of fraudulent medical insurance claims (cases where common rules are broken); or

Analysis of telecom service purchases.

Weighted Score Tables

Scoring based on Weighted Tables (also known as Grid Analysis or Pugh Matrix Analysis) is a useful decision-making technique. Decision matrices are most effective in situations where there are a number of alternatives and many factors to take into account. The methodology is frequently used in engineering, for example to make design decisions. However, it can also be used to rank investment, vendor or product options, or any other set of multidimensional entities.

A Weighted Score Table involves establishing a set of weighted criteria against which each potential option is decomposed and scored; the weighted scores are then summed to give a total score on which the options can be ranked. The advantage of this approach to decision making is that subjective opinions about one alternative versus another can be evaluated in a more objective manner.

Another advantage of this method is that sensitivity studies can be

performed. An example of this might be to see how much opinion

would have to change in order for a lower ranked alternative to outrank a competing alternative.
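
A small worked example may make the scoring and the sensitivity study concrete. The criteria, weights and vendor scores below are invented for illustration, as are the function names.

```python
def total_scores(weights, scores):
    """Multiply each option's score by the criterion weight and sum the results."""
    return {
        option: sum(weights[criterion] * option_scores[criterion] for criterion in weights)
        for option, option_scores in scores.items()
    }

def rank(totals):
    """Return the option names ordered from highest to lowest total score."""
    return sorted(totals, key=totals.get, reverse=True)

# Hypothetical criteria weights and per-vendor scores (1 = poor, 5 = excellent).
weights = {"cost": 5, "quality": 3, "delivery": 2}
scores = {
    "Vendor A": {"cost": 4, "quality": 2, "delivery": 3},
    "Vendor B": {"cost": 2, "quality": 5, "delivery": 4},
}

baseline = total_scores(weights, scores)
print(baseline)        # {'Vendor A': 32, 'Vendor B': 33}
print(rank(baseline))  # ['Vendor B', 'Vendor A']

# Sensitivity study: how does the ranking react if cost matters slightly more?
adjusted = total_scores({**weights, "cost": 6}, scores)
print(adjusted)        # {'Vendor A': 36, 'Vendor B': 35}
print(rank(adjusted))  # ['Vendor A', 'Vendor B']
```

In this example a one-point change in the weight given to cost is enough to reverse the ranking, which suggests the decision is finely balanced and the weights deserve scrutiny.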

You can use Weighted Score Tables to rate proposed product design changes or suggested improvements against a baseline, using customer requirements or organizational goals as the criteria for comparison.

The Weighted Score Tables method could help you to determine:

Which product design proposal best matches customer requirements and other organizational goals;

How alternative design proposals compare to the current (or preferred) design;

Which improvement strategy best matches organizational goals; or

How alternative improvement proposals compare to the suggested improvement.

Predictive Models

Predictive modelling, in contrast, is similar to the human learning experience in that it uses observations to form a model of the relevant rules. This approach uses generalisations of the real world and the ability to fit new data into a general framework. Predictive modelling can be used to analyse existing data in a Data Warehouse to determine some essential dependencies within the data set.

Predictive Models are developed using a supervised learning approach which

has two distinct phases:

Training builds a model using a large sample of historical data called a

training set; and

Testing involves trying out the model on new, previously unseen data

called a test set. This determines the accuracy of the model and

physical performance characteristics.
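
The two phases can be sketched with a deliberately simple model: a single income threshold that predicts whether a customer responds to a campaign. The model type, the data (income in thousands, responded yes/no) and the function names are assumptions for illustration; real Data Mining models are considerably richer.

```python
def predict(threshold, income):
    """Predict a response when income is at or above the threshold."""
    return income >= threshold

def accuracy(threshold, records):
    """Share of records for which the prediction matches the known outcome."""
    correct = sum(1 for income, responded in records
                  if predict(threshold, income) == responded)
    return correct / len(records)

def train_threshold(training_set):
    """Training: choose the observed income that best separates the training records."""
    candidates = sorted({income for income, _ in training_set})
    return max(candidates, key=lambda t: accuracy(t, training_set))

# Hypothetical historical data where the outcome is already known.
training_set = [(20, False), (25, False), (30, False), (35, False),
                (40, True), (45, True), (50, True), (60, True)]
# Hypothetical previously unseen data, held back for testing.
test_set = [(22, False), (38, True), (55, True), (42, True)]

threshold = train_threshold(training_set)
print(threshold)                          # 40
print(accuracy(threshold, training_set))  # 1.0
print(accuracy(threshold, test_set))      # 0.75
```

The model fits the training set perfectly but misclassifies one of the four test records, which is why accuracy should be judged on the test set rather than on the data used for training.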

SAP Data Mining supports the following predictive models:

Decision Trees

Decision trees are one of the most popular methods of predictive modelling, since they provide interpretable rules and logic statements that enable more intelligent decision making. The predictive model is represented as a flow diagram.

[Figure: Supervised learning flow diagram with the elements Historical Data, Training, Model, New Data and Result.]

Decision Trees represent combinations of simple rules. By following the Tree, the rules can be interpreted to

understand why a record is classified in a certain way. These rules can then be used to retrieve records

falling into a certain category. These records give rise to a known behaviour for that category, which can

subsequently be used to predict behaviour of a new data record.

Decision Trees can be used to classify existing customer records into customer segments that behave in a particular manner. The process starts with data related to customers whose behaviour is already known (for example, customers who have responded to a promotional campaign and those who have not). The Decision Tree developed from this data gives us the splitting attributes and criteria that divide customers into two categories: those that will buy our products and those that will not.

Once the rules (that determine the classes to which different customers belong) are known, they can be used to classify existing customers and predict behaviour in the future. For example, if we know from historical analysis that one particular product is of interest to young customers or customers who work as engineers with an annual salary above 50,000 (see diagram), then a customer whose record shows attributes similar to these is more likely to buy this product. This then becomes a powerful predictive tool that marketers can use to plan campaign activities.
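
The example rules above can be written out as a small tree of nested conditions. This is one possible reading of the example, not a model produced by SAP BW: the age limit that defines a "young" customer is not stated in the text, so `young_below` is a hypothetical parameter, and the function name is invented.

```python
def will_buy(age, occupation, salary, young_below=35):
    """Follow the tree from the root and return (prediction, rule that fired).

    young_below is an assumed cut-off for 'young' customers.
    """
    if age < young_below:
        return True, "young customer"
    if salary > 50_000:
        if occupation == "Engineer":
            return True, "engineer with salary above 50,000"
        return False, "salary above 50,000 but not an engineer"
    return False, "not young and salary of 50,000 or below"

print(will_buy(28, "Accountant", 30_000))  # (True, 'young customer')
print(will_buy(45, "Engineer", 60_000))    # (True, 'engineer with salary above 50,000')
print(will_buy(45, "Engineer", 40_000))    # (False, 'not young and salary of 50,000 or below')
```

Returning the rule alongside the prediction reflects the point made earlier: by following the tree, one can see why a record was classified in a certain way.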

Regression Analysis / Forecasting

Regression Analysis is a predictive statistical technique used to establish the relationship between a dependent variable (such as the sales of a company) and one or more independent variables (such as family formations, Gross Domestic Product, per capita income, and other economic indicators). Where there has historically been a significant relationship between these variables, the future value of the dependent variable can be forecasted.

Regression analysis attempts to measure the degree of correlation between the dependent and independent variables, thereby establishing the latter's predictive value. For example, a manufacturer of restaurant supplies might want to determine the relationship between sales and average household income as part of a sales forecast. Using a scatter graph, it might plot the historical sales for the last ten years on the Y axis and the historical annual household income for the same period on the X axis.

A line fitted through the dots, called the regression line, would reveal the degree of correlation between the two factors by showing the amount of unexplained variation. This is represented by the dots falling off the line.

Thus, if the regression line passes through all the dots, a perfect relationship exists between restaurant food sales and household income, meaning that one could be predicted on the basis of the other.

A large number of points scattered far from the regression line would, on the other hand, indicate a less significant relationship. A high degree of unexplained variation therefore means that there is no meaningful relationship and that household income has no predictive value in terms of restaurant food sales. Regression analysis evaluates this variation, allowing the best predictive relationships to be used.
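
The idea can be illustrated with an ordinary least-squares fit of a straight line, together with the coefficient of determination (R squared) as a measure of how much variation the line explains. The figures below are invented and the function names are assumptions; this is a minimal sketch of simple linear regression, not the SAP BW implementation.

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def r_squared(xs, ys, slope, intercept):
    """Share of the variation in ys explained by the line (1.0 = every dot on the line)."""
    mean_y = sum(ys) / len(ys)
    unexplained = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    total = sum((y - mean_y) ** 2 for y in ys)
    return 1 - unexplained / total

# Hypothetical history: average household income and restaurant food sales.
household_income = [30, 35, 40, 45, 50]
food_sales = [110, 118, 131, 139, 152]

slope, intercept = fit_line(household_income, food_sales)
fit_quality = r_squared(household_income, food_sales, slope, intercept)
forecast = slope * 55 + intercept  # expected sales if income rises to 55

print(round(slope, 2), round(intercept, 2))  # 2.1 46.0
print(round(fit_quality, 3))                 # 0.993
print(round(forecast, 1))                    # 161.5
```

An R squared close to 1 means little unexplained variation, so household income would be a useful predictor in this invented data set; a value near 0 would indicate the opposite.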

Integration with SAP Data Warehouse solutions

The Analysis Process Designer (APD) is the application environment

for the SAP Data Mining solution. From SAP BW Release 3.5

onwards, all Data Mining functionality is fully integrated into the APD.

APD uses a graphical drag-and-drop interface to create

sophisticated processes that prepare, transform, mine, display, and

store data. It can provide you with quick answers to questions that

would otherwise require more time-consuming BW configuration (by

modelling a proliferation of data marts).

The Analysis Process Designer enables the analyst to define complete processes which can disclose relationships within your data. An analysis process collects data from InfoProviders, queries, or other BI objects, and then dynamically transforms this data (combining multiple complex steps), before writing the new information back either into BI objects (such as InfoObjects or ODS objects) or to an SAP CRM system. The transformations can be anything from simple filter, aggregation, or projection operations through to executing complex Data Mining models. The results of an analysis process can then be used to drive decision making and application processes, and thus become an invaluable strategic, tactical, and operational tool for business development.

[Figure (Decision Trees): a tree that splits customers on Age, Income and Occupation (Accountant or Engineer) into the outcomes Buy and Won't Buy.]

[Figure (Regression Analysis): scatter graph of Food Sales (vertical axis, up to High) against Household Income (horizontal axis, Low to High).]

Considerations

SAP's Data Mining environment represents a significant advance in the type of analytical tools currently available. However, Data Mining (on its own) is not a magic solution to all your business woes!

Although Data Mining can help reveal patterns and relationships, it does not tell the user the value or significance of these patterns. This judgement must be made by the user. Similarly, the validity of the patterns discovered depends on how well they compare to the real-life environment.

For example, in order to assess the validity of a Data Mining application designed to identify future demand for a new organic food product, the analyst may test the Data Mining model using data that includes information about known historical demand for similar products. However, whilst historical data often does give some guidance as to the accuracy of the predictive model, external parameters may have changed significantly, invalidating any test.

Another consideration is that while Data Mining can identify connections between behaviours and / or variables, it does not necessarily identify causal relationships. For example, an application may identify that a pattern of behaviour (such as the propensity to purchase airline tickets shortly before the flight is scheduled to depart) is related to other characteristics (such as income, level of education, and Internet use). However, that does not necessarily indicate that the ticket purchasing behaviour is caused by one or more of these variables. In fact, the individual's behaviour could be affected by some additional variable(s) such as occupation (the need to make trips on short notice), family status (a sick relative needing care), or a hobby (taking advantage of last minute discounts to visit new destinations).

Last, but not least, we have to mention data quality. Data quality is a complex issue that represents one of

the biggest challenges for Data Mining. Data quality refers to the accuracy and completeness of the data.

Data quality can also be affected by the structure and consistency of the data being analysed. The presence

of duplicate records, the lack of data standards, the timeliness of updates, and human error can significantly

impact the efficiency of the more complex Data Mining techniques, which are sensitive to small differences

that may exist in the data. To improve data quality, it is sometimes necessary to clean the data, which can

involve the removal of duplicate records and standardising data formats.

Summary

Data Mining involves the extraction of hidden predictive information from large databases. This is a new and powerful technology with great potential to help companies focus on the most important information in their data domain. Data Mining tools help to predict future trends and behaviours, allowing businesses to make proactive, knowledge-driven decisions. The automated, prospective analyses offered by Data Mining move well beyond the analyses provided by decision support systems in the past.

Data mining is important in large data repositories as it locates information that would otherwise have been

hidden. A simple metaphor would be finding two needles in a haystack that match. The haystack is the

database, the individual lengths of the hay represent your data fields, and the needles represent data fields

with a relationship worth more to you than all the hay put together.

SAP Data Mining solutions have been an excellent addition to the SAP Business Intelligence platform. If your business already has SAP BW and you find that the pre-configured queries and reports it provides do not meet all your decision-making requirements, SAP Data Mining could help you to develop custom Data Mining applications and to drive your business forward.

De Villiers Walton (DVW) is a

specialist SAP

implementation services

company operating in Europe

and North America. We

design, build and implement

solutions based on SAP

Customer Relationship

Management (CRM) and SAP

NetWeaver (BI, XI, MDM and

CAF).

We provide high quality SAP

Customer Relationship

Management (SAP CRM) and

SAP NetWeaver services.

Over the years, our intense

focus has allowed us to

develop significant expertise

in these areas, enabling us to

offer our clients practical and

cost-effective support across

a wide range of requirements.

Our goal is to deliver

consistently high quality

value-added services. We

achieve this by recruiting and

retaining highly professional,

capable and enthusiastic

people. Our personnel

combine their technology

skills with a business maturity

gained from years of practical

experience.

We work in partnership with

our customers to understand

their requirements and

business intimately. The

consultant who leads the

proposal is the consultant

who will lead the assignment.

So we don't just sell high

calibre consultants, you get to

work with them as well.

To find out more or contact us

please refer to our website at

http://www.dvwsolutions.com.
