Binary Transmission System Probabilities

Uploaded by Jon Ahn


2102401 Random Processes for EE

Solution to Quiz 1, 2011

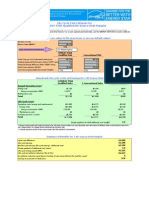

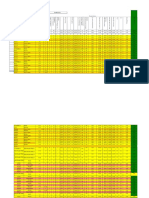

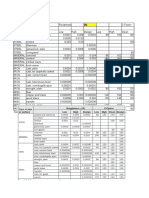

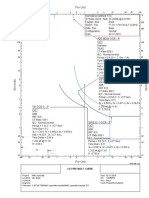

A binary transmission system transmits a signal $X$ ($-1$ to send a 0 bit; $+1$ to send a 1 bit). The received signal is $Y = X + N$, where the noise $N$ has a zero-mean Gaussian distribution with unit variance. Assume that 1 bits occur with probability $p$ and 0 bits occur with probability $1 - p$.

1. (4 points.) Find the conditional pdf of $Y$ given the input value: $f_Y(y \mid X = +1)$ and $f_Y(y \mid X = -1)$.

Solution. If $X$ is given and equal to $+1$, then $Y = N + 1$, i.e., $Y$ is simply an affine function of $N$. Therefore, we can conclude that $Y$ is also Gaussian with mean $E[N] + 1 = 1$ and variance $1^2 \operatorname{var}(N) = 1$. From this, we can write down the conditional pdf of $Y$ given $X = +1$ as

$$f_Y(y \mid X = +1) = \frac{1}{\sqrt{2\pi}}\, e^{-(y-1)^2/2}.$$

Similarly, if $X = -1$, then $Y$ is Gaussian with mean $-1$ and variance $1$. Hence,

$$f_Y(y \mid X = -1) = \frac{1}{\sqrt{2\pi}}\, e^{-(y+1)^2/2}.$$

2. (6 points.) The receiver decides a 0 was transmitted if the observed value of $y$ satisfies

$$f_Y(y \mid X = -1)\,P(X = -1) > f_Y(y \mid X = +1)\,P(X = +1)$$

and it decides a 1 was transmitted otherwise. Use the results from part 1 to show that this decision rule is equivalent to: if $y < T$ decide 0; if $y \ge T$ decide 1. $T$ is a threshold for this decision rule. Write down the values of $T$ for $p = 1/4$ and $p = 1/2$.

Solution. The above condition is

$$\frac{1}{\sqrt{2\pi}}\, e^{-(y+1)^2/2}\,(1 - p) > \frac{1}{\sqrt{2\pi}}\, e^{-(y-1)^2/2}\, p,$$

from which it can be further derived as follows.

$$
\begin{aligned}
e^{-(y-1)^2/2 + (y+1)^2/2} &< \frac{1-p}{p} \\
e^{2y} &< \frac{1-p}{p} \\
y &< \frac{1}{2} \ln \frac{1-p}{p} \\
\Longrightarrow \quad y &< T, \qquad T = \frac{1}{2} \ln \frac{1-p}{p}.
\end{aligned}
$$
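The equivalence between the likelihood comparison and the threshold rule can be sanity-checked numerically. The sketch below is illustrative only and not part of the original solution: the function names, the grid range, and the grid resolution are all assumptions chosen for the example. It evaluates both rules on a grid of $y$ values and reports whether they ever disagree.

```python
import math

def gaussian_pdf(y, mean):
    """Unit-variance Gaussian density with the given mean."""
    return math.exp(-(y - mean) ** 2 / 2) / math.sqrt(2 * math.pi)

def map_decides_zero(y, p):
    """Original rule: decide 0 when f(y|X=-1)(1-p) > f(y|X=+1)p."""
    return gaussian_pdf(y, -1.0) * (1 - p) > gaussian_pdf(y, +1.0) * p

def threshold(p):
    """T = (1/2) ln((1-p)/p), defined for 0 < p < 1."""
    return 0.5 * math.log((1 - p) / p)

def rules_agree(p, lo=-5.0, hi=5.0, steps=2001):
    """Compare both rules on an evenly spaced grid of y values (assumed range)."""
    t = threshold(p)
    for i in range(steps):
        y = lo + (hi - lo) * i / (steps - 1)
        if abs(y - t) < 1e-9:
            continue  # skip the boundary point, where rounding could decide the outcome
        if map_decides_zero(y, p) != (y < t):
            return False
    return True

for p in (0.25, 0.5):
    print(f"p={p}: T={threshold(p):.4f}, rules agree: {rules_agree(p)}")
```

A grid check like this is not a proof, but it catches sign errors in the exponent algebra quickly.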

Hence, with $T = \frac{1}{2} \ln \frac{1-p}{p}$, for $p = 1/4$ we have $T = 0.5 \ln 3$, and for $p = 1/2$ we have $T = 0$.

3. (5 points.) Let $P_{+1}$ and $P_{-1}$ be the probabilities that the receiver makes an error given that a $+1$ was transmitted and a $-1$ was transmitted, respectively. These probabilities depend on the parameter $T$ obtained from the previous question. Write down $P_{+1}$ and $P_{-1}$ as functions of $T$ using the definition of the cumulative distribution function of the normalized Gaussian:

$$\Phi(z) = \int_{-\infty}^{z} \frac{1}{\sqrt{2\pi}}\, e^{-t^2/2}\, dt.$$

Solution. The probability of error when $+1$ was transmitted is given by $P(Y < T \mid X = +1)$, since for $Y < T$ the receiver decides that a 0 was transmitted. Therefore, we can write $P_{+1}$ as

$$P_{+1} = P(Y < T \mid X = +1) = \int_{-\infty}^{T} f_Y(y \mid X = +1)\, dy = \int_{-\infty}^{T} \frac{1}{\sqrt{2\pi}}\, e^{-(y-1)^2/2}\, dy = \Phi(T - 1).$$

Similarly, the probability of error when $-1$ was transmitted is

$$P_{-1} = P(Y \ge T \mid X = -1) = \int_{T}^{\infty} f_Y(y \mid X = -1)\, dy = \int_{T}^{\infty} \frac{1}{\sqrt{2\pi}}\, e^{-(y+1)^2/2}\, dy = 1 - \Phi(T + 1) = \Phi(-(T + 1)).$$
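Although the quiz does not ask for numerical values, the two error probabilities are easy to evaluate as a check. The following is a minimal sketch, assuming the standard identity $\Phi(z) = \frac{1}{2}\left(1 + \operatorname{erf}(z/\sqrt{2})\right)$ and Python's `math.erf`; the helper names `phi`, `threshold`, and `error_probabilities` are hypothetical and introduced only for this example.

```python
import math

def phi(z):
    """Standard normal CDF, written in terms of the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def threshold(p):
    """Decision threshold T = (1/2) ln((1-p)/p), for 0 < p < 1."""
    return 0.5 * math.log((1 - p) / p)

def error_probabilities(p):
    """Return (T, P_plus, P_minus) for a prior probability p of sending +1."""
    t = threshold(p)
    p_plus = phi(t - 1.0)       # P(Y < T | X = +1) = Phi(T - 1)
    p_minus = phi(-(t + 1.0))   # P(Y >= T | X = -1) = Phi(-(T + 1))
    return t, p_plus, p_minus

for p in (0.25, 0.5):
    t, p_plus, p_minus = error_probabilities(p)
    print(f"p={p}: T={t:.4f}, P+1={p_plus:.4f}, P-1={p_minus:.4f}")
```

For $p = 1/2$ both values reduce to $\Phi(-1)$, so the two printed probabilities should coincide in that case.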

The expressions for $P_{+1}$ and $P_{-1}$ in terms of $\Phi$ follow from the fact that for a Gaussian variable $X$ with mean $\mu$ and variance $\sigma^2$, we can compute $P(X \le a)$ by

$$P(X \le a) = \Phi\!\left(\frac{a - \mu}{\sigma}\right),$$

together with the property $1 - \Phi(a) = \Phi(-a)$.

4. (5 points.) Can you compare $P_{+1}$ and $P_{-1}$? Which one is larger? Compare their values for $p = 1/4$ and $p = 1/2$. (No need to compute the actual values.)

Solution. We have $T - 1 \ge -(T + 1)$ for any $T \ge 0$, with equality when $T = 0$. Since the cumulative distribution function $\Phi(\cdot)$ is a strictly increasing function, i.e., $\Phi(a) \ge \Phi(b)$ for $a \ge b$ with equality only when $a = b$, we can conclude that $P_{+1} \ge P_{-1}$ for any $T \ge 0$. In conclusion, for $p = 1/4$ we have $T = 0.5 \ln 3 > 0$, so $P_{+1}$ is larger than $P_{-1}$, and for $p = 1/2$ we have $T = 0$, so $P_{+1} = P_{-1}$.

This result makes intuitive sense. When the 1 bit is less likely to occur ($p < 1/2$), the decision threshold $T$ is greater than zero. The probability that the receiver makes an error given that $+1$ was transmitted is then larger than the probability of error given that $-1$ was sent.
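The comparison can also be illustrated by simulating the channel directly. The sketch below is a rough Monte Carlo experiment and not part of the original solution: the function name, the trial count, and the fixed seed are assumptions made for the example. It draws bits with $P(X = +1) = p$, adds unit-variance Gaussian noise, applies the threshold rule, and estimates the two conditional error rates.

```python
import math
import random

def simulate_error_rates(p, trials=200_000, seed=0):
    """Estimate (P_plus, P_minus) by simulating Y = X + N with threshold T."""
    rng = random.Random(seed)                 # fixed seed so runs are repeatable
    t = 0.5 * math.log((1 - p) / p)           # T = (1/2) ln((1-p)/p)
    sent = {+1: 0, -1: 0}
    errors = {+1: 0, -1: 0}
    for _ in range(trials):
        x = +1 if rng.random() < p else -1    # +1 (a 1 bit) with probability p
        y = x + rng.gauss(0.0, 1.0)           # zero-mean, unit-variance noise
        decided = +1 if y >= t else -1        # decide 1 if y >= T, else 0
        sent[x] += 1
        if decided != x:
            errors[x] += 1
    return errors[+1] / sent[+1], errors[-1] / sent[-1]

for p in (0.25, 0.5):
    est_plus, est_minus = simulate_error_rates(p)
    print(f"p={p}: estimated P+1={est_plus:.4f}, estimated P-1={est_minus:.4f}")
```

With $p = 1/4$ the estimate of $P_{+1}$ should come out clearly above that of $P_{-1}$, while for $p = 1/2$ the two estimates should differ only by sampling noise.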
