TBL Explanation 1

Uploaded by

icebreakerch

TBL: Saint or Villain?

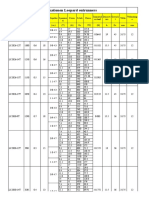

Many competition fliers, judges, officials, and administrators are still unsure of the benefits that the TBL statistical averaging system brings to radio control aerobatics, because they have not taken the trouble to understand the process. Some have even gone to the extreme of suggesting that it may be manipulated by scoring personnel and competition organisers to favour particular fliers or nations. Because they do not understand the system, they consider it evil. The arithmetical calculations (algorithm/algorism) cannot be altered, and score manipulation is simply not possible. It is true, however, that this algorithm is not usually made public, since that would allow any layman to attempt to write scoring software, with disastrous results. It is also a fact that not all people are mathematically inclined, and to those without knowledge of intricate mathematical calculations the algorithm will not mean anything. The following explanation is taken from the IAC rule book and will hopefully give a layman's guide to what TBL does, and does not do.

Competition aerobatics is scored by a panel(s) of judges who grade each manoeuvre in each flight on the basis of their perception of how well the competitor flies against the criteria of perfection, smoothness and gracefulness, positioning, and size. Judges, just like the competitors they grade, are not infallible. Whether caused by bias, inaccuracy, inconsistency, experience level, fatigue, or other factors, there will always be some variation in the scores. Different judges also have different styles. Some score generously, others score miserly. Certain judges use the full range of scores, while the scores of others may be clustered around a single value (maybe 7, or 8). It is because of these human factors that we use a panel of five judges rather than a single judge.

The TBL (Messrs Tarasov, Bauer, Long) system is founded on the premise that, by combining the scores from several judges in a proven statistical way, we can eliminate many of those undesirable effects. Even if a single judge viewed an identical flight a number of times, slightly different scores would be recorded for each flight. There is simply an element of randomness in the scoring process, no matter how competent, accurate, consistent, objective, experienced, or unbiased the judge may be. There is, however, a low probability that this randomness will be identical for all the judges on a panel. This is why statistical methods give a more reliable result than simple averaging, or discarding of high and low scores, as was used in the past.

The use of straight averages for scoring will result in the judges who use a wide range of scores having far more influence on the final standings than the judges who confine their scores to a narrow range. Discarding the high and low scores does not remove the second or third out-of-range or biased judges, and often removes the judges who are the most rigorous with their scores. The TBL statistical averaging system normalises the scores, and removes the biased and out-of-range scores from the final result, even if there is more than one biased or out-of-range score for a single flight.

In operation, TBL first normalises the individual judges' scores to remove the distortion caused by different judging styles. This is done by:

- raising the scores of the low-scoring judges
- lowering the scores of the high-scoring judges
- compressing the scores of the wide-range scoring judges
- expanding the scores of the narrow-range scoring judges.
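
The four adjustments above can be illustrated with a small sketch. This is not the TBL algorithm itself, which is not usually published; it is a minimal illustration that assumes each judge's scores are shifted to the panel's overall mean and rescaled to the average spread of the panel. The function name `normalise_panel`, the choice of target mean and spread, and the sample scores are all assumptions made for this example.

```python
from statistics import mean, pstdev


def normalise_panel(raw):
    """Shift and rescale each judge's scores to a common mean and spread.

    raw maps a judge's name to that judge's scores, one per competitor,
    with the competitors in the same order for every judge.
    Assumption: the target mean is the mean of all scores on the panel,
    and the target spread is the average of the judges' own spreads.
    """
    all_scores = [s for scores in raw.values() for s in scores]
    target_mean = mean(all_scores)
    target_sd = mean(pstdev(scores) for scores in raw.values())

    normalised = {}
    for judge, scores in raw.items():
        judge_mean = mean(scores)
        judge_sd = pstdev(scores)
        if judge_sd == 0:
            # A judge who gave everyone the same score carries no ranking
            # information; place all of that judge's scores on the mean.
            normalised[judge] = [target_mean] * len(scores)
        else:
            scale = target_sd / judge_sd  # < 1 compresses, > 1 expands
            normalised[judge] = [
                target_mean + (s - judge_mean) * scale for s in scores
            ]
    return normalised


# Hypothetical panel: four judging styles, four competitors.
raw_scores = {
    "generous": [9.0, 8.5, 9.5, 8.0],
    "miserly": [5.5, 5.0, 6.5, 4.5],
    "wide": [8.0, 5.0, 10.0, 2.0],
    "narrow": [7.1, 7.0, 7.2, 6.9],
}

normalised = normalise_panel(raw_scores)
for judge, scores in normalised.items():
    print(judge, [round(s, 2) for s in scores])
```

Because each judge's scores are only shifted and multiplied by a positive factor, the order in which that judge placed the competitors is the same before and after the adjustment.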

This normalisation process results in a mean (average) score, and a scatter (distance from the mean) for each judge. In case you are concerned that this process corrupts the ranking of the competitors: when the scores are normalised in this manner to remove stylistic differences between judges, the relative standing of each competitor is not affected. An individual competitor's score may be bumped up or down, but the relative finish order, as seen by each judge, does not change.

If each judge's score is compared to the mean, it will be seen that some scores are greater than the mean, and some are less than the mean (this difference is termed scatter). This means that the scores do not fall on a straight line, but within a window, with the mean at the centre line. How wide should that window be? That depends on how confident we want to be that a given score is valid. Based on many trials and analysis of contest data from the full-size aerobatic fraternity, it was established that one standard deviation (a bit less than 70% of the scores population) was good enough. To increase the population would increase the risk of passing biased or incompetent scores. To lower the population would discard too many valid scores. With the width of the window set, TBL removes any scores that are outside the window, and averages the remaining scores to produce a final score for the competitor.

The removal of those outlying scores is not done on a black and white basis. The window in TBL has fuzzy edges. Instead of a score being simply in, or out of, the window, the score is examined to determine exactly how far outside the window it is. If it is only slightly out, the score is still used, but is given less weight (importance) when the final average is calculated. This means that TBL progressively removes scores near the edge of the acceptance window from the final results, rather than using an all-or-nothing approach. When a judge's score does fall completely outside the fuzzy edge of the window, the score is totally discarded, and not taken into consideration in the final calculation of the score for a competitor.
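
The fuzzy-edged window can be pictured with a short sketch. Again, this is only an illustration under stated assumptions, not the published TBL calculation: the text gives the window as one standard deviation, but the point at which a score is discarded entirely (here 1.5 standard deviations), the straight-line taper between the two, and the names `fuzzy_weight` and `windowed_score` are all assumptions chosen for the example.

```python
from statistics import mean, pstdev


def fuzzy_weight(score, centre, sd, inner=1.0, outer=1.5):
    """Weight given to one score when the final average is calculated.

    Full weight inside `inner` standard deviations of the centre, zero
    weight beyond `outer`, and a straight-line taper in between.
    Assumption: `outer` and the linear taper are illustrative choices.
    """
    if sd == 0:
        return 1.0  # every judge agreed, so nothing is out of range
    distance = abs(score - centre) / sd
    if distance <= inner:
        return 1.0
    if distance >= outer:
        return 0.0
    return (outer - distance) / (outer - inner)


def windowed_score(scores, inner=1.0, outer=1.5):
    """Weighted average of one competitor's normalised panel scores."""
    centre = mean(scores)
    sd = pstdev(scores)
    weights = [fuzzy_weight(s, centre, sd, inner, outer) for s in scores]
    total = sum(weights)
    if total == 0:
        return centre  # fall back to the plain mean if nothing survives
    return sum(w * s for w, s in zip(weights, scores)) / total


# Hypothetical panel of five: four judges agree, one is far lower.
panel = [7.0, 7.5, 7.2, 6.8, 4.0]
print("plain mean:    ", round(mean(panel), 3))
print("windowed score:", round(windowed_score(panel), 3))
```

In this example the low score lies well outside the window and receives no weight, so the result is the average of the four remaining scores. A score that sits just outside one standard deviation would instead be kept with a weight between 0 and 1.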
TBL can fix inconsistent, biased, and inaccurate judging, but it can never turn a bad judge into a good one. All R/C aerobatic judges are urged to study, know, and apply the Sporting Code and the Judges Guide, and to obtain as much practical experience as possible. Then there will be no need for the TBL system to discard your scores, even if the scores are modified to more accurately reflect the performance of the competitors. Competitors are urged to study the TBL process, so that they may understand why a TBL score is often different from a raw score, while the relative placing and ranking of an individual competitor does not change. Any TBL scoring system should only be applied where there are at least five judges and at least twenty competitors, since there would then be enough data to work with.
