Professional Documents

Culture Documents

Software Testing

Uploaded by

asdfgf123Original Description:

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Software Testing

Uploaded by

asdfgf123Copyright:

Available Formats

Software testing From Wikipedia, the free encyclopedia Jump to: navigation, search Software development process Activities

and steps Requirements Specification Architecture Design Implementation Testing Debugging Deployment Maintenance Methodologies Waterfall Prototype model Incremental Iterative V-Model Spiral Scrum Cleanroom RAD DSDM RUP XP Agile Lean Dual Vee Model TDD Supporting disciplines Configuration management Documentation Quality assurance (SQA) Project management User experience design Tools Compiler Debugger Profiler GUI designer IDE v t e Software testing is an investigation conducted to provide stakeholders with info rmation about the quality of the product or service under test.[1] Software test ing can also provide an objective, independent view of the software to allow the business to appreciate and understand the risks of software implementation. Tes t techniques include, but are not limited to, the process of executing a program

or application with the intent of finding software bugs (errors or other defect s). Software testing can be stated as the process of validating and verifying that a software program/application/product: meets the requirements that guided its design and development; works as expected; can be implemented with the same characteristics. satisfies the needs of stakeholders Software testing, depending on the testing method employed, can be implemented a t any time in the development process. Traditionally most of the test effort occ urs after the requirements have been defined and the coding process has been com pleted, but in the Agile approaches most of the test effort is on-going. As such , the methodology of the test is governed by the chosen software development met hodology. Different software development models will focus the test effort at different po ints in the development process. Newer development models, such as Agile, often employ test driven development and place an increased portion of the testing in the hands of the developer, before it reaches a formal team of testers. In a mor e traditional model, most of the test execution occurs after the requirements ha ve been defined and the coding process has been completed. Contents 1 Overview 2 History 3 Software testing topics 3.1 Scope 3.2 Functional vs non-functional testing 3.3 Defects and failures 3.4 Finding faults early 3.5 Compatibility testing 3.6 Input combinations and preconditions 3.7 Static vs. dynamic testing 3.8 Software verification and validation 3.9 The software testing team 3.10 Software quality assurance (SQA) 4 Testing methods 4.1 The box approach 4.1.1 White-Box testing 4.1.2 Black-box testing 4.1.3 Grey-box testing 4.2 Visual testing 5 Testing levels 5.1 Test target 5.1.1 Unit testing 5.1.2 Integration testing 5.1.3 System testing 5.1.4 System integration testing 5.2 Objectives of testing 5.2.1 Installation testing 5.2.2 Sanity testing 5.2.3 Regression testing 5.2.4 Acceptance testing 5.2.5 Alpha testing 5.2.6 Beta testing 6 Non-functional testing 6.1 Software performance testing

6.2 Usability testing 6.3 Security testing 6.4 Internationalization and localization 6.5 Destructive testing 7 The testing process 7.1 Traditional CMMI or waterfall development model 7.2 Agile or Extreme development model 7.3 A sample testing cycle 8 Automated testing 8.1 Testing tools 8.2 Measurement in software testing 9 Testing artifacts 10 Certifications 11 Controversy 12 See also 13 Further reading 14 References 15 External links Overview Testing can never completely identify all the defects within software.[2] Instea d, it furnishes a criticism or comparison that compares the state and behavior o f the product against oracles principles or mechanisms by which someone might reco gnize a problem. These oracles may include (but are not limited to) specificatio ns, contracts,[3] comparable products, past versions of the same product, infere nces about intended or expected purpose, user or customer expectations, relevant standards, applicable laws, or other criteria. Every software product has a target audience. For example, the audience for vide o game software is completely different from banking software. Therefore, when a n organization develops or otherwise invests in a software product, it can asses s whether the software product will be acceptable to its end users, its target a udience, its purchasers, and other stakeholders. Software testing is the process of attempting to make this assessment. A study conducted by NIST in 2002 reports that software bugs cost the U.S. econo my $59.5 billion annually. More than a third of this cost could be avoided if be tter software testing was performed.[4] History The separation of debugging from testing was initially introduced by Glenford J. Myers in 1979.[5] Although his attention was on breakage testing ("a successful test is one that finds a bug"[5][6]) it illustrated the desire of the software engineering community to separate fundamental development activities, such as de bugging, from that of verification. Dave Gelperin and William C. Hetzel classifi ed in 1988 the phases and goals in software testing in the following stages:[7] Until 1956 - Debugging oriented[8] 1957 1978 - Demonstration oriented[9] 1979 1982 - Destruction oriented[10] 1983 1987 - Evaluation oriented[11] 1988 2000 - Prevention oriented[12] Software testing topics Scope A primary purpose of testing is to detect software failures so that defects may be discovered and corrected. Testing cannot establish that a product functions p roperly under all conditions but can only establish that it does not function pr

operly under specific conditions.[13] The scope of software testing often includ es examination of code as well as execution of that code in various environments and conditions as well as examining the aspects of code: does it do what it is supposed to do and do what it needs to do. In the current culture of software de velopment, a testing organization may be separate from the development team. The re are various roles for testing team members. Information derived from software testing may be used to correct the process by which software is developed.[14] Functional vs non-functional testing Functional testing refers to activities that verify a specific action or functio n of the code. These are usually found in the code requirements documentation, a lthough some development methodologies work from use cases or user stories. Func tional tests tend to answer the question of "can the user do this" or "does this particular feature work." Non-functional testing refers to aspects of the software that may not be related to a specific function or user action, such as scalability or other performance , behavior under certain constraints, or security. Testing will determine the fl ake point, the point at which extremes of scalability or performance leads to un stable execution. Non-functional requirements tend to be those that reflect the quality of the product, particularly in the context of the suitability perspecti ve of its users. Defects and failures Not all software defects are caused by coding errors. One common source of expen sive defects is caused by requirement gaps, e.g., unrecognized requirements, tha t result in errors of omission by the program designer.[15] A common source of r equirements gaps is non-functional requirements such as testability, scalability , maintainability, usability, performance, and security. Software faults occur through the following processes. A programmer makes an err or (mistake), which results in a defect (fault, bug) in the software source code . If this defect is executed, in certain situations the system will produce wron g results, causing a failure.[16] Not all defects will necessarily result in fai lures. For example, defects in dead code will never result in failures. A defect can turn into a failure when the environment is changed. Examples of these chan ges in environment include the software being run on a new computer hardware pla tform, alterations in source data or interacting with different software.[16] A single defect may result in a wide range of failure symptoms. Finding faults early It is commonly believed that the earlier a defect is found the cheaper it is to fix it. The following table shows the cost of fixing the defect depending on the stage it was found.[17] For example, if a problem in the requirements is found only post-release, then it would cost 10 100 times more to fix than if it had alre ady been found by the requirements review. Modern continuous deployment practice s, and cloud-based services may cost less for re-deployment and maintenance than in the past. Cost to fix a defect Time detected Requirements Architecture Construction System test Post-release Time introduced Requirements 1 3 5 10 10 10 100 Architecture 1 10 15 25 100 Construction 1 10 10 25 Compatibility testing A common cause of software failure (real or perceived) is a lack of its compatib ility with other application software, operating systems (or operating system ve rsions, old or new), or target environments that differ greatly from the origina l (such as a terminal or GUI application intended to be run on the desktop now b eing required to become a web application, which must render in a web browser).

For example, in the case of a lack of backward compatibility, this can occur bec ause the programmers develop and test software only on the latest version of the target environment, which not all users may be running. This results in the uni ntended consequence that the latest work may not function on earlier versions of the target environment, or on older hardware that earlier versions of the targe t environment was capable of using. Sometimes such issues can be fixed by proact ively abstracting operating system functionality into a separate program module or library. Input combinations and preconditions A very fundamental problem with software testing is that testing under all combi nations of inputs and preconditions (initial state) is not feasible, even with a simple product.[13][18] This means that the number of defects in a software pro duct can be very large and defects that occur infrequently are difficult to find in testing. More significantly, non-functional dimensions of quality (how it is supposed to be versus what it is supposed to do) usability, scalability, performa nce, compatibility, reliability can be highly subjective; something that constitut es sufficient value to one person may be intolerable to another. Static vs. dynamic testing There are many approaches to software testing. Reviews, walkthroughs, or inspect ions are considered as static testing, whereas actually executing programmed cod e with a given set of test cases is referred to as dynamic testing. Static testi ng can be (and unfortunately in practice often is) omitted. Dynamic testing take s place when the program itself is used for the first time (which is generally c onsidered the beginning of the testing stage). Dynamic testing may begin before the program is 100% complete in order to test particular sections of code (modul es or discrete functions). Typical techniques for this are either using stubs/dr ivers or execution from a debugger environment. For example, spreadsheet program s are, by their very nature, tested to a large extent interactively ("on the fly "), with results displayed immediately after each calculation or text manipulati on. Software verification and validation Main article: Verification and validation (software) Software testing is used in association with verification and validation:[19] Verification: Have we built the software right? (i.e., does it implement the requirements). Validation: Have we built the right software? (i.e., do the requirements sat isfy the customer). The terms verification and validation are commonly used interchangeably in the i ndustry; it is also common to see these two terms incorrectly defined. According to the IEEE Standard Glossary of Software Engineering Terminology: Verification is the process of evaluating a system or component to determine whether the products of a given development phase satisfy the conditions impose d at the start of that phase. Validation is the process of evaluating a system or component during or at t he end of the development process to determine whether it satisfies specified re quirements. According to the IS0 9000 standard: Verification is confirmation by examination and through provision of objecti ve evidence that specified requirements have been fulfilled. Validation is confirmation by examination and through provision of objective evidence that the requirements for a specific intended use or application have been fulfilled.

The software testing team Software testing can be done by software testers. Until the 1980s the term "soft ware tester" was used generally, but later it was also seen as a separate profes sion. Regarding the periods and the different goals in software testing,[20] dif ferent roles have been established: manager, test lead, test designer, tester, a utomation developer, and test administrator. Software quality assurance (SQA) Though controversial, software testing is a part of the software quality assuran ce (SQA) process.[13] In SQA, software process specialists and auditors are conc erned for the software development process rather than just the artifacts such a s documentation, code and systems. They examine and change the software engineer ing process itself to reduce the number of faults that end up in the delivered s oftware: the so-called defect rate. What constitutes an "acceptable defect rate" depends on the nature of the software; A flight simulator video game would have much higher defect tolerance than software for an actual airplane. Although the re are close links with SQA, testing departments often exist independently, and there may be no SQA function in some companies. Software testing is a task inten ded to detect defects in software by contrasting a computer program's expected r esults with its actual results for a given set of inputs. By contrast, QA (quali ty assurance) is the implementation of policies and procedures intended to preve nt defects from occurring in the first place. Testing methods The box approach Software testing methods are traditionally divided into white- and black-box tes ting. These two approaches are used to describe the point of view that a test en gineer takes when designing test cases. White-Box testing Main article: White-box testing White-box testing is when the tester has access to the internal data structures and algorithms including the code that implements these. Types of white-box testing The following types of white-box testing exist: API testing (application programming interface) - testing of the applica tion using public and private APIs Code coverage - creating tests to satisfy some criteria of code coverage (e.g., the test designer can create tests to cause all statements in the progra m to be executed at least once) Fault injection methods - intentionally introducing faults to gauge the efficacy of testing strategies Mutation testing methods Static testing - All types Test coverage White-box testing methods can also be used to evaluate the completeness of a test suite that was created with black-box testing methods. This allows the sof tware team to examine parts of a system that are rarely tested and ensures that the most important function points have been tested.[21] Two common forms of code coverage are: Function coverage, which reports on functions executed Statement coverage, which reports on the number of lines executed to com plete the test

They both return a code coverage metric, measured as a percentage. Black-box testing Main article: Black-box testing Black-box testing treats the software as a "black box" without any knowledge of in ternal implementation. Black-box testing methods include: equivalence partitioni ng, boundary value analysis, all-pairs testing, fuzz testing, model-based testin g, exploratory testing and specification-based testing. Specification-based testing: Aims to test the functionality of software acco rding to the applicable requirements.[22] Thus, the tester inputs data into, and only sees the output from, the test object. This level of testing usually requi res thorough test cases to be provided to the tester, who then can simply verify that for a given input, the output value (or behavior), either "is" or "is not" the same as the expected value specified in the test case. Specification-based testing is necessary, but it is insufficient to guard ag ainst certain risks.[23] Advantages and disadvantages: The black-box tester has no "bonds" with the c ode, and a tester's perception is very simple: a code must have bugs. Using the principle, "Ask and you shall receive," black-box testers find bugs where progra mmers do not. On the other hand, black-box testing has been said to be "like a w alk in a dark labyrinth without a flashlight," because the tester doesn't know h ow the software being tested was actually constructed. As a result, there are si tuations when (1) a tester writes many test cases to check something that could have been tested by only one test case, and/or (2) some parts of the back-end ar e not tested at all. Therefore, black-box testing has the advantage of "an unaffiliated opinion", on the one hand, and the disadvantage of "blind exploring", on the other. [24] Grey-box testing Grey-box testing (American spelling: gray-box testing) involves having knowledge of internal data structures and algorithms for purposes of designing tests, whi le executing those tests at the user, or black-box level. The tester is not requ ired to have full access to the software's source code.[25][not in citation give n] Manipulating input data and formatting output do not qualify as grey-box, bec ause the input and output are clearly outside of the "black box" that we are cal ling the system under test. This distinction is particularly important when cond ucting integration testing between two modules of code written by two different developers, where only the interfaces are exposed for test. However, modifying a data repository does qualify as grey-box, as the user would not normally be abl e to change the data outside of the system under test. Grey-box testing may also include reverse engineering to determine, for instance, boundary values or erro r messages. By knowing the underlying concepts of how the software works, the tester makes b etter-informed testing choices while testing the software from outside. Typicall y, a grey-box tester will be permitted to set up his testing environment; for in stance, seeding a database; and the tester can observe the state of the product being tested after performing certain actions. For instance, in testing a databa se product he/she may fire an SQL query on the database and then observe the dat abase, to ensure that the expected changes have been reflected. Grey-box testing implements intelligent test scenarios, based on limited information. This will particularly apply to data type handling, exception handling, and so on.[26] Visual testing The aim of visual testing is to provide developers with the ability to examine w

hat was happening at the point of software failure by presenting the data in suc h a way that the developer can easily ?nd the information he requires, and the i nformation is expressed clearly.[27][28] At the core of visual testing is the idea that showing someone a problem (or a t est failure), rather than just describing it, greatly increases clarity and unde rstanding. Visual testing therefore requires the recording of the entire test pr ocess capturing everything that occurs on the test system in video format. Outpu t videos are supplemented by real-time tester input via picture-in-a-picture web cam and audio commentary from microphones. Visual testing provides a number of advantages. The quality of communication is increased dramatically because testers can show the problem (and the events lead ing up to it) to the developer as opposed to just describing it and the need to replicate test failures will cease to exist in many cases. The developer will ha ve all the evidence he requires of a test failure and can instead focus on the c ause of the fault and how it should be fixed. Visual testing is particularly well-suited for environments that deploy agile me thods in their development of software, since agile methods require greater comm unication between testers and developers and collaboration within small teams.[c itation needed] Ad hoc testing and exploratory testing are important methodologies for checking software integrity, because they require less preparation time to implement, whi lst important bugs can be found quickly. In ad hoc testing, where testing takes place in an improvised, impromptu way, the ability of a test tool to visually re cord everything that occurs on a system becomes very important.[clarification ne eded][citation needed] Visual testing is gathering recognition in customer acceptance and usability tes ting, because the test can be used by many individuals involved in the developme nt process.[citation needed] For the customer, it becomes easy to provide detail ed bug reports and feedback, and for program users, visual testing can record us er actions on screen, as well as their voice and image, to provide a complete pi cture at the time of software failure for the developer. Testing levels Tests are frequently grouped by where they are added in the software development process, or by the level of specificity of the test. The main levels during the development process as defined by the SWEBOK guide are unit-, integration-, and system testing that are distinguished by the test target without implying a spe cific process model.[29] Other test levels are classified by the testing objecti ve.[29] Test target Unit testing Main article: Unit testing Unit testing, also known as component unctionality of a specific section of object-oriented environment, this is l unit tests include the constructors testing, refers to tests that verify the f code, usually at the function level. In an usually at the class level, and the minima and destructors.[30]

These types of tests are usually written by developers as they work on code (whi te-box style), to ensure that the specific function is working as expected. One function might have multiple tests, to catch corner cases or other branches in t he code. Unit testing alone cannot verify the functionality of a piece of softwa re, but rather is used to assure that the building blocks the software uses work independently of each other. Integration testing

Main article: Integration testing Integration testing is any type of software testing that seeks to verify the int erfaces between components against a software design. Software components may be integrated in an iterative way or all together ("big bang"). Normally the forme r is considered a better practice since it allows interface issues to be localis ed more quickly and fixed. Integration testing works to expose defects in the interfaces and interaction be tween integrated components (modules). Progressively larger groups of tested sof tware components corresponding to elements of the architectural design are integ rated and tested until the software works as a system.[31] System testing Main article: System testing System testing tests a completely integrated system to verify that it meets its requirements.[32] System integration testing Main article: System integration testing System integration testing verifies that a system is integrated to any external or third-party systems defined in the system requirements.[citation needed] Objectives of testing Installation testing Main article: Installation testing An Installation test assures that the system is installed correctly and working at actual customer's hardware. Sanity testing Main article: Sanity testing A Sanity test determines whether it is reasonable to proceed with further testin g. Regression testing Main article: Regression testing Regression testing focuses on finding defects after a major code change has occu rred. Specifically, it seeks to uncover software regressions, or old bugs that h ave come back. Such regressions occur whenever software functionality that was p reviously working correctly stops working as intended. Typically, regressions oc cur as an unintended consequence of program changes, when the newly developed pa rt of the software collides with the previously existing code. Common methods of regression testing include re-running previously run tests and checking whether previously fixed faults have re-emerged. The depth of testing depends on the ph ase in the release process and the risk of the added features. They can either b e complete, for changes added late in the release or deemed to be risky, to very shallow, consisting of positive tests on each feature, if the changes are early in the release or deemed to be of low risk. Acceptance testing Main article: Acceptance testing Acceptance testing can mean one of two things: A smoke test is used as an acceptance test prior to introducing a new build to the main testing process, i.e. before integration or regression. Acceptance testing performed by the customer, often in their lab environment on their own hardware, is known as user acceptance testing (UAT). Acceptance te sting may be performed as part of the hand-off process between any two phases of development.[citation needed]

Alpha testing Alpha testing is simulated or actual operational testing by potential users/cust omers or an independent test team at the developers' site. Alpha testing is ofte n employed for off-the-shelf software as a form of internal acceptance testing, before the software goes to beta testing.[33] Beta testing Beta testing comes after alpha testing and can be considered a form of external user acceptance testing. Versions of the software, known as beta versions, are r eleased to a limited audience outside of the programming team. The software is r eleased to groups of people so that further testing can ensure the product has f ew faults or bugs. Sometimes, beta versions are made available to the open publi c to increase the feedback field to a maximal number of future users.[citation n eeded] Non-functional testing Special methods exist to test non-functional aspects of software. In contrast to functional testing, which establishes the correct operation of the software (fo r example that it matches the expected behavior defined in the design requiremen ts), non-functional testing verifies that the software functions properly even w hen it receives invalid or unexpected inputs. Software fault injection, in the f orm of fuzzing, is an example of non-functional testing. Non-functional testing, especially for software, is designed to establish whether the device under test can tolerate invalid or unexpected inputs, thereby establishing the robustness of input validation routines as well as error-management routines. Various comme rcial non-functional testing tools are linked from the software fault injection page; there are also numerous open-source and free software tools available that perform non-functional testing. Software performance testing Performance testing is in general executed to determine how a system or sub-syst em performs in terms of responsiveness and stability under a particular workload . It can also serve to investigate, measure, validate or verify other quality at tributes of the system, such as scalability, reliability and resource usage. Load testing is primarily concerned with testing that the system can continue to operate under a specific load, whether that be large quantities of data or a la rge number of users. This is generally referred to as software scalability. The related load testing activity of when performed as a non-functional activity is often referred to as endurance testing. Volume testing is a way to test function ality. Stress testing is a way to test reliability under unexpected or rare work loads. Stability testing (often referred to as load or endurance testing) checks to see if the software can continuously function well in or above an acceptable period. There is little agreement on what the specific goals of performance testing are. The terms load testing, performance testing, reliability testing, and volume te sting, are often used interchangeably. Usability testing Usability testing is needed to check if the user interface is easy to use and un derstand. It is concerned mainly with the use of the application. Security testing Security testing is essential for software that processes confidential data to p revent system intrusion by hackers. Internationalization and localization The general ability of software to be internationalized and localized can be aut

omatically tested without actual translation, by using pseudolocalization. It wi ll verify that the application still works, even after it has been translated in to a new language or adapted for a new culture (such as different currencies or time zones).[34] Actual translation to human languages must be tested, too. Possible localization failures include: Software is often localized by translating a list of strings out of context, and the translator may choose the wrong translation for an ambiguous source str ing. Technical terminology may become inconsistent if the project is translated b y several people without proper coordination or if the translator is imprudent. Literal word-for-word translations may sound inappropriate, artificial or to o technical in the target language. Untranslated messages in the original language may be left hard coded in the source code. Some messages may be created automatically at run time and the resulting str ing may be ungrammatical, functionally incorrect, misleading or confusing. Software may use a keyboard shortcut which has no function on the source lan guage's keyboard layout, but is used for typing characters in the layout of the target language. Software may lack support for the character encoding of the target language. Fonts and font sizes which are appropriate in the source language may be ina ppropriate in the target language; for example, CJK characters may become unread able if the font is too small. A string in the target language may be longer than the software can handle. This may make the string partly invisible to the user or cause the software to c rash or malfunction. Software may lack proper support for reading or writing bi-directional text. Software may display images with text that was not localized. Localized operating systems may have differently-named system configuration files and environment variables and different formats for date and currency. To avoid these and other localization problems, a tester who knows the target la nguage must run the program with all the possible use cases for translation to s ee if the messages are readable, translated correctly in context and do not caus e failures. Destructive testing Main article: Destructive testing Destructive testing attempts to cause the software or a sub-system to fail, in o rder to test its robustness. The testing process Traditional CMMI or waterfall development model A common practice of software testing is that testing is performed by an indepen dent group of testers after the functionality is developed, before it is shipped to the customer.[35] This practice often results in the testing phase being use d as a project buffer to compensate for project delays, thereby compromising the time devoted to testing.[36] Another practice is to start software testing at the same moment the project sta rts and it is a continuous process until the project finishes.[37] Further information: Capability Maturity Model Integration and Waterfall model Agile or Extreme development model In contrast, some emerging software disciplines such as extreme programming and the agile software development movement, adhere to a "test-driven software devel opment" model. In this process, unit tests are written first, by the software en

gineers (often with pair programming in the extreme programming methodology). Of course these tests fail initially; as they are expected to. Then as code is wri tten it passes incrementally larger portions of the test suites. The test suites are continuously updated as new failure conditions and corner cases are discove red, and they are integrated with any regression tests that are developed. Unit tests are maintained along with the rest of the software source code and general ly integrated into the build process (with inherently interactive tests being re legated to a partially manual build acceptance process). The ultimate goal of th is test process is to achieve continuous integration where software updates can be published to the public frequently. [38] [39] A sample testing cycle Although variations exist between organizations, there is a typical cycle for te sting.[40] The sample below is common among organizations employing the Waterfal l development model. Requirements analysis: Testing should begin in the requirements phase of the software development life cycle. During the design phase, testers work with dev elopers in determining what aspects of a design are testable and with what param eters those tests work. Test planning: Test strategy, test plan, testbed creation. Since many activi ties will be carried out during testing, a plan is needed. Test development: Test procedures, test scenarios, test cases, test datasets , test scripts to use in testing software. Test execution: Testers execute the software based on the plans and test doc uments then report any errors found to the development team. Test reporting: Once testing is completed, testers generate metrics and make final reports on their test effort and whether or not the software tested is re ady for release. Test result analysis: Or Defect Analysis, is done by the development team us ually along with the client, in order to decide what defects should be assigned, fixed, rejected (i.e. found software working properly) or deferred to be dealt with later. Defect Retesting: Once a defect has been dealt with by the development team, it is retested by the testing team. AKA Resolution testing. Regression testing: It is common to have a small test program built of a sub set of tests, for each integration of new, modified, or fixed software, in order to ensure that the latest delivery has not ruined anything, and that the softwa re product as a whole is still working correctly. Test Closure: Once the test meets the exit criteria, the activities such as capturing the key outputs, lessons learned, results, logs, documents related to the project are archived and used as a reference for future projects. Automated testing Main article: Test automation Many programming groups are relying more and more on automated testing, especial ly groups that use test-driven development. There are many frameworks to write t ests in, and continuous integration software will run tests automatically every time code is checked into a version control system. While automation cannot reproduce everything that a human can do (and all the wa ys they think of doing it), it can be very useful for regression testing. Howeve r, it does require a well-developed test suite of testing scripts in order to be truly useful. Testing tools Program testing and fault detection can be aided significantly by testing tools and debuggers. Testing/debug tools include features such as:

Program monitors, permitting full or partial monitoring of program code incl uding: Instruction set simulator, permitting complete instruction level monitor ing and trace facilities Program animation, permitting step-by-step execution and conditional bre akpoint at source level or in machine code Code coverage reports Formatted dump or symbolic debugging, tools allowing inspection of program v ariables on error or at chosen points Automated functional GUI testing tools are used to repeat system-level tests through the GUI Benchmarks, allowing run-time performance comparisons to be made Performance analysis (or profiling tools) that can help to highlight hot spo ts and resource usage Some of these features may be incorporated into an Integrated Development Enviro nment (IDE). A regression testing technique is to have a standard set of tests, which cov er existing functionality that result in persistent tabular data, and to compare pre-change data to post-change data, where there should not be differences, usi ng a tool like diffkit. Differences detected indicate unexpected functionality c hanges or "regression". Measurement in software testing Usually, quality is constrained to such topics as correctness, completeness, sec urity,[citation needed] but can also include more technical requirements as desc ribed under the ISO standard ISO/IEC 9126, such as capability, reliability, effi ciency, portability, maintainability, compatibility, and usability. There are a number of frequently-used software measures, often called metrics, w hich are used to assist in determining the state of the software or the adequacy of the testing. Testing artifacts The software testing process can produce several artifacts. Test plan A test specification is called a test plan. The developers are well aware wh at test plans will be executed and this information is made available to managem ent and the developers. The idea is to make them more cautious when developing t heir code or making additional changes. Some companies have a higher-level docum ent called a test strategy. Traceability matrix A traceability matrix is a table that correlates requirements or design docu ments to test documents. It is used to change tests when related source document s are changed, to select test cases for execution when planning for regression t ests by considering requirement coverage. Test case A test case normally consists of a unique identifier, requirement references from a design specification, preconditions, events, a series of steps (also kno wn as actions) to follow, input, output, expected result, and actual result. Cli nically defined a test case is an input and an expected result.[41] This can be as pragmatic as 'for condition x your derived result is y', whereas other test c ases described in more detail the input scenario and what results might be expec ted. It can occasionally be a series of steps (but often steps are contained in a separate test procedure that can be exercised against multiple test cases, as

a matter of economy) but with one expected result or expected outcome. The optio nal fields are a test case ID, test step, or order of execution number, related requirement(s), depth, test category, author, and check boxes for whether the te st is automatable and has been automated. Larger test cases may also contain pre requisite states or steps, and descriptions. A test case should also contain a p lace for the actual result. These steps can be stored in a word processor docume nt, spreadsheet, database, or other common repository. In a database system, you may also be able to see past test results, who generated the results, and what system configuration was used to generate those results. These past results woul d usually be stored in a separate table. Test script A test script is a procedure, or programing code that replicates user action s. Initially the term was derived from the product of work created by automated regression test tools. Test Case will be a baseline to create test scripts using a tool or a program. Test suite The most common term for a collection of test cases is a test suite. The tes t suite often also contains more detailed instructions or goals for each collect ion of test cases. It definitely contains a section where the tester identifies the system configuration used during testing. A group of test cases may also con tain prerequisite states or steps, and descriptions of the following tests. Test data In most cases, multiple sets of values or data are used to test the same fun ctionality of a particular feature. All the test values and changeable environme ntal components are collected in separate files and stored as test data. It is a lso useful to provide this data to the client and with the product or a project. Test harness The software, tools, samples of data input and output, and configurations ar e all referred to collectively as a test harness. Certifications Several certification programs exist to support the professional aspirations of software testers and quality assurance specialists. No certification currently o ffered actually requires the applicant to demonstrate the ability to test softwa re. No certification is based on a widely accepted body of knowledge. This has l ed some to declare that the testing field is not ready for certification.[42] Ce rtification itself cannot measure an individual's productivity, their skill, or practical knowledge, and cannot guarantee their competence, or professionalism a s a tester.[43] Software testing certification types Exam-based: Formalized exams, which need to be passed; can also be learn ed by self-study [e.g., for ISTQB or QAI][44] Education-based: Instructor-led sessions, where each course has to be pa ssed [e.g., International Institute for Software Testing (IIST)]. Testing certifications Certified Associate in Software Testing (CAST) offered by the QAI [45] CATe offered by the International Institute for Software Testing[46] Certified Manager in Software Testing (CMST) offered by the QAI [45] Certified Software Tester (CSTE) offered by the Quality Assurance Instit ute (QAI)[45] Certified Software Test Professional (CSTP) offered by the International

Institute for Software Testing[46] CSTP (TM) (Australian Version) offered by K. J. Ross & Associates[47] ISEB offered by the Information Systems Examinations Board ISTQB Certified Tester, Foundation Level (CTFL) offered by the Internati onal Software Testing Qualification Board [48][49] ISTQB Certified Tester, Advanced Level (CTAL) offered by the Internation al Software Testing Qualification Board [48][49] TMPF TMap Next Foundation offered by the Examination Institute for Infor mation Science[50] TMPA TMap Next Advanced offered by the Examination Institute for Informa tion Science[50] Quality assurance certifications CMSQ CSQA CSQE CQIA Controversy Some of the major software testing controversies include: What constitutes responsible software testing? Members of the "context-driven" school of testing[52] believe that there are no "best practices" of testing, but rather that testing is a set of skills that allow the tester to select or invent testing practices to suit each unique situ ation.[53] Agile vs. traditional Should testers learn to work under conditions of uncertainty and constant ch ange or should they aim at process "maturity"? The agile testing movement has re ceived growing popularity since 2006 mainly in commercial circles,[54][55] where as government and military[56] software providers use this methodology but also the traditional test-last models (e.g. in the Waterfall model).[citation needed] Exploratory test vs. scripted [57] Should tests be designed at the same time as they are executed or shoul d they be designed beforehand? Manual testing vs. automated Some writers believe that test automation is so expensive relative to its va lue that it should be used sparingly.[58] More in particular, test-driven develo pment states that developers should write unit-tests of the XUnit type before co ding the functionality. The tests then can be considered as a way to capture and implement the requirements. Software design vs. software implementation [59] Should testing be carried out only at the end or throughout the whole p rocess? Who watches the watchmen? The idea is that any form of observation is also an interaction the act of tes ting can also affect that which is being tested.[60] See also Portal icon Software Testing portal Book icon Book: Software testing Wikipedia books are collections of articles that can be downloaded or ordered in print. offered offered offered offered by by by by the the the the Quality Assurance Institute (QAI).[45] Quality Assurance Institute (QAI)[45] American Society for Quality (ASQ)[51] American Society for Quality (ASQ)[51]

Acceptance testing All-pairs testing Automated testing Dynamic program analysis Formal verification GUI software testing Independent test organization Manual testing Orthogonal array testing Pair Testing Reverse semantic traceability Software testability Static code analysis Orthogonal Defect Classification Test management tools Web testing Further reading Bertrand Meyer, "Seven Principles of Software Testing," Computer, vol. 41, n o. 8, pp. 99-101, Aug. 2008, doi:10.1109/MC.2008.306; available online. References ^ Exploratory Testing, Cem Kaner, Florida Institute of Technology, Quality A ssurance Institute Worldwide Annual Software Testing Conference, Orlando, FL, No vember 2006 ^ Software Testing by Jiantao Pan, Carnegie Mellon University ^ Leitner, A., Ciupa, I., Oriol, M., Meyer, B., Fiva, A., "Contract Driven D evelopment = Test Driven Development - Writing Test Cases", Proceedings of ESEC/ FSE'07: European Software Engineering Conference and the ACM SIGSOFT Symposium o n the Foundations of Software Engineering 2007, (Dubrovnik, Croatia), September 2007 ^ Software errors cost U.S. economy $59.5 billion annually, NIST report ^ a b Myers, Glenford J. (1979). The Art of Software Testing. John Wiley and Sons. ISBN 0-471-04328-1. ^ Company, People's Computer (1987). "Dr. Dobb's journal of software tools f or the professional programmer". Dr. Dobb's journal of software tools for the pr ofessional programmer (M&T Pub) 12 (1 6): 116. ^ Gelperin, D.; B. Hetzel (1988). "The Growth of Software Testing". CACM 31 (6). ISSN 0001-0782. ^ until 1956 it was the debugging oriented period, when testing was often as sociated to debugging: there was no clear difference between testing and debuggi ng. Gelperin, D.; B. Hetzel (1988). "The Growth of Software Testing". CACM 31 (6 ). ISSN 0001-0782. ^ From 1957 1978 there was the demonstration oriented period where debugging a nd testing was distinguished now - in this period it was shown, that software sa tisfies the requirements. Gelperin, D.; B. Hetzel (1988). "The Growth of Softwar e Testing". CACM 31 (6). ISSN 0001-0782. ^ The time between 1979 1982 is announced as the destruction oriented period, where the goal was to find errors. Gelperin, D.; B. Hetzel (1988). "The Growth o f Software Testing". CACM 31 (6). ISSN 0001-0782. ^ 1983 1987 is classified as the evaluation oriented period: intention here is that during the software lifecycle a product evaluation is provided and measuri ng quality. Gelperin, D.; B. Hetzel (1988). "The Growth of Software Testing". CA CM 31 (6). ISSN 0001-0782. ^ From 1988 on it was seen as prevention oriented period where tests were to demonstrate that software satisfies its specification, to detect faults and to prevent faults. Gelperin, D.; B. Hetzel (1988). "The Growth of Software Testing"

. CACM 31 (6). ISSN 0001-0782. ^ a b c Kaner, Cem; Falk, Jack and Nguyen, Hung Quoc (1999). Testing Compute r Software, 2nd Ed.. New York, et al: John Wiley and Sons, Inc.. pp. 480 pages. ISBN 0-471-35846-0. ^ Kolawa, Adam; Huizinga, Dorota (2007). Automated Defect Prevention: Best P ractices in Software Management. Wiley-IEEE Computer Society Press. pp. 41 43. ISB N 0-470-04212-5. ^ Kolawa, Adam; Huizinga, Dorota (2007). Automated Defect Prevention: Best P ractices in Software Management. Wiley-IEEE Computer Society Press. p. 426. ISBN 0-470-04212-5. ^ a b Section 1.1.2, Certified Tester Foundation Level Syllabus, Internation al Software Testing Qualifications Board ^ McConnell, Steve (2004). Code Complete (2nd ed.). Microsoft Press. p. 29. ISBN 0-7356-1967-0. ^ Principle 2, Section 1.3, Certified Tester Foundation Level Syllabus, Inte rnational Software Testing Qualifications Board ^ Tran, Eushiuan (1999). "Verification/Validation/Certification". In Koopman , P.. Topics in Dependable Embedded Systems. USA: Carnegie Mellon University. Re trieved 2008-01-13. ^ see D. Gelperin and W.C. Hetzel ^ Introduction, Code Coverage Analysis, Steve Cornett ^ Laycock, G. T. (1993) (PostScript). The Theory and Practice of Specificati on Based Software Testing. Dept of Computer Science, Sheffield University, UK. R etrieved 2008-02-13. ^ Bach, James (June 1999). "Risk and Requirements-Based Testing" (PDF). Comp uter 32 (6): 113 114. Retrieved 2008-08-19. ^ Savenkov, Roman (2008). How to Become a Software Tester. Roman Savenkov Co nsulting. p. 159. ISBN 978-0-615-23372-7. ^ Patton, Ron. Software Testing. ^ "www.crosschecknet.com". ^ "Visual testing of software - Helsinki University of Technology" (PDF). Re trieved 2012-01-13. ^ "Article on visual testing in Test Magazine". Testmagazine.co.uk. Retrieve d 2012-01-13. ^ a b "SWEBOK Guide - Chapter 5". Computer.org. Retrieved 2012-01-13. ^ Binder, Robert V. (1999). Testing Object-Oriented Systems: Objects, Patter ns, and Tools. Addison-Wesley Professional. p. 45. ISBN 0-201-80938-9. ^ Beizer, Boris (1990). Software Testing Techniques (Second ed.). New York: Van Nostrand Reinhold. pp. 21,430. ISBN 0-442-20672-0. ^ IEEE (1990). IEEE Standard Computer Dictionary: A Compilation of IEEE Stan dard Computer Glossaries. New York: IEEE. ISBN 1-55937-079-3. ^ van Veenendaal, Erik. "Standard glossary of terms used in Software Testing ". Retrieved 17 June 2010. ^ "Globalization Step-by-Step: The World-Ready Approach to Testing. Microsof t Developer Network". Msdn.microsoft.com. Retrieved 2012-01-13. ^ EtestingHub-Online Free Software Testing Tutorial. "e)Testing Phase in Sof tware Testing:". Etestinghub.com. Retrieved 2012-01-13. ^ Myers, Glenford J. (1979). The Art of Software Testing. John Wiley and Son s. pp. 145 146. ISBN 0-471-04328-1. ^ Dustin, Elfriede (2002). Effective Software Testing. Addison Wesley. p. 3. ISBN 0-201-79429-2. ^ Marchenko, Artem (November 16, 2007). "XP Practice: Continuous Integration ". Retrieved 2009-11-16. ^ Gurses, Levent (February 19, 2007). "Agile 101: What is Continuous Integra tion?". Retrieved 2009-11-16. ^ Pan, Jiantao (Spring 1999). "Software Testing (18-849b Dependable Embedded Systems)". Topics in Dependable Embedded Systems. Electrical and Computer Engin eering Department, Carnegie Mellon University. ^ IEEE (1998). IEEE standard for software test documentation. New York: IEEE . ISBN 0-7381-1443-X.

^ Kaner, Cem (2001). "NSF grant proposal to "lay a foundation for significan t improvements in the quality of academic and commercial courses in software tes ting"" (PDF). ^ Kaner, Cem (2003). "Measuring the Effectiveness of Software Testers" (PDF) . ^ Black, Rex (December 2008). Advanced Software Testing- Vol. 2: Guide to th e ISTQB Advanced Certification as an Advanced Test Manager. Santa Barbara: Rocky Nook Publisher. ISBN 1-933952-36-9. ^ a b c d e "Quality Assurance Institute". Qaiglobalinstitute.com. Retrieved 2012-01-13. ^ a b "International Institute for Software Testing". Testinginstitute.com. Retrieved 2012-01-13. ^ K. J. Ross & Associates[dead link] ^ a b "ISTQB". ^ a b "ISTQB in the U.S.". ^ a b "EXIN: Examination Institute for Information Science". Exin-exams.com. Retrieved 2012-01-13. ^ a b "American Society for Quality". Asq.org. Retrieved 2012-01-13. ^ "context-driven-testing.com". context-driven-testing.com. Retrieved 2012-0 1-13. ^ "Article on taking agile traits without the agile method". Technicat.com. Retrieved 2012-01-13. ^ We re all part of the story by David Strom, July 1, 2009 ^ IEEE article about differences in adoption of agile trends between experie nced managers vs. young students of the Project Management Institute. See also A gile adoption study from 2007 ^ Willison, John S. (April 2004). "Agile Software Development for an Agile F orce". CrossTalk (STSC) (April 2004). Archived from the original on unknown. ^ "IEEE article on Exploratory vs. Non Exploratory testing". Ieeexplore.ieee .org. Retrieved 2012-01-13. ^ An example is Mark Fewster, Dorothy Graham: Software Test Automation. Addi son Wesley, 1999, ISBN 0-201-33140-3. ^ "Article referring to other links questioning the necessity of unit testin g". Java.dzone.com. Retrieved 2012-01-13. ^ Microsoft Development Network Discussion on exactly this topic[dead link] External links At Wikiversity you can learn more and teach others about Software testin g at: The Department of Software testing Software testing tools and products at the Open Directory Project "Software that makes Software better" Economist.com Automated software testing metrics including manual testing metrics [show] v t e Major fields of computer science [show] v t e Software engineering View page ratings

Rate this page What's this? Trustworthy Objective Complete Well-written I am highly knowledgeable about this topic (optional) Categories: Software testing Computer occupations Log in Create account Article Talk Read Edit View history Main page Contents Featured content Current events Random article Donate to Wikipedia Interaction Help About Wikipedia Community portal Recent changes Contact Wikipedia Toolbox Print/export Languages ??????? ??????????? (???????????)? ????????? Catal Cesky Deutsch Eesti Espaol ????? Franais ??? ?????? Bahasa Indonesia Italiano ????? ????? ??????? Magyar Bahasa Melayu

Nederlands ??? ?Norsk (bokml)? Polski Portugus Romna ??????? Shqip Simple English Slovencina Suomi Svenska ????? ??? ?????????? Ti?ng Vi?t ?? This page was last modified on 8 June 2012 at 15:08. Text is available under the Creative Commons Attribution-ShareAlike License; additional terms may apply. See Terms of use for details. Wikipedia is a registered trademark of the Wikimedia Foundation, Inc., a nonprofit organization. Contact us Privacy policy About Wikipedia Disclaimers Mobile view Wikimedia Foundation Powered by MediaWiki

You might also like

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (894)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- DML StatementsDocument12 pagesDML Statementsaniket somNo ratings yet

- AWS Sso UgDocument146 pagesAWS Sso UgTanayaNo ratings yet

- Business Analyst Resume SampleDocument1 pageBusiness Analyst Resume Sampleraj28_999No ratings yet

- 70-533 - Implementing Azure Infrastructure SolutionsDocument101 pages70-533 - Implementing Azure Infrastructure SolutionsPaul Fuyane100% (1)

- Big Data and Hadoop For Developers - SyllabusDocument6 pagesBig Data and Hadoop For Developers - Syllabusvkbm42No ratings yet

- Uma Musume Translate Installation For BeginnersDocument28 pagesUma Musume Translate Installation For BeginnersChainarong PomsarayNo ratings yet

- DSA 2nd YrsDocument62 pagesDSA 2nd YrsSkull CoreNo ratings yet

- K21 QuestionsDocument12 pagesK21 QuestionsSamNo ratings yet

- Software ReqDocument39 pagesSoftware ReqReetee RambojunNo ratings yet

- SE 6329-OOADocumentDocument41 pagesSE 6329-OOADocumenthamad cabdallaNo ratings yet

- An A-Z Index of The Command Line For LinuxDocument7 pagesAn A-Z Index of The Command Line For LinuxAli BabayunusNo ratings yet

- Advantages of Digital SystemsDocument2 pagesAdvantages of Digital SystemsB2 064 Pavan VasekarNo ratings yet

- Internet: Packet Switching and ProtocolsDocument16 pagesInternet: Packet Switching and ProtocolsAKANKSHA RAJNo ratings yet

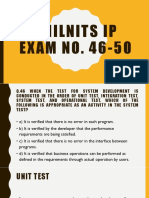

- Philnits Ip ExamDocument19 pagesPhilnits Ip Examjimvalencia0% (1)

- Microsoft Azure: Infrastructure As A Service (Iaas)Document16 pagesMicrosoft Azure: Infrastructure As A Service (Iaas)wilberNo ratings yet

- CH 14Document46 pagesCH 14ZareenNo ratings yet

- Difference Between High Level Languages and Low Level LanguagesDocument5 pagesDifference Between High Level Languages and Low Level LanguagesFaisal NawazNo ratings yet

- CEH v10 Module 20 - Cryptography PDFDocument39 pagesCEH v10 Module 20 - Cryptography PDFdonald supa quispeNo ratings yet

- LogDocument1,081 pagesLogTINENGA WONDA TINENGA WONDANo ratings yet

- Tugas Forum 13 - Achmad Aldo Alfalus - 41817120056Document5 pagesTugas Forum 13 - Achmad Aldo Alfalus - 41817120056ROY LISTON PANAHATAN PANJAITANNo ratings yet

- Role of Internal AuditorsDocument22 pagesRole of Internal AuditorsanggaturanaNo ratings yet

- CH 06Document15 pagesCH 06jaalobiano100% (1)

- WpDataTables DocumentationDocument164 pagesWpDataTables DocumentationBogdanSpătaruNo ratings yet

- Data Analyst Role at SuperControlDocument3 pagesData Analyst Role at SuperControlWear my GlovesNo ratings yet

- Master Civil Engineer 10+ years BIM experience Data Center projectsDocument2 pagesMaster Civil Engineer 10+ years BIM experience Data Center projectsGabriel Alvarez CambroneroNo ratings yet

- SatNet NetManager White Paper v4Document29 pagesSatNet NetManager White Paper v4gamer08No ratings yet

- Logcat CSC Update LogDocument1,524 pagesLogcat CSC Update LogIvan LopezNo ratings yet

- IT1705 SplunkITServiceIntelligence Final 1538831076142001yheyDocument17 pagesIT1705 SplunkITServiceIntelligence Final 1538831076142001yheyDodo winyNo ratings yet

- Normal FormsDocument14 pagesNormal FormsDr. Durga Prasad DogiparthiNo ratings yet

- Privacy PolicyDocument2 pagesPrivacy Policyreinaldo CastañedaNo ratings yet