Particle Swarm optimisation

2002-04-24

Maurice.Clerc@WriteMe.com

The inventors (1)

Russell Eberhart

eberhart@engr.iupui.edu

The inventors (2)

James Kennedy

Kennedy_Jim@bls.gov

Cooperation example

The basic idea

Each particle is searching for the optimum.

Each particle is moving (it can't search otherwise!), and hence has a velocity.

Each particle remembers the position where it had its best result so far (its personal best).

But this would not be much good on its own; particles need help in figuring out where to search.

The basic idea II

The particles in the swarm co-operate. They exchange information about what they've discovered in the places they have visited.

The co-operation need only be very simple. In basic PSO (which is pretty good!) it is like this:

A particle has a neighbourhood associated with it.

A particle knows the fitnesses of those in its neighbourhood, and uses the position of the one with the best fitness.

This position is simply used to adjust the particle's velocity.

Initialization: positions and velocities

What a particle does

In each timestep, a particle has to move to a new position. It does this by adjusting its velocity.

The adjustment is essentially this:

The current velocity PLUS

a weighted random portion in the direction of its personal best PLUS

a weighted random portion in the direction of the neighbourhood best.

Having worked out a new velocity, its position is simply its old position plus the new velocity.
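The move described above can be sketched for a single particle as follows. This is only an illustration: the function name `move_particle`, the list-based representation, and the default weights `c1 = c2 = 2.0` are assumptions made for the example, not something the slides prescribe.

```python
import random


def move_particle(position, velocity, personal_best, neighbourhood_best,
                  c1=2.0, c2=2.0, rng=random):
    """Return (new_position, new_velocity) for one particle after one timestep.

    c1 and c2 are hypothetical default weights chosen for this sketch.
    """
    new_position, new_velocity = [], []
    for x, v, p, g in zip(position, velocity, personal_best, neighbourhood_best):
        # current velocity
        # PLUS a weighted random portion towards the personal best
        # PLUS a weighted random portion towards the neighbourhood best
        v_next = v + c1 * rng.random() * (p - x) + c2 * rng.random() * (g - x)
        new_velocity.append(v_next)
        # new position = old position + new velocity
        new_position.append(x + v_next)
    return new_position, new_velocity
```

When a particle already sits on both its personal best and the neighbourhood best, the two random portions vanish and it simply keeps drifting with its current velocity.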

Neighbourhoods

geographical

social

Neighbourhoods

Global

The circular neighbourhood

[Figure: particles numbered 1 to 8 placed on a virtual circle; particle 1's 3-neighbourhood is marked.]
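A circular neighbourhood can be sketched with modular arithmetic on particle indices. The sketch below assumes 0-based indices, particles numbered consecutively around the circle, a neighbourhood that includes the particle itself, and a fitness that is minimised; the function names are hypothetical.

```python
def ring_neighbourhood(index, swarm_size, k=3):
    """Indices of the k particles centred on `index` on a virtual circle (k odd)."""
    half = k // 2
    # Wrap around the ends of the index range so the swarm forms a circle.
    return [(index + offset) % swarm_size for offset in range(-half, half + 1)]


def neighbourhood_best(index, fitness, k=3):
    """Index of the neighbour with the lowest fitness (minimisation assumed)."""
    neighbours = ring_neighbourhood(index, len(fitness), k)
    return min(neighbours, key=lambda j: fitness[j])
```

With eight particles, `ring_neighbourhood(0, 8)` gives `[7, 0, 1]`: the particle itself and its two neighbours on the circle.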

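Particles adjust their positions according to a "psychosocial compromise" between what an individual is comfortable with and what society reckons.

[Figure: a particle at its current position x ("Here I am!") with velocity v, its own best performance p_i ("My best perf."), and the best performance of its neighbours p_g ("The best perf. of my neighbours").]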

Pseudocode

http://www.swarmintelligence.org/tutorials.php

Equation (a)

v[] = c0 * v[]
      + c1 * rand() * (pbest[] - present[])
      + c2 * rand() * (gbest[] - present[])

(In the original method, c0 = 1, but many researchers now play with this parameter.)

Equation (b)

present[] = present[] + v[]

For each particle
    Initialize particle
End

Do
    For each particle
        Calculate fitness value
        If the fitness value is better than its personal best (pBest)
            Set the current value as the new pBest
    End

    Choose the particle with the best fitness value of all as gBest

    For each particle
        Calculate particle velocity according to equation (a)
        Update particle position according to equation (b)
    End
While maximum iterations or minimum error criteria are not attained
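The loop above could be turned into runnable Python roughly as follows. This is a minimal sketch under several assumptions not taken from the slides: minimisation, a global neighbourhood (gBest taken as the best personal best found so far), random initial velocities, and default coefficients `c0 = 0.729`, `c1 = c2 = 1.494`, which are commonly used values rather than the slides' own. The function name `pso` and all parameter names are hypothetical.

```python
import random


def pso(fitness, dim, bounds, n_particles=20, c0=0.729, c1=1.494, c2=1.494,
        max_iter=200, min_error=1e-8, seed=0):
    """Minimise `fitness` over `dim` dimensions; returns (best_position, best_value)."""
    rng = random.Random(seed)
    lo, hi = bounds
    span = hi - lo
    # Initialize each particle with a random position and velocity.
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[rng.uniform(-span, span) for _ in range(dim)] for _ in range(n_particles)]
    pbest = [x[:] for x in xs]
    pbest_val = [float("inf")] * n_particles
    gbest, gbest_val = xs[0][:], float("inf")

    for _ in range(max_iter):
        # Evaluate every particle and refresh its personal best.
        for i, x in enumerate(xs):
            val = fitness(x)
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = x[:], val
        # The best personal best of the whole swarm becomes gBest.
        best = min(range(n_particles), key=pbest_val.__getitem__)
        gbest, gbest_val = pbest[best][:], pbest_val[best]
        if gbest_val <= min_error:
            break
        # Equation (a), then equation (b), for each component of each particle.
        for x, v, p in zip(xs, vs, pbest):
            for d in range(dim):
                v[d] = (c0 * v[d]
                        + c1 * rng.random() * (p[d] - x[d])
                        + c2 * rng.random() * (gbest[d] - x[d]))
                x[d] += v[d]
    return gbest, gbest_val
```

For example, `pso(lambda x: sum(xi * xi for xi in x), dim=2, bounds=(-5.0, 5.0))` searches for the minimum of a simple sphere function.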

Particles' velocities on each dimension are clamped to a maximum velocity Vmax, a parameter specified by the user. If the sum of accelerations would cause the velocity on a dimension to exceed Vmax, the velocity on that dimension is limited to Vmax.
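Clamping can be sketched in one line per dimension. The function name `clamp_velocity` is hypothetical, and applying the limit symmetrically (to -Vmax as well as +Vmax) is an assumption of this sketch.

```python
def clamp_velocity(velocity, v_max):
    """Limit each component of `velocity` to the range [-v_max, v_max]."""
    return [max(-v_max, min(v_max, v)) for v in velocity]
```

Such a helper would be applied right after the velocity update and before the position update.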

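The basic algorithm: again

At each time step t, for each particle, for each component d, update the velocity

$$v_d(t+1) = c_0\, v_d(t) + \mathrm{rand}(0, c_1)\,\bigl(p_{i,d} - x_d(t)\bigr) + \mathrm{rand}(0, c_2)\,\bigl(p_{g,d} - x_d(t)\bigr)$$

then move

$$x_d(t+1) = x_d(t) + v_d(t+1)$$

Here p_i is the particle's personal best, p_g is the best of its neighbourhood, and the coefficients are written as in equation (a).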

Animated illustration

[Figure: animation with the global optimum marked.]

How to choose parameters

Parameters

Number of particles: 10 to 50 are reported as usually sufficient.

C1 (importance of personal best)

C2 (importance of neighbourhood best)

Usually C1 + C2 = 4. No good reason other than empiricism.

Vmax: too low, too slow; too high, too unstable.

Some functions ...

Rosenbrock

Griewank

Rastrigin
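For reference, the three benchmark functions can be written down in their commonly used textbook forms. The slides do not give the formulas, so the exact definitions below are an assumption; each has a minimum value of 0 (Rosenbrock at all ones, Griewank and Rastrigin at the origin).

```python
import math


def rosenbrock(x):
    """Sum of 100*(x[i+1] - x[i]^2)^2 + (1 - x[i])^2; minimum 0 at (1, ..., 1)."""
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (1.0 - x[i]) ** 2
               for i in range(len(x) - 1))


def griewank(x):
    """1 + sum(x_i^2)/4000 - prod(cos(x_i / sqrt(i))); minimum 0 at the origin."""
    total = sum(xi ** 2 for xi in x) / 4000.0
    product = math.prod(math.cos(xi / math.sqrt(i))
                        for i, xi in enumerate(x, start=1))
    return 1.0 + total - product


def rastrigin(x):
    """10*n + sum(x_i^2 - 10*cos(2*pi*x_i)); minimum 0 at the origin."""
    return 10.0 * len(x) + sum(xi ** 2 - 10.0 * math.cos(2.0 * math.pi * xi)
                               for xi in x)
```

Each function takes a list of floats of any dimension, so the same code serves for 30-dimensional test problems.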

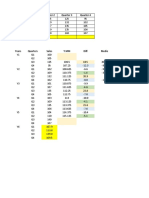

... and some results

| 30D function | PSO Type 1" | Evolutionary algo. (Angeline 98) |
| --- | --- | --- |
| Griewank [300] | 0.003944 | 0.4033 |
| Rastrigin [5] | 82.95618 | 46.4689 |
| Rosenbrock [10] | 50.193877 | 1610.359 |

Optimum=0, dimension=30

Best result after 40 000 evaluations

Some variants

Adaptive swarm size

There has been enough improvement although I'm the worst: I try to kill myself.

I'm the best, but there has not been enough improvement: I try to generate a new particle.

Adaptive coefficients

The better I am, the more I follow my own way.

The better my best neighbour is, the more I tend to go towards him.

Ev

rand(0b)(p-x)

Is this just a variation on Evolutionary Algorithms?

An applet

Next: Cellular Automata
